Technologies

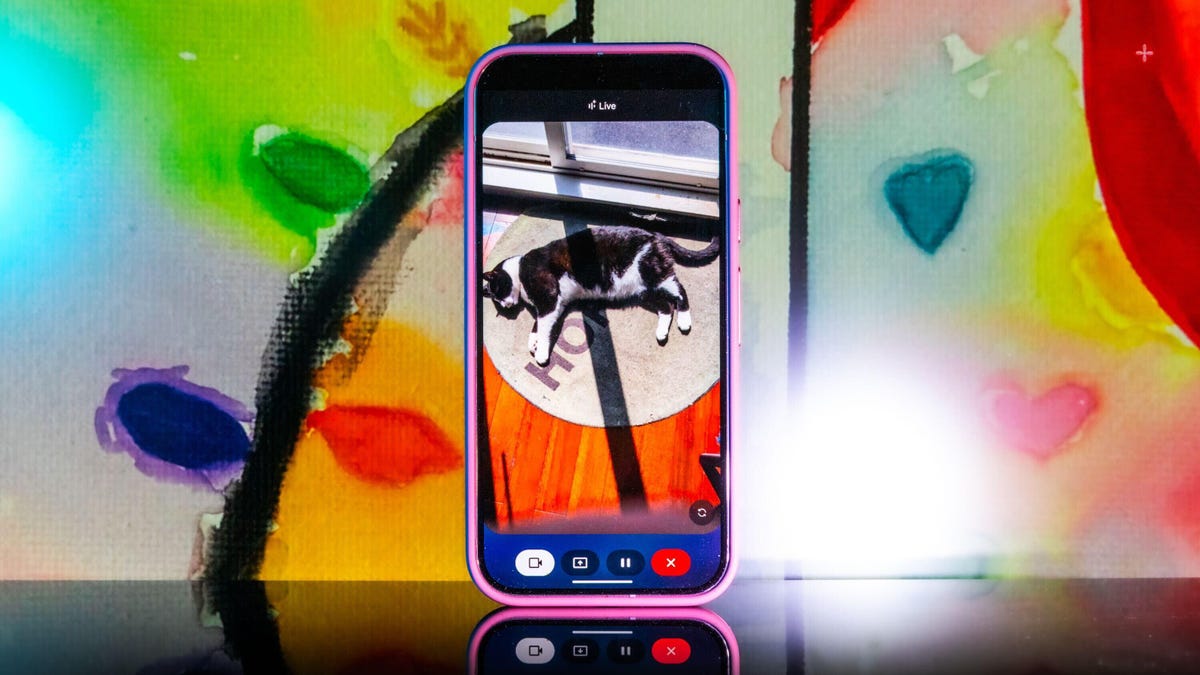

The Future’s Here: Testing Out Gemini’s Live Camera Mode

Gemini Live’s new camera mode feels like the future when it works. I put it through a stress test with my offbeat collectibles.

“I just spotted your scissors on the table, right next to the green package of pistachios. Do you see them?”

Gemini Live’s chatty new camera feature was right. My scissors were exactly where it said they were, and all I did was pass my camera in front of them at some point during a 15-minute live session of me giving the AI chatbot a tour of my apartment. Google’s been rolling out the new camera mode to all Android phones using the Gemini app for free after a two-week exclusive to Pixel 9 (including the new Pixel 9A) and Galaxy S25 smartphones. So, what exactly is this camera mode and how does it work?

When you start a live session with Gemini, you now have the option to enable a live camera view, where you can talk to the chatbot and ask it about anything the camera sees. Not only can it identify objects, but you can also ask questions about them, and it works pretty well for the most part. In addition, you can share your screen with Gemini so it can identify things you surface on your phone’s display.

When the new camera feature popped up on my phone, I didn’t hesitate to try it out. In one of my longer tests, I turned it on and started walking through my apartment, asking Gemini what it saw. It identified some fruit, ChapStick and a few other everyday items with no problem. I was wowed when it found my scissors.

That’s because I hadn’t mentioned the scissors at all. Gemini had silently identified them somewhere along the way and then recalled the location with precision. It felt so much like the future, I had to do further testing.

My experiment with Gemini Live’s camera feature followed the lead of the demo Google gave last summer when it first showed off these live video AI capabilities. Gemini reminded the person giving the demo where they’d left their glasses, and it seemed too good to be true. But as I discovered, it was very true indeed.

Gemini Live will recognize a whole lot more than household odds and ends. Google says it’ll help you navigate a crowded train station or figure out the filling of a pastry. It can give you deeper information about artwork, like where an object originated and whether it was a limited edition piece.

It’s more than just a souped-up Google Lens. You talk with it, and it talks to you. I didn’t need to speak to Gemini in any particular way — it was as casual as any conversation. Way better than talking with the old Google Assistant that the company is quickly phasing out.

Google also released a new YouTube video for the April 2025 Pixel Drop showcasing the feature, and there’s now a dedicated page on the Google Store for it.

To get started, you can go live with Gemini, enable the camera and start talking. That’s it.

Gemini Live follows on from Google’s Project Astra, first revealed last year as possibly the company’s biggest “we’re in the future” feature, an experimental next step for generative AI capabilities, beyond your simply typing or even speaking prompts into a chatbot like ChatGPT, Claude or Gemini. It comes as AI companies continue to dramatically increase the skills of AI tools, from video generation to raw processing power. Similar to Gemini Live, there’s Apple’s Visual Intelligence, which the iPhone maker released in a beta form late last year.

My big takeaway is that a feature like Gemini Live has the potential to change how we interact with the world around us, melding our digital and physical worlds together just by holding a camera in front of almost anything.

I put Gemini Live to a real test

The first time I tried it, Gemini was shockingly accurate when I placed a very specific gaming collectible of a stuffed rabbit in my camera’s view. The second time, I showed it to a friend in an art gallery. It identified the tortoise on a cross (don’t ask me) and immediately identified and translated the kanji right next to the tortoise, giving both of us chills and leaving us more than a little creeped out. In a good way, I think.

I got to thinking about how I could stress-test the feature. I tried to screen-record it in action, but it consistently fell apart at that task. And what if I went off the beaten path with it? I’m a huge fan of the horror genre — movies, TV shows, video games — and have countless collectibles, trinkets and what have you. How well would it do with more obscure stuff — like my horror-themed collectibles?

First, let me say that Gemini can be both absolutely incredible and ridiculously frustrating in the same round of questions. I had roughly 11 objects that I was asking Gemini to identify, and it would sometimes get worse the longer the live session ran, so I had to limit sessions to only one or two objects. My guess is that Gemini attempted to use contextual information from previously identified objects to guess new objects put in front of it, which sort of makes sense, but ultimately, neither I nor it benefited from this.

Sometimes, Gemini was just on point, easily landing the correct answers with no fuss or confusion, but this tended to happen with more recent or popular objects. For example, I was surprised when it immediately guessed one of my test objects was not only from Destiny 2, but was a limited edition from a seasonal event from last year.

At other times, Gemini would be way off the mark, and I would need to give it more hints to get into the ballpark of the right answer. And sometimes, it seemed as though Gemini was taking context from my previous live sessions to come up with answers, identifying multiple objects as coming from Silent Hill when they were not. I have a display case dedicated to the game series, so I could see why it would want to dip into that territory quickly.

Gemini can get full-on bugged out at times. On more than one occasion, Gemini misidentified one of the items as a made-up character from the unreleased Silent Hill: f game, clearly merging pieces of different titles into something that never was. The other consistent bug I experienced was when Gemini would produce an incorrect answer, and I would correct it and hint closer at the answer — or straight up give it the answer, only to have it repeat the incorrect answer as if it was a new guess. When that happened, I would close the session and start a new one, which wasn’t always helpful.

One trick I found was that some conversations did better than others. If I scrolled through my Gemini conversation list, tapped an old chat that had gotten a specific item correct, and then went live again from that chat, it would be able to identify the items without issue. While that’s not necessarily surprising, it was interesting to see that some conversations worked better than others, even if you used the same language.

Google didn’t respond to my requests for more information on how Gemini Live works.

I wanted Gemini to successfully answer my sometimes highly specific questions, so I provided plenty of hints to get there. The nudges were often helpful, but not always. Below are a series of objects I tried to get Gemini to identify and provide information about.

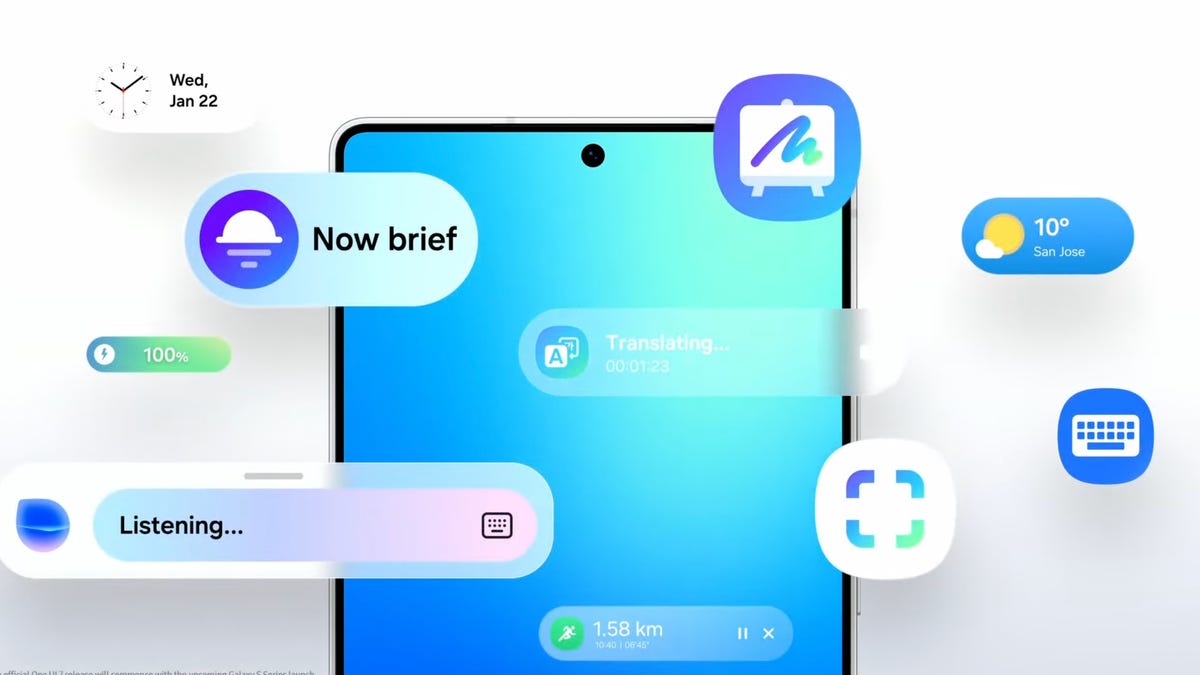

How to Turn Off AI Features on Your Samsung Galaxy Smartphone

Too much AI on your Galaxy phone? Here’s how to disable it.

Samsung is known for throwing virtually every feature imaginable into its smartphones, whether or not you intend to use them. There are countless additional customizations and apps in Galaxy phones that make it a bit overwhelming to figure out what you actually need.

Every new generation of Galaxy phone seems to gain more and more AI features that you may not find useful. Luckily, you can remove nearly anything you don’t want on your Samsung phone.

Whether you just want to turn off some of the AI features on your Galaxy smartphone or you want to disable them completely, we’ll show you how to do that below.

How to turn off Galaxy AI services

Some of the features that smartphones can do using AI are undeniably cool. If you like some of them but don’t want AI everywhere, Samsung will allow you to pick and choose what to take advantage of.

To turn off AI for individual services:

- Go to Settings.

- Tap Galaxy AI.

- Select the service to disable.

- Tap the toggle to Off.

You’ll see a list of the services that form Galaxy AI. You can choose the apps for which you want to turn AI on or off from here.

If you’re more privacy-minded but still want to take advantage of Galaxy AI features, there’s a toggle at the bottom of this screen that will limit AI from sending anything to the cloud and keep data processing on your device only. Going for this option will disable some AI features altogether that require the internet to process, and the results may be less useful.

Goodbye, Bixby

Bixby, the Samsung digital assistant that you may not even realize is on your phone, is something that you’ll want to disable if you’re trying to tame the AI on your Galaxy device.

Luckily, Samsung has a way for you to replace Bixby with another voice assistant of your choosing. For all intents and purposes, Google Assistant will be your best bet, even if it will eventually be replaced by Google’s advanced AI assistant somewhere down the road.

It’s likely already installed on your phone, but you may need to redownload the Google app if you uninstalled it or never had it.

- Go to Settings.

- Tap Apps.

- Tap Default apps.

- Tap Digital assistant app.

- Tap Google.

That’s it. Now, when you trigger your voice assistant, you’ll be greeted by Google Assistant instead of the AI-powered Bixby.

How to Watch the February 2026 Pokemon Presents Livestream

Celebrate Pokemon Day with the latest and greatest announcements from the world of pocket monsters.

We are just a day away from the annual celebration of all things Pokemon. The Pokemon Day event starts tomorrow morning and should be chock-full of free goodies and exciting game reveals for creature-collecting fans across the world. Considering the juggernaut franchise is celebrating its 30th anniversary, it’s safe to assume there will be juicy information included in the next Pokemon Presents stream.

There’s plenty of excitement leading up to the main event. Pokemon TCG Pocket just released the Paldean Wonders card set expansion, and The Pokemon Company revealed that FireRed and LeafGreen are getting Switch ports with Pokemon Home compatibility. Now the stage is set for The Pokemon Company to reveal new mainline Pokemon games for the Switch and Switch 2.

Here’s when The Pokemon Company goes live with its first Pokemon Presents stream of the year, which should set expectations for what’s coming from the world of pocket monsters in 2026.

What time is the Pokemon Presents stream on Pokemon Day?

The first Pokemon Presents livestream of the year takes place tomorrow, Friday, Feb. 27. The show begins bright and early for American audiences, so you’ll have to avoid sleeping in if you want to keep up with the latest announcements.

Here’s when Friday’s Pokemon Day livestream begins in your time zone:

ET: 9 a.m.

CT: 8 a.m.

MT: 7 a.m.

PT: 6 a.m.
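
If your time zone isn't listed, the start time can be converted with a few lines of Python. This is a minimal sketch that assumes only the date and 9 a.m. ET start time given above; the zone table and the `local_start_times` helper are illustrative names, and any IANA zone name can be added to the table.

```python
from datetime import datetime
from zoneinfo import ZoneInfo

# Start time taken from the schedule above: Friday, Feb. 27, 2026, 9 a.m. ET.
START_ET = datetime(2026, 2, 27, 9, 0, tzinfo=ZoneInfo("America/New_York"))

# Illustrative zone choices (an assumption); add any IANA zone name you need.
ZONES = {
    "ET": "America/New_York",
    "CT": "America/Chicago",
    "MT": "America/Denver",
    "PT": "America/Los_Angeles",
    "UTC": "UTC",
}


def local_start_times(start=START_ET, zones=ZONES):
    """Return the stream start as an 'HH:MM' string for each labeled zone."""
    return {
        label: start.astimezone(ZoneInfo(name)).strftime("%H:%M")
        for label, name in zones.items()
    }


if __name__ == "__main__":
    for label, hhmm in local_start_times().items():
        print(f"{label}: {hhmm}")
```

The conversion relies on the system's time zone database, so it accounts for daylight saving rules automatically rather than using fixed hour offsets.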

How to watch the February Pokemon Presents livestream

The Pokemon Company is responsible for the Pokemon Presents livestreams, which means you can view the announcement through any of its social media channels.

While I recommend watching the stream on The Pokemon Company’s YouTube or Twitch channels, you can also keep up with the announcements on TikTok. Regular updates will also be posted to the company’s Instagram account throughout the event.

What will be announced on Pokemon Day 2026?

It’s safe to assume that the February Pokemon Presents livestream will feature some long-anticipated reveals, since 2026 is a big year for the Pokemon brand.

The livestream marks the 30th anniversary of Pokemon Red and Pokemon Green (the original Pokemon games for the Game Boy) releasing in Japan. We know the stream will be roughly 25 minutes long, making it one of the longest Pokemon Presents showcases ever.

I suspect the livestream will serve as a victory lap celebrating Pokemon’s cultural impact before pivoting to the future and showing fans what’s coming next.

And what treats are in store for tomorrow’s event? I expect to see updates and freebies for Pokemon mobile games first, since these are some of the big moneymakers. If this Pokemon Day presentation mirrors the one from last year, we’ll be treated to some goodies in Pokemon Go, Pokemon Masters EX, Pokemon Cafe ReMix and Pokemon TCG Pocket. We might also see an announcement for a special Pokemon Scarlet and Violet raid event and Pokemon Legends: Z-A Mega stone distributions.

After celebrating the currently released games, it’s likely that the presentation will pivot to what’s coming next. We’ll almost certainly get a reminder that Nintendo Switch ports of Pokemon FireRed and Pokemon LeafGreen are available starting on Pokemon Day. I expect the biggest news will be a concrete release date for Pokemon’s big new competitive game, Pokemon Champions, which is slated to come out in time for the Pokemon World Championships 2026.

If we’re really lucky, we might even hear about the 10th generation of mainline Pokemon games. While Game Freak has unmoored itself from a consistent release schedule, we’re certainly due to see the rumored Pokemon Wind and Wave. While the infamous Teraleak hints toward what the development studio might show off next, it’s high time we get a glimpse of what the next big Pokemon games are really all about.

Only Hours Remain to Grab the Skullcandy Push 720 Open Earbuds for 40% Off

That drops these earbuds to under $100, a hard-to-beat bargain.

Until the end of the day, you can pick up a pair of the Skullcandy Push 720 Open earbuds for a nice $60 discount. That’s just $10 more than the previous record low, and this deal is available at both Best Buy and Amazon. Best Buy has labeled this deal as ending tonight and we expect Amazon to follow suit. Grab yours now before the deal expires.

The Skullcandy Push 720 Open earbuds are designed to keep you connected to your audio and the world at the same time. The open-ear clip-on design delivers directional sound. But since they’re open, you’ll still be able to hear the outside world, making them great for commuting, workouts and more. The buds are lightweight and comfortable in your ear, as they are built for all-day wear. They have an IP67 rating for sweat- and water-resistance, so you can confidently take them on outdoor runs, gym sessions and through rainy weather.

The buds use precision directional speakers so your audio stays personal without disturbing people nearby. The over-the-ear fit is one-size-fits-all, and it’s made to stay secure through any kind of activity. They have a decent 30-hour battery life, and with rapid charging you’ll be back up and running in no time. The carrying pouch also has a built-in charger for on-the-go convenience.

Why this deal matters

These open-ear earbuds are great for anyone who’s active. Whether you’re running at the gym or playing sports, these headphones will comfortably clip onto your ears. Previously, we saw these buds come down to $80. Since the current price is only $10 more, it’s not worth waiting to see if it will drop back to $80. Best Buy says there are only hours left for this deal. We think the Best Buy and Amazon deals will expire together, so act fast.
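
For anyone who wants to check the math, here is a rough sketch of how the quoted figures fit together. It uses only the numbers mentioned in this article (a $60 discount, 40% off and an $80 previous low). The list price is not stated anywhere above; it is inferred from the discount and the percentage, and `deal_summary` is a hypothetical helper name.

```python
def deal_summary(discount_usd, discount_pct, previous_low_usd):
    """Derive list price, sale price and gap to the previous low from quoted figures."""
    # Implied list price (an inference, not a quoted figure):
    # the dollar discount equals `discount_pct` percent of the list price.
    list_price = discount_usd * 100 / discount_pct
    sale_price = list_price - discount_usd
    return {
        "list_price": list_price,
        "sale_price": sale_price,
        "above_previous_low": sale_price - previous_low_usd,
    }


if __name__ == "__main__":
    summary = deal_summary(discount_usd=60, discount_pct=40, previous_low_usd=80)
    for name, value in summary.items():
        print(f"{name}: ${value:.2f}")
```

Under those assumptions the figures are consistent with one another: the implied sale price lands under $100 and $10 above the earlier low.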
