Technologies

Apple Vision Pro Hands-On: Far Better Than I Was Ready For

I experienced incredible fidelity, surprising video quality and a really smooth interface. Apple’s first mixed-reality headset nails those, but lots of questions remain.

I was in a movie theater last December watching Avatar: The Way of Water in 3D, and I said to myself: “Wow, this is an immersive film I’d love to watch in next-gen VR.” That’s exactly what I experienced in Apple’s Vision Pro headset, and yeah, it’s amazing.

On Monday, I tried out the Vision Pro in a series of carefully picked demos during WWDC at Apple’s Cupertino, California, headquarters. I’ve been using cutting-edge VR devices for years, and I found all sorts of augmented reality memories bubbling up in my brain. Apple’s compact (but still not small) headset reminds me of an Apple-designed Meta Quest Pro. The back strap was comfy yet stretchy, with a dial to adjust the rear fit and a top strap for stability. The headset’s sleek design, and even its glowing front faceplate, also gave me an instant Ready Player One vibe.

I couldn’t wear my glasses during the demo, though, and neither will you. Apple’s headset does not support glasses, instead relying on Zeiss custom inserts to correct wearers’ vision. Apple did manage, through a setup process, to easily find lenses that fit my vision well enough so that everything seemed crystal clear, which is not an easy task. Also, we adjusted the fit and tuned spatial audio for my head using an iPhone, a system that will be finessed when the headset is released in 2024.

From there, I did my demos seated, mostly, and found myself surprised from the start. The passthrough video camera quality of this headset is good: really, really good. Not as good as my own vision, but good enough that I could see the room well, see people in it with me, see my watch notifications easily on my wrist. The only headset that’s done this previously was the extremely impressive but PC-connected Varjo XR-3, and Apple’s display and cameras feel even better.

Apple’s floating grid of apps appears when I press the top digital crown, which autocenters the home screen to wherever I’m looking. I set up eye tracking, which worked like on many other VR headsets I’ve used: I looked at glowing dots as musical notes played, and got a chime when it all worked.

A list of apps as they would appear inside of the Apple Vision Pro headset.

From there, the interface was surprisingly fluid. Looking at icons or interface options slightly enlarges them, or changes how bold they appear. Tapping with my fingers while looking at something opens an app.

I’ve used tons of hand-tracking technology on headsets like the HoloLens 2 and the Meta Quest 2 and Pro, and usually there’s a lot of hand motion required. Here, I could be really lazy. I pinched to open icons even while my hand was resting in my lap, and it worked.

Scrolling involves pinching and pulling with my fingers; again, pretty easy to do. I resized windows by moving my hand to throw a window across the room or pin it closer to me. I opened multiple apps at once, including Safari, Messages and Photos. It was easy enough to scroll around, although sometimes my eye tracking needed a bit of extra concentration to pull off.

Apple’s headset uses eye tracking constantly in its interface, something Meta’s Quest Pro and even the PlayStation VR 2 don’t do. That might be part of the reason for the external battery pack. The emphasis on eye tracking as a major part of the interface felt transformative, in a way I expected might be the case for VR and AR years ago. What I don’t know is how it will feel in longer sessions.

I don’t know how the Vision Pro will work with keyboards and trackpads, since I didn’t get to demo the headset that way. It works with Apple’s Magic Keyboard and Magic Trackpad, and Macs, but not with iPhone and iPad or Watch touchscreens, at least not now.

Dialing in reality

I scrolled through some photos in Apple’s preset photo album, plus a few 3D photos and video clips shot with the Vision Pro’s 3D camera. All the images looked really crisp, and a panoramic photo that spread around me looked almost like it was a window on a landscape that extended just beyond the room I was in.

Apple has volumetric 3D landscapes on the Vision Pro that are immersive backgrounds like 3D wallpaper, but looking at one really shows off how nice that Micro OLED display looks. A lake looked like it was rolling up to a rocky shore that ended right where the real coffee table was in front of me.

Raising my hands to my face, I saw how the headset separates my hands from VR, a trick that’s already in Apple’s ARKit. It’s a little rough around the edges but good enough. Similarly, there’s a wild new trick where anyone else in the room can ghost into view if you look at them, a fuzzy halo with their real passthrough video image slowly materializing. It’s meant to help create meaningful contact with people while wearing the headset. I wondered how you could turn that off or tune it to be less present, but it’s a very new idea in mixed reality.

Apple’s digital crown, a small dial borrowed from the Apple Watch, handles reality blend. I could turn the dial to slowly extend the 3D panorama until it surrounded me everywhere, or dial it back so it just emerged a little bit like a 3D window.

Mixed reality in Apple’s headset looks so casually impressive that I almost didn’t appreciate how great it was. Again, I’ve seen mixed reality in VR headsets before (Varjo XR-3, Quest Pro), and I’ve understood its capabilities. Apple’s execution of mixed reality felt much more immersive, rich and effortless on most fronts, with a field of view that felt expansive. I can’t wait to see more experiences in it.

Cinematic fidelity that wowed me

The cinema demo was what really shocked me, though. I played a 3D clip of Avatar: The Way of Water in-headset, on a screen in various viewing modes including a cinema. Apple’s mixed-reality passthrough can also dim the rest of the world down a bit, in a way similar to how the Magic Leap 2 does with its AR. But the scenes of Way of Water sent little chills through me. It was vivid. This felt like a movie experience. I don’t feel that way in other VR headsets.

Avatar: The Way of Water looked great in the Vision Pro.

Apple also demonstrated its Immersive Video format that’s coming as an extension to Apple TV Plus. It’s a 180-degree video format, similar to what I’ve seen before in concept, but with really strong resolution and video quality. A splash demo reel of Alicia Keys singing, Apple Sports events, documentary footage and more reeled off in front of me, a teaser of what’s to come. One-eighty-degree video never appears quite as crisp to me as big-screen film content, but the sports clips I saw made me wonder how good virtual Jets games could be in the future. Things have come a long way.

Would I pay $3,499 for a head-worn cinema? No, but it’s clearly one of this device’s greatest unique strengths. The resolution and brightness of the display were surprising.

Convincing avatars (I mean, Personas)

Apple’s Personas are 3D-scanned avatars generated by using the Vision Pro to scan your face, making a version of yourself that shows up in FaceTime chats if you want, or also on the outside of the Vision Pro’s curved OLED display to show whether you’re “present” or in an app. I didn’t see how that outer display worked, but I had a FaceTime with someone in their Persona form, and it was good. Again, it looked surprisingly good.

I’ve chatted with Meta’s ultra-realistic Codec Avatars, which aim for realistic representations of people in VR. Those are stunning, and I’ve also seen Meta’s phone-scanned step-down version in an early form last year, where a talking head spoke to me in VR. Apple’s Persona looked better than Meta’s phone-scanned avatar, although a bit fuzzy around the edges, like a dream. The woman whose Persona was scanned appeared in her own window, not in a full-screen form.

And I wondered how expressive the emotions are with the Vision Pro’s scanning cameras. The Pro has an ability to scan jaw movement similar to the Quest Pro, and the Persona I chatted with was friendly and smiling. How would it look for someone I know, like my mom? Here, it was good enough that I forgot it was a scan.

We demoed a bit of Apple’s Freeform app, where a collaboration window opened up while my Persona friend chatted in another window. 3D objects popped up in the Freeform app, a full home scan. It looked realistic enough.

Dinosaurs in my world

The final demo was an app experience called Encounter Dinosaurs, which reminded me of early VR app demos I had years ago: An experience emphasizing just the immersive “wow” factor of dinosaurs appearing in a 3D window that seemed to open up in the back wall of my demo room. Creatures that looked like carnotauruses slowly walked through the window and into my space.

All my demos were seated except for this one, where I stood up and walked around a bit. This sounds like it wouldn’t be an impressive demo, but again, the quality of the visuals and how they looked in relation to the room’s passthrough video capture was what made it feel so great. As the dinosaur snapped at my hand, it felt pretty real. And so did a butterfly that danced through the room and tried to land on my extended finger.

I smiled. But even more so, I was impressed when I took off the headset. My own everyday vision wasn’t that much sharper than what Apple’s passthrough cameras provided. The gap between the two was closer than I would have expected, and it’s what makes Apple’s take on mixed reality in VR work so well.

Then there’s the battery pack. There’s a corded battery that’s needed to power the headset, instead of a built-in battery like most others have. That meant I had to make sure to grab the battery pack as I started to move around, which is probably a reason why so many of Apple’s demos were seated.

What about fitness and everything else?

Apple didn’t emphasize fitness much at all, a surprise to me. VR is already a great platform for fitness, although no one’s finessed headset design for fitness comfort. Maybe having that battery pack right now will limit movement in active games and experiences. Maybe Apple will announce more plans here later. The only taste I got of health and wellness was a one-minute micro meditation, which was similar to the one on the Apple Watch. It was pretty, and again a great showcase of the display quality, but I want more.

2024 is still a while away, and Apple’s headset is priced way out of range for most people. And I have no idea how functional this current headset would feel if I were doing everyday work. But Apple did show off a display, and an interface, that are far better than I was ready for. If Apple can build on that, and the Vision Pro finds ways of expanding its mixed-reality capabilities, then who knows what else is possible?

This was just my fast-take reaction to a quick set of demos on one day in Cupertino. There are a lot more questions to come, but this first set of demos resonated with me. Apple showed what it can do, and we’re not even at the headset’s launch yet.

Samsung’s Galaxy S25 Edge Is Down to Just $730 Today and It’s a Low-Profile Powerhouse

The Galaxy S25 Edge is down from $1,220 — a 40% savings on Samsung’s sleekest, slimmest phone yet.

Samsung’s Galaxy S25 Edge is built for people who want it all — a powerful camera, a sleek design and AI features that actually make life easier. From finding your favorite photo with a voice command to capturing stunning night video, this phone blends performance and personality in a titanium frame that’s as tough as it is beautiful.

Amazon has dropped the price of the Galaxy S25 Edge to just $730 — a 40% discount off its $1,220 list price. This is an excellent deal, but it could end at any time, so we suggest making your order sooner rather than later.

The Galaxy S25 Edge is fast. It has a 6.7-inch QHD Plus ProScaler display with a refresh rate of up to 120Hz, powered by a Snapdragon processor and paired with 12GB of RAM. It has 512GB of storage, which gives you plenty of room for high-res photos and 4K video, especially with its 200-megapixel rear camera and AI-enhanced selfie system.

Samsung’s Night Video mode helps you capture crisp footage in low light, while AI tools clean up background noise and even help you find specific photos by description. The virtual assistant can handle multistep tasks like searching for a restaurant and texting a friend — all in one ask.

The titanium body is ultra-slim yet durable, with Corning Gorilla Glass Ceramic 2 for added toughness. And with Android 15 and One UI 7, you’ll get the latest software features and customization options.

For more Android savings, check out our best Galaxy S25 deals and top phone discounts.

Why this deal matters

This is one of the best prices we’ve seen on Samsung’s Galaxy S25 Edge, and it’s packed with premium features like a 200MP camera, AI-powered search and editing and a titanium build. If you’ve been waiting for a flagship phone that’s smart, stylish and seriously discounted, this is one of the most tempting Android deals this season.

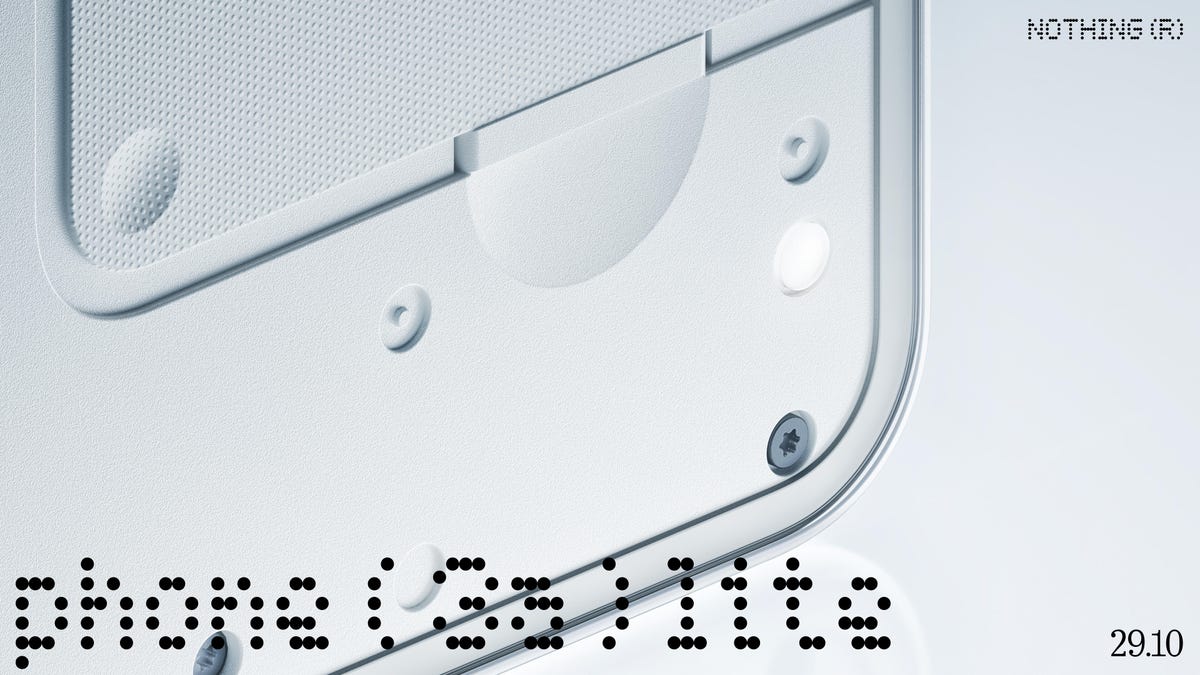

Nothing’s Signature Transparent Design Is Coming to a New Budget Phone This Week

Do you love the design of Nothing’s phones, but dislike the price? The Nothing 3A Lite might be the perfect device for you.

British tech company Nothing is best known for its retro-inspired transparent technology design, but it’s never been the most affordable option on the market. That looks set to change this week, with the company set to unveil the Nothing 3A Lite on Wednesday.

Nothing says that this will be its first entry-level smartphone, and it will incorporate the transparent design elements seen across the company’s range of phones and headphones. We’ve been given our first glimpse of what looks like the back panel of the phone in a photo from Nothing, but we’ll have to wait until Wednesday at 1 p.m. GMT for the full reveal.

The addition of the 3A Lite to Nothing’s phone lineup follows on from the launch of the Nothing Phone 3 (the company’s “first, true flagship”) this summer, and the mid-range 3A and 3A Pro back in the spring. For the first time, the company will offer smartphones that range from budget to high-end in price, meaning that there should be something for everyone.

The Nothing Phone 3A Lite is an “interesting prospect,” said CNET Editor at Large Andrew Lanxon, who reviewed all three of the existing phones in the 3 series. “Nothing’s phones are already budget-focused, with the existing Phone 3A coming with a low to midrange price tag,” he said. “I’ll be keen to see just how much cheaper Nothing can make its phones, while still offering a pleasant everyday user experience.

“Crucially, they should still offer long software support periods to increase the shelf life, and thereby reduce the overall carbon footprint,” he added. “Value should not come at the expense of longevity.”

Nothing currently offers six years of Android support with the Nothing Phone 3, which falls short of the seven years Google offers with its latest Pixel phones. The company also recently killed off its flashy Glyph interface (I personally think the replacement is better) and has increasingly been emphasizing its original use of AI as a selling point for its phones.

Today’s NYT Strands Hints, Answers and Help for Oct. 27, #603

Here are hints and answers for the NYT Strands puzzle for Oct. 27, No. 603.

Looking for the most recent Strands answer? Click here for our daily Strands hints, as well as our daily answers and hints for The New York Times Mini Crossword, Wordle, Connections and Connections: Sports Edition puzzles.

Today’s NYT Strands puzzle is fun, but some of the answers are long and quite tough to unscramble. So if you need hints and answers, read on.

I go into depth about the rules for Strands in this story.

If you’re looking for today’s Wordle, Connections and Mini Crossword answers, you can visit CNET’s NYT puzzle hints page.

Hint for today’s Strands puzzle

Today’s Strands theme is: Witch way?

If that doesn’t help you, here’s a clue: What Harry Potter finds out he is.

Clue words to unlock in-game hints

Your goal is to find hidden words that fit the puzzle’s theme. If you’re stuck, find any words you can. Every time you find three words of four letters or more, Strands will reveal one of the theme words. These are the words I used to get those hints but any words of four or more letters that you find will work:

- CARD, DINT, RANT, MULE, MALE, HARM, MALT, TALE, TINT, CANT, ROAD

Answers for today’s Strands puzzle

These are the answers that tie into the theme. The goal of the puzzle is to find them all, including the spangram, a theme word that reaches from one side of the puzzle to the other. When you have all of them (I originally thought there were always eight but learned that the number can vary), every letter on the board will be used. Here are the nonspangram answers:

- WAND, CHARM, AMULET, POTION, INCANTATION, CAULDRON.

Today’s Strands spangram

Today’s Strands spangram is WIZARDRY. To find it, look for the W that’s three letters to the right on the top row, and wind down.

Quick tips for Strands

#1: To get more clue words, see if you can tweak the words you’ve already found, by adding an “S” or other variants. And if you find a word like WILL, see if other letters are close enough to help you make SILL, or BILL.

#2: Once you get one theme word, look at the puzzle to see if you can spot other related words.

#3: If you’ve been given the letters for a theme word, but can’t figure it out, guess three more clue words, and the puzzle will light up each letter in order, revealing the word.
