Technologies

Honor’s Magic V5 Boasts On-Device Live AI Call Translation for Guaranteed Privacy

In an exclusive interview with CNET, Honor’s President of Product Fei Fang reveals how the V5’s AI model will allow for more speed, accuracy and privacy.

«Hola! ¿Hablas inglés?» I asked the woman who answered the phone in the Barcelona restaurant.

I was calling in a futile attempt to make a reservation for the CNET team dinner during Mobile World Congress this year. Unfortunately, I don’t know Spanish (I learned French and German at school). And as it turned out, she didn’t speak English either.

«No!» she said, and brusquely hung up.

What I needed in that moment was the kind of AI call translation feature that’s becoming increasingly prevalent on phones — including those made by Samsung and Google, and, starting next week, Honor.

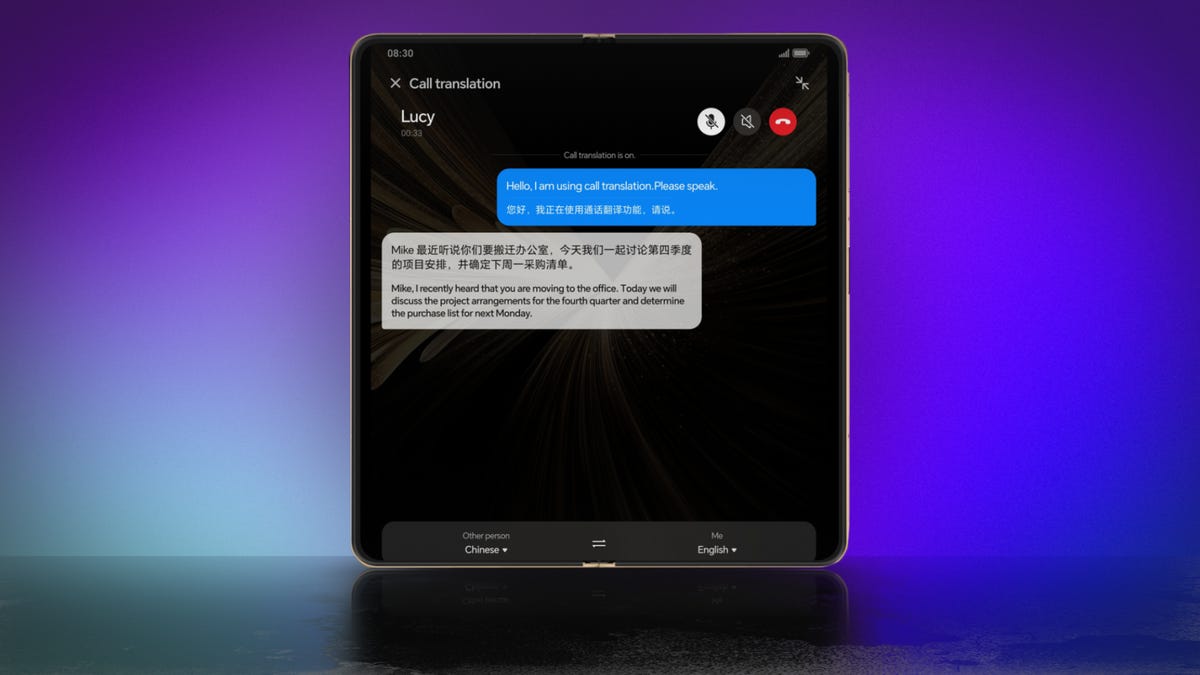

When Honor unveils its Magic V5 foldable at a launch event on Aug. 28 in London, it will come with what the company is calling «the industry’s first on-device large speech model,» which will allow live AI call translation to take place on device, with no cloud processing.

Currently the phone supports six languages: English, Chinese, French, German, Italian and Spanish. For the aforementioned reasons, I can’t test all of these, but I’ve already had a play around with the feature and can confirm it did a very effective job of translating my garbled messages into French. I only wish I’d had it available to me in Spain when I needed it.

The model Honor has deployed was designed by the company in collaboration with Shanghai Jiao Tong University and is based on the open-source Whisper model, said Fei Fang, president of product at Honor, in an interview. It’s been optimized for streaming speech recognition, automatic language detection and translation inference acceleration (that’s speed and efficiency, to you and me).

According to Fang, Honor’s user experience studies have shown that as long as translation occurs within 1.5 seconds, it doesn’t «induce waiting anxiety» in anyone attempting to use AI call translation. As such, the company has made sure to keep latency within that limit.

«We also work together with industry language experts to consistently and comprehensively evaluate the accuracy of our output,» she added. «The assessment is primarily based on five metrics: accuracy, logical coherence, readability, grammatical correctness and conciseness.»

In addition to Honor’s AI model, live translation is being powered by Qualcomm’s Snapdragon 8 Elite chip. The 8 Elite’s NPU allows multimodal generative AI applications to be integrated onto the device. Honor’s algorithms work together with the NPU to keep power consumption as low as possible while maintaining the required accuracy of the translations, said Christopher Patrick, SVP of mobile handsets at Qualcomm.

There are a number of benefits to having the AI model embedded on the Magic V5, but perhaps the most compelling is the privacy it guarantees. It means that everything is processed locally and your calls will therefore remain completely confidential. The fact that the model lives on device and you don’t need to download voice packages also reduces its storage needs.

Another benefit of running the model on the phone itself is «offline usability,» said Patrick. «All conversation information is stored directly on-device and users can access it anytime, anywhere, without network restrictions.»

The work Honor has done on AI call translation is set to be recognized at the upcoming Interspeech conference on speech science and tech. But already, Honor is thinking about how the same technology can enable other new and exciting features for the people who buy its phones.

«Beyond the essential user scenario of call translation, Honor’s on-device large speech model will also be deployed in scenarios such as face-to-face translation [and] AI subtitles,» said Fang. The process of developing the speech model has allowed Honor’s AI team to gain extensive experience of model optimization, which it will use to develop other AI applications, she added.

«Looking ahead, we will continue to expand capabilities in areas such as emotion recognition and health monitoring, further empowering voice interactions with your on-device AI assistant,» she said.


Today’s NYT Mini Crossword Answers for Saturday, Feb. 21

Here are the answers for The New York Times Mini Crossword for Feb. 21.

Looking for the most recent Mini Crossword answer? Click here for today’s Mini Crossword hints, as well as our daily answers and hints for The New York Times Wordle, Strands, Connections and Connections: Sports Edition puzzles.

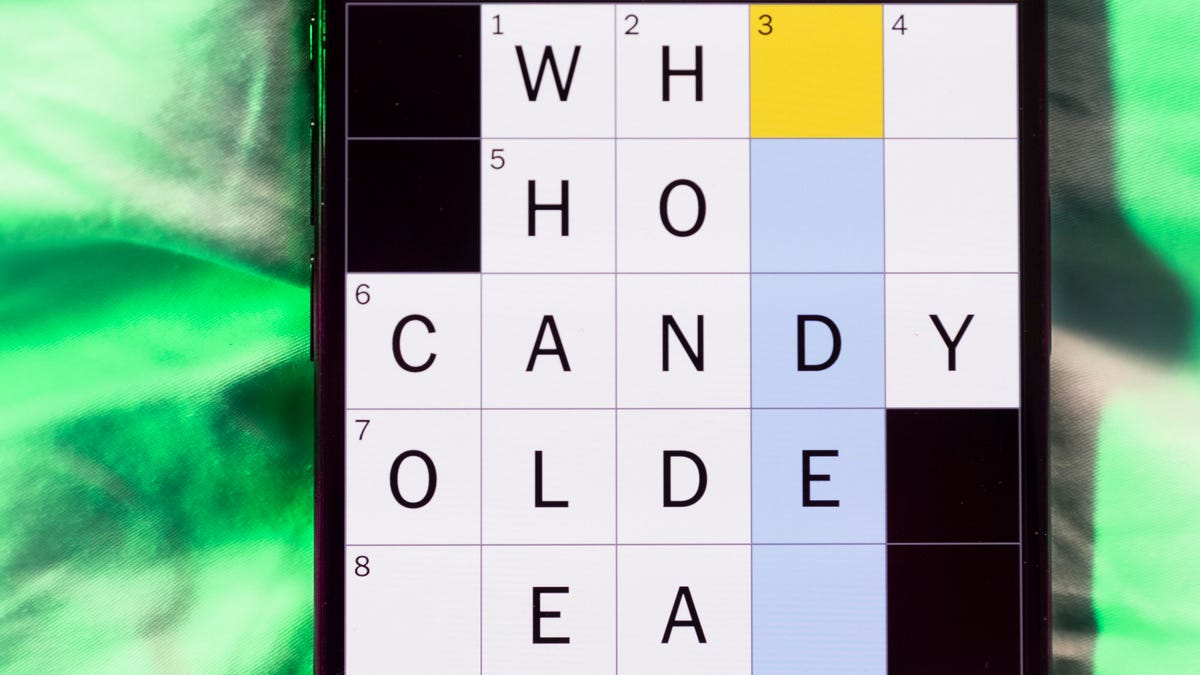

Need some help with today’s Mini Crossword? It’s the long Saturday version, and some of the clues are stumpers. I was really thrown by 10-Across. Read on for all the answers. And if you could use some hints and guidance for daily solving, check out our Mini Crossword tips.

If you’re looking for today’s Wordle, Connections, Connections: Sports Edition and Strands answers, you can visit CNET’s NYT puzzle hints page.

Read more: Tips and Tricks for Solving The New York Times Mini Crossword

Let’s get to those Mini Crossword clues and answers.

Mini across clues and answers

1A clue: «Jersey Shore» channel

Answer: MTV

4A clue: «___ Knows» (rhyming ad slogan)

Answer: LOWES

6A clue: Second-best-selling female musician of all time, behind Taylor Swift

Answer: MADONNA

8A clue: Whiskey grain

Answer: RYE

9A clue: Dreaded workday: Abbr.

Answer: MON

10A clue: Backfiring blunder, in modern lingo

Answer: SELFOWN

12A clue: Lengthy sheet for a complicated board game, perhaps

Answer: RULES

13A clue: Subtle «Yes»

Answer: NOD

Mini down clues and answers

1D clue: In which high schoolers might role-play as ambassadors

Answer: MODELUN

2D clue: This clue number

Answer: TWO

3D clue: Paid via app, perhaps

Answer: VENMOED

4D clue: Coat of paint

Answer: LAYER

5D clue: Falls in winter, say

Answer: SNOWS

6D clue: Married title

Answer: MRS

7D clue: ___ Arbor, Mich.

Answer: ANN

11D clue: Woman in Progressive ads

Answer: FLO


Today’s NYT Connections: Sports Edition Hints and Answers for Feb. 21, #516

Here are hints and the answers for the NYT Connections: Sports Edition puzzle for Feb. 21, No. 516.

Looking for the most recent regular Connections answers? Click here for today’s Connections hints, as well as our daily answers and hints for The New York Times Mini Crossword, Wordle and Strands puzzles.

Today’s Connections: Sports Edition is a tough one. I actually thought the purple category, usually the most difficult, was the easiest of the four. If you’re struggling with today’s puzzle but still want to solve it, read on for hints and the answers.

Connections: Sports Edition is published by The Athletic, the subscription-based sports journalism site owned by The Times. It doesn’t appear in the NYT Games app, but it does in The Athletic’s own app. Or you can play it for free online.

Read more: NYT Connections: Sports Edition Puzzle Comes Out of Beta

Hints for today’s Connections: Sports Edition groups

Here are four hints for the groupings in today’s Connections: Sports Edition puzzle, ranked from the easiest yellow group to the tough (and sometimes bizarre) purple group.

Yellow group hint: Old Line State.

Green group hint: Hoops legend.

Blue group hint: Robert Redford movie.

Purple group hint: Vroom-vroom.

Answers for today’s Connections: Sports Edition groups

Yellow group: Maryland teams.

Green group: Shaquille O’Neal nicknames.

Blue group: Associated with «The Natural.»

Purple group: Sports that have a driver.

Read more: Wordle Cheat Sheet: Here Are the Most Popular Letters Used in English Words

What are today’s Connections: Sports Edition answers?

The yellow words in today’s Connections

The theme is Maryland teams. The four answers are Midshipmen, Orioles, Ravens and Terrapins.

The green words in today’s Connections

The theme is Shaquille O’Neal nicknames. The four answers are Big Aristotle, Diesel, Shaq and Superman.

The blue words in today’s Connections

The theme is associated with «The Natural.» The four answers are baseball, Hobbs, Knights and Wonderboy.

The purple words in today’s Connections

The theme is sports that have a driver. The four answers are bobsled, F1, golf and water polo.


Wisconsin Reverses Decision to Ban VPNs in Age-Verification Bill

The law would have required websites to block VPN users from accessing «harmful material.»

Following a wave of criticism, Wisconsin lawmakers have decided not to include a ban on VPN services in the age-verification bill that is making its way through the state legislature.

Wisconsin Senate Bill 130 (and its sister Assembly Bill 105), introduced in March 2025, aims to prohibit businesses from «publishing or distributing material harmful to minors» unless there is a reasonable «method to verify the age of individuals attempting to access the website.»

One provision would have required businesses to bar people from accessing their sites via «a virtual private network system or virtual private network provider.»

A VPN lets you access the internet via an encrypted connection, enabling you to bypass firewalls and unblock geographically restricted websites and streaming content. While using a VPN, your IP address and physical location are masked, and your internet service provider doesn’t know which websites you visit.

Wisconsin state Sen. Van Wanggaard moved to delete that provision in the legislation, thereby releasing VPNs from any liability. The state assembly agreed to remove the VPN ban, and the bill now awaits Wisconsin Governor Tony Evers’s signature.

Rindala Alajaji, associate director of state affairs at the digital freedom nonprofit Electronic Frontier Foundation, says Wisconsin’s U-turn is «great news.»

«This shows the power of public advocacy and pushback,» Alajaji says. «Politicians heard the VPN users who shared their worries and fears, and the experts who explained how the ban wouldn’t work.»

Earlier this week, the EFF had written an open letter arguing that the draft laws did not «meaningfully advance the goal of keeping young people safe online.» The EFF said that blocking VPNs would harm many groups that rely on that software for private and secure internet connections, including «businesses, universities, journalists and ordinary citizens,» and that «many law enforcement professionals, veterans and small business owners rely on VPNs to safely use the internet.»

More from CNET: Best VPN Service for 2026: VPNs Tested by Our Experts

VPNs can also help you get around age-verification laws — for instance, if you live in a state or country that requires age verification to access certain material, you can use a VPN to make it look like you live elsewhere, thereby gaining access to that material. As age-restriction laws increase around the US, VPN use has also increased. However, many people are using free VPNs, which are fertile ground for cybercriminals.

In its letter to Wisconsin lawmakers prior to the reversal, the EFF argued that it is «unworkable» to require websites to block VPN users from accessing adult content. The EFF said such sites cannot «reliably determine» where a VPN customer lives — it could be any US state or even other countries.

«As a result, covered websites would face an impossible choice: either block all VPN users everywhere, disrupting access for millions of people nationwide, or cease offering services in Wisconsin altogether,» the EFF wrote.

Wisconsin is not the only state to consider VPN bans to prevent access to adult material. Last year, Michigan introduced the Anticorruption of Public Morals Act, which would ban all use of VPNs. If passed, it would force ISPs to detect and block VPN usage and also ban the sale of VPNs in the state. Fines could reach $500,000.
