Technologies

I Need Apple to Make the iPhone 17 Cameras Amazing. Here’s What It Should Do

Commentary: After a lackluster WWDC, Apple needs to bring the razzle dazzle with the iPhone 17. Here’s how it can do just that.

Apple’s WWDC was a letdown for me, with no new hardware announced and few new features beyond a glassy interface for iOS 26. I’m pinning my hopes on the iPhone 17 to get my pulse racing, and the best way it can do that is with the camera. The iPhone 16 Pro already packs one of the best camera setups found on any phone, and it’s capable of taking stunning images in any conditions. Throw in its ProRes video, Log recording and the neat 4K slow motion mode and it’s a potent video shooter too. It even put up a strong fight against the other best camera phones around, including the Galaxy S25 Ultra, the Pixel 9 Pro and the Xiaomi 14 Ultra.

Despite that, it’s still not the perfect camera. While early reports from industry insiders claim that the phone’s video skills will get a boost, there’s more the iPhone 17 will need to make it an all-round photography powerhouse. As both an experienced phone reviewer and a professional photographer, I have exceptionally high expectations for top-end phone cameras. And, having used the iPhone 16 Pro since its launch, I have some thoughts on what needs to change.

Here are the main points I want to see improved on the iPhone 17 when it likely launches in September 2025.

An accessible Pro camera mode

At WWDC, Apple showed off the changes coming in iOS 26, which include a radical change to the interface with Liquid Glass. That simplified style extends to the camera app too, with Apple paring the interface down to the most basic functions of Photo, Video and zoom levels. Presumably, the idea is to make it super easy for even the most beginner of photographers to open the camera and start taking Instagram-worthy snaps.

And that’s fine, but what about those of us who buy the Pro models in order to take deeper advantage of features like exposure compensation, Photographic Styles and ProRaw formats? It’s not totally clear yet how these features can be accessed within the new camera interface, but they shouldn’t be tucked away. Many photographers — myself very much included — want to use these tools as standard, using our powerful iPhones in much the same way we would a mirrorless camera from Canon or Sony.

That means relying on advanced settings to take control over the image-taking process to craft shots that go beyond simple snaps. If anything, Apple’s camera app has always been too simple, with even basic functions like white balance being unavailable. To see Apple take things to an even more simplistic level is disappointing, and I want to see how the company will continue to make these phones usable for enthusiastic photographers.

Larger image sensor

Though the 1/1.28-inch sensor found on the iPhone 16 Pro’s main camera is already a good size — and marginally larger than the S24 Ultra’s 1/1.33-inch sensor — I want to see Apple go bigger. A larger image sensor can capture more light and offer better dynamic range. It’s why pro cameras tend to have at least «full frame» image sensors, while really high-end cameras, like the amazing Hasselblad 907X, have enormous «medium format» sensors for pristine image quality.

Xiaomi understands this, equipping its 15 Ultra and previous 14 Ultra with 1-inch type sensors. They’re larger than the sensors found on almost any other phone, which allowed the 15 Ultra to take stunning photos all over Europe, while the 14 Ultra was heroic in capturing a Taylor Swift concert. I’m keen to see Apple at least match Xiaomi’s phones here with a similar 1-inch type sensor. Though if we’re talking pie-in-the-sky wishes, maybe the iPhone 17 could be the first smartphone with a full-frame image sensor. I won’t hold my breath on that one — the phone, and the lenses, would need to be immense to accommodate it, so it’d likely be more efficient just to let you make calls with your mirrorless camera.
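To put rough numbers on those sensor sizes, here is a minimal Python sketch that compares the nominal formats quoted above. It rests on a simplifying assumption that is mine, not the manufacturers': that all three sensors share an aspect ratio and that their diagonals scale linearly with the nominal "inch-type" figure, so area scales with its square. Real sensor dimensions can deviate from this, so treat the output as a ballpark only.

```python
# Nominal optical formats quoted in the article, expressed in "inch-type" units.
NOMINAL_FORMAT = {
    "iPhone 16 Pro (1/1.28-inch)": 1 / 1.28,
    "Galaxy S24 Ultra (1/1.33-inch)": 1 / 1.33,
    "Xiaomi 14/15 Ultra (1-inch type)": 1.0,
}


def relative_area(format_a: float, format_b: float) -> float:
    """Approximate area of sensor A divided by the area of sensor B.

    Assumption: both sensors share an aspect ratio and their diagonals scale
    linearly with the nominal format, so area scales with its square.
    """
    return (format_a / format_b) ** 2


if __name__ == "__main__":
    iphone = NOMINAL_FORMAT["iPhone 16 Pro (1/1.28-inch)"]
    for name, fmt in NOMINAL_FORMAT.items():
        print(f"{name}: {relative_area(fmt, iphone):.2f}x the iPhone's area")
```

Under these assumptions, a 1-inch type sensor works out to roughly 1.6 times the light-gathering area of the iPhone 16 Pro's main sensor, while the iPhone's edge over the 1/1.33-inch sensor is only about 8%.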

Variable aperture

Speaking of the Xiaomi 14 Ultra, one of the other reasons that phone rocks so hard for photography is its variable aperture on the main camera. Its widest aperture is f/1.6 — significantly wider than the f/1.78 of the iPhone 16 Pro. That wider aperture lets in a lot of light in dim conditions and more authentically achieves out-of-focus bokeh around a subject.

But Xiaomi’s 14 Ultra aperture can also close down to f/4, and with that narrower aperture, it’s able to create starbursts around points of light. I love achieving this effect in nighttime imagery with the phone. It makes the resulting images look much more like they’ve been taken with a professional camera and lens, while the same points of light on the iPhone just look like roundish blobs. Disappointingly, Xiaomi actually removed this feature from the new 15 Ultra so whether Apple sees value in implementing this kind of technology remains to be seen.
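The aperture figures above can be turned into light-gathering numbers with a little arithmetic. This is a minimal Python sketch using the standard rule that the light reaching the sensor is proportional to 1/N² for f-number N. It assumes everything else is equal (shutter speed, lens transmission, sensor), which is never exactly true when comparing two different phones. The function names are my own.

```python
import math


def light_ratio(f_wide: float, f_narrow: float) -> float:
    """How many times more light the wider aperture admits than the narrower one.

    Uses the 1/N^2 relationship between f-number and illuminance and ignores
    lens transmission losses.
    """
    return (f_narrow / f_wide) ** 2


def stops_between(f_wide: float, f_narrow: float) -> float:
    """The same difference expressed in photographic stops (doublings of light)."""
    return math.log2(light_ratio(f_wide, f_narrow))


if __name__ == "__main__":
    # Xiaomi 14 Ultra wide open versus the iPhone 16 Pro's fixed aperture.
    print(f"f/1.6 vs f/1.78: {light_ratio(1.6, 1.78):.2f}x the light")
    # Xiaomi 14 Ultra wide open versus stopped down for starbursts.
    print(f"f/1.6 vs f/4: {stops_between(1.6, 4.0):.2f} stops")
```

By this estimate, f/1.6 admits roughly 24% more light than f/1.78, and closing down from f/1.6 to f/4 costs about 2.6 stops, which is why the narrow setting suits bright points of light at night rather than dim scenes overall.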

More Photographic Styles

Though Apple has had various styles and effects integrated into the iPhone’s cameras, the iPhone 16 range took it further, with more control over the effects and more toning options. It’s enough that CNET Senior Editor Lisa Eadicicco even declared the new Photographic Styles her «favorite new feature on Apple’s latest phone.»

I think they’re great too. Or rather, they’re a great start. The different color tones, like the ones you get with the Amber and Gold styles, add some lovely warmth to scenes, and the Quiet effect adds a vintage filmic fade, but there’s still not a whole lot to choose from and the interface can be a little slow to work through. I’d love to see Apple introduce more Photographic Styles with different color toning options, or even with tones that mimic vintage film stocks from Kodak or Fujifilm.

And sure, there are plenty of third-party apps like VSCO or Snapseed that let you play around with color filters all you want. But using Apple’s styles means you can take your images with the look already applied, and then change it afterward if you don’t like it — nothing is hard-baked into your image.

I was recently impressed with Samsung’s new tool for creating custom color filters based on the look of other images. I’d love to see Apple bring that level of image customization to the iPhone.

Better ProRaw integration with Photographic Styles

I do think Apple has slightly missed an opportunity with its Photographic Styles, though, in that you can use them only when taking images in HEIF (high-efficiency image format). Unfortunately, you can’t use them when shooting in ProRaw. I love Apple’s use of ProRaw on previous iPhones, as it takes advantage of all of the iPhone’s computational photography — including things like HDR image blending — but still outputs a DNG raw file for easier editing.

The DNG file typically also offers more latitude to brighten dark areas or tone down highlights in an image, making it extremely versatile. Previously, Apple’s color presets could be used when shooting in ProRaw, and I loved it. I frequently shot street-style photos using the high contrast black-and-white mode and then edited the raw file further.

Now using that same black-and-white look means only shooting images in HEIF format, eliminating the benefits of using Apple’s ProRaw. Oddly, while the older-style «Filters» are no longer available in the camera app when taking a raw image, you can still apply those filters to raw photos in the iPhone’s gallery app through the editing menu.

LUTs for ProRes video

And while we’re on the topic of color presets and filters, Apple needs to bring those to video, too. On the iPhone 15 Pro, Apple introduced the ability to shoot ProRes video with Log encoding, which results in very low-contrast, almost gray-looking footage. The idea is that video editors will take this raw footage and then apply their edits on top, often applying contrast and color presets known as LUTs (look-up tables) that give footage a particular look — think dark and blue for horror films or warm and light tones for a romantic drama vibe.

But Apple doesn’t offer any kind of LUT for editing ProRes video on the iPhone, beyond simply ramping up the contrast, which doesn’t really do the job properly. Sure, the point of ProRes is that you would take that footage off the iPhone, put it into software like DaVinci Resolve, and then properly color grade the footage so it looks sleek and professional.

But that still leaves the files on your phone, and I’d love to be able to do more with them. My gallery is littered with ungraded video files that I’ll do very little with because they need color grading externally. I’d love to share them to Instagram, or with my family over WhatsApp, after transforming those files from drab and gray to beautifully colorful.

With the iPhone 17, or even with the iPhone 16 as a software update, I want to see Apple creating a range of its own LUTs that can be directly applied to ProRes video files on the iPhone. While we didn’t see this software functionality discussed as part of the company’s June WWDC keynote, that doesn’t mean it couldn’t be launched with the iPhone in September.
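For anyone unsure what a LUT actually does to footage, here is a toy Python sketch. It builds one small look-up table per color channel and uses it to remap a pixel, giving a flat gray a warmer cast. This is purely illustrative: the "warm" values are invented, the function names are hypothetical, and the LUTs used for real color grading are typically 3D tables (often shipped as .cube files) rather than three independent 1D curves. It is not how Apple or any editing app implements it.

```python
def build_lut(gain: float, gamma: float = 1.0) -> list[int]:
    """Build a 256-entry table mapping each 8-bit input level to an output level.

    gamma below 1.0 lifts the midtones; gain scales the whole channel.
    Results are clamped to the valid 0-255 range.
    """
    table = []
    for level in range(256):
        normalized = level / 255.0
        adjusted = (normalized ** gamma) * gain
        table.append(max(0, min(255, round(adjusted * 255))))
    return table


# Hypothetical "warm" look: push red up, leave green close to neutral, pull blue down.
WARM_LOOK = {
    "r": build_lut(gain=1.10, gamma=0.90),
    "g": build_lut(gain=1.00, gamma=0.95),
    "b": build_lut(gain=0.90, gamma=1.00),
}


def apply_look(pixel: tuple[int, int, int], look: dict[str, list[int]]) -> tuple[int, int, int]:
    """Remap one RGB pixel by looking each channel up in its table."""
    r, g, b = pixel
    return (look["r"][r], look["g"][g], look["b"][b])


if __name__ == "__main__":
    flat_gray = (128, 128, 128)  # stand-in for drab, ungraded footage
    print(apply_look(flat_gray, WARM_LOOK))
```

Because the grade is just a table look-up per pixel, it is cheap to apply, which is part of why built-in LUTs for on-device footage seem like a reasonable thing to ask for.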

If Apple were able to implement all these changes — excluding, perhaps, the full-frame sensor which even I can admit is a touch ambitious — it would have an absolute beast of a camera on its hands.

Today Only You Can Get the Super Mario Galaxy 2-Pack at $14 Off

Enjoy two of Nintendo’s best Mario games in one package with a decent amount off.

Best Buy has a deal on at the moment that knocks the price of Super Mario Galaxy and Super Mario Galaxy 2 on Nintendo Switch down to $56. That’s a $14 discount, which is a lot on a first-party Nintendo game.

Nintendo Switch games are notorious for never really going down in price, which makes every deal that happens worth at least considering. Last time this was on sale, it was for $59; this is $3 cheaper than that, making the value even better. That’s two all-time-classic games for $28 each, basically, which is fantastic.

The only problem with this is that it’s a Best Buy daily deal, which means that it runs out tonight. So, if you do want to pick this up at this price, you’re going to need to be quick.

In his review, CNET’s Scott Stein was a big fan of both revamped Mario titles included in this bundle, but less so the $70 asking price. This deal goes a long way to helping fix that problem and gives you the chance to add two classic Mario titles to your collection at a discount.

Originally released on the Wii, both Super Mario Galaxy and Galaxy 2 have been updated with higher-resolution visuals, an improved interface and new content, so there’s never been a better time to play them. And unlike the originals, you can play these Switch games anywhere and at any time.

Why this deal matters

Mario games are like no other, and they’re great for adults and kids alike. This bundle includes two of the best, and right now you can pick it up at a price that makes them an even better buy than they already were. Whether you played them the first time around, you’re looking to see what all the fuss was about or want to introduce them to a new generation of Mario fans, this is the deal for you.

I’ve Seen It With My Own Eyes: The Robots Are Here and Walking Among Us

The «physical AI» boom has created a world of opportunity for robot makers, and they’re not holding back.

It’s been 24 years since CNET first published an article with the headline The robots are coming. It’s a phrase I’ve repeated in my own writing over the years — mostly in jest. But now in 2026, for the first time, I feel confident in declaring that the robots have finally arrived.

I kicked off this year, as I often do, wandering the halls of the Las Vegas Convention Center and its hotel-based outposts on the lookout for the technology set to define the next 12 months. CES has always been a hotbed of activity for robots, but more often than not, a robot that makes a flashy Vegas debut doesn’t go on to have a rich, meaningful career in the wider world.

In fact, as cute as they often are and as fun as they can be to interact with on the show floor, most robots I’ve seen at CES over the years amount to little more than gimmicks. They either come back year after year with no notable improvements or are never seen or heard from again.

In more than a decade of covering the show, I’ve been waiting for a shift to occur. In 2026, I finally witnessed it. From Hyundai unveiling the final product version of the Boston Dynamics Atlas humanoid robot in its press conference to Nvidia CEO Jensen Huang’s focus on «physical AI» during his keynote, a sea change was evident this year in how people were talking about robots.

«We’ve had this dream of having robots everywhere for decades and decades,» Rev Lebaredian, Nvidia’s vice president of Omniverse and simulation, told me on the sidelines of the chipmaker’s vast exhibition at the glamorous Fontainebleau Hotel. «It’s been in sci-fi as long as we can remember.»

Throughout the show, I felt like I was watching that sci-fi vision come to life. Everywhere I went, I was stumbling upon robot demos (some of which will be entering the market this year) drawing crowds, like the people lining up outside Hyundai’s booth to see the new Atlas in action.

So what’s changed? Until now, «we didn’t have the technology to create the brain of a robot,» Lebaredian said.

AI has unlocked our ability to apply algorithms to language, and it’s being applied to the physical world, changing everything for robots and those who make them.

The physical AI revolution

What truly makes a robot a robot? Rewind to CES 2017: I spent my time at the show asking every robotics expert that question, sparked by the proliferation of autonomous vehicles, drones and intelligent smart home devices.

This exercise predated the emergence of generative AI and models such as OpenAI’s ChatGPT, but already I could see that by integrating voice assistants into their products, companies were beginning to blur the boundaries of what could be considered robotics.

Not only has the tech evolved since that time, but so has the language we use to talk about it. At CES 2026, the main topic of conversation seemed to be «physical AI.» It’s an umbrella term that can encompass everything from self-driving cars to robots.

«If you have any physical embodiments, where AI is not only used to perceive the environment, but actually to take decisions and actions that interact with the environment around it … then it’s physical AI,» Ahmed Sadek, head of physical AI and vice president of engineering at chipmaker Qualcomm, told me.

Autonomous vehicles have been the easiest expression of physical AI to build so far, according to Lebaredian, simply because their main challenge is to dodge objects rather than interact with them. «Avoiding touching things is a lot easier than manipulating things,» he said.

Still, the development of self-driving vehicles has done much of the heavy lifting on the hardware, setting the stage for robot development to accelerate at a rapid pace now that the software required to build a brain is catching up.

For Nvidia, which worked on the new Atlas robot with Boston Dynamics, and Qualcomm, which announced its latest robotics platform at CES, these developments present a huge opportunity.

But that opportunity also extends to start-ups. Featured prominently at the CES 2026 booth of German automotive company Schaeffler was the year-and-a-half-old British company Humanoid, demonstrating the capabilities of its robot HMND 01.

The wheeled robot was built in just seven months, Artem Sokolov, Humanoid’s CEO, told me as we watched it sort car parts with its pincerlike hands. «We built our bipedal one for service and household much faster — in five months,» Sokolov added.

Humanoid’s speed can be accounted for by the AI boom plus an influx of talent recruited from top robotics companies, said Sokolov. The company has already signed around 25,000 preorders for HMND 01 and completed pilots with six Fortune 500 companies, he said.

This momentum extends to the next generation of Humanoid’s robots, where Sokolov doesn’t foresee any real bottlenecks. The main factors dictating the pace will be improvements in AI models and making the hardware more reliable and cost effective.

Humanoid hype hits its peak

Humanoid the company might have the rights to the name, but the concept of humanoids is a wider domain.

By the end of last year, the commercialization of humanoid robots had entered an «explosive phase of growth,» with a 508% year-on-year increase in global market revenue to $440 million, according to a report released by IDC this month.

At CES, Qualcomm’s robot demonstration showed how its latest platform could be adapted across different forms, including a robotic arm that could assemble a sandwich. But it was the humanoids at its booth that caused everyone to pull out their phones and start filming.

«Our vision is that if you have any embodiment, any mechatronic system, our platform should be able to transform it to a continuously learning intelligent robot,» said Qualcomm’s Sadek. But, he added, the major benefit of the humanoid form is its «flexibility.»

Some in the robotics world have criticized the focus on humanoids, due to their replication of our own limitations. It’s a notion that Lebaredian disagrees with, pointing out that we’ve designed our world around us and that robots need to be able to operate within it.

«There are many tasks that are dull, dangerous and dirty — they call it the three Ds — that are being done by humans today, that we have labor shortages for and that this technology can potentially go help us with,» he said.

We already have many specialist robots working in factories around the world, Lebaredian added. With their combination of arms, legs and mobility, humanoids are «largely a superset of all of the other kinds of robots» and, as such, are perfect for the more general-purpose work we need help with.

The hype around robots — and humanoids in particular — at CES this year felt intense. Even Boston Dynamics CEO Robert Playter acknowledged this in a Q&A with reporters moments after he unveiled the new Atlas on stage.

But it’s not just hype, Playter insisted, because Boston Dynamics is already demonstrating that it can put thousands of robots in the market. «That is not an indication of a hype cycle, but actually an indication of an emerging industry,» he said.

A huge amount of money is being poured into a rapidly growing number of robotics start-ups. The rate of this investment is a signal that the tech is ready to go, according to Nvidia’s Lebaredian.

«It’s because, fundamentally, the experts, people who understand this stuff, now believe, technically, it’s all possible,» he said. «We’ve switched from a scientific problem of discovery to an engineering problem.»

Robot evolution: From industry to home

From what I observed at the show, this engineering «problem» is one that many companies have already solved. Robots such as Atlas and HMND 01 have crossed the threshold from prototype to factory ready. The question for many of us is when these robots will be ready for our homes.

Playter has openly talked about Boston Dynamics’ ambitions in this regard. He sees Atlas evolving into a home robot — but not yet. Some newer entrants to the robotics market — 1X, Sunday Robotics and Humanoid among them — are keen to get their robots into people’s homes in the next couple of years. Playter cautions against this approach.

«Companies are advertising that they want to go right to the home,» he said. «We think that’s the wrong strategy.»

The reasons he listed are twofold: pricing and safety. Playter echoed a sentiment I’ve heard elsewhere: that the first real use for home humanoid robots will be to carry out care duties for disabled and elderly populations. Perhaps in 20 years, you will have a robot carry you in and out of bed, but relying on one to do so when you’re in a vulnerable state poses a «critical safety issue,» he said.

Putting robots in factories first allows people to work closely with them while keeping a safe distance, allowing those safety kinks to be ironed out. The deployment of robots at scale in industrial settings will also lead to mass manufacturing of components that will, at some point, make robots affordable for the rest of us, said Playter (unlike 1X’s $20,000 Neo robot, for example).

Still, he imagines the business model will be «robots as a service,» even when they do first enter our homes. Elder care itself is a big industry with real money being spent that could present Boston Dynamics with a market opportunity as Atlas takes its first steps beyond the factory floor.

«I spent a lot of money … with my mom in specialty care the last few years,» he said. «Having robots that can preserve autonomy and dignity at home, I think people will actually spend money — maybe $20K a year.»

The first «care» robots are more likely to be companion robots. This year at CES, Tombot announced that its robotic labrador, Jennie, who first charmed me back at the show in 2020, is finally ready to go on sale. It served as yet another signal to me that the robots are ready to lead lives beyond the convention center walls.

Unlike in previous years, I left Vegas confident that I’ll be seeing more of this year’s cohort of CES robots in the future. Maybe not in my home just yet, but it’s time to prepare for a world in which robots will increasingly walk among us.

Technologies

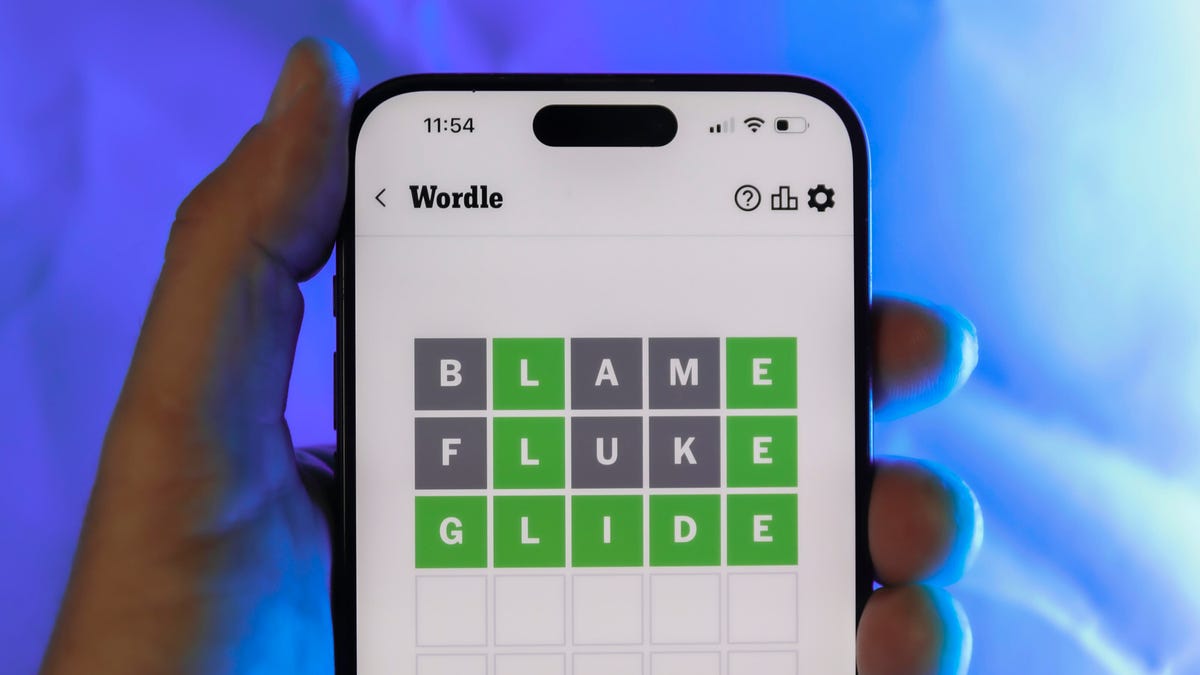

Today’s Wordle Hints, Answer and Help for Jan. 29, #1685

Here are hints and the answer for today’s Wordle for Jan. 29, No. 1685.

Today’s Wordle puzzle was a tough one for me. I never seem to guess three of the letters in this word. If you need a new starter word, check out our list of which letters show up the most in English words. If you need hints and the answer, read on.

Today’s Wordle hints

Before we show you today’s Wordle answer, we’ll give you some hints. If you don’t want a spoiler, look away now.

Wordle hint No. 1: Repeats

Today’s Wordle answer has no repeated letters.

Wordle hint No. 2: Vowels

Today’s Wordle answer has one vowel and one sometimes vowel.

Wordle hint No. 3: First letter

Today’s Wordle answer begins with F.

Wordle hint No. 4: Last letter

Today’s Wordle answer ends with Y.

Wordle hint No. 5: Meaning

Today’s Wordle answer can refer to a pastry that breaks apart easily.

TODAY’S WORDLE ANSWER

Today’s Wordle answer is FLAKY.

Yesterday’s Wordle answer

Yesterday’s Wordle answer, Jan. 28, No. 1684, was CRUEL.

Recent Wordle answers

Jan. 24, No. 1680: CLIFF

Jan. 25, No. 1681: STRUT

Jan. 26, No. 1682: FREAK

Jan. 27, No. 1683: DUSKY
