Technologies

Galaxy S24 Ultra: One Day With Samsung’s New Phone

Circle to Search and Instant Slow-Mo are my favorite new features so far.

The Galaxy S24 Ultra may look a lot like the Galaxy S23 Ultra at first glance. But Samsung's newest phones are the first to come with Galaxy AI, an umbrella term for tools and features powered by generative AI. That kind of AI is trained on data and can then generate content and conversational-sounding responses, though they aren't always accurate. It's the same flavor of AI that fuels ChatGPT, and the Galaxy S24 lineup is an example of how the tech is being applied to new smartphones.

I've been using the Galaxy S24 Ultra for a day, and one Galaxy AI feature has stood out to me in that short time: Circle to Search. I just press and hold the home button and draw a circle around anything I see on screen to launch a Google search for that object. So far it works intuitively and reliably, and it feels practically useful in everyday life, unlike some of the Galaxy S24's other AI-powered additions.

Read more: Samsung’s Galaxy Ring Will Need Less of Your Attention Than a Smartwatch

I need more time with the S24 Ultra to truly assess the usefulness of Galaxy AI and to test out the new 50-megapixel telephoto camera, among other updates.

As I wrote in my first impressions story, Samsung's new AI features don't feel strikingly different from the generative AI features from Microsoft and Google. Instead, the Galaxy S24 Ultra feels like a statement about how generative AI features are becoming table stakes on new phones.

Circle to Search is the standout Galaxy AI feature so far

Galaxy AI is a collection of features that spans everything from photo editing to texting, phone calls and note-taking. There's a tool for moving and removing unwanted objects from photos and refilling the scene so that it looks natural, for example. The Samsung Notes app can organize notes into bullet points, and phone calls can be translated between languages in real time. (Check out my first impressions story for a list of some of the top Galaxy AI features.)

But Circle to Search is the one that stood out to me the most. The feature, which was developed in partnership with Google, lets you search for almost anything on your phone's screen just by circling it. From the time I've spent with it so far, Circle to Search seems fairly accurate at determining what kind of content I'm looking for based on what I've circled.

For example, when I circled an image of the character Siobhan Roy from the HBO drama series Succession in a news article, the Galaxy S24 pulled up results that showed more information about the actress Sarah Snook, who plays her in the series. But when I just circled her outfit, I got results showing where to buy cream-colored blazers and slacks similar to those she was wearing in the image.

I've also been using the Galaxy S24 Ultra to organize my notes while writing my review and to transcribe meetings. I appreciated being able to have the phone turn my list of tests I'd like to run on the Galaxy S24 Ultra into neat and tidy bullet points. Samsung's Recorder app also transcribed a meeting and summarized its key points as a bulleted list. While I wouldn't rely on those bullet points alone for work-related tasks, they were a handy way to see which topics were discussed at specific timestamps in the conversation.

That feature isn’t unique to Samsung’s Recorder app; Google’s app can also do this, as can the transcription service Otter.ai. But combined with other features like the ability to automatically format notes, I’m beginning to see how generative AI could make phones more capable work devices.

Galaxy S24 Ultra’s new telephoto camera and slow motion

The biggest difference between the Galaxy S23 Ultra’s camera and the Galaxy S24 Ultra’s is the latter’s new 50-megapixel telephoto camera with a 5x optical zoom. That replaces the Galaxy S23 Ultra’s 10-megapixel telephoto camera with a 10x optical zoom, a choice that Samsung made after hearing feedback that users generally preferred to zoom between 2x and 5x.

Read more: Samsung Galaxy S24 Phones Have a New Zoom Trick to Get That Close-Up Photo

I haven't had time to test this extensively, but I'm already seeing a difference. Take a look at the 5x zoom photos below of a wooden sign I came across at a park in San Jose, California. The photos may look similar at first, but the differences become clear when you enlarge them. The text is sharper in the Galaxy S24 Ultra's photo, and there's less image noise.

Galaxy S24 Ultra

Galaxy S23 Ultra

Image quality aside, Samsung also introduced some new camera tricks on the Galaxy S24 Ultra. While Generative Edit may have gotten a lot of attention following Samsung's announcement, Instant Slow-Mo has impressed me the most so far. I just press and hold on a video clip I've captured, and the phone converts it into a slow-motion video by generating extra frames. I can preview how the clip will look in slow motion by pressing and lifting my finger to switch between the regular and slowed-down footage.

Taken together, it seems like Galaxy AI has the potential to make Samsung's phones more useful. Most of the features that are currently available, like Circle to Search and note summaries, feel practical rather than gimmicky. But the bigger question is whether Samsung will be able to meaningfully differentiate its offerings moving forward, especially since Google's Pixel phones provide similar functionality and Samsung plans to bring Galaxy AI to the Galaxy S23 lineup as well.

Editors’ note: CNET is using an AI engine to help create some stories. For more, see this post.


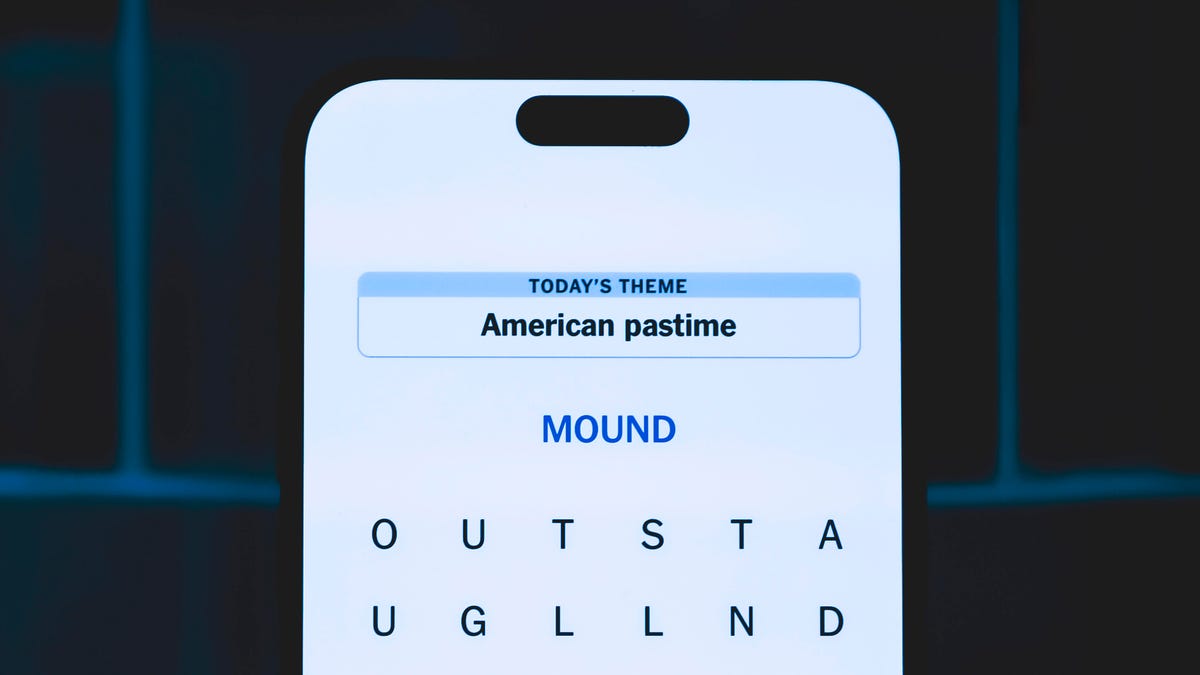

Today’s NYT Strands Hints, Answers and Help for Feb. 7 #706

Here are hints and answers for the NYT Strands puzzle for Feb. 7, No. 706.

Looking for the most recent Strands answer? Click here for our daily Strands hints, as well as our daily answers and hints for The New York Times Mini Crossword, Wordle, Connections and Connections: Sports Edition puzzles.

Today’s NYT Strands puzzle is especially tricky, as a variety of words could fit the theme. Some of the answers are difficult to unscramble, so if you need hints and answers, read on.

I go into depth about the rules for Strands in this story.

If you’re looking for today’s Wordle, Connections and Mini Crossword answers, you can visit CNET’s NYT puzzle hints page.

Read more: NYT Connections Turns 1: These Are the 5 Toughest Puzzles So Far

Hint for today’s Strands puzzle

Today’s Strands theme is: Boo-o-o-o-ring

If that doesn’t help you, here’s a clue: Zzzz… not very exciting.

Clue words to unlock in-game hints

Your goal is to find hidden words that fit the puzzle's theme. If you're stuck, find any words you can. Every time you find three words of four letters or more, Strands will reveal one of the theme words. These are the words I used to get those hints, but any words of four or more letters that you find will work:

- HIND, DATE, DRUM, MOST, CHIN, PAIN, RAIN, NOSE, TOME, TOMES

Answers for today’s Strands puzzle

These are the answers that tie into the theme. The goal of the puzzle is to find them all, including the spangram, a theme word that reaches from one side of the puzzle to the other. When you have all of them (I originally thought there were always eight but learned that the number can vary), every letter on the board will be used. Here are the nonspangram answers:

- DULL, DREARY, HUMDRUM, MUNDANE, TIRESOME

Today’s Strands spangram

Today's Strands spangram is WATCHINGPAINTDRY. To find it, start with the W that's three letters up from the bottom in the far-left column, and wind up, across and down.

Toughest Strands puzzles

Here are some of the Strands topics I’ve found to be the toughest.

#1: Dated slang. Maybe you didn’t even use this lingo when it was cool. Toughest word: PHAT.

#2: Thar she blows! I guess marine biologists might ace this one. Toughest word: BALEEN or RIGHT.

#3: Off the hook. Again, it helps to know a lot about sea creatures. Sorry, Charlie. Toughest word: BIGEYE or SKIPJACK.


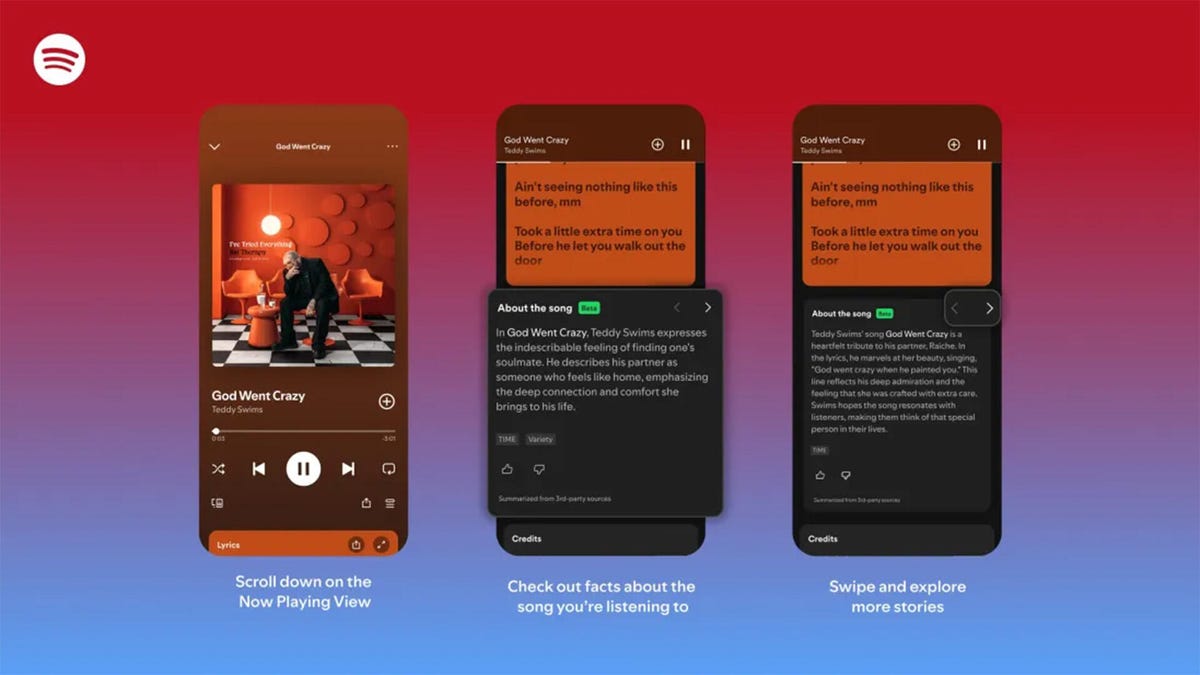

Spotify Launches ‘About the Song’ Beta to Reveal Stories Behind the Music

The stories are told on swipeable cards as you listen to the song.

Did you know Chappell Roan drew inspiration for her hit song Pink Pony Club from The Pink Cadillac, a hot-pink strip club in her Missouri hometown? Or that Fountains of Wayne's song Stacy's Mom was inspired by a friend's confessed crush on the grandmother of the band's late co-founder, Adam Schlesinger?

If you’re a fan of knowing juicy little tidbits about popular songs, you might find more trivia in About the Song, a new feature from streaming giant Spotify that’s kind of like the old VH1 show Pop-Up Video.

About the Song is available in the US, UK, New Zealand and Australia, initially for Spotify Premium members only. It works only on certain songs for now, but it will likely roll out to more music. The facts are sourced from a variety of websites, summarized by AI and shown below a song's lyrics while it plays.

"Music fans know the feeling: A song stops you in your tracks, and you immediately want to know more. What inspired it, and what's the meaning behind it? We believe that understanding the craft and context behind a song can deepen your connection to the music you love," Spotify wrote in a blog post.

While this version of the feature is new, it's not the first time Spotify has featured fun facts about the music it plays. The streaming giant partnered with Genius a decade ago for Behind the Lyrics, which included themed playlists with factoids and trivia about each song. Spotify kept this up for a few years before canceling it amid multiple controversies, including Paramore's Hayley Williams blasting Genius for using inaccurate and outdated information.

Spotify soon started testing its Storyline feature, which showed fun facts about songs to some users in a limited capacity, but it was never released as a central feature.

About the Song is the latest in a long string of announcements from Spotify, including a Page Match feature that lets you seamlessly switch to an audiobook from a physical book, and an AI tool that creates playlists for you. Spotify also recently announced that it’ll start selling physical books.

How to use About the Song

If you’re a Spotify Premium user, the feature should be available the next time you listen to music on the app.

- Start listening to any supported song.

- Scroll down past the lyrics preview box to the About the Song box.

- Swipe left and right to see more facts about the song.

I tried this with a few tracks and was pleased to learn that it doesn't just work for the most recent hits. Spotify's card for Metallica's 1986 song Master of Puppets notes the song's surge in popularity after its cameo in a 2022 episode of Stranger Things. The second card discusses the band's album art for Master of Puppets and how it was conceptualized.

To see how far support for the feature really went, I looked up a few tracks off the beaten path, like NoFX's The Decline and Ice Nine Kills' Thank God It's Friday. Spotify supported every track I checked.

There does appear to be a limit to the depth of the fun facts, which makes sense since not every song has a complicated story. For those songs, Spotify defaults to trivia about the album that features the music or an AI summary of the lyrics and what they might mean.


Today’s NYT Connections: Sports Edition Hints and Answers for Feb. 7, #502

Here are hints and the answers for the NYT Connections: Sports Edition puzzle for Feb. 7, No. 502.

Looking for the most recent regular Connections answers? Click here for today’s Connections hints, as well as our daily answers and hints for The New York Times Mini Crossword, Wordle and Strands puzzles.

Today’s Connections: Sports Edition features a fun batch of categories. The purple one requires you to find hidden words inside some of the grid words, but they’re not too obscure. If you’re struggling with today’s puzzle but still want to solve it, read on for hints and the answers.

Connections: Sports Edition is published by The Athletic, the subscription-based sports journalism site owned by The Times. It doesn't appear in the NYT Games app, but it does appear in The Athletic's own app. Or you can play it for free online.

Read more: NYT Connections: Sports Edition Puzzle Comes Out of Beta

Hints for today’s Connections: Sports Edition groups

Here are four hints for the groupings in today’s Connections: Sports Edition puzzle, ranked from the easiest yellow group to the tough (and sometimes bizarre) purple group.

Yellow group hint: Golden Gate.

Green group hint: It's "Shotime!"

Blue group hint: Same first name.

Purple group hint: Tweak a team name.

Answers for today’s Connections: Sports Edition groups

Yellow group: Bay Area teams.

Green group: Associated with Shohei Ohtani.

Blue group: Coaching Mikes.

Purple group: MLB teams, with the last letter changed.

Read more: Wordle Cheat Sheet: Here Are the Most Popular Letters Used in English Words

What are today’s Connections: Sports Edition answers?

The yellow words in today’s Connections

The theme is Bay Area teams. The four answers are 49ers, Giants, Sharks and Valkyries.

The green words in today’s Connections

The theme is associated with Shohei Ohtani. The four answers are Decoy, Dodgers, Japan and two-way.

The blue words in today’s Connections

The theme is coaching Mikes. The four answers are Macdonald, McCarthy, Tomlin and Vrabel.

The purple words in today’s Connections

The theme is MLB teams, with the last letter changed. The four answers are Angelo (Angels), Cuba (Cubs), redo (Reds) and twine (Twins).
