Technologies

Here’s What I Learned Testing Photoshop’s New Generative AI Tool

Adobe’s Firefly AI feature brings new fun and fakery to photos. It’s a profound change for image editing, though far from perfect.

Adobe has built generative AI abilities into its flagship image-editing software, releasing a Photoshop beta version Tuesday that dramatically expands what artists and photo editors can do. The move promises to release a new torrent of creativity even as it gives us all a new reason to pause and wonder if that sensational, scary or inspirational photo you see on the internet is actually real.

In my tests, detailed below, I found the tool impressive but imperfect. Adding it directly to Photoshop is a big deal, letting creators experiment within the software tool they’re likely already using without excursions to Midjourney, Stability AI’s Stable Diffusion or other outside generative AI tools.

With Adobe’s Firefly family of generative AI technologies arriving in Photoshop, you’ll be able to let the AI fill a selected part of the image with whatever it thinks most fitting – for example, replacing road cracks with smooth pavement. You can also specify the imagery you’d like with a text prompt, such as adding a double yellow line to the road.

Firefly in Photoshop can also expand an image, adding new scenery beyond the frame based on what’s already in the frame or what you suggest with text. Want more sky and mountains in your landscape photo? A bigger crowd at the rock concert? Photoshop will oblige, without today’s difficulties of finding source material and splicing it in.

The feature, called generative fill and scheduled to emerge from beta testing in the second half of 2023, can be powerful. In Adobe’s live demo, the tool was often able to match a photo’s tones, blend in AI-generated imagery seamlessly, infer the geometric details of perspective even in reflections and extrapolate the position of the sun from shadows and sky haze.

Such technologies have been emerging over the last year as Stable Diffusion, Midjourney and OpenAI’s Dall-E captured the imaginations of artists and creative pros. Now it’s built directly into the software they’re most likely to already be using, streamlining what can be a cumbersome editing process.

«It really puts the power and control of generative AI into the hands of the creator,» said Maria Yap, Adobe’s vice president of digital imaging. «You can just really have some fun. You can explore some ideas. You can ideate. You can create without ever necessarily getting into the deep tools of the product, very quickly.»

But you can’t sell anything yet. With Firefly technology, including what’s produced by Photoshop’s generative fill, «you may not use the output for any commercial purpose,» Adobe’s generative AI beta rules state.

Photoshop’s Firefly AI imperfect but useful

In my testing, I frequently ran into problems, many of them likely stemming from the limited range of the training imagery. When I tried to insert a fish on a bicycle into an image, Firefly only added the bicycle. I couldn’t get Firefly to add a kraken emerging from San Francisco Bay. A musk ox looked like a panda-moose hybrid.

Less fanciful material also presented problems. Text looked like an alien race’s script. Shadows, lighting, perspective and geometry weren’t always right.

People are hard, too. On close inspection, their faces were distorted in weird ways. Humans added into shots could be positioned too high in the frame or otherwise unconvincingly blended in.

Still, Firefly is remarkable for what it can accomplish, particularly with landscape shots. I could add mountains, oceans, skies and hills to landscapes. A white delivery van in a night scene was appropriately yellowish to match the sodium vapor streetlights in the scene. If you don’t like the trio of results Firefly presents, you can click the «generate» button to get another batch.

Given the pace of AI developments, I expect Firefly in Photoshop will improve.

It’s hard and expensive to retrain big AI models, requiring a data center packed with expensive hardware to churn through data, sometimes taking weeks for the largest models. But Adobe plans relatively frequent updates to Firefly. «Expect [about] monthly updates for general improvements and retraining every few months in all likelihood,» Adobe product chief Scott Belsky tweeted Tuesday.

Automating image manipulation

For years, «Photoshop» hasn’t just referred to Adobe’s software. It’s also used as a verb signifying photo manipulations like slimming supermodels’ waists or hiding missile launch failures. AI tools automate not just fun and flights of fancy, but also fake images like an alleged explosion at the Pentagon or a convincingly real photo of the pope in a puffy jacket, to pick two recent examples.

With AI, expect editing techniques far more subtle than the extra smoke easily recognized as digitally added to photos of an Israeli attack on Lebanon in 2006.

It’s a reflection of the double-edged sword that is generative AI. The technology is undeniably useful in many situations but also blurs the line between what is true and what is merely plausible.

For its part, Adobe tries to curtail problems. It doesn’t permit prompts to create images of many political figures and blocks you for «safety issues» if you try to create an image of black smoke in front of the White House. And its AI usage guidelines prohibit imagery involving violence, pornography and «misleading, fraudulent, or deceptive content that could lead to real-world harm,» among other categories. «We disable accounts that engage in behavior that is deceptive or harmful.»

Firefly is also designed to skip over styling prompts that have provoked serious complaints from artists displeased to see their type of art reproduced by a data center. And it supports the Content Authenticity Initiative’s content credentials technology, which can be used to label an image as having been generated by AI.

Today, generative AI imagery made with Adobe’s Firefly website adds content credentials by default along with a visual watermark. When the Photoshop feature exits beta testing and ships later this year, imagery will include content credentials automatically, Adobe said.

People trying to fake images can sidestep that technology. But in the long run, it’ll become part of how we all evaluate images, Adobe believes.

«Content credentials give people who want to be trusted a way to be trusted. This is an open-source technology that lets everyone attach metadata to their images to show that they created an image, when and where it was created, and what changes were made to it along the way,» Adobe said. «Once it becomes the norm that important news comes with content credentials, people will then be skeptical when they see images that don’t.»

Generative AI for photos

Adobe’s Firefly family of generative AI tools began with a website that turns a text prompt like «modern chair made up of old tires» into an image. Adobe has added a couple of other options since, and Creative Cloud subscribers will also be able to try a lightweight version of the Photoshop interface on the Firefly site.

When OpenAI’s Dall-E brought that technology to anyone who signed up for it in 2022, it helped push generative artificial intelligence from a technological curiosity toward mainstream awareness. Now there’s plenty of worry along with the excitement as even AI creators fret about what the technology will bring now and in the more distant future.

Generative AI is a relatively new form of artificial intelligence technology. AI models can be trained to recognize patterns in vast amounts of data – in this case labeled images from Adobe’s stock art business and other licensed sources – and then to create new imagery based on that source data.

Generative AI has surged to mainstream awareness with language models used in tools like OpenAI’s ChatGPT chatbot, Google’s Gmail and Google Docs, and Microsoft’s Bing search engine. When it comes to generating images, Adobe employs an AI image generation technique called diffusion that’s also behind Dall-E, Stable Diffusion, Midjourney and Google’s Imagen.

Adobe calls Firefly for Photoshop a «co-pilot» technology, positioning it as a creative aid, not a replacement for humans. Yap acknowledges that some creators are nervous about being replaced by AI. Adobe prefers to see it as a technology that can amplify and speed up the creative process, spreading creative tools to a broader population.

«I think the democratization we’ve been going through, and having more creativity, is a positive thing for all of us,» Yap said. «This is the future of Photoshop.»

Editors’ note: CNET is using an AI engine to create some personal finance explainers that are edited and fact-checked by our editors. For more, see this post.


Today’s NYT Mini Crossword Answers for Sunday, Nov. 23

Here are the answers for The New York Times Mini Crossword for Nov. 23.

Looking for the most recent Mini Crossword answer? Click here for today’s Mini Crossword hints, as well as our daily answers and hints for The New York Times Wordle, Strands, Connections and Connections: Sports Edition puzzles.

Need some help with today’s Mini Crossword? It includes a Jimi Hendrix reference, which I appreciated. Read on for the answers. And if you could use some hints and guidance for daily solving, check out our Mini Crossword tips.

If you’re looking for today’s Wordle, Connections, Connections: Sports Edition and Strands answers, you can visit CNET’s NYT puzzle hints page.

Read more: Tips and Tricks for Solving The New York Times Mini Crossword

Let’s get to those Mini Crossword clues and answers.

Mini across clues and answers

1A clue: LinkedIn listing

Answer: JOB

4A clue: Planet with an average surface temperature of around 860°F

Answer: VENUS

6A clue: Written with a pen

Answer: ININK

7A clue: Sheer torment

Answer: AGONY

8A clue: «___ thoughts?»

Answer: ANY

Mini down clues and answers

1D clue: Block tower

Answer: JENGA

2D clue: «Red» vegetable that’s really purple, if you ask me

Answer: ONION

3D clue: Word with Bad or Bugs

Answer: BUNNY

4D clue: By way of

Answer: VIA

5D clue: «Excuse me while I kiss the ___» (Hendrix lyric that’s famously misheard)

Answer: SKY


Today’s NYT Connections: Sports Edition Hints and Answers for Nov. 23, #426

Here are hints and the answers for the NYT Connections: Sports Edition puzzle for Nov. 23, No. 426.

Looking for the most recent regular Connections answers? Click here for today’s Connections hints, as well as our daily answers and hints for The New York Times Mini Crossword, Wordle and Strands puzzles.

Today’s Connections: Sports Edition has one easy group. I saw «boot» and «eject» and I figured out the yellow group right away. If you’re struggling with today’s puzzle but still want to solve it, read on for hints and the answers.

Connections: Sports Edition is published by The Athletic, the subscription-based sports journalism site owned by The Times. It doesn’t appear in the NYT Games app, but it does in The Athletic’s own app. Or you can play it for free online.

Read more: NYT Connections: Sports Edition Puzzle Comes Out of Beta

Hints for today’s Connections: Sports Edition groups

Here are four hints for the groupings in today’s Connections: Sports Edition puzzle, ranked from the easiest yellow group to the tough (and sometimes bizarre) purple group.

Yellow group hint: Outta here!

Green group hint: Football stars.

Blue group hint: English events.

Purple group hint: Not black.

Answers for today’s Connections: Sports Edition groups

Yellow group: Throw out of a game.

Green group: NFL all-time leading receivers.

Blue group: Premier League derbies.

Purple group: ____ Brown.

Read more: Wordle Cheat Sheet: Here Are the Most Popular Letters Used in English Words

What are today’s Connections: Sports Edition answers?

The yellow words in today’s Connections

The theme is throw out of a game. The four answers are boot, chase, eject and toss.

The green words in today’s Connections

The theme is NFL all-time leading receivers. The four answers are Fitzgerald, Moss, Owens and Rice.

The blue words in today’s Connections

The theme is Premier League derbies. The four answers are Manchester, Merseyside, North London and Tyne-Wear.

The purple words in today’s Connections

The theme is ____ Brown. The four answers are Cleveland, Jaylen, Mack and Tim.


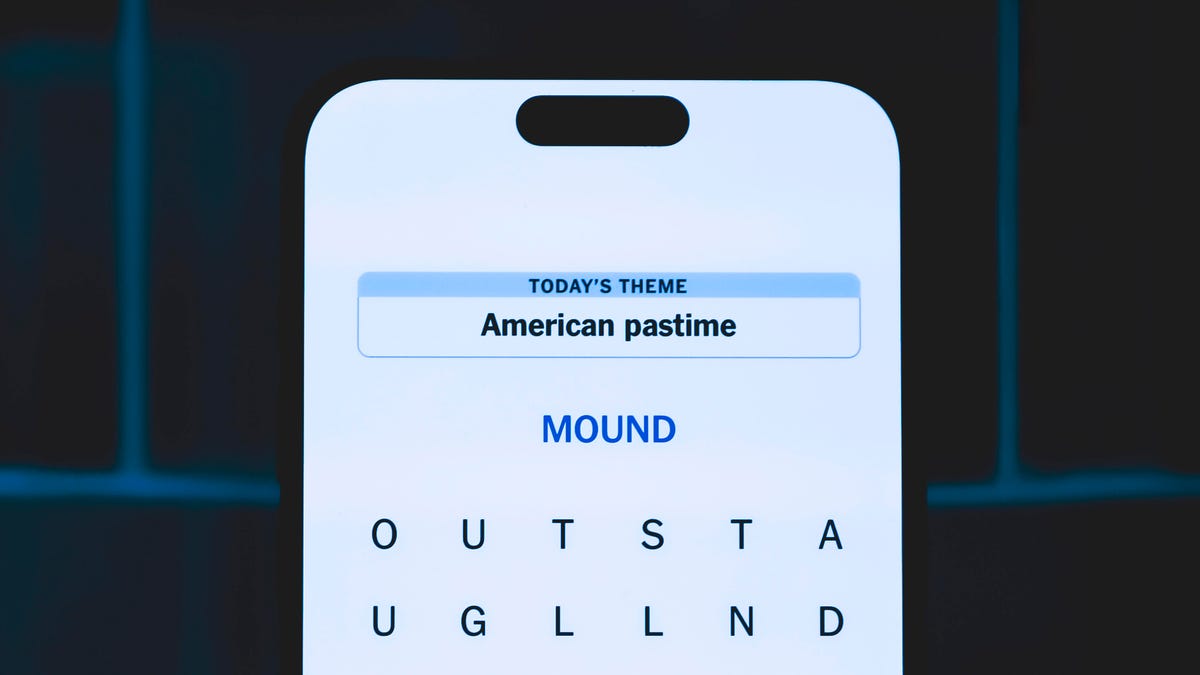

Today’s NYT Strands Hints, Answers and Help for Nov. 23, #630

Today’s Strands puzzle is a delicious one, and it might make you hungry. Here are hints, answers and help for Nov. 23, #630.

Looking for the most recent Strands answer? Click here for our daily Strands hints, as well as our daily answers and hints for The New York Times Mini Crossword, Wordle, Connections and Connections: Sports Edition puzzles.

Today’s NYT Strands puzzle is a delicious one, and it might make you hungry. Some of the answers are difficult to unscramble, so if you need hints and answers, read on.

I go into depth about the rules for Strands in this story.

If you’re looking for today’s Wordle, Connections and Mini Crossword answers, you can visit CNET’s NYT puzzle hints page.

Read more: NYT Connections Turns 1: These Are the 5 Toughest Puzzles So Far

Hint for today’s Strands puzzle

Today’s Strands theme is: Sweet tooth

If that doesn’t help you, here’s a clue: Halloween treats.

Clue words to unlock in-game hints

Your goal is to find hidden words that fit the puzzle’s theme. If you’re stuck, find any words you can. Every time you find three words of four letters or more, Strands will reveal one of the theme words. These are the words I used to get those hints but any words of four or more letters that you find will work:

- STRAND, STRANDS, REDS, REND, SEND, SENDS, TEND, TENDS, RENDS, SANT, RUST

Answers for today’s Strands puzzle

These are the answers that tie into the theme. The goal of the puzzle is to find them all, including the spangram, a theme word that reaches from one side of the puzzle to the other. When you have all of them (I originally thought there were always eight but learned that the number can vary), every letter on the board will be used. Here are the nonspangram answers:

- DOTS, NERDS, RUNTS, STARBURST, WHATCHAMACALLIT

Today’s Strands spangram

Today’s Strands spangram is CANDYAISLE. To find it, start with the C that’s three letters to the right on the bottom row, and wind up.
