Technologies

I Just Tried Photoshop’s New AI Tool. It Makes Photos Creative, Funny or Unreal

Adobe’s Firefly generative AI tool offers a new way to fiddle with photos. Expect a lot of fun and fakery.

Adobe is building generative AI abilities into its flagship image-editing software with a new Photoshop beta release Tuesday. The move promises to release a new torrent of creativity even as it gives us all a new reason to pause and wonder if that sensational, scary or inspirational photo you see on the internet is actually real.

In my tests, detailed below, I found the tool impressive overall but far from perfect. Adding it directly to Photoshop is a big deal, letting creators experiment within the software tool they’re likely already using without excursions to Midjourney, Stability AI’s Stable Diffusion or other outside generative AI tools.

With Adobe’s Firefly family of generative AI technologies arriving in Photoshop, you’ll be able to let the AI fill a selected part of the image with whatever it thinks most fitting – for example, replacing road cracks with smooth pavement. You can also specify the imagery you’d like with a text prompt, such as adding a double yellow line to the road.

Firefly in Photoshop can also expand an image, adding new scenery beyond the frame based on what’s already in the frame or what you suggest with text. Want more sky and mountains in your landscape photo? A bigger crowd at the rock concert? Photoshop will oblige, without today’s difficulties of finding source material and splicing it in.

Photoshop’s Firefly skills can be powerful. In Adobe’s live demo, they were often able to match a photo’s tones, blend in AI-generated imagery seamlessly, infer the geometric details of perspective even in reflections and extrapolate the position of the sun from shadows and sky haze.

Such technologies have been emerging over the last year as Stable Diffusion, Midjourney and OpenAI’s Dall-E captured the imaginations of artists and creative pros. Now it’s built directly into the software they’re most likely to already be using, streamlining what can be a cumbersome editing process.

«It really puts the power and control of generative AI into the hands of the creator,» said Maria Yap, Adobe’s vice president of digital imaging. «You can just really have some fun. You can explore some ideas. You can ideate. You can create without ever necessarily getting into the deep tools of the product, very quickly.»

Now you’d better brace yourself for that future.

Photoshop’s Firefly AI imperfect but useful

In my testing, I frequently ran into problems, many of them likely stemming from the limited range of the training imagery. When I tried to insert a fish on a bicycle into an image, Firefly only added the bicycle. I couldn’t get Firefly to add a kraken emerging from San Francisco Bay. A musk ox looked like a panda-moose hybrid.

Less fanciful material also presented problems. Text looked like an alien race’s script. Shadows, lighting, perspective and geometry weren’t always right.

People are hard, too. On close inspection, their faces were distorted in weird ways. Humans added into shots were positioned too high in the frame or in other unconvincing ways.

Still, Firefly is remarkable for what it can accomplish, particularly with landscape shots. I could add mountains, oceans, skies and hills to landscapes. A white delivery van in a night scene was appropriately yellowish to match the sodium vapor streetlights in the scene. If you don’t like the trio of results Firefly presents, you can click the «generate» button to get another batch.

Given the pace of AI developments, I expect Firefly in Photoshop will improve.

«This is the future of Photoshop,» Yap said.

Automating image manipulation

For years, «Photoshop» hasn’t just referred to Adobe’s software. It’s also used as a verb signifying photo manipulations like slimming supermodels’ waists or hiding missile launch failures. AI tools automate not just fun and flights of fancy, but also fake images like an alleged explosion at the Pentagon or a convincingly real photo of the pope in a puffy jacket, to pick two recent examples.

With AI, expect editing techniques far more subtle than the extra smoke easily recognized as digitally added to photos of an Israeli attack on Lebanon in 2006.

It’s a reflection of the double-edged sword that is generative AI. The technology is undeniably useful in many situations but also blurs the line between what is true and what is merely plausible.

For its part, Adobe tries to curtail problems. It doesn’t permit prompts to create images of many political figures and blocks you for «safety issues» if you try to create an image of black smoke in front of the White House. And its AI usage guidelines prohibit imagery involving violence, pornography and «misleading, fraudulent, or deceptive content that could lead to real-world harm,» among other categories. «We disable accounts that engage in behavior that is deceptive or harmful.»

Firefly also is designed to skip over styling prompts of the kind that have provoked serious complaints from artists displeased to see their type of art reproduced by a data center. And it supports the Content Authenticity Initiative’s content credentials technology that can be used to label an image as having been generated by AI.

Generative AI for photos

Adobe’s Firefly family of generative AI tools began with a website that turns a text prompt like «modern chair made up of old tires» into an image. It’s added a couple of other options since, and Creative Cloud subscribers will also be able to try a lightweight version of the Photoshop interface on the Firefly site.

When OpenAI’s Dall-E brought that technology to anyone who signed up for it in 2022, it helped push generative artificial intelligence from a technological curiosity toward mainstream awareness. Now there’s plenty of worry along with the excitement as even AI creators fret about what the technology will bring now and in the more distant future.

Generative AI is a relatively new form of artificial intelligence technology. AI models can be trained to recognize patterns in vast amounts of data – in this case labeled images from Adobe’s stock art business and other licensed sources – and then to create new imagery based on that source data.

Generative AI has surged to mainstream awareness with language models used in tools like OpenAI’s ChatGPT chatbot, Google’s Gmail and Google Docs, and Microsoft’s Bing search engine. When it comes to generating images, Adobe employs an AI image generation technique called diffusion that’s also behind Dall-E, Stable Diffusion, Midjourney and Google’s Imagen.

Adobe calls Firefly for Photoshop a «co-pilot» technology, positioning it as a creative aid, not a replacement for humans. Yap acknowledges that some creators are nervous about being replaced by AI. Adobe prefers to see it as a technology that can amplify and speed up the creative process, spreading creative tools to a broader population.

«I think the democratization we’ve been going through, and having more creativity, is a positive thing for all of us.»

We Learned How to Share Info About ICE and Police Raids on Apps Like Ring Neighbors

If you’re wondering how to post about ICE on neighborhood apps, here are some tips.

The US Immigration and Customs Enforcement agency has been in the spotlight due to its repression of immigrants and targeting of protesters, not only in Minnesota but across the country. The FBI has also been investigating related Signal chats, and Facebook is taking down posts about ICE. Earlier this month, the Foundation for Individual Rights and Expression accused the Department of Homeland Security of forcing tech companies to censor «protected speech» on social media platforms.

I contacted two social platforms — Nextdoor and Ring Neighbors — to see what they allow and what happens when you see ICE activity from your video doorbell or in person. I learned what sort of posts they allow, what gets taken down and how to talk about nearby raids. Here’s what you should know, too.

Are posts getting banned on apps like Ring Neighbors?

I reached out to Ring about its Neighbors app policies regarding recent events and police raids, as well as Reddit reports about posts being taken down. The company provided information about its policies and explained why Ring tends to remove certain posts or prevent them from going live on Neighbors.

Posts about a general law enforcement presence can get nixed. So if someone said ICE was spotted in «Bell Gardens,» their post would be denied because that’s too vague. Or if a post asked, «Hey, is there any ICE activity in town?» it wouldn’t be allowed. Other posts get banned if they:

- Explicitly obstruct law enforcement

- Voice political opinions

- Assume immigration status or other types of prejudice

- Don’t pertain to local events

What’s a safe way to post about police activity?

Posts that cite an exact location or images showing agents directly connected to an event tend not to be taken down. If someone said, «I saw ICE knocking on doors at the IHOP on Florence and Pico,» that would be allowed under Ring’s guidelines. Other allowed posts provide information on the exact cross streets, addresses, complexes, blocks and so on.

Bans aren’t always immediate. Sometimes posts that violate guidelines are taken down after the fact, through post-publication moderation, flagging or user deletion. Customers can usually appeal moderation decisions to ensure consistency.

When I turned to Nextdoor, another popular neighborhood app used for discussing events, a company spokesperson said something similar: «Our platform fosters discussions of local issues and, as such, our Community Guidelines prohibit broad commentary or personal opinions on national political topics.»

As long as it’s a local issue and users follow the basic community guidelines (be respectful, don’t discriminate and use your true identity), then posts should be fine.

What are the guidelines for posting on Ring Neighbors?

When I visit my own Neighbors app, I see — contrary to some reports — that users frequently post about hearing sirens or police activity in their own neighborhoods, ask about masked strangers or raise questions about law enforcement.

You can still post about security concerns on Ring Neighbors and other apps, even and especially when they involve police activity. You can also post about people you don’t recognize and strangers knocking, which opens the door (not literally) to talking about masked federal ICE agents who aren’t wearing any identifiers.

In other words, it looks like what Ring said mostly tracks. Explicit information citing current, local events, preferably with address data, is allowed.

«Focus on the behavior that raised your suspicion,» Nextdoor recommends. «Describe the potentially criminal or dangerous activity you observed or experienced — what the person was doing, what they said (if they spoke to you). Include the direction they were last headed.» If you post with an eye toward your neighborhood’s safety, your post is less likely to be removed.

Finally, avoid posts that include gruesome content or violate someone’s privacy, as these are also red flags likely to lead to a block.

Is Ring currently sharing information with ICE?

You may also be concerned that Ring is sharing your security videos with ICE or the surveillance company Flock Safety. In early 2026, Ring canceled its pending contract with Flock and has not announced any direct arrangements with law enforcement services.

Ring’s published guidelines say the company doesn’t share information with the police or federal agencies without a binding request, such as a search warrant, subpoena or court order. However, since Ring’s plans have changed abruptly over the past several months, they could shift again in the future. CNET will continue to report on further developments.

Can users coordinate on apps like Ring Neighbors?

This is a gray area, and it’s hard to know whether discussions will be removed. In my experience on the Neighbors app, many discussions about sirens and unexplained police presence were left up, allowing people to share their own perspectives and what they heard on police scanners.

It’s possible that the more these posts mention ICE or federal enforcement, the more likely they’ll be removed, and if conversations move into discussions about national issues or general legal advice, they may be taken down. But many people have reported successfully using apps like Neighbors to discuss nearby law enforcement raids, so I don’t see any evidence of a blanket ban.

Groups using the Neighbors app to communicate important information or provide help should also be aware of the Neighbors Verified tag, which is available to public safety agencies and community organizations. This tag makes it easier for Neighbors users to trust information and announcements from specific accounts. Verified accounts don’t have access to any additional user information.

Can agents cover up my security cam or doorbell?

In the past, published footage and news reports have shown federal agents covering up a video doorbell during an ICE raid. While it’s not common, civil rights attorneys have said actions like these are illegal. This issue connects to a larger fight over filming ICE in general, something the Department of Homeland Security has said is illegal but that US courts have said is protected under the First Amendment.

Devices on your own property should be fine if ICE follows current law, but it’s always a good idea to immediately save any pertinent video footage, preferably on more than one device.

What are my rights if I’m worried about ICE raids?

Whether you’re concerned about federal immigration raids, curious about what law enforcement is doing or just want clarification about your rights, it’s a good idea to consult the American Civil Liberties Union and the National Immigrant Justice Center. Here is some advice they give.

- Don’t escalate: In cases where federal agents or people appearing to be agents have knocked on doors, people have done nothing and simply waited for agents to leave. Remember, without a warrant, they usually can’t enter a house, and if you have a video doorbell, it can still record everything that happens. Avoid confrontation when possible, and don’t give law enforcement anything to act on. Remember, everyone still has the right to remain silent.

- If you feel your safety is endangered, call 911 or seek help from a nonprofit: Calling 911 is very helpful if you feel unsafe because of nearby events. You can explain the situation and have a record of the call. 911 is an emergency response service and isn’t in the business of reporting to federal agents. There are also local immigrant rights agencies you can contact to report ICE, and groups like the ACLU can usually point you in the right direction.

For more information, take a look at the latest news on what Ring is letting the police see (it’s good news for privacy fans), the legal ramifications of recording video or audio in your home, and what you and landlords can legally do with security cameras.

This 160-Watt Anker Charger Just Dropped to $106, but Probably Not for Long

The Anker Prime charger can power three devices simultaneously, including laptops.

You’ll never run out of charging ports again with this Anker 160-watt, three-port charger, especially while it’s down to just $106. It’s currently at its lowest price of the year, but we can’t promise that it’ll stay that way. That’s why we recommend acting fast if you want to snag this bargain charger.

This Anker charger would normally set you back around $150, so you’ll save $44 with this deal. You won’t even have to enter any discount codes or clip any coupons to do it.
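As a quick check on the numbers above, here is a minimal sketch of the discount arithmetic. The function and variable names are illustrative assumptions, and the $150 list price is the article's approximate figure rather than an exact one.

```python
# Figures quoted in the article; all names here are illustrative.
LIST_PRICE = 150   # approximate regular price, in US dollars
SALE_PRICE = 106   # current deal price, in US dollars


def savings(list_price: float, sale_price: float) -> tuple[float, float]:
    """Return (dollars saved, percent off the list price)."""
    dollars_off = list_price - sale_price
    percent_off = dollars_off / list_price * 100
    return dollars_off, percent_off


dollars_off, percent_off = savings(LIST_PRICE, SALE_PRICE)
print(f"Save ${dollars_off} ({percent_off:.0f}% off)")
```

With the quoted prices, that works out to $44 off, or roughly 29% below the usual price.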

The charger has three ports pumping out a total of 160 watts of power — and a single port can charge at 140 watts. That’s enough for the M5 14-inch MacBook Pro and even its larger 16-inch relative, too.

There’s a handy display that shows you information on how the charger is performing, and the pins can be folded away to make the charger perfect for traveling. In fact, Anker’s charger is around the same size as a pair of AirPods Pro 3 earbuds, so it’s highly portable.

Why this deal matters

You can never have too many chargers, and this one does the job of three. It isn’t cheap, to be sure, but with plenty of power on tap and a design that makes it great for taking on the road, it’s still a great buy with this discount.

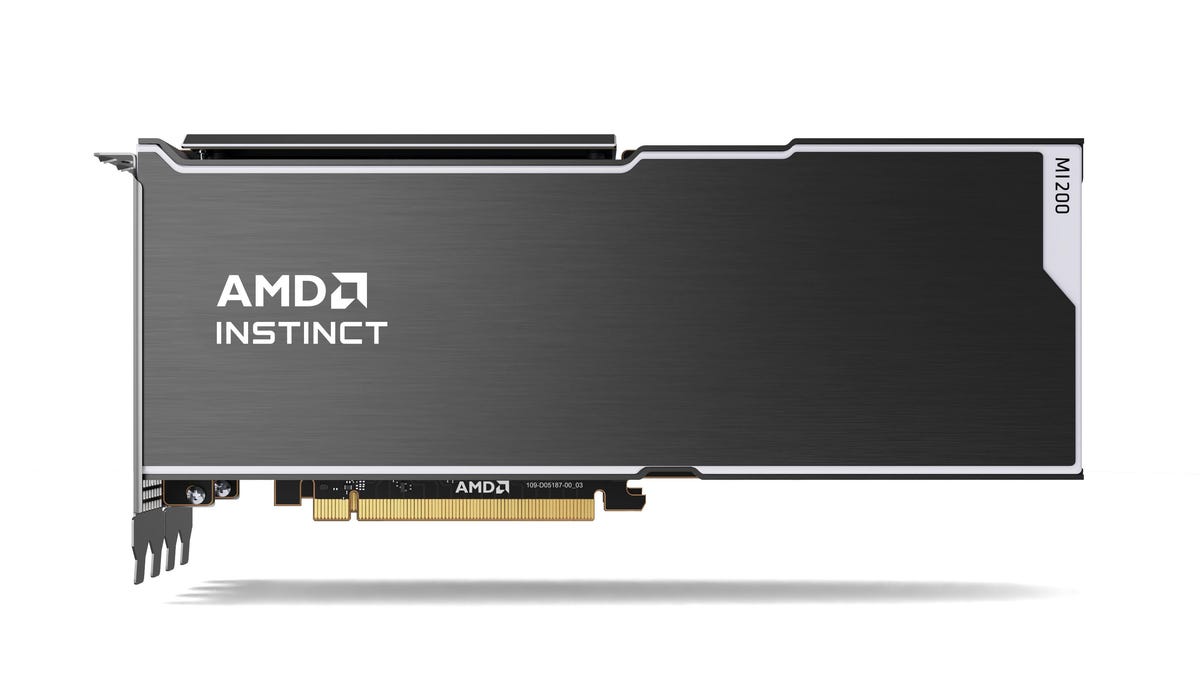

Meta and AMD’s Multibillion-Dollar Deal Is All About the AI Chips

Meta will take a stake in the chipmaker in exchange for a commitment to buy billions of dollars’ worth of AI chips.

Meta is joining OpenAI as one of the major tech companies to take a stake in chipmaker AMD, as part of an AI hardware buying frenzy. Meta and AMD on Tuesday announced a partnership that will involve CEO Mark Zuckerberg’s tech giant buying billions of dollars’ worth of AMD Instinct GPUs in order to fuel its ambitions to build out AI offerings across Meta platforms, including Instagram, Facebook and WhatsApp.

In a release, Meta described the deal as «multi-year,» and said the AI purchase will provide Meta with up to 6 gigawatts of AMD GPUs, «the silicon computing technology used to support modern AI models.»

According to the US Department of Energy, a single gigawatt (1 billion watts) is equivalent to nearly 2 million large solar panels or 100 million LED bulbs.
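To put that scale in perspective, the short sketch below works through the LED-bulb comparison and scales it to the 6 gigawatts mentioned in the deal. The helper names are hypothetical, and the only inputs are the figures quoted above.

```python
WATTS_PER_GIGAWATT = 1_000_000_000   # 1 billion watts, as stated in the article
LED_BULBS_PER_GIGAWATT = 100_000_000  # comparison figure quoted in the article


def watts_per_unit(units_per_gigawatt: int) -> float:
    """Power implied for one unit when that many units add up to 1 gigawatt."""
    return WATTS_PER_GIGAWATT / units_per_gigawatt


def units_for(gigawatts: int, units_per_gigawatt: int) -> int:
    """How many units correspond to the given number of gigawatts."""
    return gigawatts * units_per_gigawatt


print(watts_per_unit(LED_BULBS_PER_GIGAWATT))   # watts implied per LED bulb
print(units_for(6, LED_BULBS_PER_GIGAWATT))     # bulbs equivalent to 6 gigawatts
```

Under these figures, each bulb is assumed to draw about 10 watts, and 6 gigawatts corresponds to roughly 600 million such bulbs.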

In AMD’s version of the announcement, CEO Lisa Su said, «We are proud to expand our strategic partnership with Meta as they push the boundaries of AI at unprecedented scale.» As part of the deal, Meta will take a 10% stake in AMD.

AMD, based in Santa Clara, California, previously signed a similar deal with ChatGPT-maker OpenAI, announced last October, that also gives Meta’s AI rival 10% ownership of AMD.

(Disclosure: Ziff Davis, CNET’s parent company, in 2025 filed a lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.)

What does this mean for the rest of us?

AMD’s two megadeals may not have an immediate impact on people who use Meta’s social networking and communications apps, or even on those who buy AMD’s products, including desktop processors and graphics cards.

But it signals that large companies making huge bets on the future of AI are doing what they can to secure the hardware they need as supplies tighten and prices rise for components such as RAM. Some of those constraints aren’t expected to end anytime soon, and shoppers could begin to see prices rise even more than they already have for computers, smartphones, vehicles and other products that heavily rely on computing components like these.

It is also a sign that Meta’s ambitions for AI are not slowing down as it continues to compete with companies including OpenAI, Microsoft and Google to develop AI products and tools.

Also a factor: Meta’s push into wearables

Another reason Meta may want access to AI chips goes beyond its own data centers and online platforms: The company has increasingly been focused on wearables such as its Oakley Meta AI Glasses and other potential new portable products.

In addition to what AMD’s GPUs can offer Meta for AI infrastructure power, AMD may also be part of its wearable future.

«With AI models requiring unprecedented processing power to process real-time data and information, Meta is focused on securing the supply chain necessary for its wearable devices,» said Michael J. Wolf, founder and CEO of the consulting firm Activate.

Wolf believes that the deals Meta and OpenAI have signed won’t be the last time a major AI-focused company locks down a supply of semiconductors.

«As consumer hardware transitions from smartphones to smart glasses, we will absolutely see more of these mega-deals,» Wolf said.
