Technologies

iOS 16.4 Beta 3: New Features Public Beta Testers Can Try Now

Public beta testers can try new emoji, changes to Apple Podcasts, and more.

Apple released iOS 16.4 beta 3 to public beta testers Wednesday, about a week after the company released the second iOS 16.4 public beta. A third beta suggests the general release of iOS 16.4 is probably close at hand. Beta testers can now try out new iOS features, like new emoji and updates to Apple Books.

These features are available only to people who are a part of Apple’s Beta Software Program. New iOS features can be fun, but we recommend downloading a beta only onto something other than your primary phone, just in case the new software causes issues. Apple provides beta testers with an app called Feedback. The app lets testers notify Apple of any issues in the new software so the problem can be addressed before general release.

Here are some of the new features testers can find in the iOS 16.4 betas.

Apple ID and beta software updates

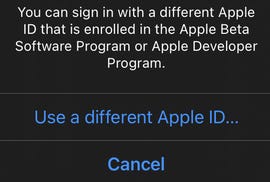

The latest beta lets you sign into another Apple ID to access other beta software.

Zach McAuliffe/CNET

With the third iOS 16.4 beta, developers and beta testers can check whether their Apple ID is associated with the developer beta, public beta or both. If you have a different Apple ID, like one for your job, that has access to beta updates, iOS 16.4 beta 3 also lets you switch to that account from your device.

Apple Books updates

The iOS 16.4 beta 2 update brings the page-turn curl animation back to Apple Books, after it was removed in a previous iOS update. Before, when you turned a page in an e-book on your iPhone, the page would slide to one side of your screen or it would vanish and be replaced by the next page. Beta testers can still choose these other page-turn animations in addition to the curl animation.

With iOS 16.4 beta 3, a new popup appears when you open Apple Books for the first time after downloading the update. It lets you know you can change your page-turn animation, theme and more.

31 new emoji

The first iOS 16.4 beta software brought 31 new emoji to your iOS device. The new emoji include a new smiley; new animals, like a moose and a goose; and new heart colors, like pink and light blue.

Some of the new emoji released in the first iOS 16.4 beta.

Patrick Holland/CNET

The new emoji all come from Unicode’s September 2022 recommendation list, Emoji 15.0.

Apple Podcasts updates

The first beta brought a few changes to how you navigate Apple Podcasts. Now you can access podcast channels you subscribe to in your Library. You can also use Up Next to resume podcast episodes you’ve started, start episodes you’ve saved and remove episodes you want to skip.

Preview Mastodon links in Messages

Apple’s first iOS 16.4 beta enabled rich previews of Mastodon links in Messages. That’s handy, since Mastodon saw a 400% increase in the rate of new accounts in December, so you might be receiving Mastodon links in Messages.

Music app changes

In the first iOS 16.4 beta, a small banner appears at the bottom of the screen when you choose to play a song next in Apple Music.

Zach McAuliffe/CNET

The Music interface has been slightly modified in the first iOS 16.4 beta. When you add a song to your queue, a small banner appears near the bottom of your screen instead of a full-screen pop-up.

See who and what is covered under AppleCare

Starting with iOS 16.4 beta 1, you can go to Settings to check which people and devices are covered by your AppleCare plan. With iOS 16.4 beta 2, this menu also shows a small icon next to each device that’s covered under AppleCare.

Focus Mode, Shortcuts and always-on display

If you have an iPhone 14 Pro or Pro Max, iOS 16.4 beta 1 lets you turn the always-on display on or off with certain Focus Modes. Shortcuts also gained a new Set Always on Display option, along with new Lock Screen and Set VPN actions.

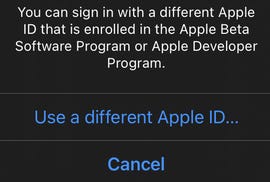

New Apple Wallet widgets

You can add three new order-tracking widgets for Apple Wallet to your home screen with the first iOS 16.4 beta. Each widget displays your tracking information on active orders, but the widgets are different sizes: small, medium and large.

The medium-size Apple Wallet order tracking widget takes up three tile spaces on your iPhone’s screen.

Zach McAuliffe/CNET

More accessibility options

The first beta update added a new accessibility option, too. The new option is called Dim Flashing Lights, and it can be found in the Motion menu in Settings. The option’s description says video content that depicts repeated flashing or strobing lights will automatically be dimmed. Video timelines will also show when flashing lights will occur.

New keyboards, Siri voices and language updates

The first iOS 16.4 beta added keyboards for the Choctaw and Chickasaw languages, and there are new Siri voices for Arabic and Hebrew. Language updates have also come to Korean, Ukrainian, Gujarati, Punjabi and Urdu.

There’s no word on when iOS 16.4 will be released to the general public. There’s no guarantee these beta features will be released with iOS 16.4, or that these will be the only features released with the update.

For more, check out how to become an Apple beta tester, what was included in iOS 16.3.1 and features you may have missed in iOS 16.3.

Today’s NYT Mini Crossword Answers for Saturday, Feb. 21

Here are the answers for The New York Times Mini Crossword for Feb. 21.

Looking for the most recent Mini Crossword answer? Click here for today’s Mini Crossword hints, as well as our daily answers and hints for The New York Times Wordle, Strands, Connections and Connections: Sports Edition puzzles.

Need some help with today’s Mini Crossword? It’s the long Saturday version, and some of the clues are stumpers. I was really thrown by 10-Across. Read on for all the answers. And if you could use some hints and guidance for daily solving, check out our Mini Crossword tips.

If you’re looking for today’s Wordle, Connections, Connections: Sports Edition and Strands answers, you can visit CNET’s NYT puzzle hints page.

Read more: Tips and Tricks for Solving The New York Times Mini Crossword

Let’s get to those Mini Crossword clues and answers.

Mini across clues and answers

1A clue: “Jersey Shore” channel

Answer: MTV

4A clue: “___ Knows” (rhyming ad slogan)

Answer: LOWES

6A clue: Second-best-selling female musician of all time, behind Taylor Swift

Answer: MADONNA

8A clue: Whiskey grain

Answer: RYE

9A clue: Dreaded workday: Abbr.

Answer: MON

10A clue: Backfiring blunder, in modern lingo

Answer: SELFOWN

12A clue: Lengthy sheet for a complicated board game, perhaps

Answer: RULES

13A clue: Subtle “Yes”

Answer: NOD

Mini down clues and answers

1D clue: In which high schoolers might role-play as ambassadors

Answer: MODELUN

2D clue: This clue number

Answer: TWO

3D clue: Paid via app, perhaps

Answer: VENMOED

4D clue: Coat of paint

Answer: LAYER

5D clue: Falls in winter, say

Answer: SNOWS

6D clue: Married title

Answer: MRS

7D clue: ___ Arbor, Mich.

Answer: ANN

11D clue: Woman in Progressive ads

Answer: FLO

Today’s NYT Connections: Sports Edition Hints and Answers for Feb. 21, #516

Here are hints and the answers for the NYT Connections: Sports Edition puzzle for Feb. 21, No. 516.

Looking for the most recent regular Connections answers? Click here for today’s Connections hints, as well as our daily answers and hints for The New York Times Mini Crossword, Wordle and Strands puzzles.

Today’s Connections: Sports Edition is a tough one. I actually thought the purple category, usually the most difficult, was the easiest of the four. If you’re struggling with today’s puzzle but still want to solve it, read on for hints and the answers.

Connections: Sports Edition is published by The Athletic, the subscription-based sports journalism site owned by The Times. It doesn’t appear in the NYT Games app, but it does in The Athletic’s own app. Or you can play it for free online.

Read more: NYT Connections: Sports Edition Puzzle Comes Out of Beta

Hints for today’s Connections: Sports Edition groups

Here are four hints for the groupings in today’s Connections: Sports Edition puzzle, ranked from the easiest yellow group to the tough (and sometimes bizarre) purple group.

Yellow group hint: Old Line State.

Green group hint: Hoops legend.

Blue group hint: Robert Redford movie.

Purple group hint: Vroom-vroom.

Answers for today’s Connections: Sports Edition groups

Yellow group: Maryland teams.

Green group: Shaquille O’Neal nicknames.

Blue group: Associated with “The Natural.”

Purple group: Sports that have a driver.

What are today’s Connections: Sports Edition answers?

The yellow words in today’s Connections

The theme is Maryland teams. The four answers are Midshipmen, Orioles, Ravens and Terrapins.

The green words in today’s Connections

The theme is Shaquille O’Neal nicknames. The four answers are Big Aristotle, Diesel, Shaq and Superman.

The blue words in today’s Connections

The theme is associated with “The Natural.” The four answers are baseball, Hobbs, Knights and Wonderboy.

The purple words in today’s Connections

The theme is sports that have a driver. The four answers are bobsled, F1, golf and water polo.

Wisconsin Reverses Decision to Ban VPNs in Age-Verification Bill

The bill would have required websites to block VPN users from accessing “harmful material.”

Following a wave of criticism, Wisconsin lawmakers have decided not to include a ban on VPN services in their age-verification bill, which is making its way through the state legislature.

Wisconsin Senate Bill 130 (and its sister Assembly Bill 105), introduced in March 2025, aims to prohibit businesses from “publishing or distributing material harmful to minors” unless there is a reasonable “method to verify the age of individuals attempting to access the website.”

One provision would have required businesses to bar people from accessing their sites via “a virtual private network system or virtual private network provider.”

A VPN lets you access the internet via an encrypted connection, enabling you to bypass firewalls and unblock geographically restricted websites and streaming content. While using a VPN, your IP address and physical location are masked, and your internet service provider doesn’t know which websites you visit.

Wisconsin state Sen. Van Wanggaard moved to delete that provision in the legislation, thereby releasing VPNs from any liability. The state assembly agreed to remove the VPN ban, and the bill now awaits Wisconsin Governor Tony Evers’s signature.

Rindala Alajaji, associate director of state affairs at the digital freedom nonprofit Electronic Frontier Foundation, says Wisconsin’s U-turn is “great news.”

“This shows the power of public advocacy and pushback,” Alajaji says. “Politicians heard the VPN users who shared their worries and fears, and the experts who explained how the ban wouldn’t work.”

Earlier this week, the EFF had written an open letter arguing that the draft laws did not “meaningfully advance the goal of keeping young people safe online.” The EFF said that blocking VPNs would harm many groups that rely on that software for private and secure internet connections, including “businesses, universities, journalists and ordinary citizens,” and that “many law enforcement professionals, veterans and small business owners rely on VPNs to safely use the internet.”

VPNs can also help you get around age-verification laws — for instance, if you live in a state or country that requires age verification to access certain material, you can use a VPN to make it look like you live elsewhere, thereby gaining access to that material. As age-restriction laws increase around the US, VPN use has also increased. However, many people are using free VPNs, which are fertile ground for cybercriminals.

In its letter to Wisconsin lawmakers prior to the reversal, the EFF argued that it is “unworkable” to require websites to block VPN users from accessing adult content. The EFF said such sites cannot “reliably determine” where a VPN customer lives: it could be any US state or even another country.

“As a result, covered websites would face an impossible choice: either block all VPN users everywhere, disrupting access for millions of people nationwide, or cease offering services in Wisconsin altogether,” the EFF wrote.

Wisconsin is not the only state to consider VPN bans to prevent access to adult material. Last year, Michigan introduced the Anticorruption of Public Morals Act, which would ban all use of VPNs. If passed, it would force ISPs to detect and block VPN usage and also ban the sale of VPNs in the state. Fines could reach $500,000.
