Technologies

FTC to AI Companies: Tell Us How You Protect Teens and Kids Who Use AI Companions

As more teens turn to AI for companionship, the investigation comes as no surprise.

The Federal Trade Commission is launching an investigation into AI chatbots from seven companies, including Alphabet, Meta and OpenAI, over their use as companions. The inquiry involves finding how the companies test, monitor and measure the potential harm to children and teens.

A Common Sense Media survey of 1,060 teens in April and May found that over 70% used AI companions and that more than 50% used them consistently — a few times or more per month.

Experts have been warning for some time that exposure to chatbots could be harmful to young people. A study revealed that ChatGPT, for instance, provided bad advice to teenagers, like how to conceal an eating disorder or how to personalize a suicide note. In some cases, chatbots have ignored comments that should have been recognized as concerning, instead simply continuing the previous conversation. Psychologists are calling for guardrails to protect young people, like reminders in the chat that the chatbot is not human, and for educators to prioritize AI literacy in schools.

There are plenty of adults, too, who’ve experienced negative consequences of relying on chatbots, whether for companionship and advice or as their personal search engine for facts and trusted sources. Chatbots more often than not tell you what they think you want to hear, which can lead to flat-out lies. And blindly following the instructions of a chatbot isn’t always the right thing to do.

“As AI technologies evolve, it is important to consider the effects chatbots can have on children,” FTC Chairman Andrew N. Ferguson said in a statement. “The study we’re launching today will help us better understand how AI firms are developing their products and the steps they are taking to protect children.”

A Character.ai spokesperson told CNET every conversation on the service has prominent disclaimers that all chats should be treated as fiction.

“In the past year we’ve rolled out many substantive safety features, including an entirely new under-18 experience and a Parental Insights feature,” the spokesperson said.

The company behind the Snapchat social network likewise said it has taken steps to reduce risks. “Since introducing My AI, Snap has harnessed its rigorous safety and privacy processes to create a product that is not only beneficial for our community, but is also transparent and clear about its capabilities and limitations,” a Snap spokesperson said.

Meta declined to comment, and neither the FTC nor any of the remaining four companies immediately responded to our request for comment. The FTC has issued orders and is seeking a teleconference with the seven companies about the timing and format of their submissions no later than Sept. 25. The companies under investigation include the makers of some of the biggest AI chatbots in the world or popular social networks that incorporate generative AI:

- Alphabet (parent company of Google)

- Character Technologies

- Instagram

- Meta Platforms

- OpenAI

- Snap

- X.ai

Starting late last year, some of those companies have updated or bolstered their protection features for younger users. Character.ai began imposing limits on how chatbots can respond to people under the age of 17 and added parental controls. Instagram introduced teen accounts last year and switched all users under the age of 17 to them, and Meta recently set limits on the subjects teens can discuss with chatbots.

The FTC is seeking information from the seven companies on how they:

- monetize user engagement

- process user inputs and generate outputs in response to user inquiries

- develop and approve characters

- measure, test, and monitor for negative impacts before and after deployment

- mitigate negative impacts, particularly to children

- employ disclosures, advertising and other representations to inform users and parents about features, capabilities, the intended audience, potential negative impacts and data collection and handling practices

- monitor and enforce compliance with company rules and terms of service (for example, community guidelines and age restrictions), and

- use or share personal information obtained through users’ conversations with the chatbots

Today’s NYT Mini Crossword Answers for Tuesday, Oct. 14

Here are the answers for The New York Times Mini Crossword for Oct. 14.

Looking for the most recent Mini Crossword answer? Click here for today’s Mini Crossword hints, as well as our daily answers and hints for The New York Times Wordle, Strands, Connections and Connections: Sports Edition puzzles.

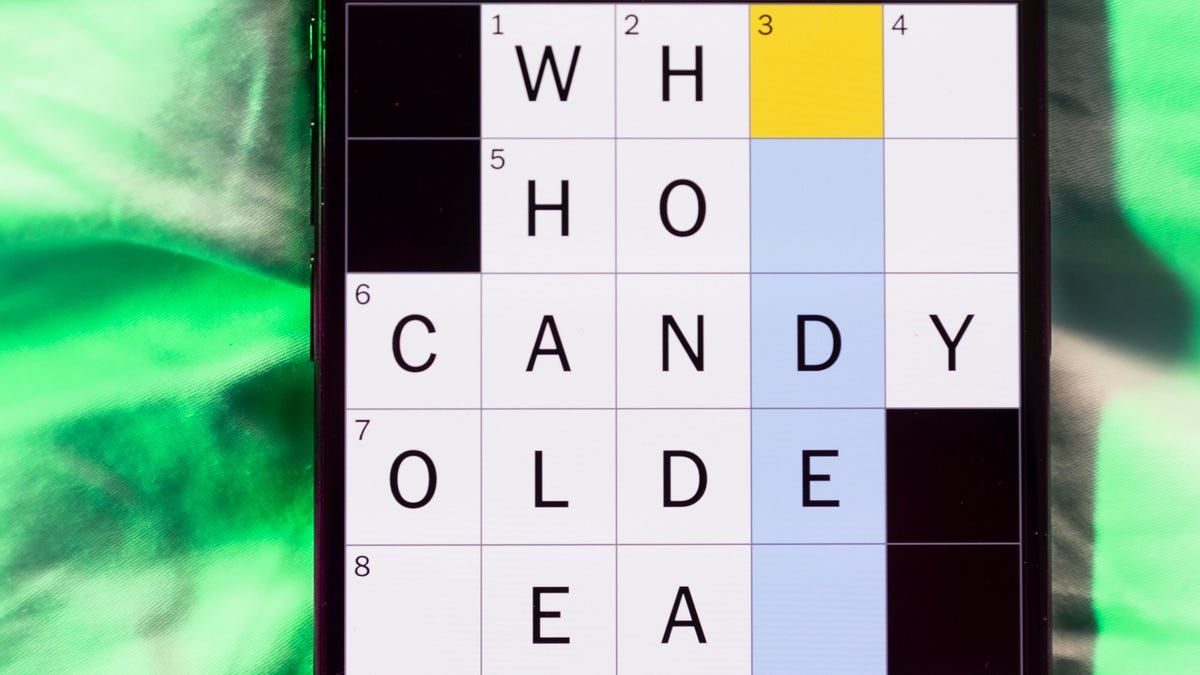

Today’s Mini Crossword has an odd vertical shape, with an extra Across clue, and only four Down clues. The clues are not terribly difficult, but one or two could be tricky. Read on if you need the answers. And if you could use some hints and guidance for daily solving, check out our Mini Crossword tips.

If you’re looking for today’s Wordle, Connections, Connections: Sports Edition and Strands answers, you can visit CNET’s NYT puzzle hints page.

Read more: Tips and Tricks for Solving The New York Times Mini Crossword

Let’s get to those Mini Crossword clues and answers.

Mini across clues and answers

1A clue: Smokes, informally

Answer: CIGS

5A clue: “Don’t have ___, man!” (Bart Simpson catchphrase)

Answer: ACOW

6A clue: What the vehicle in “lane one” of this crossword is winning?

Answer: RACE

7A clue: Pitt of Hollywood

Answer: BRAD

8A clue: “Yeah, whatever”

Answer: SURE

9A clue: Rd. crossers

Answer: STS

Mini down clues and answers

1D clue: Things to “load” before a marathon

Answer: CARBS

2D clue: Mythical figure who inspired the idiom “fly too close to the sun”

Answer: ICARUS

3D clue: Zoomer around a small track

Answer: GOCART

4D clue: Neighbors of Norwegians

Answer: SWEDES

Watch SpaceX’s Starship Flight Test 11

New California Law Wants Companion Chatbots to Tell Kids to Take Breaks

Gov. Gavin Newsom signed the new requirements on AI companions into law on Monday.

AI companion chatbots will have to remind users in California that they’re not human under a new law signed Monday by Gov. Gavin Newsom.

The law, SB 243, also requires companion chatbot companies to maintain protocols for identifying and addressing cases in which users express suicidal ideation or self-harm. For users under 18, chatbots will have to provide a notification at least every three hours reminding them to take a break and that the bot is not human.

It’s one of several bills Newsom has signed in recent weeks dealing with social media, artificial intelligence and other consumer technology issues. Another bill signed Monday, AB 56, requires warning labels on social media platforms, similar to those required for tobacco products. Last week, Newsom signed measures that require internet browsers to make it easy for people to tell websites not to sell their data and that ban loud advertisements on streaming platforms.

AI companion chatbots have drawn particular scrutiny from lawmakers and regulators in recent months. The Federal Trade Commission launched an investigation into several companies in response to complaints by consumer groups and parents that the bots were harming children’s mental health. OpenAI introduced new parental controls and other guardrails in its popular ChatGPT platform after the company was sued by parents who allege ChatGPT contributed to their teen son’s suicide.

“We’ve seen some truly horrific and tragic examples of young people harmed by unregulated tech, and we won’t stand by while companies continue without necessary limits and accountability,” Newsom said in a statement.

One AI companion developer, Replika, told CNET that it already has protocols to detect self-harm as required by the new law, and that it is working with regulators and others to comply with requirements and protect consumers.

“As one of the pioneers in AI companionship, we recognize our profound responsibility to lead on safety,” Replika’s Minju Song said in an emailed statement. Song said Replika uses content-filtering systems, community guidelines and safety systems that refer users to crisis resources when needed.

Read more: Using AI as a Therapist? Why Professionals Say You Should Think Again

A Character.ai spokesperson said the company “welcomes working with regulators and lawmakers as they develop regulations and legislation for this emerging space, and will comply with laws, including SB 243.” OpenAI spokesperson Jamie Radice called the bill a “meaningful move forward” for AI safety. “By setting clear guardrails, California is helping shape a more responsible approach to AI development and deployment across the country,” Radice said in an email.

One bill Newsom has yet to sign, AB 1064, would go further by prohibiting developers from making companion chatbots available to children unless the AI companion is “not foreseeably capable of” encouraging harmful activities or engaging in sexually explicit interactions, among other things.
