Technologies

Pixel 10 Pro XL vs. Galaxy S25 Ultra: Which Android Camera Wins?

Can Google’s latest flagship unseat Samsung’s premier smartphone? I took hundreds of photos to find out.

A top-tier smartphone camera needs to perform, but also make it look like it’s not trying very hard. We expect a tap of the shutter button to create a great image in any circumstance, regardless of whether the person making the image knows anything about photography.

Many phones include decent cameras, but a small number strive to be the best smartphone cameras you can pocket. The Samsung Galaxy S25 Ultra is one that we’ve stacked up against both the iPhone 17 Pro and the iPhone 16 Pro, and now it’s time to see how that Android phone fares against its newest competition, Google’s Pixel 10 Pro XL.

I took both phones to Seattle and nearby Mukilteo, Washington, to compare how each performed. Over hundreds of photos, I kept the camera settings as close to the defaults as possible, occasionally switching between the 12-megapixel shooting modes and the high-res 50-megapixel modes where available.

Because we’re talking about photography, my personal preferences as to which are the “best” photos might not be the ones you choose, and that’s fine. With either camera, you’re going to get good photos. But if you’re in the market for a new phone and pondering which high-end camera system is for you, or you want to check out the current state of the art for Android cameras, follow along.

And for even more Pixel 10 Pro XL photos, be sure to check out the first-look photo walk through Paris by CNET’s Andrew Lanxon.


Pixel 10 Pro XL vs. Galaxy S25 Ultra: Overall performance

I wandered around Pike Place Market, a haven for local shopkeepers and scores of late-summer tourists, where snapping smartphone pictures is part of the fabric of the experience. This nook — a bend in a stairway — is one of my favorite spots at the market in the morning when light comes through the window. Both cameras have done a good job balancing the exposure between the bright day outside the window and the mixture of bright sunlight and shadowy corners on the inside. Of the two, I prefer the Pixel 10 Pro XL because it’s a bit warmer.

Seattle is known more for its clouds than its sunny days, so when the sky is blue, the bright light can feel harsh. Here, the S25 Ultra photo pops more by lightening the shadow areas of the car, but almost too much. The Pixel 10 Pro XL image looks more natural, even though the car is darker.

Just down the street, the comparison swings the other way. The Pixel 10 Pro XL brings out all the vibrant colors of the flowers, the orange awnings and the bright red umbrellas. The S25 Ultra’s shot is more muted; I couldn’t tell whether sunlight hitting the lens from the side caused that washed-out appearance. Both cameras still did a fine job of keeping details in the shadows, though.

Pixel 10 Pro XL vs. Galaxy S25 Ultra: Zoom quality

To be honest, zooming much past 10x on a phone has always seemed like a futile gesture to me. Pushing past the optical range of the telephoto camera (5x on both phones) puts you into digital zoom territory, where the camera crops into a small portion of the sensor and upscales it to fill the frame. Although digital upscaling has improved in recent years, past 20x or so, photos tend to become a mess of fuzzy enlarged pixels, so it’s rarely worth it.

Google decided to take a different approach to extreme zooming on the Pixel 10 Pro and Pro XL. Up to 30x zoom, it uses Google’s Super Res Zoom technology to upscale and sharpen the results, which generally turn out well.

In the extreme range from 30x to 100x, though, the Pixel 10 Pro uses generative AI, in a feature Google calls Pro Res Zoom, to rebuild the image based on the original capture. The processing takes a few seconds and happens entirely on-device, without help from cloud resources. The results can be impressive, particularly for static subjects like buildings or landscapes. But under any scrutiny, it’s almost always obvious that the photo has been treated with AI: it has a flat, angular look, and it can’t handle most text in a photo at all. That said, I’m looking closely here. These images won’t hold up in print or on a large screen, but they come across perfectly fine on a phone screen.

The Pixel 10 Pro keeps both versions of the image: the original capture and the AI-generated one.

Google says that if the camera detects people in a Pro Res Zoom image, it won’t apply generative AI to them, since it could easily create a person who looks nothing like the actual subject. When that happens, you can tell: In this shot, the sailboat has been rendered (complete with a nonsensical guess at the lettering on the sail), but the people on board have only been sharpened and remain fuzzy.

The Galaxy S25 Ultra’s shots at 100x are also a hot mess, though not as bad as I expected. They’re heavily processed to compensate for the upscaling, but… not terrible? I feel like I’m giving the S25 Ultra a “good job, buddy!” for showing up and not face-planting. The photos are objectively not great; they’re just better than I anticipated.

Pixel 10 Pro XL vs. Galaxy S25 Ultra: Low-light situations

Pike Place Market is a maze of levels and long, shop-lined corridors and alleys that don’t get a lot of direct light. The notorious Gum Wall (yes, an alleyway where people stick used gum on the brick walls) is dark at one end and brighter at the other, depending on the sun’s position in the sky. Neither phone switched into its night mode, and both made acceptable shots amid a lot of color and texture. Here again, I give the edge to the Pixel 10 Pro XL for its warmth and brighter overall tone. However, in both shots, the details on the wall suffer: note the pixelated “Extra” wrapper at top left. My apologies if you’ve just lost your appetite; at least photos don’t capture the specific aroma of an alley filled with thousands of fruity gum globs.

Speaking of colors and textures, this barbershop in a muted hallway lit by what look to be fluorescent ceiling bulbs and a prominent ring light is another example of each camera taking a mixed-light situation and making a good exposure. I give the edge to the Pixel 10 Pro because the neon Open sign hasn’t been turned into a flat red, as in the S25 Ultra photo.

Leaving the bustle of downtown Seattle, I headed to the beach near the Mukilteo Lighthouse, about half an hour north. At sunset, the ultrawide camera on the Pixel 10 Pro XL captured this beach better than the ultrawide on the S25 Ultra, although I can’t say either picture impresses. The S25 Ultra shot is almost too dark, while the Pixel 10 Pro XL image is too bright, and the bro at the edge of the frame doesn’t survive the ultrawide distortion very well.

But what about engaging the actual night modes? Here, back in Seattle, this guardian troll by Danish artist Thomas Dambo at the National Nordic Museum retains a lot of detail on the Pixel 10 Pro XL, while the S25 Ultra photo comes out a little soft and saturated. (The lights inside the museum change color, hence the blue versus purple hues behind it.) Advantage Pixel.

And for a true night test, I put both phones on a tripod to capture this section of Shilshole Marina. Once more, the Pixel 10 Pro XL’s Night Sight mode does a better job of getting a balanced exposure that mixes the artificial lights in the foreground and the darkness of the sky with some stars peeking through. The S25 Ultra looks like it’s throwing as much processing at the image as possible, making the brighter areas look overexposed and introducing a lot of noise in the sky.

Pixel 10 Pro XL vs. Galaxy S25 Ultra: Portrait modes

One of the improvements Google is touting for the Pixel 10 Pro is better portrait mode quality, specifically for high-res 50-megapixel shots.

In this indoor cafe with screened window light, the Pixel 10 Pro XL is really trying to contain the flyaway wisps of hair, but it’s made them ghostly and more evident instead. Everything else about the photo looks good, from the colors to the soft background — in fact, the hair at her shoulders shows better separation than on top of her head.

On the other hand, the S25 Ultra’s Portrait mode photo has made the top hairs nicely distinct, but the falloff at her shoulders and the general smudge of background make the depth of field in this photo more obviously synthetic. Also, once again, I prefer the tone and warmer temperature of the Pixel photo.

Outside, the S25 Ultra’s Portrait mode is improved, with more natural blurred areas — note the hair over the subject’s left shoulder that’s slightly blurry but not as soft as the foliage in the background. The flyaway hairs at the top of their head also look natural. The high-resolution Portrait mode version from the Pixel 10 Pro looks entirely natural to my eye, with a soft background and all of their curly hair in focus. Once again, I prefer the Pixel’s version, but they both look good. (Although I probably should have tried Camera Coach to compose the portraits better in the frame without so much space above their head.)

Pixel 10 Pro XL vs. Galaxy S25 Ultra: Which is the better camera?

I’ve certainly come down on the side of the Pixel 10 Pro XL for most of these photos, largely due to the warmer white balance and better color fidelity. But as you can see, none of the photos are outright bad. If you’re looking for a new flagship Android phone, both models will fill that need. And if you specifically want a great camera system, right now the Pixel 10 Pro has pushed into the lead.

OK, iPhone 17 Pro, it’s your turn. Let’s see how you compare to the Pixel 10 Pro XL.


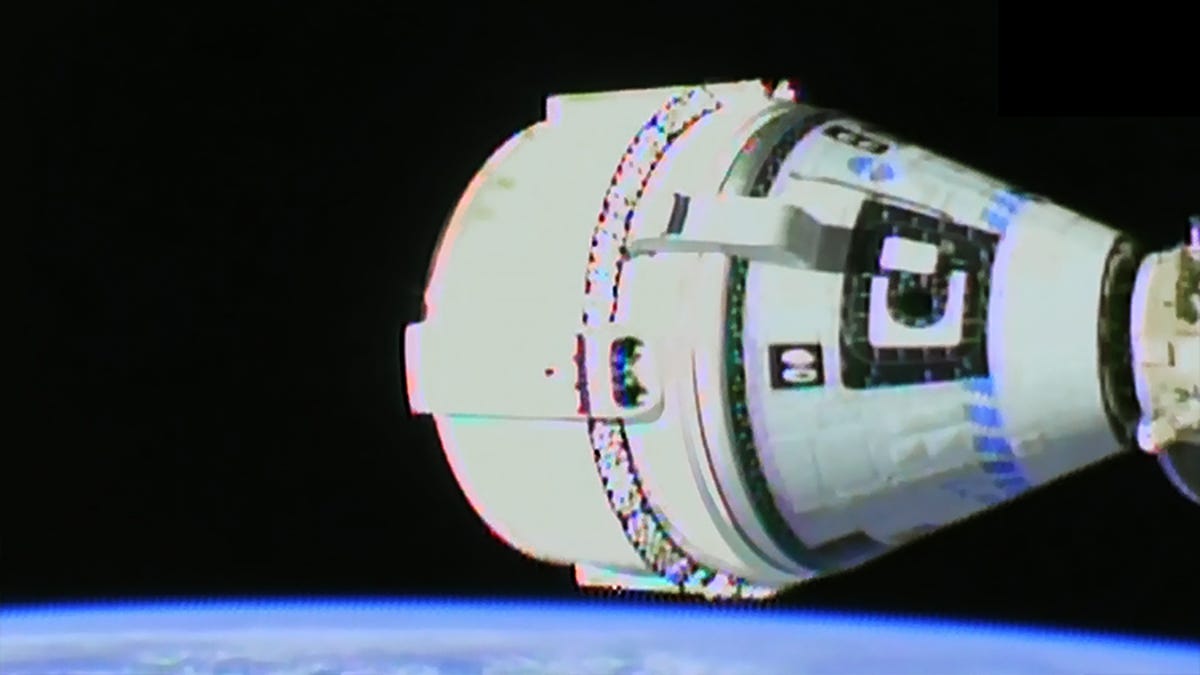

NASA Admits Fault in Starliner Test Flight, Classifies It as ‘Type A’ Mishap

Remember the astronauts who were stranded in space for months? NASA says it’s close to identifying the “true technical root cause” of the spaceship malfunctions.

NASA has been investigating the now-infamous Boeing Starliner incident since the story dominated headlines in late 2024 and early 2025. The Starliner suffered malfunctions that stranded now-retired astronauts Suni Williams and Butch Wilmore for months. The agency has now released a report on what happened, taking responsibility for its role in the mission’s failure.

“The Boeing Starliner spacecraft has faced challenges throughout its uncrewed and most recent crewed missions,” said NASA Administrator Jared Isaacman in a NASA blog post on Thursday. “While Boeing built Starliner, NASA accepted it and launched two astronauts into space. The technical difficulties encountered during docking with the International Space Station were very apparent.”

NASA has now labeled the mission a “Type A mishap,” which is defined as a “total direct cost of mission failure and property damage greater than $2 million or more,” or where “crewed aircraft hull loss has occurred.” Both of those apply to the Starliner, which has cost the agency $4.2 billion to date.

Isaacman also posted on X a letter addressed to all NASA employees. The letter outlined various issues with the mission, including a “prior OFT thruster risk that was never fully understood,” disagreements among leadership about Williams and Wilmore’s return options, and the agency’s delay in declaring the mission a failure even though its high-profile problems clearly showed it was one.

These sentiments were echoed in NASA’s press conference on Thursday.

NASA has committed to working with Boeing to make the Starliner launch-worthy again and has been investigating technical issues and addressing them since the mishap early last year. Isaacman admitted at the press conference that the “true technical root cause” of the malfunctions still hasn’t been identified, but NASA believes it is close to identifying it.

“We’re not starting from zero here,” Isaacman told a reporter during the press conference. “We’re sharing the results of multiple investigations that will be coming to light in the hours and days ahead. Boeing and NASA have been working to try and understand these technical challenges during that entire time period.”

A malfunction to remember

The crewed Starliner flight was delayed several times before finally launching on June 5, 2024. The crew experienced malfunctions en route to the ISS, including several thruster failures, which made docking particularly stressful.

The Starliner’s return was delayed by two weeks before the spacecraft was finally sent home without Wilmore and Williams, who remained stranded on the ISS until they returned with Crew-9 in March 2025.

The Starliner’s story is far from over. NASA and Boeing intend to send the Starliner back to the ISS in an uncrewed resupply mission with a launch date currently set for April 2026.


Let T-Mobile Pick Up the Tab. Get a Free iPhone 17 With a New Line

If you’ve been looking to add a new line or switch carriers, you can scoop up Apple’s latest flagship on T-Mobile’s dime.

Apple’s new iPhone 17 typically costs $830 for the 256GB configuration, or $1,030 for the 512GB configuration. However, T-Mobile is offering it to customers for free if they meet certain qualifications. If you’ve been looking to trade in your old device or choose an eligible plan, now is a great time to nab this deal.

T-Mobile doesn’t mention a deadline for this deal’s end, but it’s best to act fast if you’ve been wanting the latest iPhone.

To get a free iPhone 17, you’ll need to switch to T-Mobile on an Experience Beyond or Experience More plan and open a new line. You can also choose a Better Value plan, but you must add at least three lines with that plan to get your phone. Alternatively, you can add a new line on a qualifying plan, so long as you also have an eligible device to trade in.

Buyers are still responsible for the $35 activation fee. The discount arrives as bill credits spread over 24 months that add up to your phone’s cost. Additionally, you can get no more than four devices with a new line on a qualifying plan.

Newer phones will earn you more trade-in credit, but even an iPhone 6 will net you at least $400 off. The iPhone 17 Pro is also free with a trade-in of an eligible device on an Experience Beyond plan. The iPhone 17 Pro Max is just over $4 per month right now, with the same qualifications.

We’ve also got a list of the best phone deals, if you’d like to shop around.


Why this deal matters

The iPhone 17 series is the latest lineup in Apple’s ecosystem. These smartphones are made to work with Apple Intelligence, include faster chips, offer improved camera performance and show off Apple’s trademark gorgeous design. Starting at $830, they’re not the cheapest phones around, so carrier deals like this one are the best way to save some serious cash.


How Team USA’s Olympic Skiers and Snowboarders Got an Edge From Google AI

Google engineers hit the slopes with Team USA’s skiers and snowboarders to build a custom AI training tool.

Team USA’s skiers and snowboarders are going home with some new hardware, including a few gold medals, from the 2026 Olympics. Along with the years of hard work that go into being an Olympic athlete, this year’s crew had an extra edge in their training thanks to a custom AI tool from Google Cloud.

US Ski and Snowboard, the governing body for the US national teams, oversees the training of the best skiers and snowboarders in the country to prepare them for big events, such as national championships and the Olympics. The organization partnered with Google Cloud to build an AI tool to offer more insight into how athletes are training and performing on the slopes.

Video review is a big part of winter sports training. A coach will literally stand on the sidelines recording an athlete’s run, then review the footage with them afterward to spot errors. But this process is somewhat dated, Anouk Patty, chief of sport at US Ski and Snowboard, told me. That’s where Google came in, bringing new AI-powered data insights to the training process.

Google Cloud engineers hit the slopes with the skiers and snowboarders to understand how to build a genuinely useful AI model for athletic training. They used video footage as the foundation of the as-yet-unnamed AI tool. Gemini performed a frame-by-frame analysis of the video, which was then fed into spatial intelligence models from Google DeepMind. Those models took the 2D rendering of the athlete from the video and transformed it into a 3D skeleton that follows the athlete as they contort and twist on runs.

Final touches from Gemini help the AI tool analyze the physics in the pixels, according to Ravi Rajamani, global head of Google’s AI Blackbelt team, which worked on the project. Coaches and athletes told the engineers which metrics they wanted to track (speed, rotation, trajectory), and the Google engineers built the model to make those metrics easy to monitor and compare across videos. There’s also a chat interface for asking Gemini questions about performance.

“From just a video, we are actually able to recreate it in 3D, so you don’t need expensive equipment, [like] sensors, that get in the way of an athlete performing,” Rajamani said.

Coaches are undeniably the experts on the mountain, but the AI can act as a kind of gut check. The data can help confirm or deny what coaches are seeing and give them extra insight into the specifics of each athlete’s performance. It can catch things that humans would struggle to see with the naked eye or in poor video quality, like where an athlete was looking while doing a trick and the exact speed and angle of a rotation.

“It’s data that they wouldn’t otherwise have,” Patty said. The 3D skeleton is especially helpful because it makes it easier to see movement obscured by the puffy jackets and pants athletes wear, she said.

For elite skiers and snowboarders, small adjustments can mean the difference between a gold medal and no medal at all. Technological advances in training aim to give athletes every available tool for improvement.

“You’re always trying to find that 1% that can make the difference for an athlete to get them on the podium or to win,” Patty said. The tool can also democratize coaching: “It’s a way for every coach who’s out there in a club working with young athletes to have that level of understanding of what an athlete should do that the national team athletes have.”

For Google, this purpose-built AI tool is “the tip of the iceberg,” Rajamani said. There are many potential future use cases, including customizing the base model for other sports. It also lays the foundation for work in sports medicine, physical therapy, robotics and ergonomics, disciplines where understanding body positioning is important. But for now, there’s satisfaction in knowing the AI was built to actually help real athletes.

“This was not a case of tech engineers building something in the lab and handing it over,” Rajamani said. “This is a real-world problem that we are solving. For us, the motivation was building a tool that provides a true competitive advantage for our athletes.”
