Technologies

The Revelation I Got From Experiencing HaptX Is Wild

I tested gloves and buzzing things in Las Vegas to see where the future points.

I put my hands out flat and slid them into a pair of gloves loaded with joints, cables, pumps and tightening straps. All of this was connected to a backpack-size box that helped pump pressure around my fingers and create sensations of touching things. I was about to play Jenga in VR using an $80,000 pair of haptic gloves made by HaptX.

The future of the metaverse, or how we’ll dip into virtual worlds, seems to involve VR and AR, sometimes. If it does, it’ll also mean solving what we do with our hands. While companies like Meta are already researching ways that neural input bands and haptic gloves could replace controllers, none of that is coming for years. In the meantime, is there anything better than the VR game controllers already out there or basic camera-based hand tracking? I’ve tried a couple of haptic gloves before, but I was ready to try more.

I poked around CES 2023 in Las Vegas to get some experiences with devices I hadn’t tried before, and it suddenly hit me that there’s already a spectrum of options. Each of them was a little revelation.

High end: Massive power gloves

HaptX has been recognized for years as the maker of one of the best haptic glove products on the market, but I’d never had a chance to experience them. The hardware is highly specialized and also extremely large and expensive. I wish I’d gotten a chance to see them at the last CES I attended before this, in 2020. Finally, in 2023, I got a chance.

The gloves use microfluidics, pumping air into small bladders that create touch sensations in 133 zones per hand across the fingers and palm. At the same time, cables on the backs of the fingers pull back to simulate up to 8 pounds of force feedback. Used with apps that support them, you can reach out, grab things and actually feel them.

I’ve tried lower-cost haptic gloves at home that didn’t have the air bladders but did have cables to apply resistance. The HaptX gloves are a big step forward and the most eerily realistic ones I’ve ever tried. I wouldn’t say everything “felt real,” but the poking sensations in my fingers and palms let me feel shapes of things, while the resistance gave me a sense of grabbing and holding stuff.

The most amazing moments were when I placed objects on my palm and seemed to feel their weight. Also, when another person’s finger virtually touched mine. Another journalist was in another VR headset with haptic gloves playing Jenga next to me. We never made contact, but occasionally we shook hands virtually or gave high-fives. Our fingers touching felt… well, oddly real, like sensing someone’s finger touching your glove.

HaptX is making another pair of smaller, more mobile gloves later this year that cost less (about $5,000) while still promising the same level of feedback, plus tactile vibrations like the haptic buzzes you might feel with game controllers. I didn’t get to demo that, but I can’t wait.

While HaptX’s tech is wild, it’s meant for industrial purposes and simulations. It represents actual reality, but it’s so massive that it wouldn’t let me do anything else other than live in its simulated world. For instance, how would I type or pull out my phone? Still, I’ll dream of interfaces that let me feel as immersed as these gloves can accomplish.

Budget gloves: bHaptics’ TactGloves

At $300, bHaptics’ yellow haptic gloves are far, far less expensive than HaptX. They’re also completely different. Instead of creating pressure or resistance, all they really do is have various zones inside that electrically buzz, like your phone, watch or game controller, to sync up with moments when your fingers in VR would virtually touch something. Strangely, it’s very effective. In a few demos I tried, pushing buttons and touching objects provided enough feedback to feel like I was really “clicking” a thing. Another demo, which had me hug a virtual avatar mirroring my movements or shake hands, gave enough contact to fool me into feeling I was touching them.

bHaptics also makes a haptic vest I tried called the TactSuit that vibrates with feedback with supported games and apps. There aren’t many apps that work ideally with haptic gloves right now, because no one’s using haptic gloves. But bHaptics’ support of the standalone Meta Quest 2, and its wireless Bluetooth pairing, means they’re actually portable… even if they look like giant janitorial cleaning gloves. The tradeoff with being so small and wireless is their range is short. I had to keep the gloves within about two feet of the headset, otherwise they’d lose connection.

The buzzing feedback didn’t prove to me that I could absolutely reach into other worlds, but it offered enough sensation to make hand tracking feel more precise. Instead of wondering whether my hand gestures had actually contacted a virtual object, I could get a buzzing confirmation. The whole experience reminded me of some sort of game controller feedback I could wear on my fingers, in a good way.

No gloves at all: Ultraleap’s Ultrasonics

Ultraleap, a company that’s specialized in hand tracking for years, has a different approach to haptics: sensations you can feel in the air. I waved my hand above a large rectangular panel and felt ripples and buzzes beneath my fingers. The feelings are created with ultrasonic waves, high-powered sound bursts that move air almost like super-precise fans against your fingers. I tried Ultraleap’s tech back in 2020, but trying the latest and more compact arrays this year made me think about a whole new use case. It was easy to make this logic leap, since Ultraleap’s booth also demonstrated hand tracking (without haptic feedback) on Pico Neo 3 and Lynx R1 VR and mixed reality headsets.

What if… this air vibration could be used for headsets? Ultraleap is already dreaming and planning for this solution, but right now ultrasonic tech is too power hungry, and the panels too large, for headgear. The tech is mainly being used in car interface concepts, where the hand gestures and feedback could make adjusting car controls while driving easier and less dangerous or awkward. The range of the sensations, at least several feet, seems ideal for the arm length and radius of most existing camera-based hand-tracking tech being used right now on devices like the Meta Quest 2.

I tried a demo where I adjusted a virtual volume slider by pinching and raising the volume up and down, while feeling discrete clicks to let me know I was doing something. I could sense a virtual “bar” in the air that I could touch and perhaps even move. The rippling, subtle buzzes are far more faint than those on haptic gloves or game controllers (or your smartwatch), but they could be just enough to give that extra sense that a virtual button press, for instance, actually succeeded... or that a gesture to turn something on or off was registered.

If these interfaces move to VR and AR, Ultraleap’s representatives said they’d likely end up in larger installations first: maybe theme park rides. Ultraleap’s tech is already in experiences like the hands-free Ninjago ride at Legoland, which I’ve tried with my kids. The 3D hand-tracking ride lets me throw stars at enemies, but sometimes I’m not sure my gestures were registered. What if buzzing let me know I was making successful hits?

Haptics are likely to come from stuff we already wear

Of course, I skipped the most obvious step for AR and VR haptic feedback: smartwatches and rings. We wear buzzing things on our wrists already. Apple’s future VR/AR device might work with the Apple Watch this way, and Meta, Google, Samsung, Qualcomm and others could follow a similar path with dovetailing products. I didn’t come across any wearable watch or ring VR/AR haptics at CES 2023 (unless I missed them). But I wouldn’t be surprised if they’re coming soon. If AR and VR are ever going to get small enough to wear more often, we’re going to need controls that are far smaller than game controllers… and ways to make gesture inputs feel far less weird. Believe the buzz: Haptics is better than you think.

AI Is Taking Over Social Media, but Only 44% of People Are Confident They Can Spot It, CNET Finds

Half of social media users said they want better labels on AI-generated and edited posts.

AI slop has infected every social media platform, from soulless images to bizarre videos and superficially literate text. The vast majority of US adults who use social media (94%) believe they encounter content that was created or altered by AI, but only 44% of US adults say they’re confident they can tell real photos and videos from AI-generated ones, according to an exclusive CNET survey. That’s a big problem.

There are a lot of different ways people are fighting back against AI content. Some solutions are focused on better labels for AI-created content, since it’s harder than ever to trust our eyes. Of the 2,443 respondents who use social media, half (51%) believed we need better AI labels online. Others (21%) believe there should be a total ban on AI-generated content on social media. Only a small group (11%) of respondents say they find AI content useful, informative or entertaining.

AI isn’t going anywhere, and it’s fundamentally reshaping the internet and our relationship with it. Our survey shows that we still have a long way to go to reckon with it.

Key findings

- Most US adults who use social media (94%) believe they encounter AI content on social media, yet far fewer (44%) can confidently distinguish between real and fake images and videos.

- Many US adults (72%) said they take action to determine if an image or video is real, but some don’t do anything, particularly among Boomers (36%) and Gen Xers (29%).

- Half of US adults (51%) believe AI-generated and edited content needs better labeling.

- One in five (21%) believe AI content should be prohibited on social media, with no exceptions.

US adults don’t feel they can spot AI media

Seeing is no longer believing in the age of AI. Tools like OpenAI’s Sora video generator and Google’s Nano Banana image model can create hyperrealistic media, with chatbots smoothly assembling swaths of text that sound like a real person wrote them.

So it’s understandable that a quarter (25%) of US adults say they aren’t confident in their ability to distinguish real images and videos from AI-generated ones. Older generations, including Boomers (40%) and Gen X (28%), are the least confident. If folks don’t have a ton of knowledge or exposure to AI, they’re likely to feel unsure about their ability to accurately spot AI.

People take action to verify content in different ways

AI’s ability to mimic real life makes it even more important to verify what we’re seeing online. Nearly three in four US adults (72%) said they take some form of action to determine whether an image or video is real when it piques their suspicions, with Gen Z being the most likely (84%) of the age groups to do so. The most obvious — and popular — method is closely inspecting the images and videos for visual cues or artifacts. Over half of US adults (60%) do this.

But AI innovation is a double-edged sword; models have improved rapidly, eliminating the previous errors we used to rely on to spot AI-generated content. The em dash was never a reliable sign of AI, but extra fingers in images and continuity errors in videos were once prominent red flags. Newer AI models usually don’t make those pedestrian mistakes. So we all have to work a little bit harder to determine what’s real and what’s fake.

As visual indicators of AI disappear, other forms of verifying content are increasingly important. The next two most common methods are checking for labels or disclosures (30%) and searching for the content elsewhere online (25%), such as on news sites or through reverse image searches. Only 5% of respondents reported using a deepfake detection tool or website.

But 25% of US adults don’t do anything to determine if the content they’re seeing online is real. That lack of action is highest among Boomers (36%) and those in Gen X (29%). This is worrisome — we’ve already seen that AI is an effective tool for abuse and fraud. Understanding the origins of a post or piece of content is an important first step to navigating the internet, where anything could be falsified.

Half of US adults want better AI labels

Many people are working on solutions to deal with the onslaught of AI slop. Labeling is a major area of opportunity. Labeling relies on social media users to disclose that their post was made with the help of AI. This can also be done behind the scenes by social media platforms, but it’s somewhat difficult, which leads to haphazard results. That’s likely why 51% of US adults believe that we need better labeling on AI content, including deepfakes. Support was strongest among Millennials and Gen Z, at 56% and 55%, respectively.

Other solutions aim to control the flood of AI content shared on social media. All of the major platforms allow AI-generated content, as long as it doesn’t violate their general content guidelines — nothing illegal or abusive, for example. But some platforms have introduced tools to limit the amount of AI-generated content you see in your feeds; Pinterest rolled out its filters last year, while TikTok is still testing some of its own. The idea is to give every person the ability to permit or exclude AI-generated content from their feeds.

But 21% of respondents believe that AI content should be prohibited on social media altogether, no exceptions allowed. That number is highest among Gen Z at 25%. When asked if they believed AI content should be allowed but strictly regulated, 36% said yes. Those low percentages may be explained by the fact that only 11% find AI content provides meaningful value — that it’s entertaining, informative or useful — and that 28% say it provides little to no value.

How to limit AI content and spot potential deepfakes

Your best defense against being fooled by AI is to be eagle-eyed and trust your gut. If something is too weird, too shiny or too good to be true, it probably is. But there are other steps you can take, like using a deepfake detection tool. There are many options; I recommend starting with the Content Authenticity Initiative’s tool, since it works with several different file types.

You can also check out the account that shared the post for red flags. Many times, AI slop is shared by mass slop producers, and you’ll easily be able to see that in their feeds. They’ll be full of weird videos that don’t seem to have any continuity or similarities between them. You can also check to see if anyone you know is following them or if that account isn’t following anyone else (that’s a red flag). Spam posts or scammy links are also indications that the account isn’t legit.

If you want to limit the AI content you see in your social feeds, check out our guides for turning off or muting Meta AI in Instagram and Facebook and filtering out AI posts on Pinterest. If you do encounter slop, you can mark the post as something you’re not interested in, which should indicate to the algorithm that you don’t want to see more like it. Outside of social media, you can disable Apple Intelligence, the AI in Pixel and Galaxy phones and Gemini in Google Search, Gmail and Docs.

Even if you do all this and still get occasionally fooled by AI, don’t feel too bad about it. There’s only so much we can do as individuals to fight the gushing tide of AI slop. We’re all likely to get it wrong sometimes. Until we have a universal system to effectively detect AI, we have to rely on the tools we have and our ability to educate each other on what we can do now.

Methodology

CNET commissioned YouGov Plc to conduct the survey. All figures, unless otherwise stated, are from YouGov Plc. The total sample size was 2,530 adults, of which 2,443 use social media. Fieldwork was undertaken Feb. 3-5, 2026. The survey was carried out online. The figures have been weighted and are representative of all US adults (aged 18 plus).
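For a rough sense of how much sampling noise sits behind those percentages, the textbook margin-of-error formula can be applied to the sample sizes above. This is only a sketch: it assumes a simple random sample and a 95% confidence level, neither of which the methodology states, and the function name `margin_of_error` is made up for this illustration. Weighted online panels typically carry somewhat wider uncertainty than this approximation suggests.

```python
import math


def margin_of_error(sample_size, proportion=0.5, z=1.96):
    """Approximate margin of error in percentage points.

    Assumes a simple random sample (an assumption, not stated in the
    methodology). proportion=0.5 gives the worst-case (widest) margin,
    and z=1.96 corresponds to a 95% confidence level.
    """
    standard_error = math.sqrt(proportion * (1 - proportion) / sample_size)
    return z * standard_error * 100


# Sample sizes reported in the methodology paragraph.
for label, n in [("All adults", 2530), ("Social media users", 2443)]:
    print(f"{label} (n={n}): roughly +/-{margin_of_error(n):.1f} points")
```

Under those assumptions, both groups come out at roughly 2 percentage points, so a gap such as 51% versus 21% is far larger than sampling noise alone would explain.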

My Galaxy A17 Review: Samsung’s $200 Phone Does It All… Slowly

Samsung’s lower-cost Galaxy phone hits all the right check boxes, but it’s easily overwhelmed when multitasking.

Pros

- Big and bright screen

- Good photos for the price

- Six years of software and security support

Cons

- Multitasking can be rough

- Noticeably sluggish

Samsung’s $200 Galaxy A17 5G makes me thankful that Android is so flexible. That’s because during my three weeks using the most affordable 2026 Galaxy phone, I kept running into roadblocks with the phone’s underpowered hardware.

Whenever I tried to run a navigation app on the phone at the same time as streaming music, I found that either the song had noticeable pauses and dips or the navigation app would automatically quit without any notice. This was especially frustrating when I realized I missed my subway stop while trying to make it to my friend’s concert. When the phone is doing just one of these tasks, the A17 loads them up fast and even feels smooth.

It’s a shame because the phone otherwise feels like a great value. It has access to nearly all the same apps and services found on more expensive Galaxy phones. I appreciated having Samsung’s Now bar, with dynamic notifications showing how much time is left on timers and important boarding pass information for my flights. Samsung’s Smart View lets me use Miracast to stream my phone’s display to a Roku TV, while most Android phones lately only include Chromecast support. Plus Samsung’s six-year promise of software and security updates is unmatched in this price range.

So while I do feel the Galaxy A17 is one of the best phones available for most people looking for a new device that’s under $200, it’s not an enthusiastic recommendation.

This phone could be great for someone who just wants a device that keeps things simple: Yes, you can make calls, send texts, take decent photos and stream videos from your favorite social media app for a low price. Just don’t expect the Galaxy A17 to excel at tasks that require some app juggling.

My Galaxy A17 navigation and music fix

I discovered a quick fix for when keeping multiple apps open puts too much strain on the phone, like when I used Google Maps and Apple Music at the same time. Open Settings and search for Memory to bring up a page that lets you list your most important background apps as Excluded apps. Because the phone actively checks for and shuts down background apps it thinks you don’t need, this tells the Galaxy A17 to stop policing how much memory those apps take up. And with a limited 4GB of memory, I hit this strain constantly.

While Samsung does let you convert some of its onboard storage into an additional 4GB of memory, the Galaxy A17 simply does not have enough space to make multitasking easy. While it’s not uncommon for phones in this price tier to struggle with complex tasks, it’s frustrating to see the Galaxy A17 stumble in common multitasking processes such as navigation or listening to music.

Samsung Galaxy A17 design, software, battery

The Samsung Galaxy A17 might not have the trendy vegan leather look of the Moto G, but the A17 does make plastic look as good as you can get. My review unit came in black, and there’s also a blue option. The design mimics the newer Galaxy phones by assembling its rear cameras into a vertically aligned oval camera bar.

Along the front is the phone’s nice and bright 6.7-inch display, which runs at a 1,080p resolution. For its affordable price, the phone’s display is a highlight, and it runs smoothly at a 90Hz refresh rate. This made the phone particularly good for watching videos and browsing the web. While games looked good, the phone’s limited memory and processing power got in the way of them working well.

The Galaxy A17 comes with 4GB of memory and 128GB of storage, which have become fairly standard for phones in the $200 price range. But what bugs me is that this configuration is the same as what the Galaxy A15 offered two years ago, and when I reviewed that phone I also felt like the device struggled with some tasks. While Samsung has a RAM Plus setting to virtually expand the memory by “borrowing” from main storage, the limited space quickly became apparent whenever I tried to use the phone for multiple tasks.

The Galaxy A17 uses Samsung’s Exynos 1330 processor. In benchmark tests, it scored slightly lower than the MediaTek Dimensity 6300 powering the $180 Moto G Play that I recently tested. Even without the tests, it’s clear that you need to take things easy with the Galaxy A17. The phone would often crawl when I used it for basic tasks: When I swipe down from the top of the screen to look at notifications, there’s a noticeable delay between the swipe and the action on the screen. Playing music while texting sometimes works, and sometimes doesn’t. And sometimes when opening an app, I’d be greeted with a blank white screen while I waited for assets to load.

[Benchmark charts: 3DMark Wild Life Extreme; Geekbench 6.0 single-core and multicore]

But when the phone works, I’ve been delighted by the way Samsung has been able to scale down its myriad services to its $200 phone. Samsung Health, Samsung Wallet and Samsung’s Weather app are fully functional and even colorful. While audio plays only from a single speaker, it gets quite loud when I put on my news podcasts in the living room. Samsung no longer provides a headphone jack for its under-$200 phone, a change that began with last year’s A16, but it’s easy enough to listen through a Bluetooth-connected pair of wireless earbuds or cast audio to a speaker.

The 5,000-mAh battery helps the phone last a little longer than a day of normal use. I typically ended a day with 30% to 40% of battery left. You’ll probably want to charge the phone every day, and luckily its 25W wired charging speed filled the battery from 0% to 54% in 30 minutes. That’s quite good for the price, and likely means you’ll be able to charge the phone up while getting ready for the day.

In our 3-hour YouTube streaming battery test, the Galaxy A17 performs a hair better than Motorola’s $160 Moto G Play. It depleted to 81% by the time I’d finished testing its 5,000-mAh battery. The Play has a slightly bigger 5,200-mAh battery, which dropped to 79% during testing.
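To put the two streaming results on the same footing, the percentages can be converted into an hourly drain rate and an approximate amount of charge used. This is a back-of-the-envelope sketch: it assumes both phones started the test at 100%, which the numbers imply but the text does not state outright, and the helper names are invented for this example.

```python
TEST_HOURS = 3  # length of the YouTube streaming test


def drain_per_hour(start_pct, end_pct, hours):
    """Average battery percentage points lost per hour."""
    return (start_pct - end_pct) / hours


def charge_used_mah(capacity_mah, start_pct, end_pct):
    """Approximate charge consumed, based on the rated battery capacity."""
    return capacity_mah * (start_pct - end_pct) / 100


# Figures from the review; the 100% starting level is an assumption.
phones = {
    "Galaxy A17": {"capacity_mah": 5000, "end_pct": 81},
    "Moto G Play": {"capacity_mah": 5200, "end_pct": 79},
}

for name, spec in phones.items():
    rate = drain_per_hour(100, spec["end_pct"], TEST_HOURS)
    used = charge_used_mah(spec["capacity_mah"], 100, spec["end_pct"])
    print(f"{name}: {rate:.1f} points per hour, about {used:.0f} mAh used")
```

Read this way, the Galaxy A17 finishes with more charge left despite its smaller battery, which suggests it also drew less total charge during the test.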

Galaxy A17 cameras

The cameras on the Samsung Galaxy A17 5G perform fairly well for the phone’s price. There’s a 50-megapixel wide-angle camera, a 5-megapixel ultrawide and a 2-megapixel macro for shooting close-up subjects. The photos I took at the pirate-themed Gasparilla Festival in Tampa, Florida, managed to capture all the action without too much blurring. That said, the photos themselves aren’t very detailed, showing the camera suite’s limited capabilities.

The overcast day helped make colors come out, but it’s clear that the cameras don’t have a wide dynamic range and aren’t advanced enough to separate out dark hair from shadows. For this price, that’s acceptable, and I’m glad to see so little motion blur.

The cameras were challenged more when trying to zoom in. Images taken with the preset 2x zoom had an abundance of crushed shadows making dark colors and textures, like hair, appear to blend together.

With a more stable subject under decent lighting, such as the chicken stir fry bowl I got at the parade, the images have a lot of detail when I didn’t use the zoom.

The phone’s autofocus was on the chicken, rice and vegetables, but the grass behind it and the fallen beads on the ground blend together because of the main lens’ natural bokeh, which looks crunchy (instead of buttery smooth and dreamy).

This ultrawide photo of the same subject fares better, with some loss of detail on the dish. The background looks clearer as the ultrawide lens keeps more of the image (the grass, beads and trash) in focus.

Like many phones in this price range, you’ll get the best results in environments with good lighting. In this photo from The Book Lounge in St. Petersburg, Florida, the bookshelves are on full display and the A17’s cameras are able to depict the text of most of the book covers. It does struggle with a few: Skin in the Game by William Miller in the top-right is slightly out of focus, which is probably due to the lower quality of the main camera’s optics.

And in this 2x photo, the shelf appears softer because the A17 has to crop in since there’s not a dedicated zoom lens. But the variety of the book colors still looks true to life.

Selfie photos taken with the 13-megapixel front-facing camera get the job done, but they’re not great. I’d share them with group chats, but probably wouldn’t post them publicly. I took the selfie below in a well-lit Manhattan diner. The image has a lot of detail in my face: Note my skin texture and hair.

Samsung Galaxy A17 5G: The bottom line

The Samsung Galaxy A17 5G’s big selling point is its $200 price and access to many modern Galaxy features. This phone might even be offered for free with a carrier deal. When it comes to basic tasks, the Galaxy A17 is capable of doing most of them, including phone calls, texting, tapping into the subway using Samsung Wallet, web browsing and simple photography in well-lit environments.

But if you find yourself multitasking, just know that the Galaxy A17 quickly becomes frustrating.

If you need a cheaper phone, the Galaxy A17 is currently the choice I’d recommend most for its variety of features. Just be easy with it.

Samsung Galaxy A17 5G vs. Motorola Moto G Play (2026), Motorola Moto G Power (2026)

| | Samsung Galaxy A17 5G | Motorola Moto G Play (2026) | Motorola Moto G Power (2026) |
|---|---|---|---|
| Display size, resolution | 6.7-inch AMOLED, 2,340×1,080 pixels, 90Hz refresh rate | 6.7-inch LCD, 1,604×720 pixels, 120Hz refresh rate | 6.8-inch LCD, 2,388×1,080 pixels, 120Hz refresh rate |
| Pixel density | 385 ppi | 263 ppi | 387 ppi |
| Dimensions (inches) | 6.5×3.1×0.3 in | 6.6×3×0.3 in | 6.6×3×0.3 in |
| Dimensions (millimeters) | 164.4×77.9×7.5 mm | 167.2×76.4×8.4 mm | 167×77×8.7 mm |
| Weight (grams, ounces) | 192 g (6.8 oz) | 202 g (7.1 oz) | 208 g (7.3 oz) |
| Mobile software | Android 16 | Android 16 | Android 16 |
| Camera | 50-megapixel (wide), 5-megapixel (ultrawide), 2-megapixel (macro) | 32-megapixel | 50-megapixel (wide), 8-megapixel (ultrawide) |
| Front-facing camera | 13-megapixel | 8-megapixel | 32-megapixel |
| Video capture | 1,080p at 30fps | 1,080p at 30fps | 1,080p at 60fps |
| Processor | Samsung Exynos 1330 | MediaTek Dimensity 6300 | MediaTek Dimensity 6300 |
| RAM/Storage | 4GB + 128GB | 4GB + 64GB | 8GB + 128GB |
| Expandable storage | Yes, microSD | Yes | microSD |
| Battery | 5,000 mAh | 5,200 mAh | 5,200 mAh |
| Fingerprint sensor | Side | Side | Side |
| Connector | USB-C | USB-C | USB-C |
| Headphone jack | None | Yes | Yes |
| Special features | 25W wired charging, One UI 8.0, Smart View, Samsung Health, Samsung Wallet, IP54 dust- and water-resistance, six years of software and security updates | Two years of software updates, three years of security updates, 18W wired charging, NFC, Gorilla Glass 3 | 30W wired charging, RAM Boost, Dolby Atmos, NFC, IP68 and IP69 water and dust resistance |
| Price off-contract (USD) | $200 (128GB) | $180 (64GB) | $300 (128GB) |
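The pixel density row follows from the display rows: pixels per inch is the diagonal resolution in pixels divided by the diagonal size in inches. The sketch below applies that standard formula to the table's figures. The function name is made up for illustration, and because the advertised screen sizes are rounded, the results can differ slightly from the listed ppi values.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density: diagonal resolution divided by diagonal screen size."""
    return math.hypot(width_px, height_px) / diagonal_in


# Resolution and (rounded) diagonal size taken from the comparison table.
displays = {
    "Galaxy A17 5G": (2340, 1080, 6.7),
    "Moto G Play (2026)": (1604, 720, 6.7),
    "Moto G Power (2026)": (2388, 1080, 6.8),
}

for name, (width, height, diagonal) in displays.items():
    print(f"{name}: about {pixels_per_inch(width, height, diagonal):.0f} ppi")
```

The Galaxy A17 works out to the listed 385 ppi. The two Motorola results land a point or two below the listed values, which may simply reflect the rounding of the advertised diagonals.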

How we test phones

Every phone tested by CNET’s reviews team was actually used in the real world. We test a phone’s features, play games and take photos. We examine the display to see if it’s bright, sharp and vibrant. We analyze the design and build to see how it is to hold and whether it has an IP rating for water resistance. We push the processor’s performance to the extremes using standardized benchmark tools like Geekbench and 3DMark, along with our own anecdotal observations navigating the interface, recording high-resolution videos and playing graphically intense games at high refresh rates.

All the cameras are tested in a variety of conditions from bright sunlight to dark indoor scenes. We try out special features like night mode and portrait mode and compare our findings against similarly priced competing phones. We also check out the battery life by using it daily as well as running a series of battery drain tests.

We take into account additional features like support for 5G, satellite connectivity, fingerprint and face sensors, stylus support, fast charging speeds and foldable displays, among others that can be useful. We balance all of this against the price to give you the verdict on whether that phone, whatever price it is, actually represents good value. While these tests may not always be reflected in CNET’s initial review, we conduct follow-up and long-term testing in most circumstances.

Technologies

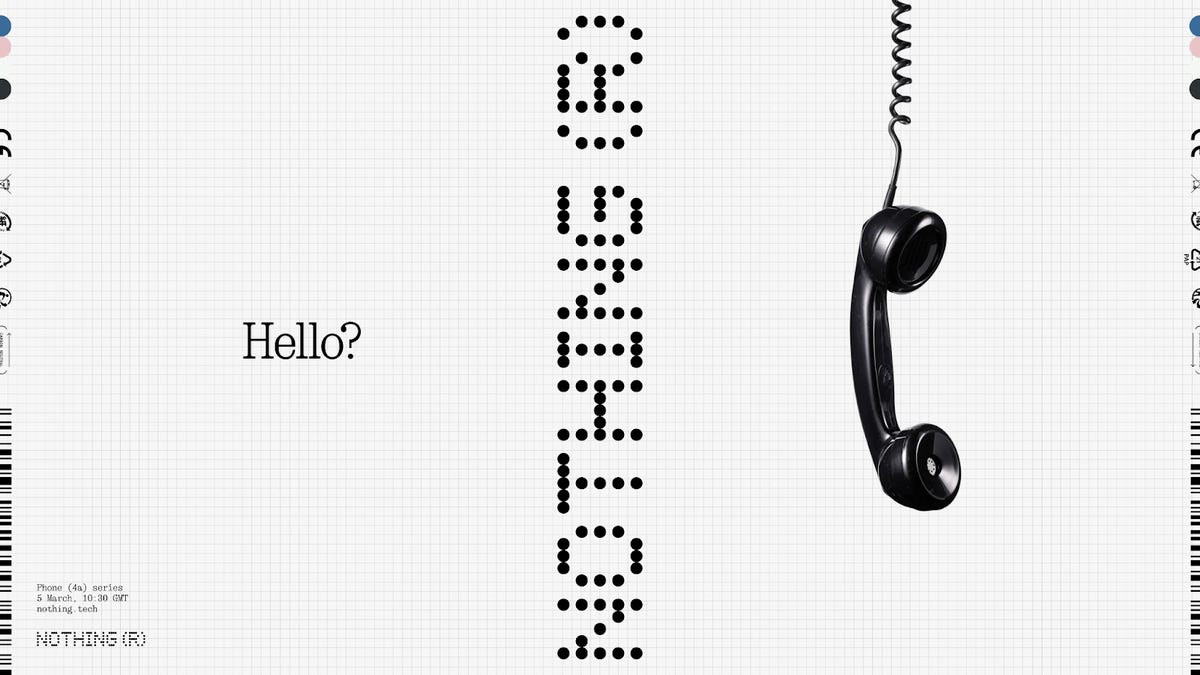

Nothing to Launch the Phone 4A On March 5, and Hints at Possible Pink Design

Nothing promises the Phone 4A will feature a “bold new experimentation of color.” We think we might know what that means.

British tech company Nothing has set a date for the launch of its upcoming Phone 4A. The latest midrange offering from the design-forward phone-maker will make an appearance on March 5 at the renowned London art school Central Saint Martins.

The phone launch will take place during one of the busiest weeks in the tech calendar, brushing up against both MWC (March 2-5) and Apple’s “special experience” on March 4, where the company might unveil the iPhone 17E. Still, what we might see at these rival events remains largely a mystery for now. Nothing, on the other hand, has given us a much clearer idea of what to expect when it livestreams the launch of the Phone 4A at 10:30 a.m. GMT (2:30 a.m. PT).

“We’re going to be focused on levelling up our A series with the 4A,” said Nothing CEO Carl Pei in a video he posted last month. “It’s our best-selling series and we’re really excited about taking this even closer to what a flagship experience is going to be across the board from materials, design to screen, camera, etc.”

The Phone 4A is the successor to the Phone 3A, which Nothing launched at a similar time last year. But it also builds on the success of the Phone 3, Nothing’s first true flagship, which arrived last summer. CNET reviewed both phones and we were especially taken with the Phone 3A series.

“The Nothing Phone 3A Pro impressed me enough with its combination of value and performance that I awarded it a coveted CNET Editors’ Choice award,” said CNET Editor at Large Andrew Lanxon. “I want to see Nothing continue its focus on affordability while offering a phone that’s capable of handling all of the everyday essentials. I’d love to see some vibrant colors too as, let’s be honest, phones aren’t as interesting as they used to be.”

Pei has already promised that the phone will offer a “bold new experimentation of color,” and he might just have given us a hint as to what color he’s referring to, based on a graphic he posted to Instagram on Tuesday.

The image features Nothing’s name and the date of the Phone 4A launch event scrawled in bright pink graffiti-style lettering over the top of the invite to Apple’s March 4 “special experience.”

Pei has already said that the Phone 4A will continue the evolution of Nothing’s transparent design principles, and it would be a fun move for this latest device to glow bright pink. But Nothing is nothing if not bold when it comes to design. If any company can make an iconic neon pink phone work in 2026, it’s this one.
