Technologies

7 Ways Microsoft Uses AI to Make You Actually Care About Bing

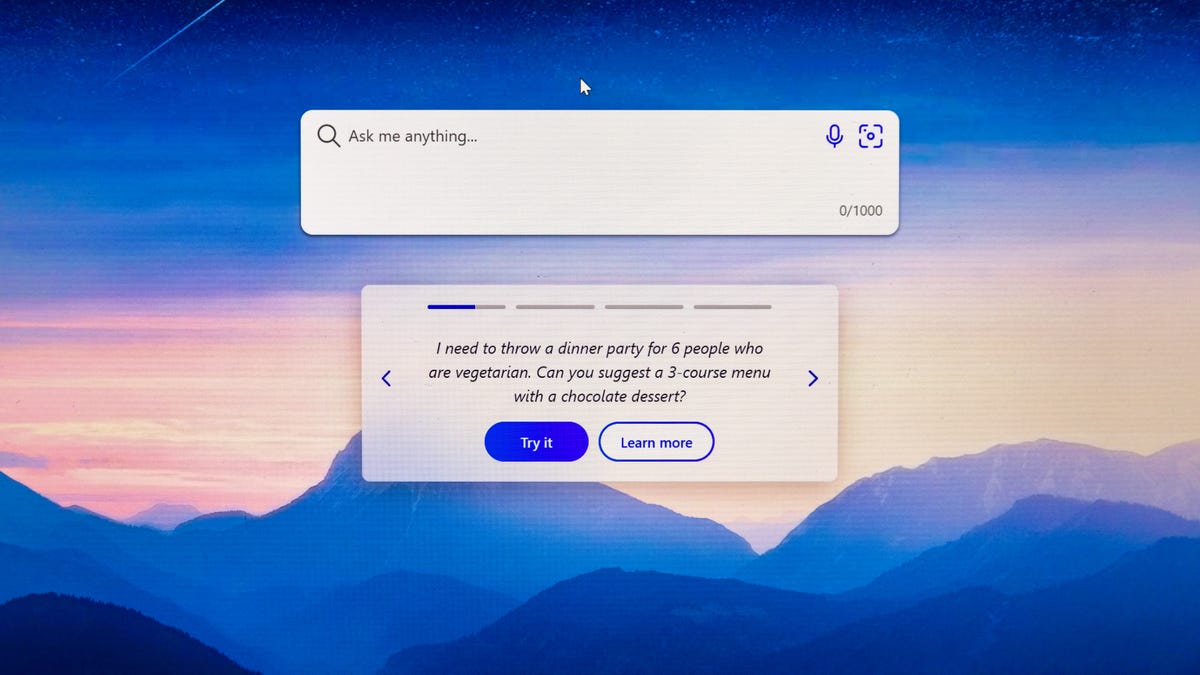

It isn’t just ChatGPT on the Bing website. Microsoft blends OpenAI’s language technology with its own Bing search engine.

Microsoft Bing faces a big problem: Google utterly eclipses the search engine. But Bing has a chance to grab more attention for itself with OpenAI’s language technology, the artificial intelligence foundation that’s made the ChatGPT service a huge hit.

For the brainier Bing to work, though, Microsoft has to get the details right. ChatGPT can be useful, but it can be flaky, too, and nobody wants a search engine they can’t trust.

Microsoft has put a lot of thought and its own programming resources into the challenge. It’s wrestled with issues like how AI-powered Bing shows ads, reveals its data sources, and grounds the AI technology in reality so you get trustworthy results, not the digital hallucinations that can be hard to spot in machine-generated information.

I spoke to Jordi Ribas, leader of Bing search and AI, to dig more deeply into the overhauled Bing search engine. He’s a big enough fan that he used the technology to help him write his boss a memo about it. «It probably saved me two to three hours,» he said, and it improved the Spanish executive’s English, too.

When the technology expands beyond today’s very small test group, it’ll let millions of us dig for much more complicated information, like whether an Ikea loveseat will fit into your car. And we’ll all be able to see whether it truly gives Google a run for its money. But for now, here are seven things I learned about Bing AI.

Bing AI isn’t just a repackaged version of ChatGPT

Microsoft blends its Bing search engine with the large language model technology from OpenAI, the AI lab that built the ChatGPT tool that’s fired up excitement about AI and that Microsoft invested in. You can get ChatGPT-like results using Bing’s «chat» option — for example, «Write a short essay on the importance of Taoism.» But for other queries, Bing and OpenAI technology are blended through an orchestration system Microsoft calls Prometheus.

For instance, you can Bing, «I like the band Led Zeppelin. What other musicians should I listen to?» OpenAI first paraphrases that prompt to «bands similar to Led Zeppelin,» then repackages Bing search results in a bulleted list. Each suggestion, like Fleetwood Mac, Pink Floyd and the Rolling Stones, comes with a two-sentence description.

Bing AI cites its sources — sometimes

When you give ChatGPT a prompt, it’ll respond with text it generates, but it won’t tell you where it got that information. The AI system is trained on vast amounts of the information on the internet, but it’s hard to draw a direct line between that training data and ChatGPT’s output.

On Bing, though, factual information is often annotated, because Bing knows the source from its indexing of the web. For example, in its answer to the Led Zeppelin prompt above, Bing links at the top to a Musicaroo post, 13 Bands That Sound Like Led Zeppelin, and cites that post alongside others from MusicalMum and Producer Hive.

That sourcing transparency helps address a big criticism of AI, making it easier to evaluate whether the response is accurate or a mere AI hallucination. But it doesn’t always appear. In the essay on Taoism above, for example, there aren’t any sources, footnotes or links at all.

Some source links are ads that make Microsoft money

The Bing AI’s elaborate answers provide a new way for Microsoft to generate money from ads. In traditional Bing searches, the «organic» search results that Bing judges to be most relevant are separate from items placed by advertisers. But with Bing AI searches, the two types of information can be blended.

For example, in its response to the query «plan me a one-week trip to Iceland without a rental car,» AI-powered Bing suggests several destinations. In one of them, several words are underlined: «You can visit places like Vík, Skógafoss, Seljalandsfoss, and Jökulsárlón glacier lagoon by joining a multi-day tour or taking a bus.» Hovering over that link shows three sources for that information and an ad from a tour company. The advertisement is the top item of the three and is labeled «ad.»

«When you look at those citations, sometimes they are ads,» Ribas said. «When it’s more of a purchasing intent query, you hover over it and you’ll see the list of the references and sometimes it’s an ad. Then sometimes in the conversation itself, you’re going to see product ads, like if you do a hotel query.»

Ad revenue is a big deal, since it takes weeks of work on an enormous cluster of computers for OpenAI to build a single update to its language model, and OpenAI CEO Sam Altman estimates it costs a few cents to process each ChatGPT prompt. Bing, even though it’s a distant second to Google in the search engine market, still handles millions of queries a day.

Google plans to open access to its Bard AI chatbot soon, but it won’t be including ads to begin with.

OpenAI-boosted results are more relevant than plain old Bing

The fundamental measure of a search engine’s usefulness is whether its results are relevant, and the OpenAI technology brings a huge boost in the measurement that Microsoft uses to score its search engine results’ relevance.

«My team, working super, super hard in a given year, might move that metric by one point,» Ribas said, but OpenAI’s technology boosted it three points in one fell swoop. «It’s just never happened before in the history of Bing,» Ribas said.

That relevance boost is just for ordinary search results, Ribas added. OpenAI’s technology can further improve Bing with its chat interface that offers more elaborate answers and a follow-up exchange.

OpenAI makes Bing better with languages besides English

One particular area where Bing has been weak is searches that aren’t in English, and Ribas said OpenAI helps there. A lot of Bing’s three-point gain in relevance scoring «came from international markets,» Ribas said.

OpenAI’s large language model, or LLM, is trained with text from 100 languages. «Catalan is my first language. I can have a dialogue in Catalan. It works really, really well,» Ribas said.

Bing brings OpenAI’s results up to date

Large language models like OpenAI’s GPT-3.5, the foundation for ChatGPT, are slow to build and improve, which means they don’t move at the speed of the web or of conventional search engines. GPT-3.5, for example, was trained in 2021, so it doesn’t have any idea about Russia’s invasion of Ukraine, the effects of recent inflation on consumers, or Xi Jinping securing his third term as general secretary of the Chinese Communist Party.

Bing often does know this more recent information, though. «When you bring in the Bing results, then you will get fresh results on that complete answer,» Ribas said.

Bing ‘grounds’ OpenAI’s flights of fancy

Microsoft uses its Bing data to try to avoid situations where OpenAI’s more creative technology could lead people astray. The more factual a query and answer are, the more Bing’s technology is used in the answer, Ribas said. This «grounding» significantly reduces AI’s problems with making stuff up: «It will reduce hallucination, which is … an ongoing battle,» Ribas said.

But Microsoft doesn’t want its grounding system to squash all the magic out of the AI. There’s a reason ChatGPT has been so captivating. The Prometheus system decides on the priorities for each query.

«We had to find the sweet spot between over-grounding the model and keeping it interesting,» Ribas said. «We have a measurement of the interestingness of the results, and we have a measurement for the groundedness of the results. The more the query is looking for something very factual, the more we weight the grounded. The more the query is supposed to be creative, the less we weight the grounded. I kept telling my team, I want my cake and eat it too.»
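The trade-off Ribas describes can be pictured as a weighted blend of two scores. The sketch below is purely illustrative and is not Microsoft’s Prometheus code: the `Candidate` class, the 0-to-1 score ranges, the `factuality` estimate and the linear weighting are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A possible answer with two hypothetical quality scores (0.0 to 1.0)."""
    text: str
    groundedness: float     # assumed: how well the answer is backed by search results
    interestingness: float  # assumed: how engaging or creative the answer is


def blended_score(candidate: Candidate, factuality: float) -> float:
    """Weight groundedness more heavily as the query becomes more factual.

    `factuality` is an assumed 0.0-1.0 estimate of how factual the query is.
    """
    if not 0.0 <= factuality <= 1.0:
        raise ValueError("factuality must be between 0.0 and 1.0")
    return (factuality * candidate.groundedness
            + (1.0 - factuality) * candidate.interestingness)


def pick_answer(candidates: list[Candidate], factuality: float) -> Candidate:
    """Return the candidate with the highest blended score."""
    return max(candidates, key=lambda c: blended_score(c, factuality))


cited = Candidate("cited summary", groundedness=0.9, interestingness=0.3)
playful = Candidate("playful riff", groundedness=0.2, interestingness=0.9)

print(pick_answer([cited, playful], factuality=0.9).text)  # cited summary
print(pick_answer([cited, playful], factuality=0.1).text)  # playful riff
```

With a highly factual query the well-sourced answer wins, while a creative query tips the balance toward the more interesting one. That is the «sweet spot» idea in miniature.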


NordVPN Software Blocked 92% of Phishing Websites in Independent Testing

Phishing attempts continue to grow with help from generative AI and its believable deepfakes and voice impersonations.

NordVPN’s anti-malware software Threat Protection Pro blocked 92% of phishing websites in an independent lab test of several antivirus products, browsers and VPNs in results released this week.

AV-Comparatives, based in Austria, attacked 15 products with 250 websites — all verified to be valid phishing URLs — in a test that ran Jan. 7 to 19. The lab said the products were tested in parallel and with active internet/cloud access. The Google Chrome browser was used for antivirus and VPN testing.


Phishing is a form of cyberattack in which a malicious actor tries to get someone to go «fishing,» with malicious URLs as bait. These phishing attempts might be sent in emails, but they also appear on websites, in texts and in voicemails.

You might get an email that says your bank account has been hacked and you should click on a URL to solve the problem. Or an email says you’ve won a big prize, instructing you to click on a URL to redeem it. During tax season, the number of scam emails and texts increases dramatically, with AI often used to ramp up the volume. CNET offers tips for how to detect phishing attempts in even the most sophisticated emails.

«By creating a sense of trust and urgency, cybercriminals hope to prevent you from thinking critically about their bait message so that they can gain access to your sensitive or personal information like your password, credit card numbers, user data, etc,» warns the US State Department website. «These cybercriminals may target specific individuals, known as spear phishing, or cast a wide net to attempt to catch as many victims as possible.»

In the AV-Comparatives test, which evaluated phishing-page detection and false-positive rates, NordVPN’s Threat Protection Pro ranked fourth among security products, blocking 92% of the 250 phishing URLs tested. The three highest-scoring products were:

- Avast Free Antivirus 95%

- Norton Antivirus Plus 95%

- Webroot SecureAnywhere Internet Security Plus 93%
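To put those percentages in terms of actual web pages, the short sketch below works backward from each score to the number of blocked URLs it could represent. It assumes the published figures are rounded to the nearest whole percent, which the lab results quoted here do not state, and the function names are made up for the example.

```python
def rounded_percent(blocked: int, total: int) -> int:
    """Percentage of blocked URLs, rounded half up, using integer math only."""
    return (200 * blocked + total) // (2 * total)


def possible_blocked_counts(percent: int, total: int) -> list[int]:
    """Every whole-number block count that rounds to the reported percentage."""
    return [k for k in range(total + 1) if rounded_percent(k, total) == percent]


TOTAL_URLS = 250  # number of phishing URLs in the AV-Comparatives test

# Scores as reported; whole-percent rounding is an assumption.
reported = [
    ("Avast Free Antivirus", 95),
    ("Norton Antivirus Plus", 95),
    ("Webroot SecureAnywhere Internet Security Plus", 93),
    ("NordVPN Threat Protection Pro", 92),
]

for product, percent in reported:
    counts = possible_blocked_counts(percent, TOTAL_URLS)
    print(f"{product}: {percent}% fits {counts[0]} to {counts[-1]} blocked URLs")
```

Under that rounding assumption, 95% corresponds to 237 or 238 blocked pages and 92% to between 229 and 231, so the gap between first and fourth place is fewer than 10 URLs out of 250.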

On its website, NordVPN says Threat Protection Pro protects devices even when they are not connected to a VPN. The company says the software can thwart phishing attempts and prevent malware from infecting your computer in several ways — alerts about malicious websites; blocking cookies that can learn about your browsing habits; and stopping pop-ups and intrusive ads.

According to cybersecurity company Hoxhunt, the total volume of phishing attacks has skyrocketed by 4,151% since the advent of ChatGPT in 2022, with a cost to companies of $4.88 million per phishing breach.

With the rapid expansion of AI across the internet, the volume of phishing attacks is growing. Some AI-generated phishing scams are able to get past email filters, but Hoxhunt found that only 0.7% to 4.7% of phishing emails were written by AI. However, cybercriminals are using AI to expand their phishing tools. AI can create deepfake videos and voice-impersonation phone calls to redirect payments or gain access to sensitive data.

AI scams will be tough to root out. CNET reported that 62% of executives had been targets of phishing attempts, including voice- and text-based scams, with 37% reporting invoice or payment fraud, all from generative AI.

Although NordVPN’s product might be effective at preventing malware from infecting your computer, it can’t eliminate malware that may already be on it. To clean up those issues, CNET lists the best antivirus software of 2026 and the best free antivirus apps. Those products can scan your computer and hopefully eradicate any malware and viruses that might be there.



The 3 iOS Features You Definitely Aren’t Using (but Are Silently Draining Your Battery)

If you find that your phone loses battery too fast, you may just need to disable these features to solve the problem.

It’s 2026, and if you’re constantly toggling on «Low Power Mode» just to survive a commute, you may as well be carrying around a brick. While it’s true that lithium-ion batteries naturally degrade over time, most people are draining their «juice» prematurely by leaving on high-performance features they don’t even need.

Your iPhone has a few key settings that drain your battery in the background. The good news is, you can turn them off. Instead of watching your battery percentage plummet at the worst possible moment, a few simple tweaks will give you hours of extra life.

Before you even think about buying a new phone, check your Battery Health menu (anything above 80% is decent) and then turn off these three settings. It’s the easiest way to make your iPhone battery last longer, starting right now.


Turn off widgets on your iPhone lock screen

All the widgets on your lock screen force their apps to run automatically in the background, constantly fetching data to update the information the widgets display, like sports scores or the weather. Because those apps never stop running, they continuously drain power.

If you want to help preserve some battery on iOS 18, the best thing to do is simply avoid widgets on your lock screen (and home screen). The easiest way to do this is to switch to another lock screen profile: Press your finger down on your existing lock screen and then swipe around to choose one that doesn’t have any widgets.

If you want to just remove the widgets from your existing lock screen, press down on your lock screen, hit Customize, choose the Lock Screen option, tap on the widget box and then hit the minus («-») button on each widget to remove it.

Reduce the motion of your iPhone UI

Your iPhone user interface has some fun, sleek animations. There’s the fluid motion of opening and closing apps, and the burst of color that appears when you activate Siri with Apple Intelligence, just to name a couple. These visual tricks help bring the slab of metal and glass in your hand to life. Unfortunately, they can also reduce your phone’s battery life.

If you want subtler animations across iOS, you can enable the Reduce Motion setting. To do this, go to Settings > Accessibility > Motion and toggle on Reduce Motion.

Switch off your iPhone’s keyboard vibration

Surprisingly, the iPhone keyboard couldn’t vibrate as you type until iOS 16 added a feature called «haptic feedback.» Instead of just hearing click-clack sounds, you feel a vibration with each key press, providing a more immersive experience as you type. According to Apple, the very same feature may also affect battery life.

According to this Apple support page about the keyboard, haptic feedback «might affect the battery life of your iPhone.» No specifics are given as to how much battery life the keyboard feature drains, but if you want to conserve battery, it’s best to keep this feature disabled.

Fortunately, it is not enabled by default. If you’ve enabled it yourself, go to Settings > Sounds & Haptics > Keyboard Feedback and toggle off Haptic to turn off haptic feedback for your keyboard.

For more tips on iOS, read about how to access your Control Center more easily and why you might want to only charge your iPhone to 95%.


These Are the Weirdest Phones I’ve Tested Over 14 Years

These phones tried some wild things. Not all of them succeeded.

I’ve been a CNET journalist for over 14 years, testing everything from electric cars and bikes to cameras and, er, magic wands. But it’s phones that have always been my main focus and I’ve seen a lot of them come and go in my time here. Sure, we’ve had the mainstays like Apple and Samsung, but I’ve also seen the rise of brands like Xiaomi and OnePlus, while once-dominant names like BlackBerry, HTC and LG have vanished from the mobile space.

I’ve seen phones arrive with such fanfare that they changed the face of the mobile industry, while others simply trickled into existence and disappeared just as uneventfully. But it’s the weird ones that stick in my memory. Those devices that tried to be different, that dared to offer features we didn’t even know we wanted or simply the ones that aimed to be quirky for the sake of quirky. Like someone who thinks an interesting hat is the same as having a personality.

Here then are some of the weirdest phones I’ve come across in my mobile journey at CNET. Better yet, I still have them in a big box, so I was able to dig them out and take new photos — though not all of them still work. Let’s start with a doozy.

BlackBerry Passport

At the height of its power RIM’s BlackBerry was one of the most dominant names in mobile. It was unthinkable then that anything could unseat the goliath, let alone that it would fade into total nonexistence. The once juicy, ripe BlackBerry withered and died on the bush, but not without a few interesting death rattles on its way.

My pick from the company’s end days is the Passport from 2014, notable not just for its physical keyboard but its almost completely square design. The rationale behind this, according to its maker, was that business types just really love squares. A Word document, an Excel spreadsheet, an email: all square (ish) and all able to be viewed natively on the Passport’s 4.5-inch display with its 1:1 aspect ratio. Let’s not forget that all Instagram posts at that time were also square so it had that going for it too. YouTube, not so much.

In theory it’s a sound idea. In practice the square design made it awkward to use, as the physical keyboard was too wide and narrow. Its BlackBerry 10 software, especially the app availability, lagged behind what you’d get from Android at the time. BlackBerry quickly ditched the new shape. After trying to claw back some credibility with its Android phones — including the stupidly named Priv, a phone I quite liked — and by bringing on singer Alicia Keys as Global Creative Director (because BlackBerry phones had keys, get it?) the company stopped making its own phones in 2016.

YotaPhone 2

You’d be forgiven for having never heard of this phone or its parent company, Yota. Based in Russia, Yota made two phones: the creatively named YotaPhone in 2012 and the similarly inspired YotaPhone 2 in 2014, pictured above. Both were unique in the mobile world for their use of a second display on the rear. From the front, these phones looked and operated like any other generic Android phone. Flip them over though and you’d get a 4.3-inch E Ink display.

The idea was that you’d use your Android phone as normal for things like web browsing, gaming or watching videos, but you’d switch to the rear display if you wanted to read ebooks or simply have it propped up to show incoming notifications. E Ink displays use almost no power, so it made a lot of sense to preserve battery life by viewing «slow» content on the back.

The reality though is that beyond ebooks — which aren’t great to read on such a tiny screen anyway — there’s very little anyone might want to use an E Ink display for when out and about. It was difficult to operate, too, thanks to a slow processor and clunky software. After just two generations of YotaPhones, the company went into liquidation.

HTC ChaCha

Remember when Facebook was the cool place to be instead of just the place your parents and their friends go to publicly air their most troubling of opinions? When I was at university, instead of trading phone numbers when you met someone, the default thing was to add each other on Facebook and then begin poking each other. Facebook was so ubiquitous at the time that it was simply the way every single person I knew communicated.

Keen to capitalise on Zuckerberg’s social media success, HTC brought out the ChaCha in 2011. The phone came with an utterly ludicrous name and a dedicated Facebook button on the bottom edge. Tapping this would immediately bring up your Facebook page, allowing you to post the lyrics to Rebecca Black’s Friday, ask what Fifty Shades of Grey is about or do whatever else it was we were all up to in 2011.

Facebook might still be around in one form or another, but HTC abandoned its phone-making business back in 2018. Unsurprisingly, phones with dedicated hardware buttons tied to social media haven’t caught on.

Sirin Labs Finney U1

«Bro!» I hear you shout, all-too loudly. «BRO! You’ve got to check out what my Bitcoin is doing!» You’d then show me your phone and I’d watch while your crypto account plummeted, rebounded and plummeted again over the course of 12 seconds. The phone you’d be showing me, of course, would be the Sirin Labs Finney, a 2019 phone specifically targeted at crypto bros who wanted a device that would perfectly match their high-living, high-fiving crypto-trading lifestyle.

At its core, the Finney is just another Android phone, but a hidden second screen pops up from the back of the phone, with the sole purpose of giving you secure access to your crypto wallet. The phone had a whole host of security features to ensure that only you could access your Bitcoin or Ethereum, and it allowed you to send and receive cryptocurrency without having to use a third-party online platform. Apparently that was a good thing.

If you were entrenched in the crypto world, this phone might have been the dream. But the wallet wasn’t easy to use and the phone was expensive, thanks to the cost of that second screen. Sirin Labs stopped making phones soon after and the mobile industry learned an important lesson about not developing hyper-niche devices that aren’t even that well-suited for the handful of customers that might be interested.

Planet Computers Gemini PDA

Half phone, half laptop, all productivity. The Gemini PDA by UK-based mobile startup Planet Computers was a clamshell device in 2018 with a large (at the time) 5.99-inch display and a full qwerty keyboard. It was basically a slightly more modern interpretation of a PDA, like 1998’s Psion 3MX, in that it was effectively a tiny laptop that would fold up and fit in your pocket. The full keyboard allowed you to type away comfortably on long emails or documents while the regular Android software on the top half meant it also functioned like any other phone — apps, games, phone calls, whatever.

It had 4G connectivity for fast data speeds and a later model even got an update to 5G. But, like the BlackBerry Passport, its focus on business-folk and productivity above all else meant it was a niche product that failed to garner enough appeal to succeed. It didn’t help that it was utterly enormous and fitting it in a jeans pocket was basically impossible, so it didn’t impress either as a laptop or as a phone.

LG G5

LG remains a huge name in the tech industry today thanks to its TVs and appliances, but it once tried to be a big player in the phone world, too. I liked LG’s phones. They were quirky and often tried weird things, which kept my days as a reviewer interesting, perhaps none more so than the LG G5 in 2016.

LG called the G5 «modular,» meaning that the bottom chin of the phone snapped off allowing you to attach different modules such as a camera grip or an audio interface. Like many items on this list I can say that it’s a nice idea in theory, but in practice the phone fell short. Swapping out modules meant removing the battery, which of course meant restarting your phone every time you wanted to use the camera grip.

It was an inelegant solution to a problem that never needed to exist. But its bigger issue was that the camera grip and audio interface were the only two modules LG actually made for the phone. It’s as though the company had this fun notion in creating a phone that can transform according to your needs but then forgot to assign anyone to come up with any ideas on what to do with it. As a result, the end product was uninspiring, over-engineered and expensive.

Samsung Galaxy Note

Samsung’s Galaxy Note series helped transform the mobile industry. It literally stretched the boundaries of phones, encouraging larger and larger screens — even creating the unpleasant and mercifully short-lived term «phablet.» But the first-generation model in 2011 was controversial, mostly due to what was then considered its enormous size.

At 5.3 inches, it was significantly bigger than almost any other phone out there, including Samsung’s own Galaxy S2 — which, at a measly 4.3 inches, paled into insignificance against the mighty Note. It was mocked for being so huge, with memes appearing online poking fun at people holding it up when making calls. And while times have changed and we now have Samsung’s 6.9-inch Galaxy S25 Ultra, the original Note’s boxy aspect ratio meant it was actually wider than the S25 Ultra. So even by today’s standards it’s big.

It was also among the first phones to come with its own stylus shoved into its bottom. It’s a feature that few mobile companies have mimicked, but Samsung kept it as a differentiator on its later Note models before incorporating it into its flagship S line starting with the S22 Ultra.

Nokia Lumia 1020

Nokia’s Lumia 1020 was my absolute favorite phone for quite some time after its launch in 2013. And it’s because of its weirdness.

Nokia had an amazing history of bonkers mobiles — 2004’s 7280 «lipstick phone,» for example — and while the Lumia range was much more sedate, the 1020 had a few things that made it stand out. First, it ran Windows Phone, Microsoft’s brief and unsuccessful attempt to launch a rival to Android and iOS. A rival that I happened to quite like.

It was also made of polycarbonate, with a smoothly rounded unibody design that strongly contrasted the angular metal, plastic and glass designs of almost all other phones launching at that time. Its look was unlike anything else on sale, and I loved it.

But the main thing I loved was its camera. With a 41-megapixel sensor, Carl Zeiss lens, raw image capture and optical image stabilization, the Lumia 1020 packed the best camera specs of any phone I’d ever seen. It made the phone a true standout product, especially for photographers like me who wanted an amazing camera with them at all times, but didn’t want to have to carry both a phone and a compact digital camera.

While incredible image quality from a phone is a given in almost all camera phones in 2026, the Lumia 1020 was an early pioneer in what could be achieved from a phone camera.

LG G4

LG, twice in one list? Oh yes, my friend, because the G5 seen above was not the first time LG went weird. Launched in 2015, the LG G4 had two main features that raised a few eyebrows. Most notable was LG’s decision to wrap the phone in real leather. Yes, real actual leather. Like what you’d get when you peel a cow. It even had stitching down the back, making it look like a handbag or a boot.

While it’s not a phone for vegans, I actually liked the look, especially as real leather — even the really thin stuff LG used on the G4 — naturally wears over time, gaining scuffs and scratches that give each phone a unique patina. It’s why I love my old leather Danner boots, and it’s why a vintage, worn-in leather jacket will almost always look better than a brand new one. Still, with leather being an expensive — and arguably controversial — material to use on a phone, it’s no surprise LG didn’t return to this idea.

But the leather isn’t the only weird thing about the phone. The G4 was among a small number of phones released around that time that experimented with curved displays. It was gently bent into a banana shape, the theory being that it makes watching videos more immersive, as is the case with curved screens in movie theaters. The problem is that movie screens are immense, so that curve makes sense. On a 5.5-inch phone like the G4, the curve was barely noticeable and only really served to push the price up.

Motorola Moto X and Moto Maker

I’ve just pointed out how weird the LG G4 was for using leather and now I’m pointing out another phone that, as you can see in the image above, is also wrapped in leather. But the weird thing here isn’t that the Motorola Moto X came in leather — it’s that I personally got to choose that it came in leather.

With the Moto X in 2013, Motorola launched a service called Moto Maker that allowed you to customize your phone in a wild variety of ways. From different-colored backs and multicolored accents around the camera and speakers through to using materials including leather and even various types of wood, there were loads of options to make your Moto X look unique. Each phone would then be made to order and you could even have it personalised with laser etching and provide your Google account for it to be prelinked on arrival.

If custom-making phones with a vast number of potential options en masse sounds like an absolute logistical nightmare then you’re on the same page as Motorola eventually found itself. Moto Maker only existed for a few years before the company retired its customization service.

Samsung Galaxy Fold

I’m ending on a wildcard addition with the original Galaxy Fold. It’s a wildcard because Samsung’s Fold and Flip range are now up to number seven and we’ve got foldable devices from almost all major Android manufacturers. Though still not Apple.

While the original Fold might have kicked off the foldable revolution, there’s no question it was a weird phone. I was among the first in the world to test it when it launched in 2019 and while I was certainly impressed by the bendy display, its hinge felt weird and «snappy» to use. The outer display was, let’s face it, terrible.

On paper its 4.6-inch size is reasonable, but it’s so tall and narrow that it was borderline unusable for anything more than checking incoming notifications. Trying to type on it meant whittling down your thumbs to pointy nubs so I spent most of my time interacting with the phone’s much bigger internal screen. Cut to today when the Galaxy Z Fold 7’s outer screen measures a healthier 6.7 inches and as a result can function like any regular smartphone, with the bigger inside screen only required when you want more immersive content.

Looking back at the original Fold and its bizarre proportions, it’s honestly a surprise that Samsung persisted with the format. But I’m glad it did.
