Technologies

WWDC 2023 Biggest Reveals: Vision Pro Headset, iOS 17, MacBook Air and More

From its expected AR/VR headset to new Macs to software updates like iOS 17, here’s what Apple unveiled at WWDC.

Apple’s Worldwide Developers Conference kicked off on Monday with a keynote address showing everything coming to the company’s lineup of devices. WWDC is typically where the company gives us a first look at new software for iPhones, iPads, Apple Watches and Macs. But this year, Apple revealed a bevy of new hardware, too.

The big announcement was the debut of the Apple Vision Pro headset, a «new kind of computer» as Tim Cook put it in the presentation. But with MacBook Air and other Mac hardware announcements — including new silicon — as well as software upgrades, no corner of Apple’s ecosystem lacked for updates.


For a detailed summary of everything announced as it happened, give our live blog a look. Read on for the highlights of the presentation and links to our stories.

Apple Vision Pro, a new headset

The Apple Vision Pro is the company’s answer to the AR and VR headset race. It’s a personal display on your face with all the interface touches you’d expect from Apple, with an operating system that looks like a combination of iOS, MacOS and TVOS. And it’s not going to come cheap: The Apple Vision Pro retails for $3,499 and will start shipping early next year.

The device itself looks like other headsets, though the glass front hides cameras and even a curved OLED outer display (more on why later). The headset is secured to the wearer’s head with a wide rear band (no over-the-top strap), though as rumors suggested, there’s an external battery pack that connects over a cable and sits in your pocket. There’s a large Apple Watch-style digital crown on the right side that lets you dial immersion (the outside world) in and out.

The Vision Pro has three-element lenses that enable 4K resolution, though you can swap out lenses, presumably for different vision capabilities. Audio pods are embedded within the band to sit over your ears, and «audio ray tracing» maps sound to your position. A suite of lidar and other sensors on the bottom of the headset tracks hand and body motions.

Technically speaking, the Vision Pro is a computer, with an M2 chip found in Apple’s highest-end computers. But a new R1 chip processes all the other headset inputs from 12 cameras, five sensors and six microphones and sends them to the M2 to reduce lag and get new images to displays within 12 milliseconds. The Vision Pro runs the new VisionOS, which uses iOS frameworks, a 3D engine, foveated rendering and other software tricks to make what Apple calls «the first operating system designed from the ground up for spatial computing.»

Interior cameras track your facial motion, which is projected to others when on FaceTime and other video chatting apps.

Apple Vision Pro can scan your face to create a digital 3D avatar.

To keep users from being cut off from the outside world, the EyeSight feature uses inside-pointing cameras and the headset’s outer display to show your eyes — essentially showing people around you what your eyes are focusing on. If you’ve dialed your immersion all the way on, your eyes will disappear on the outside screen. But you’re not totally cut off. While wearing the headset, if someone approaches you they’ll filter in to your vision.

The interface uses hand motions to control the device, though there are also voice controls. It’s tough to tell how these controls will work, and we’d expect that users will need some time to adapt to not using a mouse and keyboard.

This isn’t just an entertainment device. Apple is pitching its first new product in eight years as a work-from-home and travel device, essentially letting you open however many windows you want. It can work in the office as a display for Macs, and supports Apple’s Magic Keyboard and Trackpad devices.

The Vision Pro has Apple’s first 3D cameras and can take spatial photos, providing 3D depth with binaural audio to experience moments with more immersion. Of course, this spatial experience extends to movies in a way that’s «impossible to represent on a 2D screen,» Apple said during its presentation, continually teasing an experience that people won’t fully understand without trying out a Vision Pro. Disney CEO Bob Iger took the WWDC stage to vouch for the headset, and followed with a short video showing interactive 3D experiences that Vision Pro users will soon get on the Disney Plus streaming service.

Now that Apple has all these new cameras and eye-tracking, it’s introduced a way to secure your data and purchases with Optic ID, which uses your eyes as an optical fingerprint for authentication. Camera data is processed at the system level, so what the headset sees isn’t fed up to the cloud.


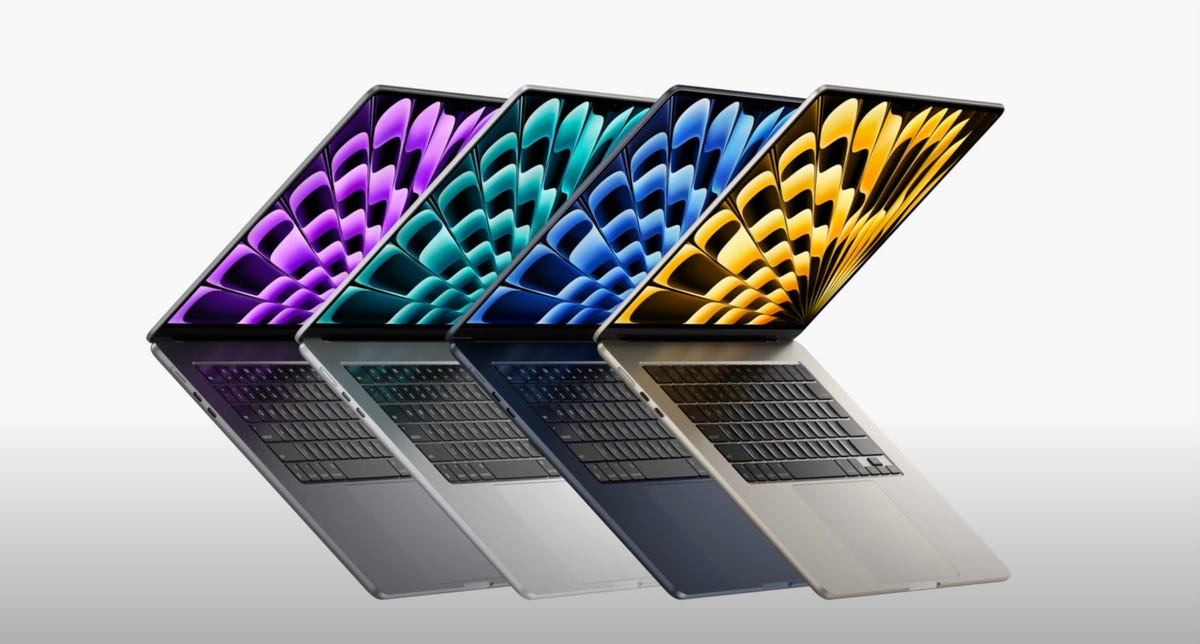

New MacBook Air 15

As was rumored, Apple announced a new MacBook Air 15, a larger version of the MacBook Air 13 that launched last year.

The MacBook Air 15 is powered by an M2 chip and gets up to 18 hours of battery life. Configurations can come with up to 24GB of memory and up to 2TB of storage, retailing for $1,299 to start (or $1,199 with a student discount).

The 15-inch model is 11.5mm thick and weighs 3.3 pounds, and has two Thunderbolt ports and a MagSafe charging connector, along with a 3.5mm headphone jack. It has an above-display 1080p camera in a notch, three microphones and six speakers with force-canceling subwoofers.


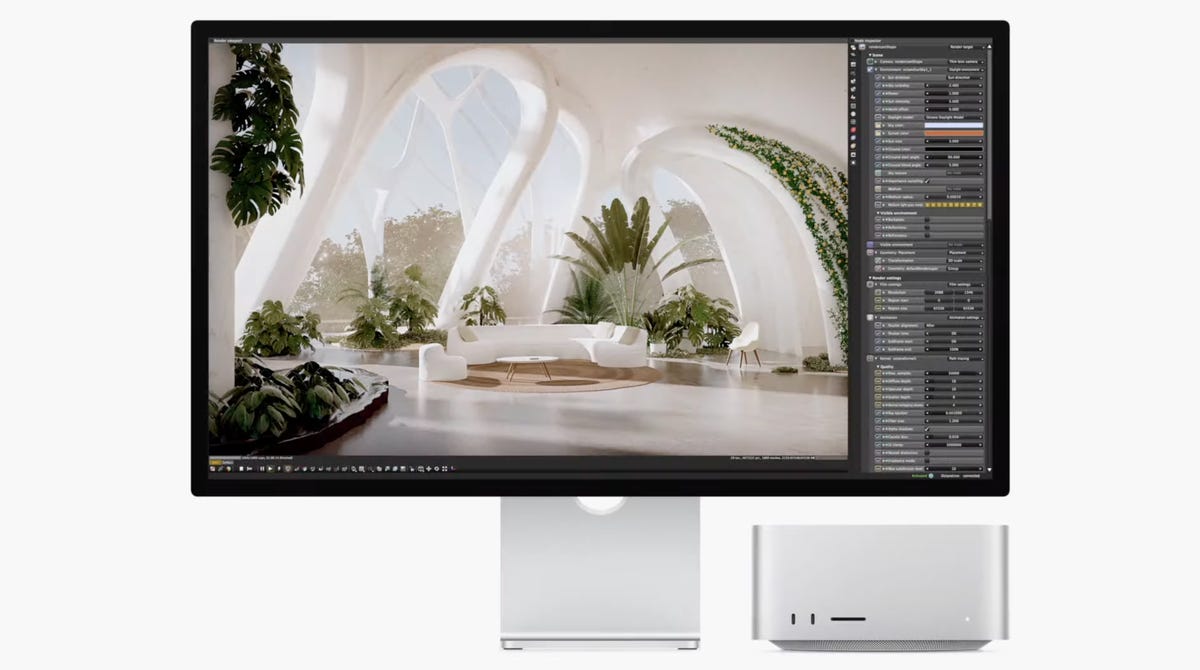

Mac Studio with M2

A new Mac Studio has landed and it comes with Apple’s latest silicon. The new model comes with an M2 Max chipset, or the new M2 Ultra chipset — essentially two M2 Max chips combined, which enables up to 192GB of memory.

The M2 Ultra stole the spotlight with a 24-core CPU and the ability to stream 22 videos at 8K ProRes resolution at once. It can also support up to six Apple Pro Display XDR monitors at once.

The Mac Studio starts at $1,999 and will be available starting next week.

Mac Pro with M2 Ultra

Apple wasted no time announcing that its new high-end desktop Mac Pro model would get the M2 Ultra as well. The new Mac Pro gets the same M2 Ultra upgrades as the Studio, including support for up to 192GB of RAM.

The Mac Pro has eight Thunderbolt ports, two HDMI ports and dual 10Gb Ethernet ports, with six open PCIe Gen 4 slots. The new Mac Pro comes in both upright tower and horizontal rack orientations.

The new Mac Pro starts at $6,999 and will be available starting next week.

iOS 17

iOS 17 brings a ton of quality-of-life improvements, and the iOS 17 developer beta is available now to download. Finally, you can use more filters while searching within your Messages. In addition to pressing and holding on messages to reply, you can also simply swipe on specific messages to reply to them, and voice notes will be transcribed.

Say goodbye to gray screens when you get calls — now you can set full-screen photos or Memoji to contacts when they call you. And if someone leaves a voicemail, you can see it transcribed in real-time to help you screen calls if you don’t recognize a caller.


A new safety feature, Check In, sends a note to a trusted contact when you reach a location — like when you make it home safe after late-night travel. If it’s taking you longer to get to a destination, you’ll be prompted to extend the timer rather than alert your contact. It also shares your battery and signal status. Check In is end-to-end encrypted.

Last year, Apple introduced an iOS feature to let you copy photo subjects and paste them as stickers — and now you can do that with video to essentially create GIFs to share with friends or even as responses to Messages. All emoji are now shareable stickers, too.

AirDrop has been a helpful tool to send files between Apple devices, but now you can also share your contact info with NameDrop. You can choose what you want to share between email addresses, phone numbers and more.

Also, say goodbye to relying on Notes to jot down your thoughts: Journal is a new secure app for personal recollections. Apple is pitching it as a gratitude exercise, but iOS will also automatically add activities, like songs you’ve listened to and workouts you’ve done, to your personal log.

Apple Maps got an update that Android owners have had for years — the ability to use Maps offline, especially helpful when you’re outside network range while outdoors or conserving battery.

A new mode, StandBy, converts an iPhone to an alarm clock when it’s charging and rotated horizontally. It gets smart interactions like a large visible clockface along with calendar and music controls.

Lastly, as was rumored, you won’t have to say «Hey Siri» anymore. Just saying «Siri» will bring up the voice assistant.


iPadOS 17

iPadOS 17 brings more controls to widgets, which don’t just show more info at a glance — they have more interactive buttons to let you control your smart home or play music.

iPadOS 17 is bringing more interactive personal data to the Health app, including richer sleep and activity visualization.

The next iPadOS update brings quality-of-life upgrades like more lock screen customization and multiple timers (helpful when cooking), as well as improvements to the follow-you-during-video-calls Stage Manager feature for iPad selfie cameras.

With all the screen space on an iPad, Apple expanded what you can do with PDFs, which can be autofilled and signed from within iPadOS. iPad owners can collaborate in real time while tweaking PDFs, and the files can now be stored in the Notes app.

MacOS Sonoma

MacOS Sonoma, named after one of California’s most famous wine-producing areas, continues the WWDC theme of adding more widget functionality.

Sonoma also has some gaming upgrades like a new gaming mode that prioritizes CPU and GPU to improve frame rate. Apple is paying attention to immersion with lower latency for wireless controllers and speakers or headsets. The company is also courting developers with game dev kits and Metal 3. But the biggest gaming announcement is that legendary game creator Hideo Kojima’s opus Death Stranding is coming to Macs later this year. «We are actively working to bring our future titles to Apple platforms,» Kojima said during the WWDC presentation.

On the business side, Mac has improved videoconferencing with an overlay that shows slide controls while you’re presenting. Apple also introduced new reactions — like ticker-tape falling for a congratulations — that can be triggered with gestures.

PassKey, the end-to-end encrypted password chain tech Apple introduced last year, can now be shared with other contacts, and everyone included can edit and update passwords to be shared with the group.

Safari has security updates including locking the browser window when in private browsing mode, and profiles to separate accounts, logins and cookies between work and personal use.

AirPods and audio upgrades

Apple has a handful of improvements to its audio products. AirPods will get Adaptive Audio, which combines noise-canceling with intelligent audio to drown out annoying background noise while letting through important sounds — like car horns or bike bells. It’ll also pass through voices in case someone starts a conversation in person.

And it’s far easier to digitally take control of the music with SharePlay while somebody with CarPlay is driving — a prompt will go out to others in the car asking if they want to take control.


WatchOS 10

Yet again, widgets make an appearance with WatchOS 10, the next operating system upgrade for Apple Watches. Widgets are now accessible in a stack from your home screen — just use the digital crown to scroll between them.

Apple has focused on cycling this year, improving workouts by showing functional threshold data, an important metric for cyclists. The watch also connects over Bluetooth to sensors on bikes, and a new mode lets you use your iPhone as a full-screen display while cycling.

Hikers, rejoice! WatchOS 10 has upgraded its compass with cellular connection waypoints, telling you which direction to walk and how far you have to go before you can get carrier reception. It also shows SOS waypoints and adds an elevation view to the 3D compass view. There’s also a neat topographical view.

Apple is also expanding its Mindfulness app to log how you’re feeling in State of Mind, choosing between color-coded emotional states. You can even access this from your iPhone in case you’re away from your Apple Watch.

Health focuses for 2023

On top of the WatchOS Mindfulness updates, Apple introduced a neutral survey to self-report mood and mental health, which acts as a sort of non-medical way to indicate whether you may want to get professional help.

Apple also has a new cross-device Vision Health focus in the Health app, and a new feature on the Apple Watch measures daylight time spent outside to watch for myopia in younger wearers. Screen Distance uses the TrueDepth camera on iPads to warn people if they’re too close to the screen.


Today Only You Can Get the Super Mario Galaxy 2-Pack at $14 Off

Enjoy two of Nintendo’s best Mario games in one package with a decent amount off.

Best Buy has a deal on at the moment that knocks the price of Super Mario Galaxy and Super Mario Galaxy 2 on Nintendo Switch down to $56. That’s a $14 discount, which is a lot on a first-party Nintendo game.

Nintendo Switch games are notorious for never really going down in price, which makes every deal that happens worth at least considering. Last time this was on sale, it was for $59; this is $3 cheaper than that, making the value even better. That’s two all-time-classic games for $28 each, basically, which is fantastic.

The only problem with this is that it’s a Best Buy daily deal, which means that it runs out tonight. So, if you do want to pick this up at this price, you’re going to need to be quick.

In his review, CNET’s Scott Stein was a big fan of both revamped Mario titles included in this bundle, but less so the $70 asking price. This deal goes a long way to helping fix that problem and gives you the chance to add two classic Mario titles to your collection at a discount.

Originally released on the Wii, both Super Mario Galaxy and Galaxy 2 have been updated with higher-resolution visuals, an improved interface and new content, so there’s never been a better time to play them. And unlike the originals, you can play these Switch games anywhere and at any time.

Why this deal matters

Mario games are like no other, and they’re great for adults and kids alike. This bundle includes two of the best, and right now you can pick it up at a price that makes them an even better buy than they already were. Whether you played them the first time around, you’re looking to see what all the fuss was about or want to introduce them to a new generation of Mario fans, this is the deal for you.


I’ve Seen It With My Own Eyes: The Robots Are Here and Walking Among Us

The «physical AI» boom has created a world of opportunity for robot makers, and they’re not holding back.

It’s been 24 years since CNET first published an article with the headline The robots are coming. It’s a phrase I’ve repeated in my own writing over the years — mostly in jest. But now in 2026, for the first time, I feel confident in declaring that the robots have finally arrived.

I kicked off this year, as I often do, wandering the halls of the Las Vegas Convention Center and its hotel-based outposts on the lookout for the technology set to define the next 12 months. CES has always been a hotbed of activity for robots, but more often than not, a robot that makes a flashy Vegas debut doesn’t go on to have a rich, meaningful career in the wider world.

In fact, as cute as they often are and as fun as they can be to interact with on the show floor, most robots I’ve seen at CES over the years amount to little more than gimmicks. They either come back year after year with no notable improvements or are never seen or heard from again.

In more than a decade of covering the show, I’ve been waiting for a shift to occur. In 2026, I finally witnessed it. From Hyundai unveiling the final product version of the Boston Dynamics Atlas humanoid robot in its press conference to Nvidia CEO Jensen Huang’s focus on «physical AI» during his keynote, a sea change was evident this year in how people were talking about robots.

«We’ve had this dream of having robots everywhere for decades and decades,» Rev Lebaredian, Nvidia’s vice president of Omniverse and simulation, told me on the sidelines of the chipmaker’s vast exhibition at the glamorous Fontainebleau Hotel. «It’s been in sci-fi as long as we can remember.»

Throughout the show, I felt like I was watching that sci-fi vision come to life. Everywhere I went, I was stumbling upon robot demos (some of which will be entering the market this year) drawing crowds, like the people lining up outside Hyundai’s booth to see the new Atlas in action.

So what’s changed? Until now, «we didn’t have the technology to create the brain of a robot,» Lebaredian said.

AI has unlocked our ability to apply algorithms to language, and it’s being applied to the physical world, changing everything for robots and those who make them.

The physical AI revolution

What truly makes a robot a robot? Rewind to CES 2017: I spent my time at the show asking every robotics expert that question, sparked by the proliferation of autonomous vehicles, drones and intelligent smart home devices.

This exercise predated the emergence of generative AI and models such as OpenAI’s ChatGPT, but already I could see that by integrating voice assistants into their products, companies were beginning to blur the boundaries of what could be considered robotics.

Not only has the tech evolved since that time, but so has the language we use to talk about it. At CES 2026, the main topic of conversation seemed to be «physical AI.» It’s an umbrella term that can encompass everything from self-driving cars to robots.

«If you have any physical embodiments, where AI is not only used to perceive the environment, but actually to take decisions and actions that interact with the environment around it … then it’s physical AI,» Ahmed Sadek, head of physical AI and vice president of engineering at chipmaker Qualcomm, told me.

Autonomous vehicles have been the easiest expression of physical AI to build so far, according to Lebaredian, simply because their main challenge is to dodge objects rather than interact with them. «Avoiding touching things is a lot easier than manipulating things,» he said.

Still, the development of self-driving vehicles has done much of the heavy lifting on the hardware, setting the stage for robot development to accelerate at a rapid pace now that the software required to build a brain is catching up.

For Nvidia, which worked on the new Atlas robot with Boston Dynamics, and Qualcomm, which announced its latest robotics platform at CES, these developments present a huge opportunity.

But that opportunity also extends to start-ups. Featured prominently at the CES 2026 booth of German automotive company Schaeffler was the year-and-a-half-old British company Humanoid, demonstrating the capabilities of its robot HMND 01.

The wheeled robot was built in just seven months, Artem Sokolov, Humanoid’s CEO, told me as we watched it sort car parts with its pincerlike hands. «We built our bipedal one for service and household much faster, in five months,» Sokolov added.

Humanoid’s speed can be accounted for by the AI boom plus an influx of talent recruited from top robotics companies, said Sokolov. The company has already signed around 25,000 preorders for HMND 01 and completed pilots with six Fortune 500 companies, he said.

This momentum extends to the next generation of Humanoid’s robots, where Sokolov doesn’t foresee any real bottlenecks. The main factors dictating the pace will be improvements in AI models and making the hardware more reliable and cost effective.

Humanoid hype hits its peak

Humanoid the company might have the rights to the name, but the concept of humanoids is a wider domain.

By the end of last year, the commercialization of humanoid robots had entered an «explosive phase of growth,» with a 508% year-on-year increase in global market revenue to $440 million, according to a report released by IDC this month.

At CES, Qualcomm’s robot demonstration showed how its latest platform could be adapted across different forms, including a robotic arm that could assemble a sandwich. But it was the humanoids at its booth that caused everyone to pull out their phones and start filming.

«Our vision is that if you have any embodiment, any mechatronic system, our platform should be able to transform it to a continuously learning intelligent robot,» said Qualcomm’s Sadek. But, he added, the major benefit of the humanoid form is its «flexibility.»

Some in the robotics world have criticized the focus on humanoids, due to their replication of our own limitations. It’s a notion that Lebaredian disagrees with, pointing out that we’ve designed our world around us and that robots need to be able to operate within it.

«There are many tasks that are dull, dangerous and dirty — they call it the three Ds — that are being done by humans today, that we have labor shortages for and that this technology can potentially go help us with,» he said.

We already have many specialist robots working in factories around the world, Lebaredian added. With their combination of arms, legs and mobility, humanoids are «largely a superset of all of the other kinds of robots» and, as such, are perfect for the more general-purpose work we need help with.

The hype around robots — and humanoids in particular — at CES this year felt intense. Even Boston Dynamics CEO Robert Playter acknowledged this in a Q&A with reporters moments after he unveiled the new Atlas on stage.

But it’s not just hype, Playter insisted, because Boston Dynamics is already demonstrating that it can put thousands of robots in the market. «That is not an indication of a hype cycle, but actually an indication of an emerging industry,» he said.

A huge amount of money is being poured into a rapidly growing number of robotics start-ups. The rate of this investment is a signal that the tech is ready to go, according to Nvidia’s Lebaredian.

«It’s because, fundamentally, the experts, people who understand this stuff, now believe, technically, it’s all possible,» he said. «We’ve switched from a scientific problem of discovery to an engineering problem.»

Robot evolution: From industry to home

From what I observed at the show, this engineering «problem» is one that many companies have already solved. Robots such as Atlas and HMND 01 have crossed the threshold from prototype to factory ready. The question for many of us is when these robots will be ready for our homes.

Playter has openly talked about Boston Dynamics’ ambitions in this regard. He sees Atlas evolving into a home robot — but not yet. Some newer entrants to the robotics market — 1X, Sunday Robotics and Humanoid among them — are keen to get their robots into people’s homes in the next couple of years. Playter cautions against this approach.

«Companies are advertising that they want to go right to the home,» he said. «We think that’s the wrong strategy.»

The reasons he listed are twofold: pricing and safety. Playter echoed a sentiment I’ve heard elsewhere: that the first real use for home humanoid robots will be to carry out care duties for disabled and elderly populations. Perhaps in 20 years, you will have a robot carry you in and out of bed, but relying on one to do so when you’re in a vulnerable state poses a «critical safety issue,» he said.

Putting robots in factories first allows people to work closely with them while keeping a safe distance, allowing those safety kinks to be ironed out. The deployment of robots at scale in industrial settings will also lead to mass manufacturing of components that will, at some point, make robots affordable for the rest of us, said Playter (unlike 1X’s $20,000 Neo robot, for example).

Still, he imagines the business model will be «robots as a service,» even when they do first enter our homes. Elder care itself is a big industry with real money being spent that could present Boston Dynamics with a market opportunity as Atlas takes its first steps beyond the factory floor.

«I spent a lot of money … with my mom in specialty care the last few years,» he said. «Having robots that can preserve autonomy and dignity at home, I think people will actually spend money — maybe $20K a year.»

The first «care» robots are more likely to be companion robots. This year at CES, Tombot announced that its robotic Labrador, Jennie, who first charmed me back at the show in 2020, is finally ready to go on sale. It served as yet another signal to me that the robots are ready to lead lives beyond the convention center walls.

Unlike in previous years, I left Vegas confident that I’ll be seeing more of this year’s cohort of CES robots in the future. Maybe not in my home just yet, but it’s time to prepare for a world in which robots will increasingly walk among us.


Today’s Wordle Hints, Answer and Help for Jan. 29, #1685

Here are hints and the answer for today’s Wordle for Jan. 29, No. 1,685.


Today’s Wordle puzzle was a tough one for me. I never seem to guess three of the letters in this word. If you need a new starter word, check out our list of which letters show up the most in English words. If you need hints and the answer, read on.


Today’s Wordle hints

Before we show you today’s Wordle answer, we’ll give you some hints. If you don’t want a spoiler, look away now.

Wordle hint No. 1: Repeats

Today’s Wordle answer has no repeated letters.

Wordle hint No. 2: Vowels

Today’s Wordle answer has one vowel and one sometimes vowel.

Wordle hint No. 3: First letter

Today’s Wordle answer begins with F.

Wordle hint No. 4: Last letter

Today’s Wordle answer ends with Y.

Wordle hint No. 5: Meaning

Today’s Wordle answer can refer to a pastry that breaks apart easily.

TODAY’S WORDLE ANSWER

Today’s Wordle answer is FLAKY.

Yesterday’s Wordle answer

Yesterday’s Wordle answer, Jan. 28, No. 1684, was CRUEL.

Recent Wordle answers

Jan. 24, No. 1680: CLIFF

Jan. 25, No. 1681: STRUT

Jan. 26, No. 1682: FREAK

Jan. 27, No. 1683: DUSKY
