Technologies

AI Is Bad at Sudoku. It’s Even Worse at Showing Its Work

Researchers did more than ask chatbots to play games. They tested whether AI models could describe their thinking. The results were troubling.

Chatbots are genuinely impressive when you watch them do things they’re good at, like writing a basic email or creating weird, futuristic-looking images. But ask generative AI to solve one of those puzzles in the back of a newspaper, and things can quickly go off the rails.

That’s what researchers at the University of Colorado at Boulder found when they challenged large language models to solve sudoku. And not even the standard 9×9 puzzles. An easier 6×6 puzzle was often beyond the capabilities of an LLM without outside help (in this case, specific puzzle-solving tools).

A more important finding came when the models were asked to show their work. For the most part, they couldn’t. Sometimes they lied. Sometimes they explained things in ways that made no sense. Sometimes they hallucinated and started talking about the weather.

If gen AI tools can’t explain their decisions accurately or transparently, that should cause us to be cautious as we give these things more control over our lives and decisions, said Ashutosh Trivedi, a computer science professor at the University of Colorado at Boulder and one of the authors of the paper published in July in the Findings of the Association for Computational Linguistics.

“We would really like those explanations to be transparent and be reflective of why AI made that decision, and not AI trying to manipulate the human by providing an explanation that a human might like,” Trivedi said.


The paper is part of a growing body of research into the behavior of large language models. Other recent studies have found, for example, that models hallucinate in part because their training procedures incentivize them to produce results a user will like, rather than what is accurate, or that people who use LLMs to help them write essays are less likely to remember what they wrote. As gen AI becomes more and more a part of our daily lives, the implications of how this technology works and how we behave when using it become hugely important.

When you make a decision, you can try to justify it, or at least explain how you arrived at it. An AI model may not be able to accurately or transparently do the same. Would you trust it?

Why LLMs struggle with sudoku

We’ve seen AI models fail at basic games and puzzles before. OpenAI’s ChatGPT (among others) has been totally crushed at chess by the computer opponent in a 1979 Atari game. A recent research paper from Apple found that models can struggle with other puzzles, like the Tower of Hanoi.

The trouble comes from the way LLMs work: they fill in gaps in information. These models try to fill those gaps based on what happens in similar cases in their training data or other things they’ve seen in the past. With a sudoku, the question is one of logic. The AI might try to fill each gap in order, based on what seems like a reasonable answer, but to solve it properly, it instead has to look at the entire picture and find a logical order that changes from puzzle to puzzle.
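To make that contrast concrete, here is a minimal Python sketch of how a conventional program might approach a 6×6 grid. It is an illustration only, not the tool the Colorado researchers used. The function names and the sample puzzle are made up for this example, and the 2×3 box layout is an assumption about the usual 6×6 format. Instead of filling cells left to right, it checks the whole board on every step, picks the empty cell with the fewest legal digits and backs out of any guess that leads to a dead end.

```python
from typing import List, Optional, Tuple

Grid = List[List[int]]  # 0 marks an empty cell

SIZE = 6
BOX_ROWS, BOX_COLS = 2, 3  # assumed layout: a 6x6 grid split into 2x3 boxes


def candidates(grid: Grid, r: int, c: int) -> List[int]:
    """Digits that can legally go in (r, c) given its row, column and box."""
    used = set(grid[r]) | {grid[i][c] for i in range(SIZE)}
    br, bc = r - r % BOX_ROWS, c - c % BOX_COLS
    for i in range(br, br + BOX_ROWS):
        for j in range(bc, bc + BOX_COLS):
            used.add(grid[i][j])
    return [d for d in range(1, SIZE + 1) if d not in used]


def solve(grid: Grid) -> bool:
    """Fill the grid in place; return True if a solution was found."""
    best: Optional[Tuple[int, int]] = None
    best_opts: List[int] = []
    # Look at the entire board and pick the most constrained empty cell.
    for r in range(SIZE):
        for c in range(SIZE):
            if grid[r][c] == 0:
                opts = candidates(grid, r, c)
                if best is None or len(opts) < len(best_opts):
                    best, best_opts = (r, c), opts
    if best is None:
        return True  # no empty cells left, so the puzzle is solved
    r, c = best
    for d in best_opts:
        grid[r][c] = d
        if solve(grid):
            return True
    grid[r][c] = 0  # every option failed: undo and backtrack
    return False


# Hypothetical sample puzzle, invented for this sketch.
PUZZLE: Grid = [
    [1, 0, 0, 4, 0, 6],
    [0, 5, 6, 0, 2, 0],
    [2, 0, 1, 0, 6, 0],
    [0, 6, 0, 2, 0, 1],
    [3, 0, 2, 0, 4, 0],
    [0, 4, 0, 3, 0, 2],
]

solved = [row[:] for row in PUZZLE]  # copy so the givens stay untouched
found = solve(solved)
for row in solved:
    print(" ".join(str(d) for d in row))
```

The order in which cells get filled depends on the givens, so it changes from puzzle to puzzle. That is the kind of whole-board reasoning that next-word prediction doesn’t naturally provide.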


Chatbots are bad at chess for a similar reason. They find logical next moves but don’t necessarily think three, four or five moves ahead, which is the fundamental skill needed to play chess well. Chatbots also tend to move chess pieces in ways that break the rules or put pieces in pointless jeopardy.

You might expect LLMs to be able to solve sudoku because they’re computers and the puzzle consists of numbers, but the puzzles themselves are not really mathematical; they’re symbolic. “Sudoku is famous for being a puzzle with numbers that could be done with anything that is not numbers,” said Fabio Somenzi, a professor at CU and one of the research paper’s authors.

I used a sample prompt from the researchers’ paper and gave it to ChatGPT. The tool showed its work, and repeatedly told me it had the answer before showing a puzzle that didn’t work, then going back and correcting it. It was like the bot was turning in a presentation that kept getting last-second edits: This is the final answer. No, actually, never mind, this is the final answer. It got the answer eventually, through trial and error. But trial and error isn’t a practical way for a person to solve a sudoku in the newspaper. That’s way too much erasing and ruins the fun.

AI struggles to show its work

The Colorado researchers didn’t just want to see if the bots could solve puzzles. They asked for explanations of how the bots worked through them. Things did not go well.

Testing OpenAI’s o1-preview reasoning model, the researchers saw that the explanations — even for correctly solved puzzles — didn’t accurately explain or justify their moves and got basic terms wrong.

“One thing they’re good at is providing explanations that seem reasonable,” said Maria Pacheco, an assistant professor of computer science at CU. “They align to humans, so they learn to speak like we like it, but whether they’re faithful to what the actual steps need to be to solve the thing is where we’re struggling a little bit.”

Sometimes, the explanations were completely irrelevant. Since finishing the paper, the researchers have continued to test newly released models. Somenzi said that when he and Trivedi ran OpenAI’s o4 reasoning model through the same tests, at one point it seemed to give up entirely.

“The next question that we asked, the answer was the weather forecast for Denver,” he said.

(Disclosure: Ziff Davis, CNET’s parent company, in April filed a lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.)

Explaining yourself is an important skill

When you solve a puzzle, you’re almost certainly able to walk someone else through your thinking. The fact that these LLMs failed so spectacularly at that basic job isn’t a trivial problem. With AI companies constantly talking about “AI agents” that can take actions on your behalf, being able to explain yourself is essential.

Consider the types of jobs being given to AI now, or planned for in the near future: driving, doing taxes, deciding business strategies and translating important documents. Imagine what would happen if you, a person, did one of those things and something went wrong.

“When humans have to put their face in front of their decisions, they better be able to explain what led to that decision,” Somenzi said.

It isn’t just a matter of getting a reasonable-sounding answer. It needs to be accurate. One day, an AI’s explanation of itself might have to hold up in court, but how can its testimony be taken seriously if it’s known to lie? You wouldn’t trust a person who failed to explain themselves, and you also wouldn’t trust someone you found was saying what you wanted to hear instead of the truth.

“Having an explanation is very close to manipulation if it is done for the wrong reason,” Trivedi said. “We have to be very careful with respect to the transparency of these explanations.”


Google Rolls Out Latest AI Model, Gemini 3.1 Pro

Starting Thursday, Gemini 3.1 Pro can be accessed via the AI app, NotebookLM and more.

Google took the wraps off its latest AI model, Gemini 3.1 Pro, on Thursday, calling it a “step forward in core reasoning.” The software giant says its latest model is smarter and more capable for complex problem-solving.

Google shared a series of benchmarks and examples of the latest model’s capabilities, and is rolling out Gemini 3.1 to a series of products for consumers, enterprise and developers.

The overall AI model landscape seems to change weekly. Google’s release comes just a few days after Anthropic dropped the latest version of Claude, Sonnet 4.6, which can operate a computer at a human baseline level.

Benchmarks of Gemini 3.1

Google shared some details about AI model benchmarks for Gemini 3.1 Pro.

The announcement blog post highlights that the Gemini 3.1 Pro benchmark for the ARC-AGI-2 test for solving abstract reasoning puzzles sits at 77.1%. This is noticeably higher than Gemini 3 Pro’s 31.1% score for the same test.

The ARC-AGI-2 result is one of several improvements in Gemini 3.1 Pro, Google says.

3.1 Pro enhancements

With better benchmarks nearly across the board, Google highlighted some of the ways that translates into general use:

Code-based animations: From a text prompt, the latest Gemini model can create animated SVG images that scale without quality loss and are ready to be added to websites.

Creative coding: Gemini 3.1 Pro generated an entire website based on a character from Emily Brontë’s novel Wuthering Heights, if she were a landscape photographer showing off her portfolio.

Interactive design: 3.1 Pro was used to create a 3D interactive starling murmuration that allows the flock to be controlled in an assortment of ways, all while a soundscape is generated that changes with the movement of the birds.

Availability

As of Thursday, Gemini 3.1 Pro is rolling out in the Gemini app for those with the AI Pro or Ultra plans. NotebookLM users subscribed to one of those plans will also be able to take advantage of the new model.

Both developers and enterprises can also access the new model via the Gemini API through a range of products, including AI Studio, Gemini Enterprise, Antigravity and Android Studio.


Today’s NYT Strands Hints, Answers and Help for Feb. 20 #719

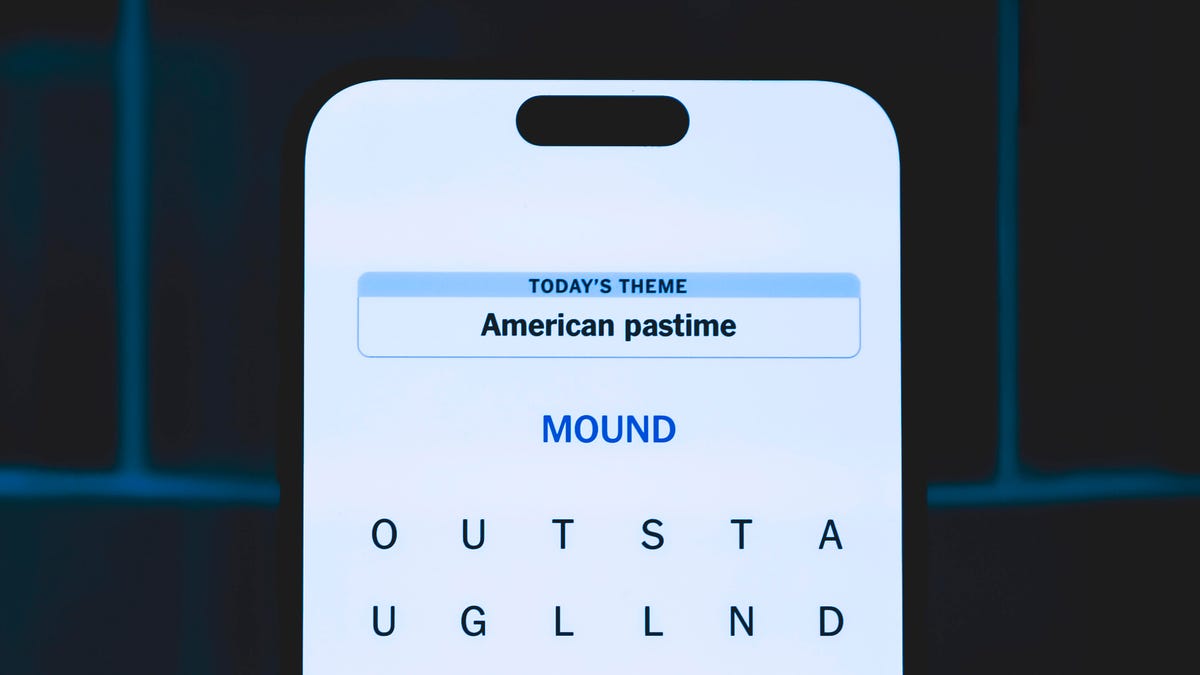

Here are hints and answers for the NYT Strands puzzle for Feb. 20, No. 719.


Today’s NYT Strands puzzle is a bit tricky. Some of the answers are difficult to unscramble, so if you need hints and answers, read on.


Hint for today’s Strands puzzle

Today’s Strands theme is: True grit

If that doesn’t help you, here’s a clue: You might find this in a wood shop.

Clue words to unlock in-game hints

Your goal is to find hidden words that fit the puzzle’s theme. If you’re stuck, find any words you can. Every time you find three words of four letters or more, Strands will reveal one of the theme words. These are the words I used to get those hints, but any words of four or more letters that you find will work:

- SAND, CART, SCAR, SCAT, PAPER, HAVE

Answers for today’s Strands puzzle

These are the answers that tie into the theme. The goal of the puzzle is to find them all, including the spangram, a theme word that reaches from one side of the puzzle to the other. When you have all of them (I originally thought there were always eight but learned that the number can vary), every letter on the board will be used. Here are the nonspangram answers:

- COARSE, HARSH, SCRATCHY, ROUGH, PRICKLY, ABRASIVE

Today’s Strands spangram

Today’s Strands spangram is SANDPAPER. To find it, start with the S that’s the farthest-left letter on the very top row, and wind down.


Today’s NYT Connections: Sports Edition Hints and Answers for Feb. 20, #515

Here are hints and the answers for the NYT Connections: Sports Edition puzzle for Feb. 20, No. 515.


Today’s Connections: Sports Edition features a category all about my favorite football team. If you’re struggling with today’s puzzle but still want to solve it, read on for hints and the answers.

Connections: Sports Edition is published by The Athletic, the subscription-based sports journalism site owned by The Times. It doesn’t appear in the NYT Games app, but it does in The Athletic’s own app. Or you can play it for free online.


Hints for today’s Connections: Sports Edition groups

Here are four hints for the groupings in today’s Connections: Sports Edition puzzle, ranked from the easiest yellow group to the tough (and sometimes bizarre) purple group.

Yellow group hint: We’ll be right back…

Green group hint: Run for the roses.

Blue group hint: Skol!

Purple group hint:

Answers for today’s Connections: Sports Edition groups

Yellow group: Break in the action.

Green group: Bets in horse racing.

Blue group: QBs drafted by Vikings in first round.

Purple group: Race ____.


What are today’s Connections: Sports Edition answers?

The yellow words in today’s Connections

The theme is break in the action. The four answers are intermission, pause, suspension and timeout.

The green words in today’s Connections

The theme is bets in horse racing. The four answers are exacta, place, show and win.

The blue words in today’s Connections

The theme is QBs drafted by Vikings in first round. The four answers are Bridgewater, Culpepper, McCarthy and Ponder.

The purple words in today’s Connections

The theme is race ____. The four answers are bib, car, course and walking.
