Technologies

Congress Won’t Block State AI Regulations. Here’s What That Means for Consumers

The Senate yanked the plan to halt enforcement of state artificial intelligence laws from the big tax and spending bill at the last minute.

After months of debate, a plan in Congress to block states from regulating artificial intelligence was pulled from the big federal budget bill this week. The proposed 10-year moratorium would have prevented states from enforcing rules and laws on AI if the state accepted federal funding for broadband access.

The issue exposed divides among technology experts and politicians, with some Senate Republicans joining Democrats in opposing the move. The Senate eventually voted 99-1 to remove the proposal from the bill, which also includes the extension of the 2017 federal tax cuts and cuts to services like Medicaid and SNAP. Congressional Republican leaders have said they want to have the bill on President Donald Trump’s desk by July 4.

Tech companies and many Congressional Republicans supported the moratorium, saying it would prevent a «patchwork» of rules and regulations across states and local governments that could hinder the development of AI — especially in the context of competition with China. Critics, including consumer advocates, said states should have a free hand to protect people from potential issues with the fast-growing technology.

«The Senate came together tonight to say that we can’t just run over good state consumer protection laws,» Sen. Maria Cantwell, a Washington Democrat, said in a statement. «States can fight robocalls, deepfakes and provide safe autonomous vehicle laws. This also allows us to work together nationally to provide a new federal framework on artificial intelligence that accelerates US leadership in AI while still protecting consumers.»

Despite the moratorium being pulled from this bill, the debate over how the government can appropriately balance consumer protection and supporting technology innovation will likely continue. «There have been a lot of discussions at the state level, and I would think that it’s important for us to approach this problem at multiple levels,» said Anjana Susarla, a professor at Michigan State University who studies AI. «We could approach it at the national level. We can approach it at the state level, too. I think we need both.»

Several states have already started regulating AI

The proposed moratorium would have barred states from enforcing any AI regulations, including those already on the books. The exceptions would have been rules and laws that make things easier for AI development and those that apply the same standards to non-AI models and systems that do similar things. Regulations of this kind are already starting to appear. The biggest focus so far is not in the US but in Europe, where the European Union has already implemented standards for AI. But US states are starting to get in on the action.

Colorado passed a set of consumer protections last year that are scheduled to take effect in 2026. California adopted more than a dozen AI-related laws last year. Other states have laws and regulations that often deal with specific issues such as deepfakes or require AI developers to publish information about their training data. At the local level, some regulations also address potential employment discrimination if AI systems are used in hiring.

«States are all over the map when it comes to what they want to regulate in AI,» said Arsen Kourinian, a partner at the law firm Mayer Brown. So far in 2025, state lawmakers have introduced at least 550 proposals around AI, according to the National Conference of State Legislatures. At a House committee hearing last month, Rep. Jay Obernolte, a Republican from California, signaled a desire to get ahead of more state-level regulation. «We have a limited amount of legislative runway to be able to get that problem solved before the states get too far ahead,» he said.


While some states have laws on the books, not all of them have gone into effect or seen any enforcement. That limits the potential short-term impact of a moratorium, said Cobun Zweifel-Keegan, managing director in Washington for the IAPP, the International Association of Privacy Professionals. «There isn’t really any enforcement yet.»

A moratorium would likely deter state legislators and policymakers from developing and proposing new regulations, Zweifel-Keegan said. «The federal government would become the primary and potentially sole regulator around AI systems,» he said.

What a moratorium on state AI regulation would mean

AI developers have asked for any guardrails placed on their work to be consistent and streamlined.

«We need, as an industry and as a country, one clear federal standard, whatever it may be,» Alexandr Wang, founder and CEO of the data company Scale AI, told lawmakers during an April hearing. «But we need one, we need clarity as to one federal standard and have preemption to prevent this outcome where you have 50 different standards.»

During a Senate Commerce Committee hearing in May, OpenAI CEO Sam Altman told Sen. Ted Cruz, a Republican from Texas, that an EU-style regulatory system «would be disastrous» for the industry. Altman suggested instead that the industry develop its own standards.

Asked by Sen. Brian Schatz, a Democrat from Hawaii, if industry self-regulation is enough at the moment, Altman said he thought some guardrails would be good, but, «It’s easy for it to go too far. As I have learned more about how the world works, I am more afraid that it could go too far and have really bad consequences.» (Disclosure: Ziff Davis, parent company of CNET, in April filed a lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.)

Not all AI companies are backing a moratorium, however. In a New York Times op-ed, Anthropic CEO Dario Amodei called it «far too blunt an instrument,» saying the federal government should create transparency standards for AI companies instead. «Having this national transparency standard would help not only the public but also Congress understand how the technology is developing, so that lawmakers can decide whether further government action is needed,» he wrote.

Concerns from companies, both the developers that create AI systems and the «deployers» who use them in interactions with consumers, often stem from fears that states will mandate significant work such as impact assessments or transparency notices before a product is released, Kourinian said. Consumer advocates have said more regulations are needed and that hampering states’ ability to regulate could hurt the privacy and safety of users.

A moratorium on specific state rules and laws could result in more consumer protection issues being dealt with in court or by state attorneys general, Kourinian said. Existing laws around unfair and deceptive practices that are not specific to AI would still apply. «Time will tell how judges will interpret those issues,» he said.

Susarla said the pervasiveness of AI across industries means states might be able to regulate issues such as privacy and transparency more broadly, without focusing on the technology. But a moratorium on AI regulation could lead to such policies being tied up in lawsuits. «It has to be some kind of balance between ‘we don’t want to stop innovation,’ but on the other hand, we also need to recognize that there can be real consequences,» she said.

Much of the policy governing AI systems comes through those so-called technology-agnostic rules and laws, Zweifel-Keegan said. «It’s worth also remembering that there are a lot of existing laws and there is a potential to make new laws that don’t trigger the moratorium but do apply to AI systems as long as they apply to other systems,» he said.

What’s next for federal AI regulation?

One of the key lawmakers pushing for the removal of the moratorium from the bill was Sen. Marsha Blackburn, a Tennessee Republican. Blackburn said she wanted to make sure states were able to protect children and creators, like the country musicians her state is famous for. «Until Congress passes federally preemptive legislation like the Kids Online Safety Act and an online privacy framework, we can’t block states from standing in the gap to protect vulnerable Americans from harm — including Tennessee creators and precious children,» she said in a statement.

Groups that opposed the preemption of state laws said they hope the next move for Congress is to take steps toward actual regulation of AI, which could make state laws unnecessary. If tech companies «are going to seek federal preemption, they should seek federal preemption along with a federal law that provides rules of the road,» Jason Van Beek, chief government affairs officer at the Future of Life Institute, told me.

Ben Winters, director of AI and data privacy at the Consumer Federation of America, said Congress could take up the idea of preempting state laws again in separate legislation. «Fundamentally, it’s just a bad idea,» he told me. «It doesn’t really necessarily matter if it’s done in the budget process.»


Today’s NYT Connections: Sports Edition Hints and Answers for Feb. 18, #513

Here are hints and the answers for the NYT Connections: Sports Edition puzzle for Feb. 18, No. 513.

Looking for the most recent regular Connections answers? Click here for today’s Connections hints, as well as our daily answers and hints for The New York Times Mini Crossword, Wordle and Strands puzzles.

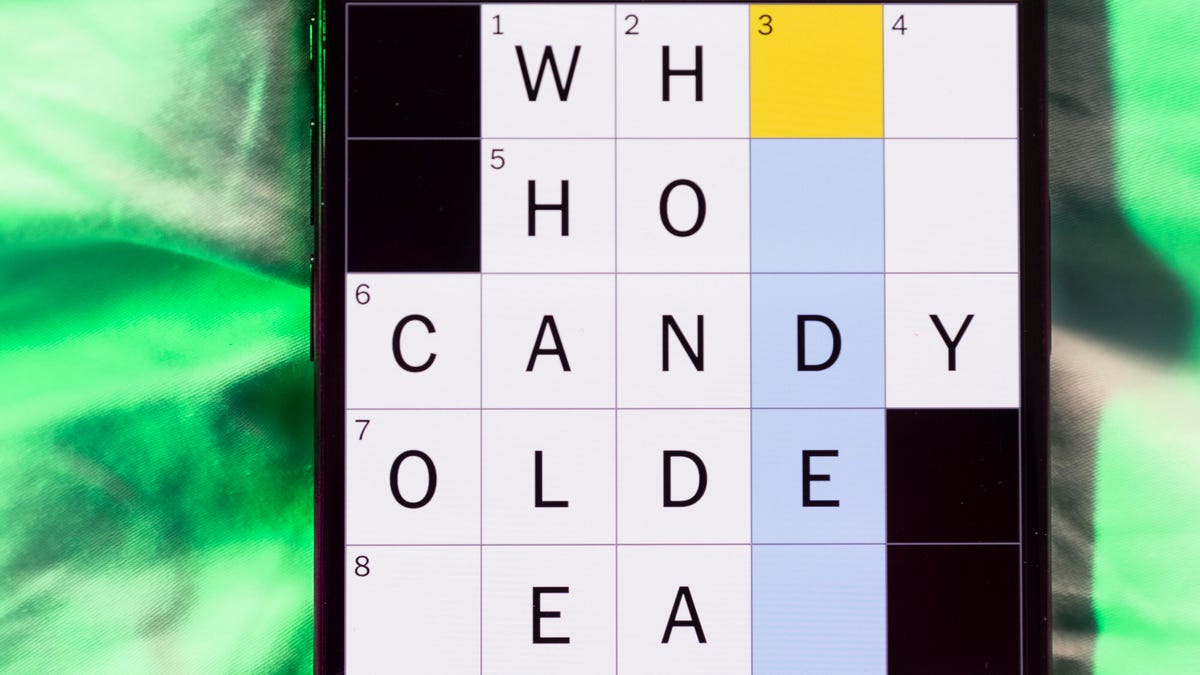

Today’s Connections: Sports Edition has a fun yellow category that might just start you singing. If you’re struggling with today’s puzzle but still want to solve it, read on for hints and the answers.

Connections: Sports Edition is published by The Athletic, the subscription-based sports journalism site owned by The Times. It doesn’t appear in the NYT Games app, but it does in The Athletic’s own app. Or you can play it for free online.


Hints for today’s Connections: Sports Edition groups

Here are four hints for the groupings in today’s Connections: Sports Edition puzzle, ranked from the easiest yellow group to the tough (and sometimes bizarre) purple group.

Yellow group hint: I don’t care if I never get back.

Green group hint: Get that gold medal.

Blue group hint: Hoops superstar.

Purple group hint: Not front, but…

Answers for today’s Connections: Sports Edition groups

Yellow group: Heard in «Take Me Out to the Ball Game.»

Green group: Olympic snowboarding events.

Blue group: Vince Carter, informally.

Purple group: ____ back.


What are today’s Connections: Sports Edition answers?

The yellow words in today’s Connections

The theme is heard in «Take Me Out to the Ball Game.» The four answers are Cracker Jack, home team, old ball game and peanuts.

The green words in today’s Connections

The theme is Olympic snowboarding events. The four answers are big air, giant slalom, halfpipe and slopestyle.

The blue words in today’s Connections

The theme is Vince Carter, informally. The four answers are Air Canada; Half-Man, Half-Amazing; VC; and Vinsanity.

The purple words in today’s Connections

The theme is ____ back. The four answers are diamond, drop, quarter and razor.


Today’s NYT Mini Crossword Answers for Wednesday, Feb. 18

Here are the answers for The New York Times Mini Crossword for Feb. 18.

Looking for the most recent Mini Crossword answer? Click here for today’s Mini Crossword hints, as well as our daily answers and hints for The New York Times Wordle, Strands, Connections and Connections: Sports Edition puzzles.

Today’s Mini Crossword is a fun one, and it’s not terribly tough. It helps if you know a certain Olympian. Read on for all the answers. And if you could use some hints and guidance for daily solving, check out our Mini Crossword tips.

If you’re looking for today’s Wordle, Connections, Connections: Sports Edition and Strands answers, you can visit CNET’s NYT puzzle hints page.


Let’s get to those Mini Crossword clues and answers.

Mini across clues and answers

1A clue: ___ Glenn, Olympic figure skater who’s a three-time U.S. national champion

Answer: AMBER

6A clue: Popcorn size that might come in a bucket

Answer: LARGE

7A clue: «Lies and the Lying ___ Who Tell Them» (Al Franken book)

Answer: LIARS

8A clue: Close-up map

Answer: INSET

9A clue: Prepares a home for a new baby

Answer: NESTS

Mini down clues and answers

1D clue: Bold poker declaration

Answer: ALLIN

2D clue: Only U.S. state with a one-syllable name

Answer: MAINE

3D clue: Orchestra section with trumpets and horns

Answer: BRASS

4D clue: «Great» or «Snowy» wading bird

Answer: EGRET

5D clue: Some sheet music squiggles

Answer: RESTS


The Witcher 3, Kingdom Come: Deliverance 2 Bring the Heat to Xbox Game Pass

Two amazing games will be available soon for Xbox Game Pass subscribers.

The second half of February and early March could be considered one of the best stretches in recent memory for Xbox Game Pass subscribers. The Witcher 3: Wild Hunt, widely regarded as one of the best games of the past decade, and Kingdom Come: Deliverance 2 headline a lineup that leans heavily into sprawling, choice-driven adventures but does throw in some football to mix things up a bit.

Xbox Game Pass offers hundreds of games you can play on your Xbox Series X, Xbox Series S, Xbox One, Amazon Fire TV, smart TV, PC or mobile device, with prices starting at $10 a month. While all Game Pass tiers offer you a library of games, Game Pass Ultimate ($30 a month) gives you access to the most games, as well as Day 1 games, meaning they hit Game Pass the day they go on sale.

Here are all the latest games subscribers can play on Game Pass. You can also check out other games Microsoft added to the service in early February, including Madden NFL 26.

The Witcher 3: Wild Hunt – Complete Edition

Available on Feb. 19 for Game Pass Ultimate and Game Pass Premium subscribers.

The Witcher 3 came out more than 10 years ago, and it’s still praised as one of the best games ever made. Now developer CD Projekt Red is bringing The Witcher 3: Wild Hunt – Complete Edition to Xbox Game Pass. Subscribers will be able to play The Witcher 3 and its expansions, Hearts of Stone and Blood and Wine. Players once more take on the role of monster-slayer Geralt, who goes on an epic search for his daughter, Ciri. As he pieces together what happened to her, he comes across vicious monsters, devious spirits and the most evil of humans, who seek to end his quest.

Death Howl

Available on Feb. 19 for Game Pass Ultimate, Game Pass Premium and PC Game Pass subscribers.

Death Howl is a dark fantasy tactical roguelike that blends turn-based grid combat with deck-building mechanics. Players move across compact battlefield maps, weighing positioning and card synergies to survive increasingly difficult encounters. Progression comes through incremental upgrades that reshape each run. Battles reward careful planning, as overextending or mismanaging your hand can quickly end a run.

EA Sports College Football 26

Available on Feb. 19 for Game Pass Ultimate subscribers.

EA Sports College Football 26 delivers a new take on college football gameplay with enhanced offensive and defensive mechanics, smarter AI and dynamic play-calling that reflects real strategic football systems. Featuring over 2,800 plays and more than 300 real-world coaches with distinct schemes, it offers expanded Dynasty and Road to Glory modes where team building and personnel decisions matter. On the field, dynamic substitutions, improved blocking and coverage logic make matches feel more fluid and tactical.

Dice A Million

Available on Feb. 25 for Game Pass Ultimate and PC Game Pass subscribers.

Dice A Million centers on rolling and managing dice to build toward increasingly higher scores. Each round asks players to weigh risk against reward, deciding when to bank points and when to push for bigger combinations. Progression introduces modifiers and new rules that subtly shift probabilities, making runs feel distinct while keeping the core loop focused on calculated gambling.

Towerborne

Available on Feb. 26 for Game Pass Ultimate, Game Pass Premium and PC Game Pass subscribers.

After months in preview, Towerborne will get its full release on Xbox Game Pass. The fast-paced action game blends procedural dungeons and light RPG progression, with players fighting through waves of enemies. You’ll unlock permanent upgrades between runs and equip weapons, spells and talents that change how combat feels each time. The core loop pushes risk versus reward as you dive deeper into tougher floors, adapt builds on the fly and master movement and timing to survive increasingly chaotic battles.

Final Fantasy 3

Available on March 3 for Game Pass Ultimate, Premium and PC Game Pass subscribers.

Another Final Fantasy game is coming to Xbox Game Pass. This time, it’s Final Fantasy 3, originally released on the Famicom (the Japanese version of the NES) back in 1990. Since then, Final Fantasy 3 has been ported to a slew of devices and operating systems, including the Nintendo Wii, iOS and Android. Now, you’ll be able to play on your Xbox or PC with a Game Pass subscription. A new group of heroes is once again tasked with saving the world before it’s covered in darkness. Four orphans from the village of Ur find a Crystal of Light in a secret cave, and it chooses them as the new Warriors of Light. They’ll have to stop Xande, an evil wizard looking to use the power of darkness to become immortal.

Kingdom Come: Deliverance 2

Available on March 3 for Game Pass Ultimate, Premium and PC Game Pass subscribers.

Last year was stacked with amazing games, and Kingdom Come: Deliverance 2 was one of the best. Developer Warhorse Studios’ RPG series takes place in the real medieval kingdom of Bohemia, now part of the Czech Republic, and offers a fairly realistic experience in which you use the weapons, armor and items of the period. The sequel picks up right after the first game (also on Xbox Game Pass), as Henry of Skalitz is attacked by bandits, setting off a series of events that disrupts the entire country.

Games leaving Game Pass in February

For February, Microsoft is removing four games. If you’re still playing them, now’s a good time to finish up what you can before they’re gone for good on Feb. 28.

For more on Xbox, discover other games available on Game Pass now, and check out our hands-on review of the gaming service. You can also learn about recent changes to Game Pass.
