Technologies

Gemini Live’s New Camera Trick Works Like Magic — When It Wants To

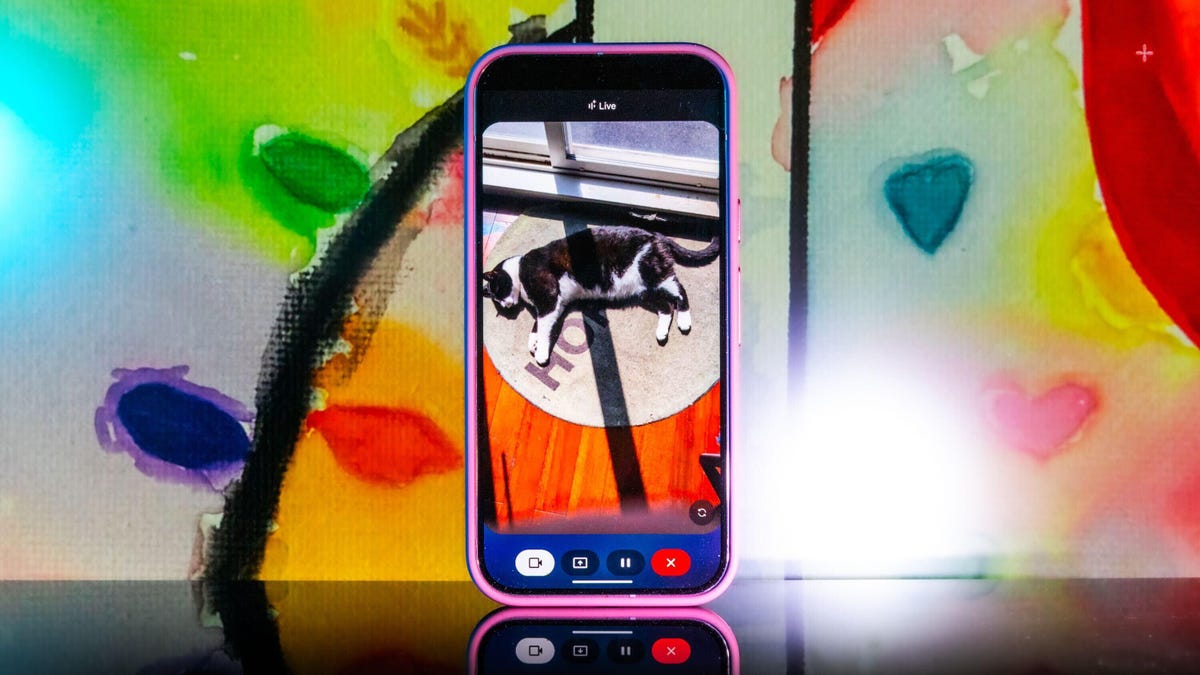

Gemini Live’s new camera mode can identify objects around you and more. I tested it out with my offbeat collectibles.

When Gemini Live’s new camera feature popped up on my phone, I didn’t hesitate to try it out. In one of my longer tests, I turned it on and started walking through my apartment, asking Gemini what it saw. It identified some fruit, chapstick and a few other everyday items with no problem. The real test came when I asked where I’d left my scissors. «I just spotted your scissors on the table, right next to the green package of pistachios. Do you see them?»

It was right, and I was wowed.

I never mentioned the scissors while I was giving Gemini a tour of my apartment, but I made sure their placement was in the camera view for a couple of seconds before moving on and asking additional questions about other objects in the room.

I was following the lead of the demo that Google did last summer when it first showed off these Live video AI capabilities. Gemini reminded the person giving the demo where they left their glasses, and it seemed too good to be true, so I had to try it out and came away impressed.

Gemini Live will recognize a whole lot more than household odds and ends. Google says it’ll help you navigate a crowded train station or figure out the filling of a pastry. It can give you deeper information about artwork, like where an object originated and whether it was a limited edition.

It’s more than just a souped-up Google Lens. You talk with it, and it talks to you. I didn’t need to speak to Gemini in any particular way — it was as casual as any conversation. Way better than talking with the old Google Assistant that the company is quickly phasing out.

Google and Samsung are just starting to roll out the feature to all Pixel 9 (including the new Pixel 9a) and Galaxy S25 phones. It’s free for those devices, and other Pixel phones can access it via a Google AI Premium subscription. Google also released a new YouTube video for the April 2025 Pixel Drop showcasing the feature, and there’s now a dedicated page on the Google Store for it.

To get started, you can go live with Gemini, enable the camera and start talking.

Gemini Live follows on from Google’s Project Astra, first revealed last year as possibly the company’s biggest «we’re in the future» feature. It is an experimental next step for generative AI, going beyond simply typing or even speaking prompts into a chatbot like ChatGPT, Claude or Gemini. It comes as AI companies continue to dramatically increase the skills of AI tools, from video generation to raw processing power. Similar to Gemini Live, there’s Apple’s Visual Intelligence, which the iPhone maker released in a beta form late last year.

My big takeaway is that a feature like Gemini Live has the potential to change how we interact with the world around us, melding our digital and physical worlds together just by holding a camera in front of almost anything.

I put Gemini Live to a real test

The first time I tried it, Gemini was shockingly accurate when I placed a very specific gaming collectible of a stuffed rabbit in my camera’s view. The second time, I showed it to a friend in an art gallery. It recognized the tortoise on a cross (don’t ask me) and immediately identified and translated the kanji right next to it, giving both of us chills and leaving us more than a little creeped out. In a good way, I think.

I got to thinking about how I could stress-test the feature. I tried to screen-record it in action, but it consistently fell apart at that task. And what if I went off the beaten path with it? I’m a huge fan of the horror genre — movies, TV shows, video games — and have countless collectibles, trinkets and what have you. How well would it do with more obscure stuff — like my horror-themed collectibles?

First, let me say that Gemini can be both absolutely incredible and ridiculously frustrating in the same round of questions. I had roughly 11 objects that I was asking Gemini to identify, and it would sometimes get worse the longer the live session ran, so I had to limit sessions to only one or two objects. My guess is that Gemini attempted to use contextual information from previously identified objects to guess new objects put in front of it, which sort of makes sense, but ultimately, neither I nor it benefited from this.

Sometimes, Gemini was just on point, easily landing the correct answers with no fuss or confusion, but this tended to happen with more recent or popular objects. For example, I was surprised when it immediately guessed one of my test objects was not only from Destiny 2, but was a limited edition from a seasonal event from last year.

At other times, Gemini would be way off the mark, and I would need to give it more hints to get into the ballpark of the right answer. And sometimes, it seemed as though Gemini was taking context from my previous live sessions to come up with answers, identifying multiple objects as coming from Silent Hill when they were not. I have a display case dedicated to the game series, so I could see why it would want to dip into that territory quickly.

Gemini can get full-on bugged out at times. On more than one occasion, Gemini misidentified one of the items as a made-up character from the unreleased Silent Hill: f game, clearly merging pieces of different titles into something that never was. The other consistent bug I experienced was when Gemini would produce an incorrect answer, and I would correct it and hint closer at the answer, or straight up give it the answer, only to have it repeat the incorrect answer as if it were a new guess. When that happened, I would close the session and start a new one, which wasn’t always helpful.

One trick I found was that some conversations did better than others. If I scrolled through my Gemini conversation list, tapped an old chat that had gotten a specific item correct, and then went live again from that chat, it would be able to identify the items without issue. While that’s not necessarily surprising, it was interesting to see how much the starting conversation mattered, even when I used the same language.

Google didn’t respond to my requests for more information on how Gemini Live works.

I wanted Gemini to successfully answer my sometimes highly specific questions, so I provided plenty of hints to get there. The nudges were often helpful, but not always. Below is a series of objects I tried to get Gemini to identify and provide information about.

Wisconsin Reverses Decision to Ban VPNs in Age-Verification Bill

The law would have required websites to block VPN users from accessing «harmful material.»

Following a wave of criticism, Wisconsin lawmakers have decided not to include a ban on VPN services in the age-verification bill that is making its way through the state legislature.

Wisconsin Senate Bill 130 (and its sister Assembly Bill 105), introduced in March 2025, aims to prohibit businesses from «publishing or distributing material harmful to minors» unless there is a reasonable «method to verify the age of individuals attempting to access the website.»

One provision would have required businesses to bar people from accessing their sites via «a virtual private network system or virtual private network provider.»

A VPN lets you access the internet via an encrypted connection, enabling you to bypass firewalls and unblock geographically restricted websites and streaming content. While using a VPN, your IP address and physical location are masked, and your internet service provider doesn’t know which websites you visit.

Wisconsin state Sen. Van Wanggaard moved to delete that provision in the legislation, thereby releasing VPNs from any liability. The state assembly agreed to remove the VPN ban, and the bill now awaits Wisconsin Governor Tony Evers’s signature.

Rindala Alajaji, associate director of state affairs at the digital freedom nonprofit Electronic Frontier Foundation, says Wisconsin’s U-turn is «great news.»

«This shows the power of public advocacy and pushback,» Alajaji says. «Politicians heard the VPN users who shared their worries and fears, and the experts who explained how the ban wouldn’t work.»

Earlier this week, the EFF had written an open letter arguing that the draft laws did not «meaningfully advance the goal of keeping young people safe online.» The EFF said that blocking VPNs would harm many groups that rely on that software for private and secure internet connections, including «businesses, universities, journalists and ordinary citizens,» and that «many law enforcement professionals, veterans and small business owners rely on VPNs to safely use the internet.»

VPNs can also help you get around age-verification laws — for instance, if you live in a state or country that requires age verification to access certain material, you can use a VPN to make it look like you live elsewhere, thereby gaining access to that material. As age-restriction laws increase around the US, VPN use has also increased. However, many people are using free VPNs, which are fertile ground for cybercriminals.

In its letter to Wisconsin lawmakers prior to the reversal, the EFF argued that it is «unworkable» to require websites to block VPN users from accessing adult content. The EFF said such sites cannot «reliably determine» where a VPN customer lives — it could be any US state or even other countries.

«As a result, covered websites would face an impossible choice: either block all VPN users everywhere, disrupting access for millions of people nationwide, or cease offering services in Wisconsin altogether,» the EFF wrote.

Wisconsin is not the only state to consider VPN bans to prevent access to adult material. Last year, Michigan introduced the Anticorruption of Public Morals Act, which would ban all use of VPNs. If passed, it would force ISPs to detect and block VPN usage and also ban the sale of VPNs in the state. Fines could reach $500,000.

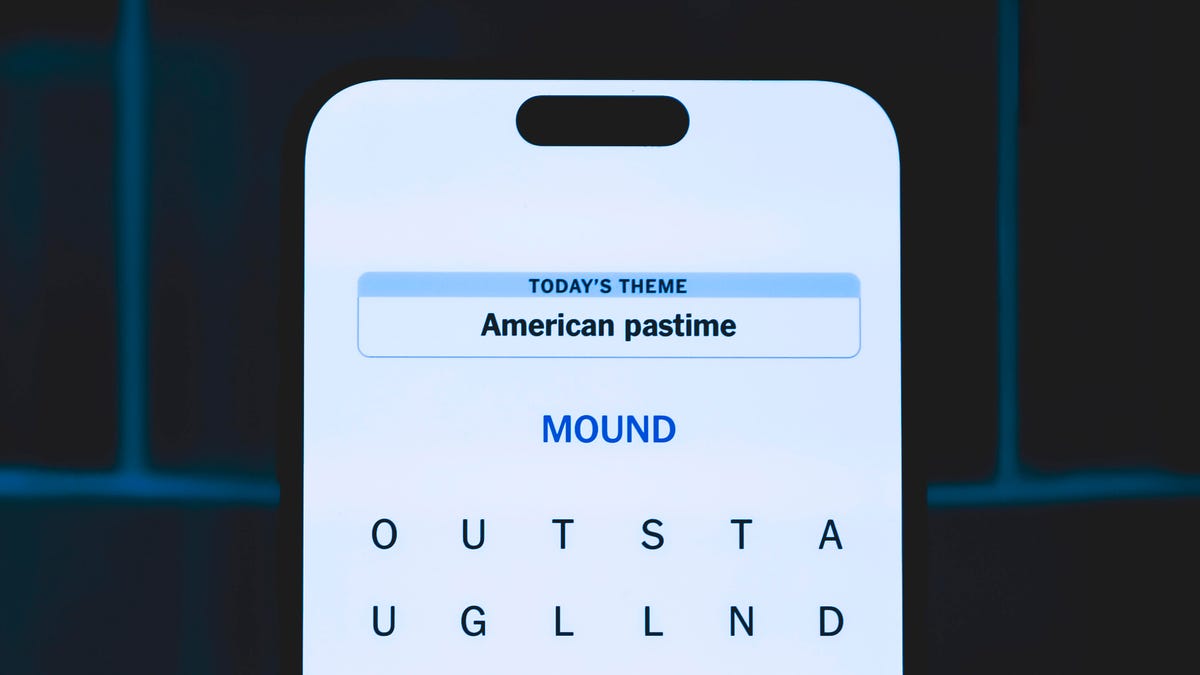

Today’s NYT Strands Hints, Answers and Help for Feb. 21, #720

Here are hints and answers for the NYT Strands puzzle for Feb. 21, No. 720.

Today’s NYT Strands puzzle might be easy for those who pursue a certain hobby. Some of the answers are difficult to unscramble, so if you need hints and answers, read on.

Hint for today’s Strands puzzle

Today’s Strands theme is: The beer necessities.

If that doesn’t help you, here’s a clue: Cheers!

Clue words to unlock in-game hints

Your goal is to find hidden words that fit the puzzle’s theme. If you’re stuck, find any words you can. Every time you find three words of four letters or more, Strands will reveal one of the theme words. These are the words I used to get those hints, but any words of four or more letters that you find will work:

- MALE, TREAT, STEAM, TEAM, MOVE, LOVE, ROVE, ROVER, SPEAR, PEAR
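
The hint rule above comes down to simple arithmetic. Here is a minimal Python sketch of it, assuming only what this article says about the rule. The function name `hints_earned` and the two constants are made up for illustration and are not taken from the game itself.

```python
# Illustrative only: models the hint rule as described in this article,
# not the game's actual code. All names here are hypothetical.
MIN_LENGTH = 4       # a word needs four or more letters to count
WORDS_PER_HINT = 3   # every three qualifying words unlock one hint


def hints_earned(found_words):
    """Return how many hints a list of found non-theme words would unlock."""
    qualifying = [w for w in found_words if len(w) >= MIN_LENGTH]
    return len(qualifying) // WORDS_PER_HINT


clue_words = ["MALE", "TREAT", "STEAM", "TEAM", "MOVE",
              "LOVE", "ROVE", "ROVER", "SPEAR", "PEAR"]
print(hints_earned(clue_words))  # 10 qualifying words -> 3 hints
```

By this count, the 10 clue words listed above are good for three hints, with one word left over toward a fourth.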

Answers for today’s Strands puzzle

These are the answers that tie into the theme. The goal of the puzzle is to find them all, including the spangram, a theme word that reaches from one side of the puzzle to the other. When you have all of them (I originally thought there were always eight but learned that the number can vary), every letter on the board will be used. Here are the nonspangram answers:

- HOPS, WATER, MALT, YEAST, BARLEY, SUGAR, WHEAT, FLAVOR

Today’s Strands spangram

Today’s Strands spangram is HOMEBREW. To find it, start with the H that’s three letters to the right on the top row, and wind down.

Today’s NYT Connections Hints, Answers and Help for Feb. 21, #986

Here are some hints and the answers for the NYT Connections puzzle for Feb. 21, #986.

Today’s NYT Connections puzzle features another of those purple categories where you need to look for hidden words inside of other words. It can be a real stumper. Read on for clues and today’s Connections answers.

The Times has a Connections Bot, like the one for Wordle. Go there after you play to receive a numeric score and to have the program analyze your answers. Players who are registered with the Times Games section can now nerd out by following their progress, including the number of puzzles completed, win rate, number of times they nabbed a perfect score and their win streak.

Hints for today’s Connections groups

Here are four hints for the groupings in today’s Connections puzzle, ranked from the easiest yellow group to the tough (and sometimes bizarre) purple group.

Yellow group hint: Rookies don’t have this.

Green group hint: Call the roll.

Blue group hint: How’d you do today?

Purple group hint: Vroom-vroom, but with a twist.

Answers for today’s Connections groups

Yellow group: Experience.

Green group: Attendance status.

Blue group: Commentary about your Connections results.

Purple group: Car brands plus two letters.

What are today’s Connections answers?

The yellow words in today’s Connections

The theme is experience. The four answers are background, history, life and past.

The green words in today’s Connections

The theme is attendance status. The four answers are absent, excused, late and present.

The blue words in today’s Connections

The theme is commentary about your Connections results. The four answers are great, perfect, phew and solid.

The purple words in today’s Connections

The theme is car brands plus two letters. The four answers are audits (Audi), Dodgers (Dodge), Infinitive (Infiniti) and Minion (Mini).
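
If you want to double-check the purple pattern, a few lines of Python will do it. This is just a sketch: the helper name `hidden_brand` is made up for illustration, and the brand list contains only the four brands named above.

```python
def hidden_brand(word, brands, extra=2):
    """Return the brand `word` starts with if exactly `extra` letters follow it."""
    lowered = word.lower()
    for brand in brands:
        starts_with_brand = lowered.startswith(brand.lower())
        if starts_with_brand and len(lowered) - len(brand) == extra:
            return brand
    return None  # no brand fits the pattern


brands = ["Audi", "Dodge", "Infiniti", "Mini"]
answers = ["audits", "Dodgers", "Infinitive", "Minion"]

for word in answers:
    print(word, "->", hidden_brand(word, brands))
```

Each of the four answers maps back to its brand with exactly two letters to spare, while a word like «present» from the green group returns `None`.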
