Technologies

I Took the iPhone 15 Pro Max and 13 Pro Max to Yosemite for a Camera Test

Does Apple’s latest phone capture the epic majesty of Yosemite National Park better than a two-year-old iPhone? We find out.

This past week, I took Apple’s new iPhone 15 Pro Max on an epic adventure to California’s Yosemite National Park.

As a professional photographer, I take tens of thousands of photos every year. Much of my work is done inside my San Francisco photo studio, but I also spend a considerable amount of time shooting on location. I still use a DSLR, but my iPhone 13 Pro is never far from me.

Like most people nowadays, I don’t upgrade my phone every year or even two. Phones have reached a point where they are good at performing daily tasks for three or four years. And most phone cameras are sufficient for capturing everyday special moments to post on social media or share with friends.

But maybe, like me, you’re in the mood for something shiny and new like the iPhone 15 Pro Max. I wanted to find out how my 2-year-old iPhone 13 Pro and its 3x optical zoom would do against the 15 Pro Max and its new 5x optical zoom. And what better place to take them than on an epic adventure to Yosemite, one of the crown jewels of America’s National Park System and an iconic destination for outdoor lovers?

Yosemite is absolutely, massively impressive.

The main camera is still the best camera

The iPhone 15 Pro Max’s main camera, with its wide angle lens, is the most important camera on the phone. It has a new, larger 48-megapixel sensor, and it had no problem serving as my daily workhorse for a week.

The larger sensor means the camera can now capture more light and render colors more accurately. And the improvements are visible: photos look richer not only in bright light but also in low-light scenarios.

In the images below, taken at sunrise at Tunnel View in Yosemite National Park, notice how the 15 Pro Max’s photo has better fidelity, color and contrast in the foreground leaves. Compare that against the pronounced edge sharpening of the mountaintops in the 13 Pro image.

The 15 Pro Max’s camera captures excellent detail in bright light, including more texture, like in rocky landscapes, more detail in the trees and more fine-grained color.

A new 15 Pro Max feature aimed at satisfying a camera nerd’s creative itch uses the larger main sensor combined with the A17 Pro chip to turn the 24mm-equivalent wide angle lens into essentially four lenses. You can switch the main camera between 1x, 1.2x, 1.5x and 2x, the equivalent of 24mm, 28mm, 35mm and 50mm prime lenses, four of the most popular prime focal lengths. In reality, the 15 Pro Max crops the sensor and uses some clever processing to correct lens distortion.
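The arithmetic behind those four "lenses" is simple to sketch. The short Python example below assumes a plain center crop of the 24mm-equivalent main camera and ignores the distortion correction and other processing Apple applies; the function name `crop_equivalent` is purely illustrative, not anything from Apple.

```python
# Sketch of how a center crop of the main sensor maps to an "equivalent" focal length.
# Assumption: a plain center crop of the 24mm-equivalent main camera. Apple's real
# pipeline also corrects lens distortion and applies other processing not modeled here.

MAIN_EQUIV_MM = 24  # 35mm-equivalent focal length of the 1x main camera


def crop_equivalent(zoom: float, base_mm: float = MAIN_EQUIV_MM) -> tuple[float, float]:
    """Return (equivalent focal length in mm, fraction of sensor area used) for a center crop."""
    # Cropping by a factor z narrows the field of view like a lens z times longer.
    focal_mm = base_mm * zoom
    # Width and height each shrink by 1/z, so the area used shrinks by 1/z^2.
    area_fraction = 1 / zoom ** 2
    return focal_mm, area_fraction


for z in (1.0, 1.2, 1.5, 2.0):
    mm, frac = crop_equivalent(z)
    print(f"{z:.1f}x -> {mm:.1f}mm equivalent, {frac:.0%} of the sensor area")
```

Under this simple-crop assumption, 1.2x, 1.5x and 2x work out to roughly 28.8mm, 36mm and 48mm, which line up with the familiar 28mm, 35mm and 50mm labels. The 2x setting uses a quarter of the 48-megapixel sensor, or about 12 megapixels’ worth of it.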

In use, it’s nice to have these crop options, but for most people they will likely be of little interest.

I find the 15 Pro Max’s native 1x view a little wide and enjoy being able to change it to default to 1.5x magnification. I went into Settings, tapped on Camera, then on Main Camera and changed the default lens to a 35mm look. Now, every time I open the camera, it’s at 1.5x and I can just focus on framing and taking the photo instead of zooming in.

Another nifty change that I highly recommend is to customize the Action button so that it opens the camera when you long press it. The Action button replaces the switch to mute/silence your phone that has been on every iPhone since the original. You can program the Action button to trigger a handful of features or shortcuts by going into the Settings app and tapping Action button. Once you open the camera, the Action button can double as a physical camera shutter button.

The dynamic range and detail are noticeably better in photos I took with the 15 Pro Max main camera in just about every lighting condition.

There are fewer blown-out highlights and nicer, blacker blacks with less noise. In particular, there is more tonal range and detail in the whites. I noticed this most in how the 15 Pro Max captured direct sunlight on climbers and the shadow detail in the rock formations.


Overall, the 15 Pro Max’s main camera is simply far better and more consistent at exposure than the 13 Pro’s.

The iPhone 15 Pro Max 5x telephoto camera

The iPhone 15 Pro Max has a 5x telephoto camera with an f/2.8 aperture and an equivalent focal length of 120mm.

The 13 Pro’s 3x camera, introduced in 2021, was a huge step up from previous models and still gives zoomed-in images a cinematic feel from the lens’ depth compression. The 15 Pro Max’s longer telephoto lens, combined with a larger sensor, accentuates those cinematic qualities even further, resulting in images with a rich array of color and a wider tonal range.

All this translates to a huge improvement in light capture and a noticeable step up in image quality for the iPhone’s zoom lens.

I found that the 15 Pro Max’s telephoto camera yields better photos of subjects farther away like mountains, wildlife and the stage at a live concert.

A combination of optical stabilization and 3D sensor-shift makes the 15 Pro Max’s upgraded telephoto camera easier to use by steadying the image capture. A longer lens typically means there’s a greater chance of blurred images due to your hand shaking, because such a long focal length magnifies every little movement of the camera.

I found that the 3D sensor-shift optical image stabilization system does wonders for shooting distant subjects and minimizing that camera shake.

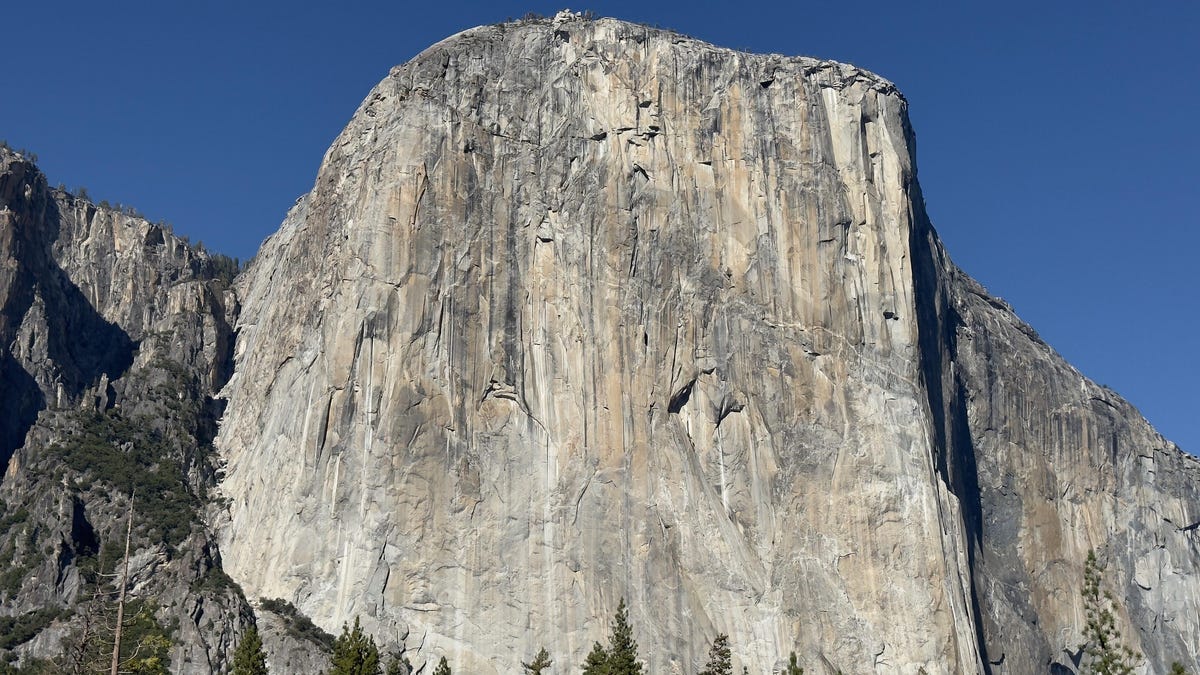

The image below was shot with the 5x zoom on the iPhone 15 Pro Max looking up the Yosemite Valley from Tunnel View. It is an incredibly crisp telephoto image.

For reference, the image below was shot on the 15 Pro Max from the same location using the ultra wide lens. I was about five miles away from that V-shaped dip at the end of the valley.

The iPhone still suffers from lens flare

Lens flares, along with the green dot that seems to be in all iPhone images taken into direct sunlight, continue to be an issue on the iPhone 15 Pro Max despite the new lens coatings.

Apple says the main camera lens has been treated to reduce glare, but I didn’t notice any improvement. In some cases, images show even more pronounced lens flare than photos from previous iPhone models.

Notice the repeated halo effect surrounding the sun on the images below shot at Lower Yosemite Falls.

The 15 Pro Max and Smart HDR 5

The 15 Pro Max’s new A17 Pro chip brings greater computational power, and its image processing (which Apple calls Smart HDR 5) delivers more natural-looking images compared with the 13 Pro, especially in very bright and very dark scenes. Color handling is noticeably better and more subtle, with a less heavy-handed approach that balances brightening the shadows against darkening the highlights.

You can clearly see the warmer, more natural-looking light in the 15 Pro Max photo below, which pushes back against the blue cast common in over-processed HDR images. At the same time, Apple’s implementation hasn’t swung too far in the opposite direction and refrains from oversaturating oranges, a problem that frequently plagues digital corrections on phones.

Coming from an iPhone 13 Pro Max, I noticed that the background corrections made during computational processing on the 15 Pro Max tend to produce more subtle, balanced images. Apple appears to have dialed back the in-your-face computational photography of the 13 Pro and fine-tuned the 15 Pro Max’s image pipeline to lean toward a more realistic reflection of your subject.

It’s a welcome change.

The 15 Pro Max shines in night mode

Night mode shots from the 15 Pro Max look similar to the ones from my 13 Pro Max, but there are minor improvements in the exposure that result in images with a better tonal range. The 15 Pro Max’s larger main camera sensor captures photos with less noise in the blacks and a better overall exposure compared to the 13 Pro Max.

Colors in 15 Pro Max night mode images appear more accurate and realistic, and the images have a wider dynamic range. Notice the detail in the photo below of El Capitan and the Dawn Wall. The 15 Pro Max even captures detail in the lights of cars snaking along the road on the valley floor.

Overall, night mode images continue to look soft and over-processed. Night mode gives snaps a dream-like vibe, and that isn’t necessarily a bad thing. Still, these photos are brighter and have less image noise than those shot on my iPhone 13 Pro Max.

15 Pro Max vs. 13 Pro Max: the bottom line

By this point, it should be no surprise that the iPhone 15 Pro Max’s cameras are a significant improvement over the ones on the 13 Pro Max. If photography is a priority for you, I recommend upgrading to it from the 13 Pro Max or earlier.

If you’re coming from an iPhone 14 Pro, the improvements seem less dramatic, and it’s likely not worth the upgrade. I’m incredibly excited to continue carrying the iPhone 15 Pro Max in my pocket, whether to Yosemite or just around my home.


The Impressive Bowers & Wilkins PX7 S2e Bluetooth Headphones Drop Below $300

You can save more than $100 on these high-end, previous-gen Bowers & Wilkins headphones.

Bowers & Wilkins headphones are among the best, and right now you can grab the Bowers & Wilkins PX7 S2e Bluetooth headphones for only $297. That’s a nice $102 discount and just $3 more than the lowest price we’ve seen. But this deal won’t last long, so be quick to lock in this price.

The newer PX7 S3 headphones have earned a place on our best headphones roundup, but they also cost $479. If you aren’t worried about having the latest model, you can take advantage of price drops on previous-gen models like this one.

Our audio expert, David Carnoy, appreciated the original PX7 S2 headphones when they debuted, noting their comfortable fit, sound quality, noise cancellation and voice-calling performance. The PX7 S2e is an updated version with refined internal tuning and more controlled bass, but it keeps the same design and offers up to 30 hours of playback, just like the original.

Not quite what you’re looking for? Check out all of our best headphone deals for more options.


Why this deal matters

Any chance to save over $100 on a pair of high-end headphones is a deal worth paying attention to. This model isn’t the current flagship, but it still offers exceptional sound and comfort, and now you can score a pair for under $300.


Watch Out, Meta. I Tried Alibaba’s Qwen Smart Glasses and They’re Mega Impressive

These AI-focused smart glasses are available now in China but will roll out internationally later this year.

Mobile World Congress in Barcelona might be a European tech show, but for the past few years, the event has largely been dominated by Chinese phone companies such as Xiaomi and Honor. This year, they were joined by tech giant Alibaba, which launched its Qwen smart glasses at the show. Having tried them, all I have to say is: Meta should watch its back.

The Qwen glasses are among the first wearable devices Alibaba is building on top of its Qwen AI family of large language models, and the company brought two different models to MWC.

The first pair, the Qwen S1 specs, have a heads-up waveguide display etched into the lenses and serve as a rival to Meta’s Ray-Ban Display model (minus the gesture control). My first impression of these AR glasses was that they were light and comfortable to wear; I wouldn’t have known that they were smart glasses by their weight alone. At the end of each arm are swappable batteries, which snap off easily so you can keep the glasses running for longer when you’re on the go.

I activated the glasses with the phrase “Hey Qwennie,” which they picked up with their five microphones. I then asked them to complete a range of basic tasks, including taking a photo and telling me what I was looking at when I held a photo of Barcelona’s Sagrada Familia in front of my face.

I could see a miniature version of the photo I’d captured on the green display, and the glasses were able to answer my architectural query both by displaying text in the heads-up display and through the bone-conduction speakers built into the arms of the S1. Perhaps my favorite feature, though, was turn-by-turn directions, which felt like they could become essential for navigating a busy city and far more convenient than using a phone or smartwatch.

I also tried out the teleprompter feature, which scrolled as I read aloud from the text on the display, but I must confess I didn’t find it quite as easy to follow as a similar demo I tried earlier in the week on the MemoMind One glasses. When the Qwen booth assistant spoke to me in Chinese, I could see and hear the English translation of her words on the display and in my ear simultaneously, although the delay was long enough to keep our conversation from flowing entirely smoothly.

The second pair of glasses Alibaba brought to the show were the Qwen G1 glasses, which lack the heads-up display present on the S1 but otherwise offer pretty much the same features thanks to the microphones, cameras and bone-conduction audio.

On the whole, I was impressed by the look, feel, sound quality and capabilities of these glasses, which for many people might be their first introduction to Alibaba’s Qwen AI (by way of the Qwen App, which is integrated with the specs). In China, where preorders for the glasses are already live, people wearing the glasses will be able to complete tasks such as ordering food or hailing a cab completely hands free.

Alibaba said pricing for the G1 glasses will start at around $275 (for comparison, Meta’s Ray-Ban Gen 2 glasses cost $379), but didn’t say how much the more advanced S1 glasses will cost. Official sales in China will commence on March 8, with Alibaba promising an international rollout featuring integration with popular global services scheduled for an unspecified date later in 2026.


Softness and Brightness Blend to Stunning Effect in TCL’s Nxtpaper AMOLED Phone Display

An anti-glare screen that’s still radiant and vivid? Sign me up.

I’ve always been impressed with TCL’s easy-to-read Nxtpaper technology. Sitting somewhere between E Ink and a more traditional screen with built-in anti-glare tech, there’s a softness both to the look and feel of a Nxtpaper display that makes it a real pleasure to use.

But if I were asked whether I’d be happy to replace my regular phone with one that had an LCD Nxtpaper display, the answer has always been no, for one simple reason: brightness. The vivid colors we’re accustomed to on most phone screens tend to look dull on Nxtpaper, and I just wouldn’t be willing to compromise on radiance, in spite of the many good qualities Nxtpaper brings to the table.

Until now, that is. Among the cool phones and weird tech on display at Mobile World Congress 2026, I saw a Nxtpaper phone that might have changed my perspective. TCL showed off an upgraded AMOLED version of Nxtpaper that stopped me in my tracks. It blended the luminosity of AMOLED with the softness of Nxtpaper to stunning effect, in a way that would genuinely make me reconsider my stance on owning a Nxtpaper phone.

The screen offers 3,200 nits of brightness and has a circular polarization rate of 90%, which means its light closely resembles natural light. TCL has managed to reduce blue light emission to as low as 2.9%, and the display dynamically adjusts brightness and color temperature in tune with the body’s natural circadian rhythms.

The one drawback I can see for using Nxtpaper on a phone screen is that it might not be ideal for taking, viewing and editing photos. In my brief demo at MWC, I took a selfie and noticed the colors didn’t look especially true to life. But it’s important to note that TCL is still developing this technology, so it remains a work in progress and my brief time using it likely won’t be an accurate reflection of a final product.

In all, this is a real leap forward for Nxtpaper. Although TCL hasn’t announced any devices featuring the technology yet, it likely will in due course. I’d personally like to see it on a laptop: since I spend all day staring at my screen reading and writing, that seems like the perfect application of this tech. I can’t wait to see where it ends up.
