Technologies

Crackdown on Netflix Password Sharing: What It Means for You

It all boils down to choice.

If you’re sharing your Netflix password with friends or family, it’s time to make a choice: Do you want to pay extra, or politely boot them from your account?

Netflix has rolled out account-sharing changes to US customers who are sharing passwords with anyone outside their household. Subscribers with either a standard or premium plan can choose to pay an extra $8 per month for each additional member.

However, there are limits to how many extra users are allowed. Premium subscribers ($20 a month) can add two extra people to their account while those on the standard plan ($15.50 a month) are only allowed one extra member. Netflix defines a household as one where everyone lives under the same roof. Members of that household are still able to watch content while traveling, and the extra fee will not apply. At this time, the extra member option is only available for those who are billed directly by Netflix.
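To see how the fee adds up, here is a minimal sketch of the monthly bill under the prices and limits quoted above. The names `PLANS`, `EXTRA_MEMBER_FEE` and `monthly_total` are made up for illustration and are not part of any Netflix tool or API.

```python
# Prices and extra-member limits as quoted in the article.
# All names here are hypothetical and used only for illustration.
PLANS = {
    "standard": {"monthly_price": 15.50, "max_extra_members": 1},
    "premium": {"monthly_price": 20.00, "max_extra_members": 2},
}
EXTRA_MEMBER_FEE = 8.00  # per extra member, per month


def monthly_total(plan: str, extra_members: int) -> float:
    """Return the monthly bill for a plan with the given number of extra members."""
    if plan not in PLANS:
        raise ValueError(f"{plan!r} does not offer extra members")
    limit = PLANS[plan]["max_extra_members"]
    if not 0 <= extra_members <= limit:
        raise ValueError(f"{plan} allows at most {limit} extra member(s)")
    return PLANS[plan]["monthly_price"] + EXTRA_MEMBER_FEE * extra_members


print(monthly_total("standard", 1))  # 23.5
print(monthly_total("premium", 2))   # 36.0
```

In other words, a standard subscriber who adds one person would pay $23.50 a month, and a premium subscriber who fills both extra slots would pay $36.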

How to add or remove extra users

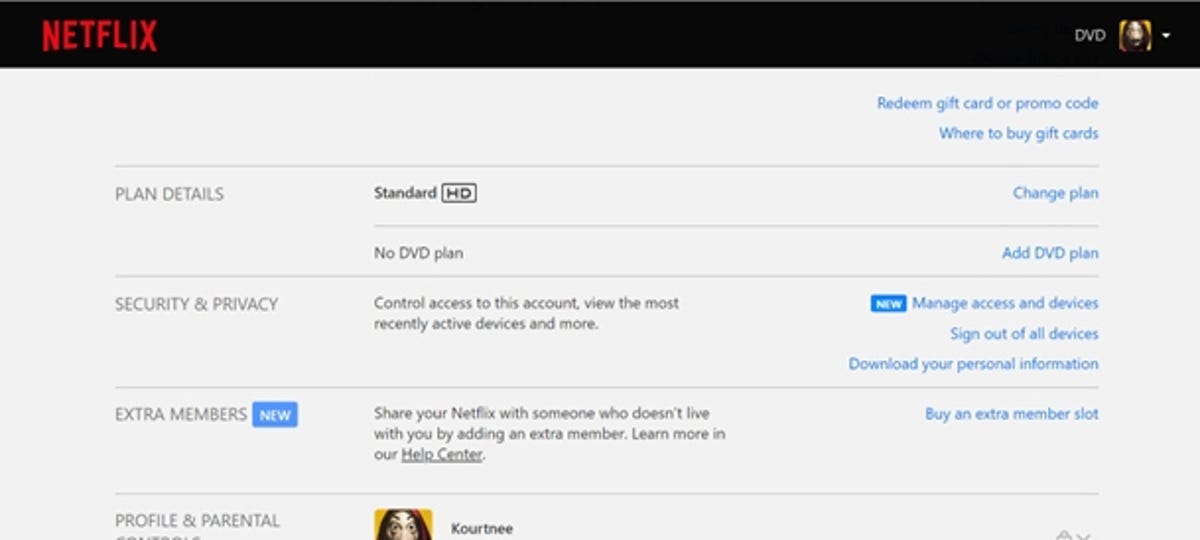

When you open the Netflix app and navigate to your account page, you’ll see an Extra Members option. From there, subscribers can purchase a slot for the person outside their household. If they accept the invitation, the extra member will receive their own separate account, profile and password, and the fee is paid for by the main subscribing household.

The rules? Extra member accounts can only stream on one device at a time and are only permitted to have one profile. The extra member must also be located in the same country as the account holder.

A peek at where to find Extra Members on your account page.

Subscribers can also opt to remove users outside of their households from their account, and then urge them to sign up for their own Netflix subscriptions. In this case, anyone who is removed from an account can transfer existing profiles to a new membership they pay for themselves.

Here’s a look at the monthly cost for each subscription plan:

Netflix plans

| | Basic with ads | Basic no ads | Standard | Premium |
|---|---|---|---|---|
| Monthly price | $7 | $10 | $15.50 | $20 |
| Number of screens you can watch at the same time | 1 | 1 | 2 | 4 |
| Number of phones or tablets you can have downloads on | 0 | 1 | 2 | 4 |
| HD available | No | Yes | Yes | Yes |
| Ultra HD available | No | No | No | Yes |

The streaming service rolled out its new policy in February for Canada, Spain, Portugal and New Zealand. Netflix first announced its intention to crack down on password-sharing last year. In April, Netflix said it would implement a fee for US customers by the end of the second quarter, i.e. the end of June, but the changes took effect in late May.

Smartphone vs. Dumb Phone: Why People Are Going Basic

Here’s how to ditch your smartphone for a dumb phone. It’s digital detox done right.

Over the past couple of decades, smartphones have become an integral part of our lives. According to the Pew Research Center, about 91% of Americans own a smartphone. Statista reports that the number of global smartphone owners is estimated to reach 6.1 billion in 2029. For many, a smartphone is an always-on internet device that keeps us connected to the world.

But there are also significant downsides to having one. If you’re concerned about how much time you spend on your phone, you aren’t alone: Some people feel addicted to their smartphones, checking their email and social media feeds hundreds of times a day. Perhaps you find yourself doomscrolling through the news or wasting time on mindless apps and games rather than being productive at work or spending quality time with your family. Sure, you could simply limit your screen time, but that takes willpower that you might not have.

The rise in this obsessive behavior toward smartphones explains the resurgence of so-called dumb phones in recent years. Sometimes referred to as feature phones, dumb phones are essentially stripped-down cellular devices that lack the bells and whistles of modern smartphones. Some only let you call and text, while others have a few more features such as a camera or a music player. Dumb phones typically offer only the most basic of features, minimal internet and that’s about it.

If that intrigues you, read on. In this guide, we’ll highlight the different kinds of dumb phones on the market, what you should look for when shopping for one, and whether a dumb phone is even right for you.

The differences between a dumb phone and a smartphone

A smartphone is essentially a tiny computer in your pocket. A dumb phone lacks the apps and features that smartphones have. More advanced dumb phones, or “feature phones,” offer a camera and apps like a calendar or a music player. Some even have minimal internet connectivity.

Many dumb phones are reminiscent of handsets with physical buttons from decades ago. Others have a T9 keypad, where you press the number keys that carry the letters you want and the phone “predicts” the word. There are even feature phones with touchscreens and more modern interfaces.

What should you look for when getting a dumb phone?

The dumb phone that’s best for you will depend on the reason you’re getting it. Do you want to go without internet access entirely and do it cold turkey? Then, perhaps a basic phone is what you want. Basic phones are also great if you just want a secondary emergency backup handset. Do you want at least some functionality, like Wi-Fi hotspot capabilities or navigation directions? Then look into “smarter” dumb phones that have those features.

Alternatively, if you think you still need certain smartphone apps like WhatsApp or Uber, you could look into “dumbed down” Android phones with smaller screens and keypads (sometimes called Android dumb phones). They don’t qualify as dumb phones technically, but they’re often seen as an in-between solution for those who can’t quite commit to a lifestyle change.

What are the different kinds of dumb phones on the market?

As more people seek smartphone alternatives, a large number of modern dumb phones have emerged on the market. If you’re on the hunt for one, we recommend using Jose Briones’ excellent Dumbphone Finder, which lets you filter and browse a dizzying array of choices based on your preferences and network provider. We also suggest perusing the r/dumbphones subreddit, where you’ll find a community of dumb phone enthusiasts who can assist you in your dumb phone journey.

Here are a few different kinds of dumb phones that caught our attention.

Smarter dumb phones

If you have a tough time letting go of your smartphone, there are a few smarter dumb phones on the market that might be a good gateway into the smartphone-free world. They often have touchscreen interfaces and more features you’d find on smartphones, like a music player or a camera.

Perhaps the smartest dumb phone on the market right now is the Light Phone 3, which has a 3.92-inch OLED screen and a minimalist black-and-white aesthetic. Its features include GPS for directions, Bluetooth, a fingerprint sensor, Wi-Fi hotspot capabilities, a flashlight, a 50-megapixel rear camera, an 8-megapixel front-facing camera and a music player. It also has 5G support, which is something of a rarity among dumb phones.

However, it’s expensive at around $700, which is almost the same price as a higher-end smartphone. Light also sells the Light Phone 2, which lacks cameras and a flashlight, but it’s much cheaper at $300 (about the price of a midrange smartphone). It uses an E Ink screen instead of OLED, and some reviews have said that its texting speed is pretty slow.

Another touchscreen phone that’s similar to an e-reader and is fairly popular with the dumb phone community is the Mudita Kompakt. It has wireless charging, an 8-megapixel camera, GPS for directions, a music player, an e-reader and basic apps including weather, a calendar and more.

Barebones phones

On the other hand, if you’re ready for a full digital detox, then you could consider just a basic phone that lets you call, text and not much else. Simply harken back to the phones of decades ago and you’ll likely find one that fits that description.

One of the major brands still making basic phones is HMD Global, which also makes Nokia-branded handhelds like the Nokia 3210 and the Nokia 2780 Flip. HMD makes its own line of phones too, such as the iconic pink Barbie phone, complete with a large Barbie logo emblazoned on the front. It even greets you with a cheerful “Hello Barbie” each time it powers on. We should note, however, that HMD has said it’s exiting the US, so the only way to get one in the near future might be through a third-party reseller.

There are still basic phones being sold in the US. The Punkt MP02 is one of the more interesting models, thanks in part to its unique slim design and clicky buttons. You can even send messages via Signal with it, though you’ll have to text via the old-fashioned T9 method.

Android dumb phones

Some dumb phone purists might argue that anything Android doesn’t belong in this list, but if your main goal in quitting your phone is to be free of the social media algorithm, then perhaps a scaled-down smartphone is a good halfway point for you. A couple of examples are the Unifone S22 Flip phone (formerly the CAT S22 Flip phone) and the Doov R7 Pro candy bar (available outside of the US), both of which are Android handsets but have traditional cell phone designs. (The Unifone S22 Flip runs Android Go, a simplified version of Android.)

This way, you still have access to your “must-have” apps, and might be able to better withstand the temptation of social media because of their tiny size and shape (or at least that’s the theory).

Should you buy an old or used dumb phone? Will it work on a carrier’s 5G network?

There’s nothing wrong with buying an old or used dumb phone, but you should make sure that it works with your cellphone network. Not all phones work with all networks, and certain carriers in the US aren’t compatible with every device, so check their restrictions. AT&T, for example, has a whitelist of permitted devices and you generally can’t use something that isn’t on that list.

As for 5G support, that’s pretty rare when it comes to dumb phones, mostly because they often don’t really need it (they typically won’t see the benefit of faster data speeds, for example). Some, however, do have 5G support, such as the Light Phone 3, the Sonim XP3 Plus 5G and the TCL Flip 4. If 5G support is important to you due to network congestion concerns, then that’s something you can keep an eye out for.

What if I’m not able to give up my smartphone just yet?

Maybe you need your smartphone for work or emergencies, or maybe you just don’t find any of the existing dumb phones all that appealing. If you don’t mind exercising your willpower, there are existing “wellness” tools on both Android and iOS that could help limit your screen time by allowing you to set app timers or downtime modes.

You could also disable or uninstall your most addictive apps and use parental control tools to limit your screen time. Last but not least, there are several apps and gadgets designed to help you cut back on doomscrolling, like the Brick and the Unpluq tag.

Today’s NYT Mini Crossword Answers for Friday, Nov. 28

Here are the answers for The New York Times Mini Crossword for Nov. 28.

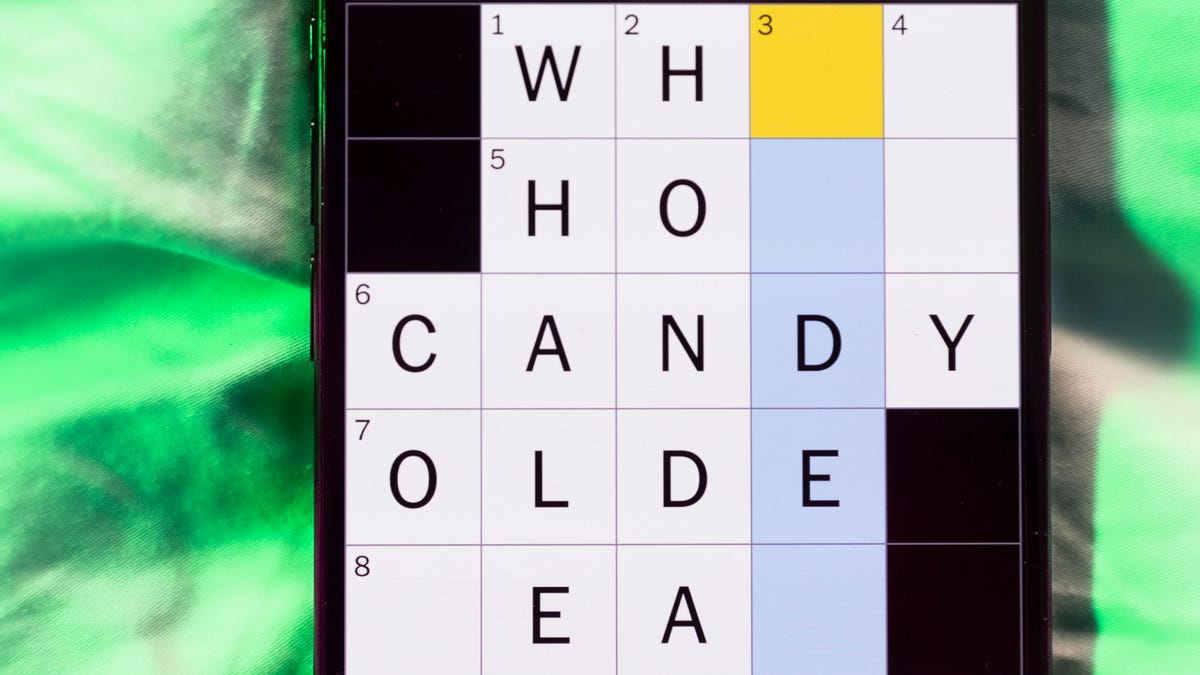

Happy Black Friday — and that’s a fitting theme for today’s Mini Crossword. Read on for the answers. And if you could use some hints and guidance for daily solving, check out our Mini Crossword tips.

If you’re looking for today’s Wordle, Connections, Connections: Sports Edition and Strands answers, you can visit CNET’s NYT puzzle hints page.

Let’s get to those Mini Crossword clues and answers.

Mini across clues and answers

1A clue: Major tech purchases on Black Friday

Answer: TVS

4A clue: Hit the mall

Answer: SHOP

5A clue: When many arrive at stores on Black Friday

Answer: EARLY

6A clue: “Buy one, get one ___”

Answer: FREE

7A clue: Clichéd holiday gift for dad

Answer: TIE

Mini down clues and answers

1D clue: Number of days that the first Thanksgiving feast lasted

Answer: THREE

2D clue: Small, mouselike rodent

Answer: VOLE

3D clue: Intelligence bureau worker

Answer: SPY

4D clue: Traditional garment worn at an Indian wedding

Answer: SARI

5D clue: Movement of money between accounts, for short

Answer: EFT

Today’s NYT Connections: Sports Edition Hints and Answers for Nov. 28, #431

Here are hints and the answers for the NYT Connections: Sports Edition puzzle for Nov. 28, No. 431.

Today’s Connections: Sports Edition is a pretty tough one. If you’re struggling with today’s puzzle but still want to solve it, read on for hints and the answers.

Connections: Sports Edition is published by The Athletic, the subscription-based sports journalism site owned by The Times. It doesn’t appear in the NYT Games app, but it does in The Athletic’s own app. Or you can play it for free online.

Hints for today’s Connections: Sports Edition groups

Here are four hints for the groupings in today’s Connections: Sports Edition puzzle, ranked from the easiest yellow group to the tough (and sometimes bizarre) purple group.

Yellow group hint: Shoes.

Green group hint: Think Olympics.

Blue group hint: Kick the ball.

Purple group hint: Family affair.

Answers for today’s Connections: Sports Edition groups

Yellow group: Basketball sneaker brands.

Green group: First words of gymnastics apparatus.

Blue group: Women’s soccer stars.

Purple group: Basketball father/son combos.

What are today’s Connections: Sports Edition answers?

The yellow words in today’s Connections

The theme is basketball sneaker brands. The four answers are Adidas, Jordan, Nike and Under Armour.

The green words in today’s Connections

The theme is first words of gymnastics apparatus. The four answers are balance, parallel, pommel and uneven.

The blue words in today’s Connections

The theme is women’s soccer stars. The four answers are Bonmatí, Girma, Marta and Rodman.

The purple words in today’s Connections

The theme is basketball father/son combos. The four answers are Barry, James, Pippen and Sabonis.
