Technologies

Razer Kiyo Pro Ultra Review: It Comes So Close to Greatness

The 4K streaming-optimized webcam can deliver excellent quality compared to current competitors, but it can also be just a little too glitchy.

My initial reaction to the Razer Kiyo Pro Ultra’s video was «Wow! Finally a webcam as good as a compact vlogging camera.» My reaction after trying to adjust the settings, especially when using it with a third-party application, was «I’m going to reach through my screen and punch you now.» Seriously: Razer’s Synapse software is the only thing preventing this $300 (£300, AU$500) 4K webcam for streamers and power videoconferencers from getting an Editors’ Choice award.

Synapse is the only way to control most of the settings, so it can make or break your experience. If you don’t need to change settings other than zoom, focus or white balance very often (those are available via the Windows driver), you’ll probably be OK. Synapse 3 doesn’t run on the Mac, though, so the webcam isn’t well suited to that platform.

Like

- Excellent quality and performance

- Nice built-in lens cover design

- Has a relatively large number of adjustable settings that compensate for issues other cameras have

Don’t Like

- Synapse control of camera is glitchy and the camera occasionally hangs when changing settings

- You can only change settings when Synapse has exclusive control of the camera

The Kiyo Pro Ultra’s closest competitor would have been the Elgato Facecam Pro, which no longer seems to be available anywhere despite shipping in November 2022. (It used a previous generation of the Sony Starvis sensor, and it’s always possible that it’s being reworked with the newer sensor.)

That camera supported 4K at 60fps, while the Razer tops out at 30fps at 4K (it can do 60fps at 1080p and lower). Otherwise, the Razer shares many of the same strengths, including manual exposure controls, user presets and other settings that help you tweak the quality of your output, such as MJPEG quality (for streaming at 1440p or 4K), the ability to meter off your face in autoexposure mode (important if you’re off center) and lens distortion compensation.

While it looks similar to its Kiyo siblings, the Pro and the X (on our list of the best webcams we’ve tested), it has something I’ve wanted for a while: a built-in lens cover. Razer cleverly incorporated it as an iris that closes when you rotate the outer ring.

Top marks for quality and performance

When it’s good, the Kiyo Pro Ultra is great. It incorporates a 1/1.2-inch Sony Starvis 2 sensor, which is just a bit smaller than the 1-inch sensor in compact vlogging cameras like the Sony ZV-1 but loads bigger than the sensors in other webcams, and it pairs that sensor with a wide f1.7 aperture.

The larger sensor and aperture mean it shows perceptible depth-of-field blur. Its field of view is narrower than that of many webcams, though: only up to 82 degrees (72 degrees with distortion correction on) rather than 90 degrees or more, which may or may not suit your setup.

The Ultra displays excellent tonal range for what it is, though it falls short in handling bright areas; I think that needs some software tweaking. It has the typical HDR option, but in a backlit shot, with or without a properly exposed foreground, it didn’t help tame the blown-out highlights in the background. There are toggles for both dark and light rooms, but neither seemed to make a perceptible difference. I’ve had other cameras handle this better.

It meters properly, for the most part. Center metering works best if you’re in the center; face metering overexposes oddly unless you tweak the exposure compensation. But if you lean to the side, face metering keeps the exposure from spiking when the camera sees your black chair instead of your face. White balance is very good as long as you’re not in too dark an environment, and even then it’s not bad. Nor does it lose a lot of color saturation.

You can toggle a couple of noise reduction settings and they do make a significant difference in low light. The distortion compensation makes a visible difference as well.

Standard autofocus is meh, as it is on all the other webcams. But there are several settings, which other webcams lack, to mitigate the frequent hunting. Face autofocus does a good job of keeping it from hunting when you move your head, and there’s a «stylized lighting» setting that helps the AF system lock on when the lighting might otherwise confuse it.

The camera handles some of the image processing that might otherwise fall to the PC, notably the MJPEG compression of the stream you’re sending. You can let it decide how aggressively to compress automatically, or set the level yourself on a performance-to-quality continuum.

Still needs some baking

Unfortunately, it’s still just a little too glitchy, and the software unintentionally holds it back. You can’t access any of the settings in Synapse (most notably resolution/frame rate and manual exposure, meaning ISO and shutter speed) unless camera preview is enabled. And Windows only allows one application to access a camera at a time.

So, for example, if you’ve accidentally left the resolution at 4K but need it to be 1080p in OBS, you first have to deactivate the camera in OBS. (Thankfully, OBS has that option; Nvidia Broadcast doesn’t.) Then you jump over to Synapse, turn on preview, change the resolution, turn off preview, jump back to OBS and reactivate the camera. And resolution, among other settings, doesn’t seem to be saved as part of the profiles you can create.

Doing it once isn’t that much of a problem. After the 10th time in an hour it gets old.

It’s also complicated by the occasional failure of settings to take effect, which sometimes forces you back through that activate-deactivate cycle: Why does my adjusted exposure not look adjusted? Do I have to kick it to get autofocus to engage? The preview in Synapse isn’t always accurate, either. That’s not unique to Synapse, but it means you can’t assume your adjustments there will be correct. Synapse also froze several times while I was trying to swap between profiles.

Almost every other reasonable webcam utility lets you change settings while viewing the feed in the application you actually need them for. Yes, sometimes a few are disabled (because Windows), but at least they’re not all unavailable. It’s possible that all these issues can be ameliorated with firmware and software patches, but I’ve learned never to assume that just because they can be, they will be.

The Razer Kiyo Pro Ultra is a capable webcam that just needs some software and firmware polish before I’m comfortable considering it a reliable, consistent performer.

The best laptops in every category

- Best Laptop for 2023

- Best Windows Laptops

- Best Laptop for College

- Best Laptop for High School Students

- Best Budget Laptop Under $500

- Best Dell Laptops

- Best 15-Inch Work and Gaming Laptops

- Best 2-in-1 Laptop

- Best HP Laptops

- Best Gaming Laptop

- Best Cheap Gaming Laptop Under $1,000

- Best Chromebook: 8 Chromebooks Starting at Under $300

Technologies

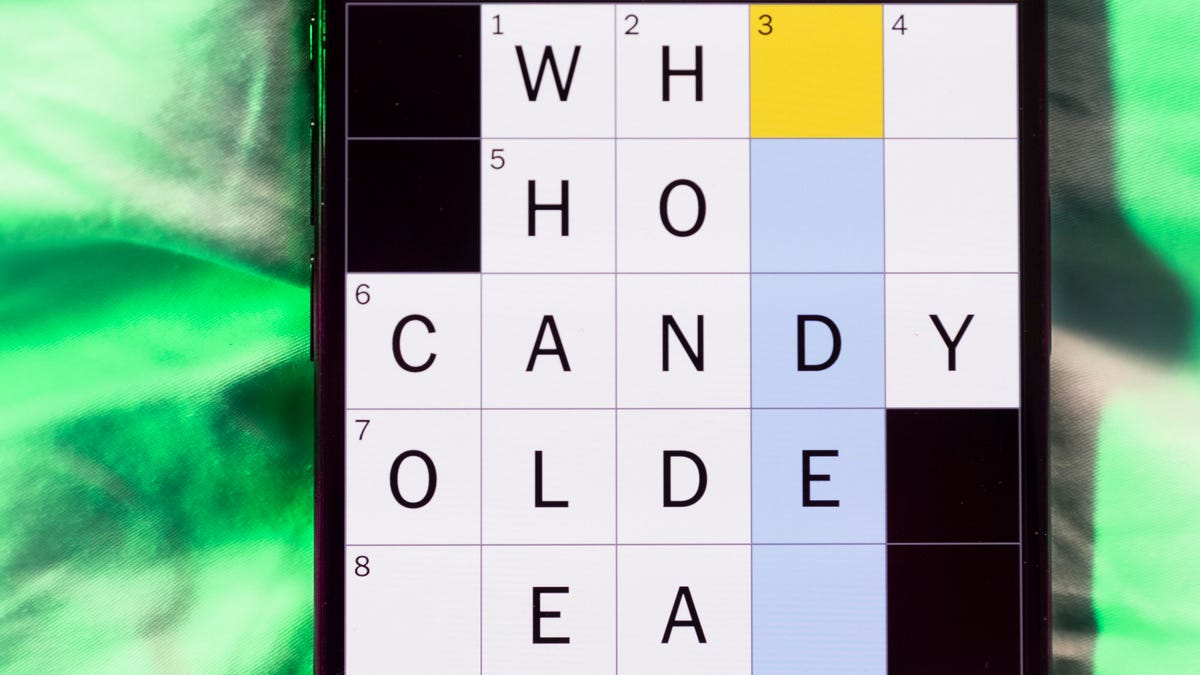

Today’s NYT Mini Crossword Answers for Saturday, Feb. 21

Here are the answers for The New York Times Mini Crossword for Feb. 21.

Need some help with today’s Mini Crossword? It’s the long Saturday version, and some of the clues are stumpers. I was really thrown by 10-Across. Read on for all the answers. And if you could use some hints and guidance for daily solving, check out our Mini Crossword tips.

If you’re looking for today’s Wordle, Connections, Connections: Sports Edition and Strands answers, you can visit CNET’s NYT puzzle hints page.

Let’s get to those Mini Crossword clues and answers.

Mini across clues and answers

1A clue: «Jersey Shore» channel

Answer: MTV

4A clue: «___ Knows» (rhyming ad slogan)

Answer: LOWES

6A clue: Second-best-selling female musician of all time, behind Taylor Swift

Answer: MADONNA

8A clue: Whiskey grain

Answer: RYE

9A clue: Dreaded workday: Abbr.

Answer: MON

10A clue: Backfiring blunder, in modern lingo

Answer: SELFOWN

12A clue: Lengthy sheet for a complicated board game, perhaps

Answer: RULES

13A clue: Subtle «Yes»

Answer: NOD

Mini down clues and answers

1D clue: In which high schoolers might role-play as ambassadors

Answer: MODELUN

2D clue: This clue number

Answer: TWO

3D clue: Paid via app, perhaps

Answer: VENMOED

4D clue: Coat of paint

Answer: LAYER

5D clue: Falls in winter, say

Answer: SNOWS

6D clue: Married title

Answer: MRS

7D clue: ___ Arbor, Mich.

Answer: ANN

11D clue: Woman in Progressive ads

Answer: FLO

Today’s NYT Connections: Sports Edition Hints and Answers for Feb. 21, #516

Here are hints and the answers for the NYT Connections: Sports Edition puzzle for Feb. 21, No. 516.

Today’s Connections: Sports Edition is a tough one. I actually thought the purple category, usually the most difficult, was the easiest of the four. If you’re struggling with today’s puzzle but still want to solve it, read on for hints and the answers.

Connections: Sports Edition is published by The Athletic, the subscription-based sports journalism site owned by The Times. It doesn’t appear in the NYT Games app, but it does in The Athletic’s own app. Or you can play it for free online.

Hints for today’s Connections: Sports Edition groups

Here are four hints for the groupings in today’s Connections: Sports Edition puzzle, ranked from the easiest yellow group to the tough (and sometimes bizarre) purple group.

Yellow group hint: Old Line State.

Green group hint: Hoops legend.

Blue group hint: Robert Redford movie.

Purple group hint: Vroom-vroom.

Answers for today’s Connections: Sports Edition groups

Yellow group: Maryland teams.

Green group: Shaquille O’Neal nicknames.

Blue group: Associated with «The Natural.»

Purple group: Sports that have a driver.

What are today’s Connections: Sports Edition answers?

The yellow words in today’s Connections

The theme is Maryland teams. The four answers are Midshipmen, Orioles, Ravens and Terrapins.

The green words in today’s Connections

The theme is Shaquille O’Neal nicknames. The four answers are Big Aristotle, Diesel, Shaq and Superman.

The blue words in today’s Connections

The theme is associated with «The Natural.» The four answers are baseball, Hobbs, Knights and Wonderboy.

The purple words in today’s Connections

The theme is sports that have a driver. The four answers are bobsled, F1, golf and water polo.

Wisconsin Reverses Decision to Ban VPNs in Age-Verification Bill

The law would have required websites to block VPN users from accessing «harmful material.»

Following a wave of criticism, Wisconsin lawmakers have decided not to include a ban on VPN services in their age-verification bill, which is making its way through the state legislature.

Wisconsin Senate Bill 130 (and its sister Assembly Bill 105), introduced in March 2025, aims to prohibit businesses from «publishing or distributing material harmful to minors» unless there is a reasonable «method to verify the age of individuals attempting to access the website.»

One provision would have required businesses to bar people from accessing their sites via «a virtual private network system or virtual private network provider.»

A VPN lets you access the internet via an encrypted connection, enabling you to bypass firewalls and unblock geographically restricted websites and streaming content. When you use a VPN, your IP address and physical location are masked, and your internet service provider can’t see which websites you visit.

Wisconsin state Sen. Van Wanggaard moved to delete that provision from the legislation, thereby releasing VPNs from any liability. The state Assembly agreed to remove the VPN ban, and the bill now awaits Wisconsin Governor Tony Evers’s signature.

Rindala Alajaji, associate director of state affairs at the digital freedom nonprofit Electronic Frontier Foundation, says Wisconsin’s U-turn is «great news.»

«This shows the power of public advocacy and pushback,» Alajaji says. «Politicians heard the VPN users who shared their worries and fears, and the experts who explained how the ban wouldn’t work.»

Earlier this week, the EFF had written an open letter arguing that the draft laws did not «meaningfully advance the goal of keeping young people safe online.» The EFF said that blocking VPNs would harm many groups that rely on that software for private and secure internet connections, including «businesses, universities, journalists and ordinary citizens,» and that «many law enforcement professionals, veterans and small business owners rely on VPNs to safely use the internet.»

VPNs can also help you get around age-verification laws. For instance, if you live in a state or country that requires age verification to access certain material, you can use a VPN to make it look as though you live elsewhere, thereby gaining access to that material. As age-restriction laws spread around the US, VPN use has also increased. However, many people are using free VPNs, which are fertile ground for cybercriminals.

In its letter to Wisconsin lawmakers prior to the reversal, the EFF argued that it is «unworkable» to require websites to block VPN users from accessing adult content. The EFF said such sites cannot «reliably determine» where a VPN customer lives, since that person could be in any US state or even another country.

«As a result, covered websites would face an impossible choice: either block all VPN users everywhere, disrupting access for millions of people nationwide, or cease offering services in Wisconsin altogether,» the EFF wrote.

Wisconsin is not the only state to consider VPN bans to prevent access to adult material. Last year, Michigan lawmakers introduced the Anticorruption of Public Morals Act, which would ban all use of VPNs. If passed, it would force ISPs to detect and block VPN usage and would ban the sale of VPNs in the state. Fines could reach $500,000.