Technologies

Why We’re All Obsessed with the Mind-Blowing ChatGPT AI Chatbot

This artificial intelligence bot can answer questions, write essays, summarize documents and program computers. But deep down, it doesn’t know what’s true.

There’s a new AI bot in town: ChatGPT. Even if you aren’t into artificial intelligence, pay attention, because this one is a big deal.

The tool, from a power player in artificial intelligence called OpenAI, lets you type natural-language prompts. ChatGPT then offers conversational, if somewhat stilted, responses. The bot remembers the thread of your dialogue, using previous questions and answers to inform its next responses. It derives its answers from huge volumes of information on the internet.

ChatGPT is a big deal. The tool seems pretty knowledgeable in areas where there’s good training data for it to learn from. It’s not omniscient or smart enough to replace all humans yet, but it can be creative, and its answers can sound downright authoritative. A few days after its launch, more than a million people were trying out ChatGPT.

But be careful, OpenAI warns. ChatGPT has all kinds of potential pitfalls, some easy to spot and some more subtle.

«It’s a mistake to be relying on it for anything important right now,» OpenAI Chief Executive Sam Altman tweeted. «We have lots of work to do on robustness and truthfulness.» Here’s a look at why ChatGPT is important and what’s going on with it.

And it’s becoming big business. In January, Microsoft pledged to invest billions of dollars into OpenAI. A modified version of the technology behind ChatGPT is now powering Microsoft’s new Bing challenge to Google search and, eventually, it’ll power the company’s effort to build new AI co-pilot smarts into every part of your digital life.

Bing uses OpenAI technology to process search queries, compile results from different sources, summarize documents, generate travel itineraries, answer questions and generally just chat with humans. That’s a potential revolution for search engines, but it’s been plagued with problems like factual errors and unhinged conversations.

What is ChatGPT?

ChatGPT is an AI chatbot system that OpenAI released in November to show off and test what a very large, powerful AI system can accomplish. You can ask it countless questions and often will get an answer that’s useful.

For example, you can ask it encyclopedia questions like, «Explain Newton’s laws of motion.» You can tell it, «Write me a poem,» and when it does, say, «Now make it more exciting.» You can ask it to write a computer program that’ll show you all the different ways you can arrange the letters of a word.
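To give a sense of what that last request involves, here is a minimal Python sketch of a letter-arranging program. It is an illustration written for this article, not ChatGPT output, and the function name `letter_arrangements` is made up for the example.

```python
from itertools import permutations


def letter_arrangements(word: str) -> list[str]:
    """Return every distinct arrangement of the letters in `word`, sorted."""
    # permutations() treats repeated letters as different items, so a set
    # is used to drop the duplicates that appear for words like "see".
    return sorted({"".join(p) for p in permutations(word)})


if __name__ == "__main__":
    for arrangement in letter_arrangements("cat"):
        print(arrangement)
```

A three-letter word with no repeated letters has six arrangements. The count grows factorially, so this brute-force approach only suits short words.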

Here’s the catch: ChatGPT doesn’t exactly know anything. It’s an AI that’s trained to recognize patterns in vast swaths of text harvested from the internet, then further trained with human assistance to deliver more useful, better dialog. The answers you get may sound plausible and even authoritative, but they might well be entirely wrong, as OpenAI warns.

Chatbots have been of interest for years to companies looking for ways to help customers get what they need and to AI researchers trying to tackle the Turing Test. That’s the famous «Imitation Game» that computer scientist Alan Turing proposed in 1950 as a way to gauge intelligence: Can a human conversing with a human and with a computer tell which is which?

But chatbots have a lot of baggage, as companies have tried with limited success to use them instead of humans to handle customer service work. A study of 1,700 Americans, sponsored by a company called Ujet, whose technology handles customer contacts, found that 72% of people found chatbots to be a waste of time.

ChatGPT has rapidly become a widely used tool on the internet. UBS analyst Lloyd Walmsley estimated in February that ChatGPT had reached 100 million monthly users the previous month, accomplishing in two months what took TikTok about nine months and Instagram two and a half years. The New York Times, citing internal sources, said 30 million people use ChatGPT daily.

What kinds of questions can you ask?

You can ask anything, though you might not get an answer. OpenAI suggests a few categories, like explaining physics, asking for birthday party ideas and getting programming help.

I asked it to write a poem, and it did, though I don’t think any literature experts would be impressed. I then asked it to make it more exciting, and lo, ChatGPT pumped it up with words like battlefield, adrenaline, thunder and adventure.

One wacky example shows how ChatGPT is willing to just go for it in domains where people would fear to tread: a command to write «a folk song about writing a rust program and fighting with lifetime errors.»

ChatGPT’s expertise is broad, and its ability to follow a conversation is notable. When I asked it for words that rhymed with «purple,» it offered a few suggestions, then when I followed up «How about with pink?» it didn’t miss a beat. (Also, there are a lot more good rhymes for «pink.»)

When I asked, «Is it easier to get a date by being sensitive or being tough?» ChatGPT responded, in part, «Some people may find a sensitive person more attractive and appealing, while others may be drawn to a tough and assertive individual. In general, being genuine and authentic in your interactions with others is likely to be more effective in getting a date than trying to fit a certain mold or persona.»

You don’t have to look far to find accounts of the bot blowing people’s minds. Twitter is awash with users displaying the AI’s prowess at generating art prompts and writing code. Some have even proclaimed «Google is dead,» along with the college essay. We’ll talk more about that below.

CNET writer David Lumb has put together a list of some useful ways ChatGPT can help, but more keep cropping up. One doctor says he’s used it to persuade a health insurance company to pay for a patient’s procedure.

Who built ChatGPT and how does it work?

ChatGPT is the brainchild of OpenAI, an artificial intelligence research company. Its mission is to develop a «safe and beneficial» artificial general intelligence system or to help others do so. OpenAI has 375 employees, Altman tweeted in January. «OpenAI has managed to pull together the most talent-dense researchers and engineers in the field of AI,» he also said in a January talk.

It’s made splashes before, first with GPT-3, which can generate text that can sound like a human wrote it, and then with DALL-E, which creates what’s now called «generative art» based on text prompts you type in.

GPT-3, and the GPT 3.5 update on which ChatGPT is based, are examples of AI technology called large language models. They’re trained to create text based on what they’ve seen, and they can be trained automatically — typically with huge quantities of computer power over a period of weeks. For example, the training process can find a random paragraph of text, delete a few words, ask the AI to fill in the blanks, compare the result to the original and then reward the AI system for coming as close as possible. Repeating over and over can lead to a sophisticated ability to generate text.
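The fill-in-the-blanks loop described above can be sketched as a toy in Python. Everything here is an assumption for illustration: the names `mask_words`, `toy_model` and `reward` are invented, the "model" just guesses at random, and the step where a real system adjusts itself based on the reward is left out entirely. This is not OpenAI’s training code.

```python
import random

MASK = "____"


def mask_words(words, n_blanks, rng):
    """Hide n_blanks randomly chosen words; return the masked list and the hidden positions."""
    positions = sorted(rng.sample(range(len(words)), n_blanks))
    masked = [MASK if i in positions else w for i, w in enumerate(words)]
    return masked, positions


def toy_model(masked, vocabulary, rng):
    """Stand-in for a language model: fills each blank with a random vocabulary word."""
    return [rng.choice(vocabulary) if w == MASK else w for w in masked]


def reward(original, filled, positions):
    """Fraction of blanks where the guess matches the original word."""
    hits = sum(original[i] == filled[i] for i in positions)
    return hits / len(positions)


if __name__ == "__main__":
    rng = random.Random(0)
    text = "the cat sat on the mat".split()
    masked, positions = mask_words(text, 2, rng)
    guess = toy_model(masked, sorted(set(text)), rng)
    print("masked:", " ".join(masked))
    print("guess: ", " ".join(guess))
    print("reward:", reward(text, guess, positions))
```

In a real system the reward would feed back into the model so that its guesses improve over many repetitions. Here the score is only printed.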

It’s not totally automated. Humans evaluate ChatGPT’s initial results in a process called fine tuning. Human reviewers apply guidelines that OpenAI’s models then generalize from. In addition, OpenAI used a Kenyan firm that paid people up to $3.74 per hour to review thousands of snippets of text for problems like violence, sexual abuse and hate speech, Time reported, and that data was built into a new AI component designed to screen such materials from ChatGPT answers and OpenAI training data.

ChatGPT doesn’t actually know anything the way you do. It’s just able to take a prompt, find relevant information in its oceans of training data, and convert that into plausible sounding paragraphs of text. «We are a long way away from the self-awareness we want,» said computer scientist and internet pioneer Vint Cerf of the large language model technology ChatGPT and its competitors use.

Is ChatGPT free?

Yes, for the moment at least, but in January OpenAI added a paid version that responds faster and keeps working even during peak usage times when others get messages saying, «ChatGPT is at capacity right now.»

You can sign up on a waiting list if you’re interested. OpenAI’s Altman warned that ChatGPT’s «compute costs are eye-watering,» which he estimated at a few cents per response. OpenAI charges for DALL-E art once you exceed a basic free level of usage.

But OpenAI seems to have found some customers, likely for its GPT tools. It’s told potential investors that it expects $200 million in revenue in 2023 and $1 billion in 2024, according to Reuters.

What are the limits of ChatGPT?

As OpenAI emphasizes, ChatGPT can give you wrong answers and can give «a misleading impression of greatness,» Altman said. Sometimes, helpfully, it’ll specifically warn you of its own shortcomings. For example, when I asked it who wrote the phrase «the squirming facts exceed the squamous mind,» ChatGPT replied, «I’m sorry, but I am not able to browse the internet or access any external information beyond what I was trained on.» (The phrase is from Wallace Stevens’ 1942 poem Connoisseur of Chaos.)

ChatGPT was willing to take a stab at the meaning of that expression once I typed it in directly, though: «a situation in which the facts or information at hand are difficult to process or understand.» It sandwiched that interpretation between cautions that it’s hard to judge without more context and that it’s just one possible interpretation.

ChatGPT’s answers can look authoritative but be wrong.

«If you ask it a very well structured question, with the intent that it gives you the right answer, you’ll probably get the right answer,» said Mike Krause, data science director at a different AI company, Beyond Limits. «It’ll be well articulated and sound like it came from some professor at Harvard. But if you throw it a curveball, you’ll get nonsense.»

The journal Science banned ChatGPT text in January. «An AI program cannot be an author. A violation of these policies will constitute scientific misconduct no different from altered images or plagiarism of existing works,» Editor in Chief H. Holden Thorp said.

The software developer site StackOverflow banned ChatGPT answers to programming questions. Administrators cautioned, «because the average rate of getting correct answers from ChatGPT is too low, the posting of answers created by ChatGPT is substantially harmful to the site and to users who are asking or looking for correct answers.»

You can see for yourself how artful a BS artist ChatGPT can be by asking the same question multiple times. I asked twice whether Moore’s Law, which tracks the computer chip industry’s progress increasing the number of data-processing transistors, is running out of steam, and I got two different answers. One pointed optimistically to continued progress, while the other pointed more grimly to the slowdown and the belief «that Moore’s Law may be reaching its limits.»

Both ideas are common in the computer industry itself, so this ambiguous stance perhaps reflects what human experts believe.

With other questions that don’t have clear answers, ChatGPT often won’t be pinned down.

The fact that it offers an answer at all, though, is a notable development in computing. Computers are famously literal, refusing to work unless you follow exact syntax and interface requirements. Large language models are revealing a more human-friendly style of interaction, not to mention an ability to generate answers that are somewhere between copying and creativity.

Will ChatGPT help students cheat better?

Yes, but as with many other technology developments, it’s not a simple black and white situation. Decades ago, students could copy encyclopedia entries and use calculators, and more recently, they’ve been able to use search engines and Wikipedia. ChatGPT offers new abilities for everything from helping with research to doing your homework for you outright. Many ChatGPT answers already sound like student essays, though often with a tone that’s stuffier and more pedantic than a writer might prefer.

Google programmer Kenneth Goodman tried ChatGPT on a number of exams. It scored 70% on the United States Medical Licensing Examination, 70% on a bar exam for lawyers, nine out of 15 correct on another legal test, the Multistate Professional Responsibility Examination, 78% on New York state’s high school chemistry exam’s multiple choice section, and ranked in the 40th percentile on the Law School Admission Test.

High school teacher Daniel Herman concluded ChatGPT already writes better than most students today. He’s torn between admiring ChatGPT’s potential usefulness and fearing its harm to human learning: «Is this moment more like the invention of the calculator, saving me from the tedium of long division, or more like the invention of the player piano, robbing us of what can be communicated only through human emotion?»

Dustin York, an associate professor of communication at Maryville University, hopes educators will learn to use ChatGPT as a tool and realize it can help students think critically.

«Educators thought that Google, Wikipedia, and the internet itself would ruin education, but they did not,» York said. «What worries me most are educators who may actively try to discourage the acknowledgment of AI like ChatGPT. It’s a tool, not a villain.»

Can teachers spot ChatGPT use?

Not with 100% certainty, but there’s technology to spot AI help. The companies that sell tools to high schools and universities to detect plagiarism are now expanding to detecting AI, too.

One, Coalition Technologies, offers an AI content detector on its website. Another, Copyleaks, released a free Chrome extension designed to spot ChatGPT-generated text with a technology that’s 99% accurate, CEO Alon Yamin said. But it’s a «never-ending cat and mouse game» to try to catch new techniques to thwart the detectors, he said.

Copyleaks performed an early test of student assignments uploaded to its system by schools. «Around 10% of student assignments submitted to our system include at least some level of AI-created content,» Yamin said.

OpenAI launched its own detector for AI-written text in February. But one plagiarism detecting company, CrossPlag, said it spotted only two of 10 AI-generated passages in its test. «While detection tools will be essential, they are not infallible,» the company said.

Researchers at Pennsylvania State University studied the plagiarism issue using OpenAI’s earlier GPT-2 language model. It’s not as sophisticated as GPT-3.5, but its training data is available for closer scrutiny. The researchers found GPT-2 plagiarized information not just word-for-word at times, but also paraphrased passages and lifted ideas without citing its sources. «The language models committed all three types of plagiarism, and that the larger the dataset and parameters used to train the model, the more often plagiarism occurred,» the university said.

Can ChatGPT write software?

Yes, but with caveats. ChatGPT can retrace steps humans have taken, and it can generate actual programming code. «This is blowing my mind,» said one programmer in February, showing on Imgur the sequence of prompts he used to write software for a car repair center. «This would’ve been an hour of work at least, and it took me less than 10 minutes.»

You just have to make sure it’s not bungling programming concepts or using software that doesn’t work. The StackOverflow ban on ChatGPT-generated software is there for a reason.

But there’s enough software on the web that ChatGPT really can work. One developer, Cobalt Robotics Chief Technology Officer Erik Schluntz, tweeted that ChatGPT provides useful enough advice that, over three days, he hadn’t opened StackOverflow once to look for advice.

Another, Gabe Ragland of AI art site Lexica, used ChatGPT to write website code built with the React tool.

ChatGPT can parse regular expressions (regex), a powerful but complex system for spotting particular patterns, for example dates in a bunch of text or the name of a server in a website address. «It’s like having a programming tutor on hand 24/7,» tweeted programmer James Blackwell about ChatGPT’s ability to explain regex.
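For readers who haven’t met regex, here is a small Python sketch of the two tasks mentioned above. The patterns are assumptions chosen for illustration: dates are taken to be in YYYY-MM-DD form, and the server name is whatever follows `http://` or `https://` up to the next slash, colon or space. Real-world dates and addresses come in many more shapes.

```python
import re

# Assumed date format for this example: four digits, two digits, two digits.
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")

# Captures the server name that follows the scheme in an http(s) address.
HOST_PATTERN = re.compile(r"https?://([^/\s:]+)")


def find_dates(text: str) -> list[str]:
    """Return every YYYY-MM-DD style date found in the text."""
    return DATE_PATTERN.findall(text)


def find_hosts(text: str) -> list[str]:
    """Return the server name from every http(s) address in the text."""
    return HOST_PATTERN.findall(text)


if __name__ == "__main__":
    sample = "Posted 2023-02-20 at https://www.example.com/news, mirrored at http://example.org:8080/x"
    print(find_dates(sample))
    print(find_hosts(sample))
```

The date pattern only checks the shape of the digits, so it would also accept an impossible date such as 2023-99-99. Tightening that is where regex quickly becomes complex.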

Here’s one impressive example of its technical chops: ChatGPT can emulate a Linux computer, delivering correct responses to command-line input.

What’s off limits?

ChatGPT is designed to weed out «inappropriate» requests, a behavior in line with OpenAI’s mission «to ensure that artificial general intelligence benefits all of humanity.»

If you ask ChatGPT itself what’s off limits, it’ll tell you: any questions «that are discriminatory, offensive, or inappropriate. This includes questions that are racist, sexist, homophobic, transphobic, or otherwise discriminatory or hateful.» Asking it to engage in illegal activities is also a no-no.

Is this better than Google search?

Asking a computer a question and getting an answer is useful, and often ChatGPT delivers the goods.

Google often supplies you with its suggested answers to questions and with links to websites that it thinks will be relevant. Often ChatGPT’s answers far surpass what Google will suggest, so it’s easy to imagine GPT-3 is a rival.

But you should think twice before trusting ChatGPT. As when using Google and other sources of information like Wikipedia, it’s best practice to verify information from original sources before relying on it.

Vetting the veracity of ChatGPT answers takes some work because it just gives you some raw text with no links or citations. But it can be useful and in some cases thought provoking. You may not see something directly like ChatGPT in Google search results, but Google has built large language models of its own and uses AI extensively already in search.

Google is keen to tout its deep AI expertise. Even so, ChatGPT triggered a «code red» emergency within Google, according to The New York Times, and drew Google co-founders Larry Page and Sergey Brin back into active work. Microsoft, meanwhile, is building a modified version of the technology into its rival search engine, Bing. Clearly ChatGPT and other tools like it have a role to play when we’re looking for information.

So ChatGPT, while imperfect, is doubtless showing the way toward our tech future.

Editors’ note: CNET is using an AI engine to create some personal finance explainers that are edited and fact-checked by our editors. For more, see this post.

How Team USA’s Olympic Skiers and Snowboarders Got an Edge From Google AI

Google engineers hit the slopes with Team USA’s skiers and snowboarders to build a custom AI training tool.

Team USA’s skiers and snowboarders are going home with some new hardware, including a few gold medals, from the 2026 Olympics. Along with the years of hard work that go into being an Olympic athlete, this year’s crew had an extra edge in their training thanks to a custom AI tool from Google Cloud.

US Ski and Snowboard, the governing body for the US national teams, oversees the training of the best skiers and snowboarders in the country to prepare them for big events, such as national championships and the Olympics. The organization partnered with Google Cloud to build an AI tool to offer more insight into how athletes are training and performing on the slopes.

Video review is a big part of winter sports training. A coach will literally stand on the sidelines recording an athlete’s run, then review the footage with them afterward to spot errors. But this process is somewhat dated, Anouk Patty, chief of sport at US Ski and Snowboard, told me. That’s where Google came in, bringing new AI-powered data insights to the training process.

Google Cloud engineers hit the slopes with the skiers and snowboarders to understand how to build an actually useful AI model for athletic training. They used video footage as the base of the currently unnamed AI tool. Gemini did a frame-by-frame analysis of the video, which was then fed into spatial intelligence models from Google DeepMind. Those models were able to take the 2D rendering of the athlete from the video and transform it into a 3D skeleton of an athlete as they contort and twist on runs.

Final touches from Gemini help the AI tool analyze the physics in the pixels, according to Ravi Rajamani, global head of Google’s AI Blackbelt team, which worked on the project. Coaches and athletes told the engineers the specific metrics they wanted to track (speed, rotation, trajectory) and the Google engineers coded the model to make it easy to monitor them and compare between different videos. There’s also a chat interface to ask Gemini questions about performance.
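Google hasn’t published how the tool computes those metrics, but once you have 3D positions for each video frame, the arithmetic behind speed and rotation can be sketched in a few lines of Python. The function names, the input layout and the choice of a shoulder-to-shoulder direction to measure spin are all assumptions made for this illustration.

```python
import math


def average_speed(positions, fps):
    """Mean speed (distance units per second) over (x, y, z) positions, one per frame."""
    if len(positions) < 2:
        return 0.0
    # Sum the straight-line distance between each pair of consecutive frames.
    distance = sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))
    duration = (len(positions) - 1) / fps
    return distance / duration


def total_rotation_degrees(headings):
    """Accumulated spin, given one (x, y) shoulder-to-shoulder direction per frame."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(headings, headings[1:]):
        # Signed angle between consecutive directions (cross and dot product).
        delta = math.atan2(x0 * y1 - y0 * x1, x0 * x1 + y0 * y1)
        total += math.degrees(delta)
    return total


if __name__ == "__main__":
    hips = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (6.0, 8.0, 0.0)]
    shoulders = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0)]
    print(average_speed(hips, fps=2))
    print(total_rotation_degrees(shoulders))
```

Adding up frame-to-frame angles, rather than comparing only the first and last frame, is what lets a sketch like this count a spin of more than half a turn.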

«From just a video, we are actually able to recreate it in 3D, so you don’t need expensive equipment, [like] sensors, that get in the way of an athlete performing,» Rajamani said.

Coaches are undeniably the experts on the mountain, but the AI can act as a kind of gut check. The data can help confirm or deny what coaches are seeing and give them extra insight into the specifics of each athlete’s performance. It can catch things that humans would struggle to see with the naked eye or in poor video quality, like where an athlete was looking while doing a trick and the exact speed and angle of a rotation.

«It’s data that they wouldn’t otherwise have,» Patty said. The 3D skeleton is especially helpful because it makes it easier to see movement obscured by the puffy jackets and pants athletes wear, she said.

For elite athletes in skiing and snowboarding, making small adjustments can mean the difference between a gold medal and no medal at all. Technological advances in training are meant to help athletes get every available tool for improvement.

«You’re always trying to find that 1% that can make the difference for an athlete to get them on the podium or to win,» Patty said. It can also democratize coaching. «It’s a way for every coach who’s out there in a club working with young athletes to have that level of understanding of what an athlete should do that the national team athletes have.»

For Google, this purpose-built AI tool is «the tip of the iceberg,» Rajamani said. There are a lot of potential future use cases, including expanding the base model to be customized to other sports. It also lays the foundation for work in sports medicine, physical therapy, robotics and ergonomics — disciplines where understanding body positioning is important. But for now, there’s satisfaction in knowing the AI was built to actually help real athletes.

«This was not a case of tech engineers building something in the lab and handing it over,» Rajamani said. «This is a real-world problem that we are solving. For us, the motivation was building a tool that provides a true competitive advantage for our athletes.»

Virtual Boy Review: Nintendo’s Oddest Switch Accessory Yet Is an Immersive ’90s Museum

No one needs a Virtual Boy. But I always wanted one. And now it’s living with me at last.

On my desk is a Nintendo device that looks like equipment stolen from a cyberpunk optical shop. It’s big, it’s red and black, it sits on a tripod, it has an eyepiece, and it has a Nintendo Switch 2 nestled inside. Hello, Virtual Boy, you’re back.

Nintendo has made a lot of weird consoles over the years, but the Virtual Boy was the weirdest. And the shortest lived. Released in 1995 and discontinued a year later, it lived for a blink of an eye during my final year in college. I never really had time to consider buying one.

It would have been perfect for me, a Game Boy fan who was in love with the idea of VR even back then. Nintendo has been flirting with virtual reality in various forms for decades, and the Virtual Boy was the biggest swing. But it wasn’t VR at all, really. It was a 3D game console in red and black monochrome, a 3D Game Boy in tripod form.

I’m setting the stage because right now you can order a $100 Virtual Boy recreation that’s a big, strange Switch accessory. It’s staring at me now, taking up a lot of space. It’s too big to fit in a bag. It’s a tabletop console, really, and Nintendo has created this Virtual Boy viewer as a way to play a set of free-with-subscription games on the Switch and Switch 2.

Is it worth your money? I’d call it a museum-piece collectible, not a serious piece of gaming hardware. Still, my kid stuck his head in, played 3D Wario Land, and came out declaring it was really cool. He loves old retro games. But I don’t know how often he’ll pop his head back in.

Nintendo’s first stab at 3D now feels like a museum piece

For comparison, I pulled my old Nintendo 3DS XL out of the drawer where it had been tucked away and booted it up, marveling again that Nintendo actually made a glasses-free 3D game handheld once upon a time. The 3DS is a far more capable and advanced game system, but consider the Virtual Boy an ancient attempt to get there first.

The Virtual Boy was a monochrome red-and-black LED display system, a tabletop-only device that was neither handheld nor TV-connected. The Nintendo Switch’s tabletop-style game modes feel like a bit of an evolutionary link to the Virtual Boy, so it’s poetic that the Switch pops into the new Virtual Boy to power the games and provide the display.

The plastic Virtual Boy is just an odd set of VR goggles for the Switch, but with a red filter on the lenses. Also, you can’t wear it. You keep your head stuck in it.

Awkward and easy to use

All the trappings on this recreation look like the old Virtual Boy but don’t work: You can see a simulated headphone jack, controller port, a sort of knob on top. I just unsnap the plastic case and slide the Switch in, carefully, and then snap it back over. That’s all it is.

To control it, you use the Switch controllers detached or another Switch-compatible controller. Launching the Virtual Boy app — free on the eShop, but you need a Switch Online Plus Expansion Pack account, which costs $50 a year, or $80 for a family membership — splits the Switch display into two smaller, distorted screens. In the Virtual Boy, it looks properly 3D. When I’m done playing, I pop the Switch back out.

As I said in my first hands-on, the big foam-covered eyepiece is more than wide enough for big glasses, and was fine to dip my face into. Getting a comfortable angle to stay playing for a while is another challenge. The Virtual Boy’s included tripod-like stand can adjust the angle, but not as wide as I’d like. I’m sort of hunched over while playing, which gives me a bit of pain. Leaning on the table with my controllers in hand helps.

The red-lensed front eyepiece can be removed, and a later software update will allow Virtual Boy games to be played in several color mixes beyond red and black. Also, you can unscrew the inner bracket that holds the Switch 2 and swap in an included Switch-sized bracket instead. The Switch Lite doesn’t work with the Virtual Boy, however.

The weirdness is my type of indie

All you get right now are seven of the 16 games Nintendo has promised to release for the Virtual Boy. Believe it or not, there were only 22 games ever released for this system. The 16 will include two that were never released before, which is a fun collector’s novelty.

But what’s amazing to me now is that, sinking into these oddball retro games with their pixelated NES-slash-Game Boy aesthetics in red and black, they feel weirdly timely. The janky, oddball, almost-parallel-universe Nintendo vibe feels like the indie retro aesthetic that’s been big for a while now. After all these years, is the Virtual Boy now finally awesome?

Games like UFO 50 (a compilation of new indie games made to feel like an archive of ’80s games for a console that never was) and indie consoles like Panic Playdate (still my favorite black and white mini handheld, a home for all sorts of homebrew retro games) match my feeling diving into these Virtual Boy games and figuring them out.

Wario Land is probably the best: A side-scrolling Wario game with multiple depth levels, it gives me Game Boy Mario game vibes. Golf has multiple holes and an aiming system, and it’s relaxed and basic (and hard to perfect). 3D Tetris has you dropping blocks down a well to fill in layers, with a Tron-like puzzle feel. Red Alert’s wireframe 3D shooter design is like Star Fox, but boiled all the way down to simple vector lines. Galactic Pinball has several tables, and it’s some lovely, very old-school 3D Nintendo pinball fun. Teleroboxer is Punch-Out with robots, with a style that also reminds me of the early Switch game Arms. And The Mansion of Innsmouth is a creepy 3D dungeon-crawling game (in Japanese) where you try to get to exits before time runs out… or monsters get you.

The remaining games coming this year include Mario Tennis, another Tetris game, a wireframe 3D racer, a 3D reinvention of the original Mario Bros. game called Mario Clash and a 3D Space Invaders. By the end of Nintendo’s release schedule, a good chunk of Virtual Boy’s catalog will be there.

A novelty that’s niche as hell

Worth it? Again, if you love weird and retro, and are intrigued by lost Nintendo 3D games, then yes. But if you’re looking for cutting-edge, then no.

Keep in mind: You can buy a cheaper $25 cardboard set of goggles for the Switch that lets you play the Virtual Boy games, too (or use the old Labo VR goggles Nintendo made in 2019, if you have them). That’s a more sensible path. There are even unofficial emulators for Virtual Boy games on the Meta Quest and Apple Vision Pro. But who said the Virtual Boy was sensible?

A Nintendo game system that’s a big set of red goggles on a tripod is inherently absurd. And I welcome its weird footprint in my home, because that’s exactly who I am. But it’s also a testament to Nintendo’s perpetual interest in the bleeding edge of gaming. VR, glasses-free 3D, AR, modular consoles… Nintendo’s poking around the edges.

Is the Virtual Boy a sign that Nintendo could make its own VR or AR game system again someday soon, or as an extension of the Switch 2? Who knows? Shigeru Miyamoto, Nintendo’s legendary video game designer, sounded intrigued and elusive about it when I asked him last year. But there’s never any real way to guess where Nintendo’s heading. The Virtual Boy is a museum-piece reminder of that.

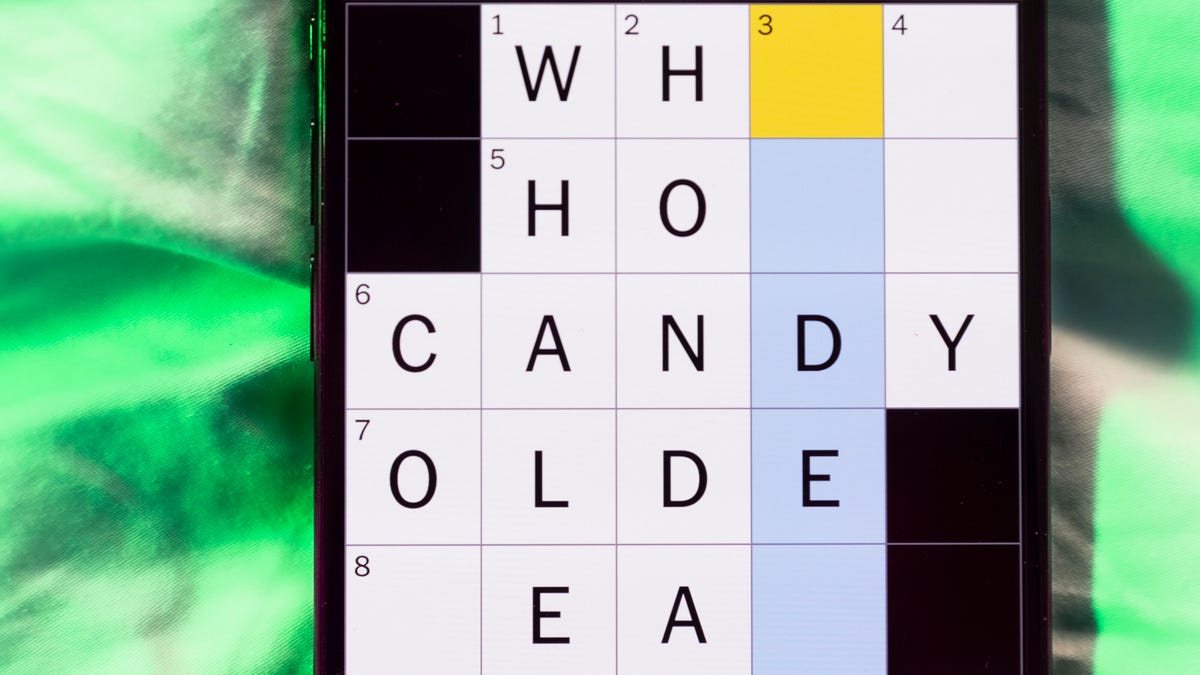

Today’s NYT Mini Crossword Answers for Friday, Feb. 20

Here are the answers for The New York Times Mini Crossword for Feb. 20.

Looking for the most recent Mini Crossword answer? Click here for today’s Mini Crossword hints, as well as our daily answers and hints for The New York Times Wordle, Strands, Connections and Connections: Sports Edition puzzles.

Today’s Mini Crossword expects you to know a little bit about everything — from old political parties to architecture to video games. Read on for all the answers. And if you could use some hints and guidance for daily solving, check out our Mini Crossword tips.

Let’s get to those Mini Crossword clues and answers.

Mini across clues and answers

1A clue: Political party that competed with Democrats during the 1830s-’50s

Answer: WHIGS

6A clue: Four Seasons, e.g.

Answer: HOTEL

7A clue: Dinosaur in the Mario games

Answer: YOSHI

8A clue: Blizzard or hurricane

Answer: STORM

9A clue: We all look up to it

Answer: SKY

Mini down clues and answers

1D clue: «Oh yeah, ___ that?»

Answer: WHYS

2D clue: Says «who»?

Answer: HOOTS

3D clue: «No worries»

Answer: ITSOK

4D clue: Postmodern architect Frank

Answer: GEHRY

5D clue: Narrow

Answer: SLIM
