Technologies

Scientists Develop ‘Cellular Glue’ That Could Heal Wounds, Regrow Nerves

One day, these special synthetic molecules could also help mitigate the organ shortage crisis.

Researchers from the University of California, San Francisco announced a fascinating innovation on Monday. They call it “cellular glue” and say it could one day open doors to massive medical achievements, like building organs in a lab for transplantation and reconstructing nerves that’ve been damaged beyond the reach of standard surgical repair.

Basically, the team engineered a set of synthetic molecules that can be manipulated to coax cells within the human body to bond with one another. Together, these molecules constitute the so-called “cellular glue” and act like adhesive molecules naturally found in and around cells that involuntarily dictate the way our tissues, nerves and organs are structured and anchored together.

Only in this case scientists can voluntarily control them.

“The properties of a tissue, like your skin for example, are determined in large part by how the different cells are organized within it,” Adam Stevens, a researcher at UCSF’s Cell Design Institute and first author of a paper in the journal Nature, said in a statement. “We’re devising ways to control this organization of cells, which is central to being able to synthesize tissues with the properties we want them to have.”

Doctors could eventually use the sticky material as a viable mechanism to mend patients’ wounds, regrow nerves otherwise deemed destroyed and potentially even work toward regenerating diseased lungs, livers and other vital organs.

That last bit could help alleviate the shortage of donor organs. According to the Health Resources and Services Administration, 17 people in the US die each day while on the waitlist for an organ transplant, and every 10 minutes another person is added to that list.

“Our work reveals a flexible molecular adhesion code that determines which cells will interact, and in what way,” Stevens said. “Now that we are starting to understand it, we can harness this code to direct how cells assemble into tissues and organs.”

Ikea cells

Right after babies are born (and even when they’re still in the womb), their cells find it easy to reconnect with one another when a bond is lost. This is primarily because kids are still growing, so their cells are still actively coming together. It’s also why their scratches and scrapes tend to heal quite quickly.

In other words, think of children’s cell molecules as having lots of clear-cut instructions on how to put themselves together to make tissues, organs and nerves. They’re like sentient little pieces of Ikea furniture with the store’s building booklet in hand.

As people get older, however, those biological Ikea instructions get put in the attic, the team explains. That’s because, for the most part, the body is pretty solidified — and this is sometimes a problem. For instance, when someone’s liver gets really damaged, their liver cell molecules may need to refer back to those Ikea instructions but can’t find them.

But that’s where “cellular glue” molecules come in. These rescuers can essentially be primed with those Ikea instructions before being sent into the body, so their blueprint is fresh. Scientists can load them up with information on which cell molecules to bond with and even how strongly to bond with them.

Then, these glue molecules can guide relevant cells toward one another, helping along the healing and regeneration processes.

“In a solid organ, like a lung or a liver, many of the cells will be bonded quite tightly,” explains a UCSF description of the new invention. “But in the immune system, weaker bonds enable the cells to flow through blood vessels or crawl between the tightly bound cells of skin or organ tissues to reach a pathogen or a wound.”

To make this kind of customization possible, the researchers added two important components to their cellular glue. First, part of the molecule acts as a receptor. It remains on the outside of the cell and determines which other cells the molecule is allowed to interact with. Second, there’s the bond-strength tuner. This section sits inside the cell. Mix and match those two traits and, the team says, you can create an array of cell adhesion molecules prepped to bond in various ways.
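To picture the mix-and-match idea, here is a minimal Python sketch, not the researchers’ actual method. It treats each glue molecule as a pairing of one outside-the-cell receptor with one inside-the-cell strength tuner. The receptor and tuner labels are hypothetical placeholders; the article does not name specific components.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class AdhesionMolecule:
    receptor: str  # outside the cell: decides which cells it may bond with
    tuner: str     # inside the cell: decides how strong the bond is


# Hypothetical placeholder labels, not names taken from the paper.
RECEPTORS = ["receptor_A", "receptor_B", "receptor_C"]
TUNERS = ["weak", "medium", "strong"]


def build_library(receptors, tuners):
    """Pair every receptor with every tuner to get the full design space."""
    return [AdhesionMolecule(r, t) for r, t in product(receptors, tuners)]


library = build_library(RECEPTORS, TUNERS)
print(len(library))  # 3 receptors x 3 tuners = 9 candidate molecules
```

The point of the sketch is only the combinatorics: because the two parts are independent, every new receptor or tuner multiplies the number of possible molecules instead of adding just one.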

“We were able to engineer cells in a manner that allows us to control which cells they interact with, and also to control the nature of that interaction,” Wendell Lim, director of UCSF’s Cell Design Institute and senior author of the paper, said in a statement.

In fact, the team says the range of potential molecules is wide enough that they could inform the academic stage of medical studies, too. Researchers could make mock tissues, for example, to deepen understanding of the human body as a whole.

Or as Stevens put it, “These tools could be really transformative.”

Technologies

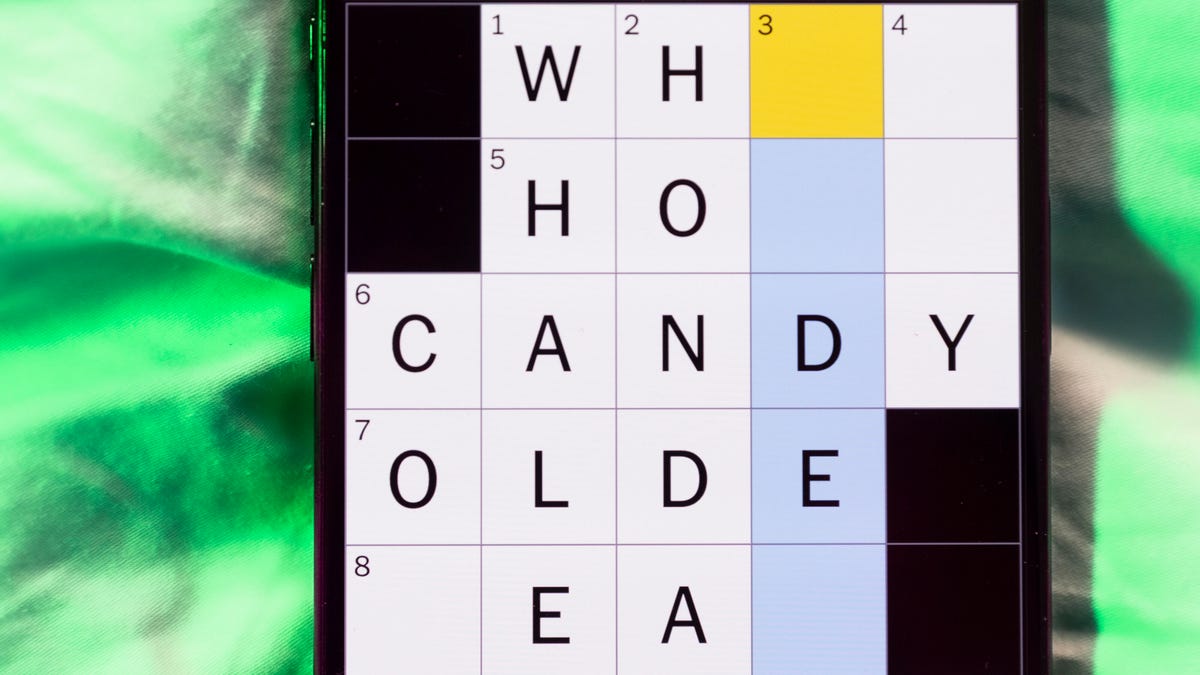

Today’s NYT Mini Crossword Answers for Saturday, Feb. 28

Here are the answers for The New York Times Mini Crossword for Feb. 28.

Looking for the most recent Mini Crossword answer? Click here for today’s Mini Crossword hints, as well as our daily answers and hints for The New York Times Wordle, Strands, Connections and Connections: Sports Edition puzzles.

Need some help with today’s Mini Crossword? As is usual for Saturday, it’s pretty long, and should take you longer than the normal Mini. A bunch of three-initial terms are used in this one. Read on for all the answers. And if you could use some hints and guidance for daily solving, check out our Mini Crossword tips.

If you’re looking for today’s Wordle, Connections, Connections: Sports Edition and Strands answers, you can visit CNET’s NYT puzzle hints page.

Read more: Tips and Tricks for Solving The New York Times Mini Crossword

Let’s get to those Mini Crossword clues and answers.

Mini across clues and answers

1A clue: Rock’s ___ Leppard

Answer: DEF

4A clue: Cry a river

Answer: SOB

7A clue: Clean Air Act org.

Answer: EPA

8A clue: Org. that pays the Bills?

Answer: NFL

9A clue: Nintendo console with motion sensors

Answer: WII

10A clue: ___-quoted (frequently said)

Answer: OFT

11A clue: With 13-Across, narrow gap between the underside of a house and the ground

Answer: CRAWL

13A clue: See 11-Across

Answer: SPACE

14A clue: Young lady

Answer: GAL

15A clue: Ooh and ___

Answer: AAH

17A clue: Sports org. for Scottie Scheffler

Answer: PGA

18A clue: “Hey, just an F.Y.I. …,” informally

Answer: PSA

19A clue: When doubled, nickname for singer Swift

Answer: TAY

20A clue: Socially timid

Answer: SHY

Mini down clues and answers

1D clue: Morning moisture

Answer: DEW

2D clue: “Game of Thrones” or Homer’s “Odyssey”

Answer: EPICSAGA

3D clue: Good sportsmanship

Answer: FAIRPLAY

4D clue: White mountain toppers

Answer: SNOWCAPS

5D clue: Unrestrained, as a dog at a park

Answer: OFFLEASH

6D clue: Sandwich that might be served “triple-decker”

Answer: BLT

12D clue: Common battery type

Answer: AA

14D clue: Chat___

Answer: GPT

16D clue: It’s for horses, in a classic joke punchline

Answer: HAY

Technologies

Ultrahuman Ring Pro Brings Better Battery Life, More Action and Analysis

The company’s new flagship smart ring stores more data, too. But that doesn’t really help Americans.

Sick of your smart ring’s battery not holding up? Ultrahuman’s new $479 Ring Pro smart ring, unveiled on Friday, offers up to 15 days of battery life on a single charge. The Ring Pro joins the company’s $349 Ring Air and boosts health tracking with longer battery life, increased data storage, improved speed and accuracy, and a new heart-rate sensing architecture. The ring works in conjunction with the latest Pro charging case.

Ultrahuman also launched its Jade AI, which can act as an agent based on analysis of current and historical health data. Jade can synthesize data from across the company’s products and is compatible with its Rings.

“With industry-leading hardware paired with Jade biointelligence AI, users can now take real-time actionable interventions towards their health than ever before,” said Mohit Kumar, CEO of Ultrahuman.

No US sales

That hardware isn’t available in the US, though, thanks to the ongoing ban on sales of Ultrahuman’s rings here, stemming from a patent dispute with its competitor, Oura Ring. It’s available for preorder now everywhere else and is slated to ship in March. Jade is available globally.

Ultrahuman says the Ring Pro boosts battery life to about 15 days in Chill mode — up to 12 days in Turbo — compared to a maximum of six days for the Air. The Pro charger’s battery stores enough for another 45 days, which you top off with Qi-compatible wireless charging. In addition, the case incorporates locator technology via the app and a speaker, as well as usability features such as haptic notifications and a power LED.
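For a rough sense of what those battery figures add up to, here is a small Python sketch of the arithmetic. It assumes the case’s 45-day reserve is measured in Chill-mode days and ignores charging losses; neither detail is stated in the article.

```python
# Figures quoted in the article.
RING_DAYS_CHILL = 15    # Ring Pro, Chill mode, per charge
CASE_RESERVE_DAYS = 45  # extra days stored in the Pro charging case
AIR_MAX_DAYS = 6        # Ring Air maximum per charge

# Assumption: the case reserve is counted in Chill-mode days.
total_days = RING_DAYS_CHILL + CASE_RESERVE_DAYS
full_refills = CASE_RESERVE_DAYS / RING_DAYS_CHILL
gain_over_air = RING_DAYS_CHILL / AIR_MAX_DAYS

print(total_days)     # 60 days between top-ups of the case
print(full_refills)   # 3.0 full ring recharges from the case
print(gain_over_air)  # 2.5 times the Air's maximum per charge
```

Under those assumptions, a fully charged ring and case together would last about two months before the case itself needs a Qi top-up.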

The ring can also retain up to 250 days of data versus less than a week for the cheaper model. Ultrahuman redesigned the heart-rate sensor for better signal quality. An upgraded processor improves the accuracy of the local machine learning and overall speed.

It’s offered in gold, silver, black and titanium finishes, with available sizes ranging from 5 to 14.

Jade’s Deep Research Mode is the cross-ecosystem analysis feature, which aggregates data from Ring and Blood Vision and the company’s subscription services, Home and M1 CGM, to provide historical trends, offer current recommendations and flag potential issues, as well as trigger activities such as A-fib detection. Ultrahuman plans to expand its capabilities to include health-adjacent activities, such as ordering food.

Some new apps are also available for the company’s PowerPlug add-on platform, including capabilities such as tracking GLP-1 effects, snoring and respiratory analysis and migraine management tools.
