Technologies

Apple, iPhones, photos and child safety: What’s happening and should you be concerned?

The tech giant has built new systems to fight child exploitation and abuse, but security advocates worry they could erode our privacy. Here’s why.

Apple has long presented itself as a bastion of security, and as one of the only tech companies that truly cares about user privacy. But a new technology designed to help an iPhone, iPad or Mac computer detect child exploitation images and videos stored on those devices has ignited a fierce debate about the truth behind Apple’s promises.

On Aug. 5, Apple announced a new feature, being built into the upcoming iOS 15, iPadOS 15, watchOS 8 and macOS Monterey software updates, that is designed to detect whether someone has child exploitation images or videos stored on their device. It’ll do this by converting images into unique bits of code, known as hashes, based on what they depict. The hashes are then checked against a database of known child exploitation content that’s managed by the National Center for Missing and Exploited Children. If a certain number of matches are found, Apple is alerted and may investigate further.
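To make the hash-and-threshold idea concrete, here is a toy sketch in Python. It is not Apple’s system: Apple’s published technical summary describes a neural-network-based perceptual hash called NeuralHash, plus cryptographic techniques (private set intersection and threshold secret sharing) that keep match results hidden until the threshold is crossed, and none of that is modeled here. The `average_hash` function is a simple, well-known stand-in perceptual hash, and the names `count_matches` and `should_flag`, as well as the `threshold` value, are hypothetical and chosen only for illustration.

```python
from typing import List, Set

# A toy grayscale "image": rows of pixel brightness values from 0 to 255.
Image = List[List[int]]


def average_hash(img: Image) -> int:
    """Toy perceptual hash: one bit per pixel, set if the pixel is brighter than the mean.

    A stand-in for a real perceptual hash: images with the same light/dark
    pattern get the same (or nearly the same) bits.
    """
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


def count_matches(library: List[Image], known: Set[int], max_distance: int = 0) -> int:
    """Count library images whose hash is within max_distance bits of a known hash."""
    matches = 0
    for img in library:
        h = average_hash(img)
        if any(hamming(h, k) <= max_distance for k in known):
            matches += 1
    return matches


def should_flag(library: List[Image], known: Set[int], threshold: int,
                max_distance: int = 0) -> bool:
    """Raise an alert only once the match count reaches the (hypothetical) threshold."""
    return count_matches(library, known, max_distance) >= threshold


if __name__ == "__main__":
    original = [[0, 255], [255, 0]]
    edited_copy = [[10, 250], [245, 5]]  # same light/dark pattern, slightly altered pixels
    unrelated = [[255, 0], [0, 255]]     # inverted pattern

    known = {average_hash(original)}
    library = [edited_copy, unrelated]
    print(count_matches(library, known))                          # 1
    print(should_flag(library, known, threshold=2))               # False
    print(should_flag(library + [original], known, threshold=2))  # True
```

The point of the sketch is that only hash values are compared, and a single match is not enough on its own: nothing is flagged until the count reaches the threshold.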

Apple said it developed this system to protect people’s privacy, performing scans on the phone and only raising alarms if a certain number of matches are found. But privacy experts, who agree that fighting child exploitation is a good thing, worry that Apple’s moves open the door to wider uses that could, for example, put political dissidents and other innocent people in harm’s way.

“Even if you believe Apple won’t allow these tools to be misused there’s still a lot to be concerned about,” tweeted Matthew Green, a professor at Johns Hopkins University who’s worked on cryptographic technologies.

Apple’s new feature, and the concern that’s sprung up around it, reflect an important debate about the company’s commitment to privacy. Apple has long promised that its devices and software are designed to protect their users’ privacy. The company even dramatized that promise with an ad it hung just outside the convention hall of the 2019 Consumer Electronics Show, which read “What happens on your iPhone stays on your iPhone.”

“We at Apple believe privacy is a fundamental human right,” Apple CEO Tim Cook has often said.

Apple’s scanning technology is part of a trio of new features the company is planning for this fall. Apple is also enabling its Siri voice assistant to offer links and resources to people it believes may be in a serious situation, such as a child in danger. Advocates had been asking for that type of feature for a while.

Apple is also adding a feature to its Messages app to proactively protect children from explicit content, whether it’s in a green-bubble SMS conversation or a blue-bubble iMessage encrypted chat. This new capability is specifically designed for devices registered under a child’s iCloud account and will warn if it detects an explicit image being sent or received. As with Siri, the app will also offer links and resources if needed.

There’s a lot of nuance involved here, which is part of why Apple took the unusual step of releasing research papers, frequently asked questions and other information ahead of the planned launch.

Here’s everything you should know:

Why is Apple doing this now?

The tech giant said it’s been trying to find a way to help stop child exploitation for a while. The National Center for Missing and Exploited Children received more than 65 million reports of material last year. Apple said that’s way up from the 401 reports 20 years ago.

“We also know that the 65 million files that were reported is only a small fraction of what is in circulation,” said Julie Cordua, head of Thorn, a nonprofit fighting child exploitation that supports Apple’s efforts. She added that US law requires tech companies to report exploitative material if they find it, but it does not compel them to search for it.

Other companies do actively search for such photos and videos. Facebook, Microsoft, Twitter, and Google (and its YouTube subsidiary) all use various technologies to scan their systems for any potentially illegal uploads.

What makes Apple’s system unique is that it’s designed to scan our devices, rather than the information stored on the company’s servers.

The hash scanning system will only be applied to photos stored in iCloud Photo Library, which is a photo syncing system built into Apple devices. It won’t hash images and videos stored in the Photos app of a phone, tablet or computer that isn’t using iCloud Photo Library. So, in a way, people can opt out if they choose not to use Apple’s iCloud photo syncing services.

Could this system be abused?

The question at hand isn’t whether Apple should do what it can to fight child exploitation. It’s whether Apple should use this method.

The slippery-slope concern privacy experts have raised is that Apple’s tools could be twisted into surveillance technology against dissidents. Imagine if the Chinese government were able to somehow secretly add data corresponding to the famously suppressed Tank Man photo from the 1989 pro-democracy protests in Tiananmen Square to Apple’s child exploitation content database.

Apple said it designed features to keep that from happening. The system doesn’t scan photos, for example: it checks for matches between hash codes. The hash database is also stored on the phone rather than in a database sitting on the internet. Apple also noted that because the scans happen on the device, security researchers can more easily audit how the system works.

Is Apple rummaging through my photos?

We’ve all seen some version of it: the baby-in-the-bathtub photo. My parents had some of me, I have some of my kids, and it was even a running gag in the 2017 DreamWorks animated comedy The Boss Baby.

Apple says those images shouldn’t trip up its system. Because Apple’s system converts our photos to these hash codes and then checks them against a database of known child exploitation videos and photos, the company isn’t actually scanning our stuff. The company said the likelihood of its system incorrectly flagging a given account is less than one in 1 trillion per year.

“In addition, any time an account is flagged by the system, Apple conducts human review before making a report to the National Center for Missing and Exploited Children,” Apple wrote on its site. “As a result, system errors or attacks will not result in innocent people being reported to NCMEC.”
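Why does requiring several matches push the error rate down so sharply? A rough back-of-the-envelope sketch, assuming (hypothetically) that each photo independently has a tiny false-match rate `p`, computes the chance that an innocent library of `n` photos produces at least `t` false matches. The independence assumption and all numbers here are illustrative only; they are not Apple’s parameters, and this does not reproduce Apple’s published estimate.

```python
from math import exp, lgamma, log, log1p


def binom_pmf(n: int, k: int, p: float) -> float:
    """P(exactly k false matches among n photos), computed in log space to avoid overflow."""
    log_pmf = (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
               + k * log(p) + (n - k) * log1p(-p))
    return exp(log_pmf)


def prob_at_least(n: int, p: float, t: int) -> float:
    """P(at least t false matches), assuming independent photos with false-match rate p (0 < p < 1)."""
    return sum(binom_pmf(n, k, p) for k in range(t, n + 1))


if __name__ == "__main__":
    n, p = 10_000, 1e-6  # hypothetical library size and per-photo false-match rate
    for t in (1, 2, 5, 10):
        print(f"threshold {t:>2}: {prob_at_least(n, p, t):.3e}")
```

Under these assumptions, each additional required match shrinks the probability by a factor of roughly `n * p / (t + 1)`, which is why a threshold cuts account-level false alarms far faster than it cuts any single photo’s error rate.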

Is Apple reading my texts?

Apple isn’t applying its hashing technology to our text messages; that is effectively a separate system. Instead, with text messages, Apple only alerts a user who’s marked as a child in their iCloud account when they’re about to send or receive an explicit image. The child can still view the image, and if they do, a parent will be alerted.

“The feature is designed so that Apple does not get access to the messages,” Apple said.

What does Apple say?

Apple maintains that its system is built with privacy in mind, with safeguards to keep Apple from knowing the contents of our photo libraries and to minimize the risk of misuse.

“At Apple, our goal is to create technology that empowers people and enriches their lives, while helping them stay safe,” Apple said in a statement. “We want to protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material.”

Technologies

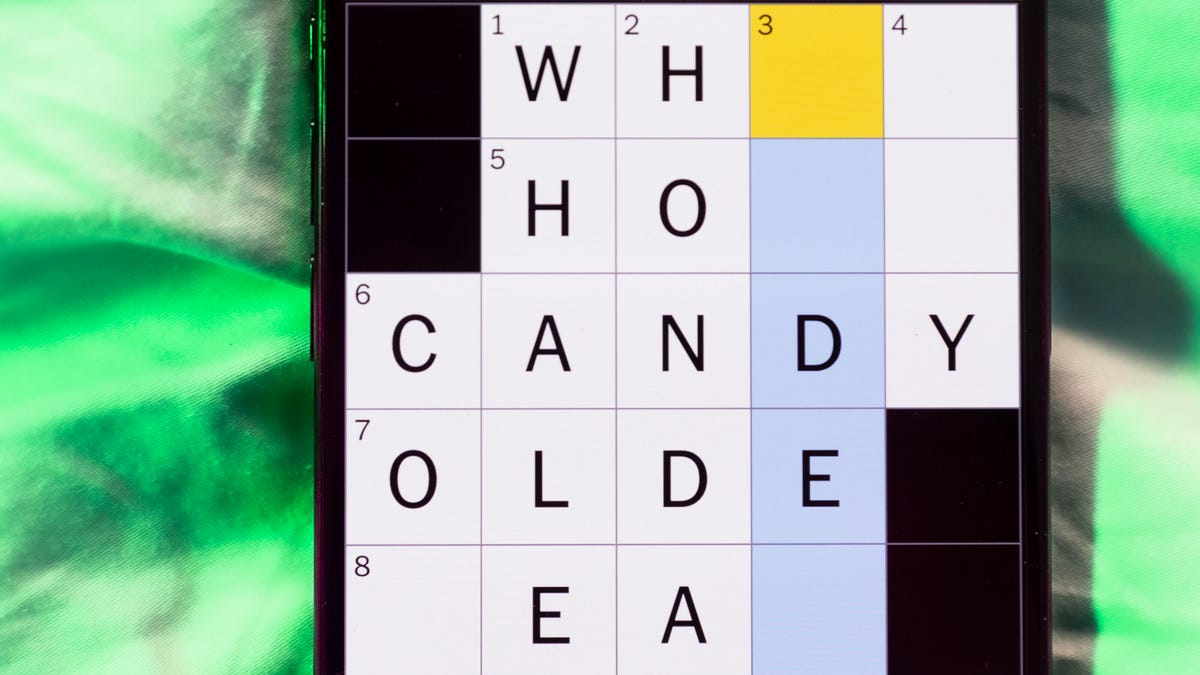

Today’s NYT Mini Crossword Answers for Sunday, Jan. 25

Here are the answers for The New York Times Mini Crossword for Jan. 25.

Looking for the most recent Mini Crossword answer? Click here for today’s Mini Crossword hints, as well as our daily answers and hints for The New York Times Wordle, Strands, Connections and Connections: Sports Edition puzzles.

Need some help with today’s Mini Crossword? It might help to be a Scrabble player. Read on for all the answers. And if you could use some hints and guidance for daily solving, check out our Mini Crossword tips.

If you’re looking for today’s Wordle, Connections, Connections: Sports Edition and Strands answers, you can visit CNET’s NYT puzzle hints page.

Read more: Tips and Tricks for Solving The New York Times Mini Crossword

Let’s get to those Mini Crossword clues and answers.

Mini across clues and answers

1A clue: Some breakfast drinks, for short

Answer: OJS

4A clue: Ready for business

Answer: OPEN

5A clue: Information gathered by a spy

Answer: INTEL

6A clue: Highest-scoring Scrabble word with four tiles (22)

Answer: QUIZ

7A clue: Nine-digit ID

Answer: SSN

Mini down clues and answers

1D clue: Agree to receive promotional emails, say

Answer: OPTIN

2D clue: Second-highest-scoring Scrabble word with four tiles (20)

Answer: JEEZ

3D clue: Sketch comedy show since ’75

Answer: SNL

4D clue: Burden

Answer: ONUS

5D clue: Geniuses have high ones

Answer: IQS

Don’t miss any of our unbiased tech content and lab-based reviews. Add CNET as a preferred Google source.


Today’s NYT Connections: Sports Edition Hints and Answers for Jan. 25, #489

Here are hints and the answers for the NYT Connections: Sports Edition puzzle for Jan. 25, No. 489.

Looking for the most recent regular Connections answers? Click here for today’s Connections hints, as well as our daily answers and hints for The New York Times Mini Crossword, Wordle and Strands puzzles.

Today’s Connections: Sports Edition grid started with a funny message: SUPER BOWL ORR BUST. If you’re struggling with today’s puzzle but still want to solve it, read on for hints and the answers.

Connections: Sports Edition is published by The Athletic, the subscription-based sports journalism site owned by The Times. It doesn’t appear in the NYT Games app, but it does in The Athletic’s own app. Or you can play it for free online.

Read more: NYT Connections: Sports Edition Puzzle Comes Out of Beta

Hints for today’s Connections: Sports Edition groups

Here are four hints for the groupings in today’s Connections: Sports Edition puzzle, ranked from the easiest yellow group to the tough (and sometimes bizarre) purple group.

Yellow group hint: Bummer!

Green group hint: Add three letters.

Blue group hint: Noted hockey players.

Purple group hint: Not the moon, but …

Answers for today’s Connections: Sports Edition groups

Yellow group: Disappointment.

Green group: Sports, with “-ing.”

Blue group: Hall of Fame NHL defensemen.

Purple group: ____ star(s)

Read more: Wordle Cheat Sheet: Here Are the Most Popular Letters Used in English Words

What are today’s Connections: Sports Edition answers?

The yellow words in today’s Connections

The theme is disappointment. The four answers are bust, dud, failure and flop.

The green words in today’s Connections

The theme is sports, with “-ing.” The four answers are bowl, box, curl and surf.

The blue words in today’s Connections

The theme is Hall of Fame NHL defensemen. The four answers are Bourque, Coffey, Leetch and Orr.

The purple words in today’s Connections

The theme is ____ star(s). The four answers are all, Chicago, Dallas and super.


Today’s NYT Connections Hints, Answers and Help for Jan. 25 #959

Here are some hints and the answers for the NYT Connections puzzle for Jan. 25, No. 959.

Looking for the most recent Connections answers? Click here for today’s Connections hints, as well as our daily answers and hints for The New York Times Mini Crossword, Wordle, Connections: Sports Edition and Strands puzzles.

Really, New York Times? The paper noted for being rather sedate actually put the words SUB and DOM next to each other in today’s NYT Connections puzzle. Of course, they didn’t mean what they could have meant, and they did not end up in the same category, but still. Read on for clues and today’s Connections answers.

The Times has a Connections Bot, like the one for Wordle. Go there after you play to receive a numeric score and to have the program analyze your answers. Players who are registered with the Times Games section can now nerd out by following their progress, including the number of puzzles completed, win rate, number of times they nabbed a perfect score and their win streak.

Read more: Hints, Tips and Strategies to Help You Win at NYT Connections Every Time

Hints for today’s Connections groups

Here are four hints for the groupings in today’s Connections puzzle, ranked from the easiest yellow group to the tough (and sometimes bizarre) purple group.

Yellow group hint: Like an understudy.

Green group hint: Delete is another one.

Blue group hint: Like penne.

Purple group hint: At the end of words.

Answers for today’s Connections groups

Yellow group: Act as a backup.

Green group: PC keyboard keys.

Blue group: Pasta shapes.

Purple group: Suffixes.


What are today’s Connections answers?

The yellow words in today’s Connections

The theme is act as a backup. The four answers are cover, fill in, sub and temp.

The green words in today’s Connections

The theme is PC keyboard keys. The four answers are alt, enter, menu and windows.

The blue words in today’s Connections

The theme is pasta shapes. The four answers are bowtie, ribbon, shell and tube.

The purple words in today’s Connections

The theme is suffixes. The four answers are ate, dom, hood and ship.
