Technologies

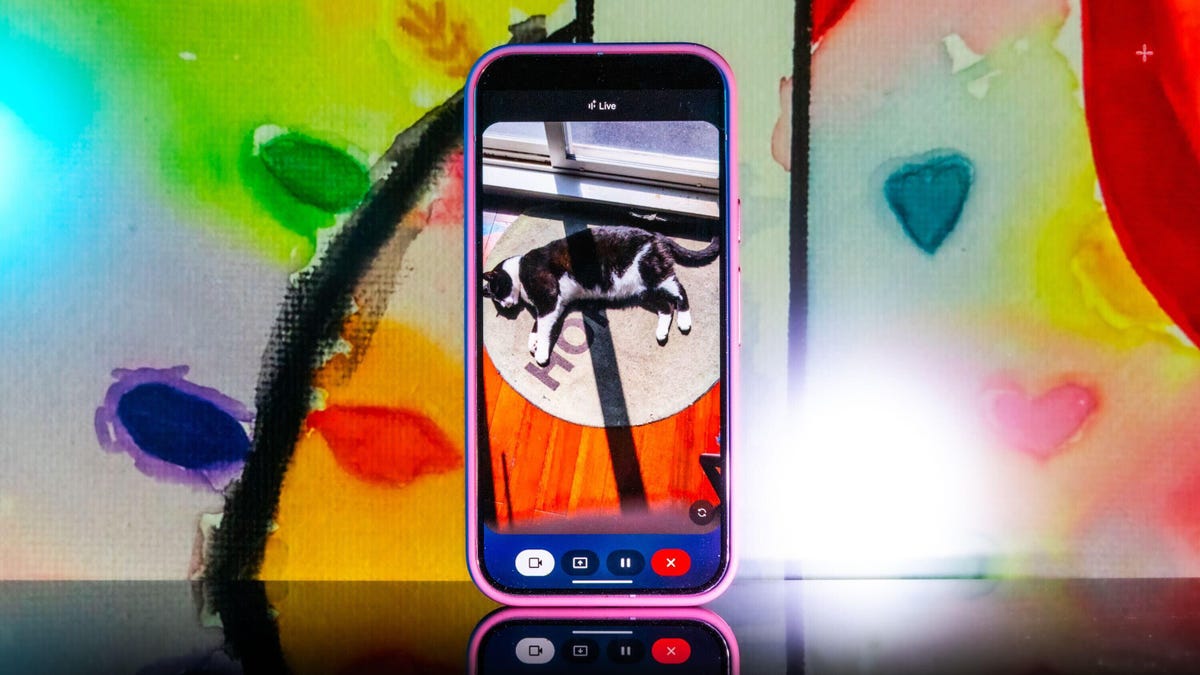

Gemini Live Gives You AI With Eyes, and It’s Awesome

When it works, Gemini Live’s new camera mode feels like the future in all the right ways. I put it to the test.

Google has been rolling out the new Gemini Live camera mode for free to all Android phones that use the Gemini app, following a two-week exclusive for Pixel 9 (including the new Pixel 9A) and Galaxy S25 smartphones. In simpler terms, Google has given Gemini the ability to see: it can recognize objects that you put in front of your camera.

It’s not just a party trick, either. Not only can it identify objects, but you can also ask questions about them — and it works pretty well for the most part. In addition, you can share your screen with Gemini so it can identify things you surface on your phone’s display. When you start a live session with Gemini, you now have the option to enable a live camera view, where you can talk to the chatbot and ask it about anything the camera sees. I was most impressed when I asked Gemini where I misplaced my scissors during one of my initial tests.

“I just spotted your scissors on the table, right next to the green package of pistachios. Do you see them?”

Gemini Live’s chatty new camera feature was right. My scissors were exactly where it said they were, and all I did was pass my camera in front of them at some point during a 15-minute live session of me giving the AI chatbot a tour of my apartment.

When the new camera feature popped up on my phone, I didn’t hesitate to try it out. In one of my longer tests, I turned it on and started walking through my apartment, asking Gemini what it saw. It identified some fruit, ChapStick and a few other everyday items with no problem. I was wowed when it found my scissors.

That’s because I hadn’t mentioned the scissors at all. Gemini had silently identified them somewhere along the way and then recalled the location with precision. It felt so much like the future, I had to do further testing.

My experiment with Gemini Live’s camera feature was following the lead of the demo that Google did last summer when it first showed off these live video AI capabilities. Gemini reminded the person giving the demo where they’d left their glasses, and it seemed too good to be true. But as I discovered, it was very true indeed.

Gemini Live will recognize a whole lot more than household odds and ends. Google says it’ll help you navigate a crowded train station or figure out the filling of a pastry. It can give you deeper information about artwork, like where an object originated and whether it was a limited edition piece.

It’s more than just a souped-up Google Lens. You talk with it, and it talks to you. I didn’t need to speak to Gemini in any particular way — it was as casual as any conversation. Way better than talking with the old Google Assistant that the company is quickly phasing out.

Google also released a new YouTube video for the April 2025 Pixel Drop showcasing the feature, and there’s now a dedicated page on the Google Store for it.

To get started, you can go live with Gemini, enable the camera and start talking. That’s it.

Gemini Live follows on from Google’s Project Astra, first revealed last year as possibly the company’s biggest “we’re in the future” feature, an experimental next step for generative AI capabilities that goes beyond simply typing or even speaking prompts into a chatbot like ChatGPT, Claude or Gemini. It comes as AI companies continue to dramatically increase the skills of AI tools, from video generation to raw processing power. Similar to Gemini Live, there’s Apple’s Visual Intelligence, which the iPhone maker released in a beta form late last year.

My big takeaway is that a feature like Gemini Live has the potential to change how we interact with the world around us, melding our digital and physical worlds together just by holding a camera in front of almost anything.

I put Gemini Live to a real test

The first time I tried it, Gemini was shockingly accurate when I placed a very specific gaming collectible of a stuffed rabbit in my camera’s view. The second time, I showed it to a friend in an art gallery. It identified the tortoise on a cross (don’t ask me) and immediately identified and translated the kanji right next to the tortoise, giving both of us chills and leaving us more than a little creeped out. In a good way, I think.

I got to thinking about how I could stress-test the feature. I tried to screen-record it in action, but it consistently fell apart at that task. And what if I went off the beaten path with it? I’m a huge fan of the horror genre — movies, TV shows, video games — and have countless collectibles, trinkets and what have you. How well would it do with more obscure stuff — like my horror-themed collectibles?

First, let me say that Gemini can be both absolutely incredible and ridiculously frustrating in the same round of questions. I had roughly 11 objects that I was asking Gemini to identify, and it would sometimes get worse the longer the live session ran, so I had to limit sessions to only one or two objects. My guess is that Gemini attempted to use contextual information from previously identified objects to guess new objects put in front of it, which sort of makes sense, but ultimately, neither I nor it benefited from this.

Sometimes, Gemini was just on point, easily landing the correct answers with no fuss or confusion, but this tended to happen with more recent or popular objects. For example, I was surprised when it immediately guessed one of my test objects was not only from Destiny 2, but was a limited edition from a seasonal event from last year.

At other times, Gemini would be way off the mark, and I would need to give it more hints to get into the ballpark of the right answer. And sometimes, it seemed as though Gemini was taking context from my previous live sessions to come up with answers, identifying multiple objects as coming from Silent Hill when they were not. I have a display case dedicated to the game series, so I could see why it would want to dip into that territory quickly.

Gemini can get full-on bugged out at times. On more than one occasion, Gemini misidentified one of the items as a made-up character from the unreleased Silent Hill: f game, clearly merging pieces of different titles into something that never was. The other consistent bug I experienced was that when Gemini produced an incorrect answer and I corrected it with a closer hint, or straight up gave it the answer, it would repeat the incorrect answer as if it were a new guess. When that happened, I would close the session and start a new one, which wasn’t always helpful.

One trick I found was that some conversations did better than others. If I scrolled through my Gemini conversation list, tapped an old chat that had gotten a specific item correct, and then went live again from that chat, it would be able to identify the items without issue. While that’s not necessarily surprising, it was interesting to see that results varied from one conversation to the next, even when I used the same language.

Google didn’t respond to my requests for more information on how Gemini Live works.

I wanted Gemini to successfully answer my sometimes highly specific questions, so I provided plenty of hints to get there. The nudges were often helpful, but not always. Below are a series of objects I tried to get Gemini to identify and provide information about.

Today’s NYT Connections Hints, Answers and Help for Dec. 24, #927

Here are some hints and the answers for the NYT Connections puzzle for Dec. 24, #927.

Looking for the most recent Connections answers? Click here for today’s Connections hints, as well as our daily answers and hints for The New York Times Mini Crossword, Wordle, Connections: Sports Edition and Strands puzzles.

Today’s NYT Connections puzzle is kind of tough. Ooh, that purple category! Once again, you’ll need to look inside words for hidden words. Read on for clues and today’s Connections answers.

The Times has a Connections Bot, like the one for Wordle. Go there after you play to receive a numeric score and to have the program analyze your answers. Players who are registered with the Times Games section can now nerd out by following their progress, including the number of puzzles completed, win rate, number of times they nabbed a perfect score and their win streak.

Read more: Hints, Tips and Strategies to Help You Win at NYT Connections Every Time

Hints for today’s Connections groups

Here are four hints for the groupings in today’s Connections puzzle, ranked from the easiest yellow group to the tough (and sometimes bizarre) purple group.

Yellow group hint: Cash out.

Green group hint: Chomp.

Blue group hint: Walleye and salmon.

Purple group hint: Make a musical sound, with a twist.

Answers for today’s Connections groups

Yellow group: Slang for money.

Green group: Masticate.

Blue group: Fish.

Purple group: Ways to vocalize musically plus a letter.

Read more: Wordle Cheat Sheet: Here Are the Most Popular Letters Used in English Words

What are today’s Connections answers?

The yellow words in today’s Connections

The theme is slang for money. The four answers are bacon, bread, cheese and paper.

The green words in today’s Connections

The theme is masticate. The four answers are bite, champ, chew and munch.

The blue words in today’s Connections

The theme is fish. The four answers are char, pollock, sole and tang.

The purple words in today’s Connections

The theme is ways to vocalize musically plus a letter. The four answers are hump (hum), rapt (rap), singe (sing) and whistler (whistle).

Don’t miss any of our unbiased tech content and lab-based reviews. Add CNET as a preferred Google source.

Toughest Connections puzzles

We’ve made a note of some of the toughest Connections puzzles so far. Maybe they’ll help you see patterns in future puzzles.

#5: Included “things you can set,” such as mood, record, table and volleyball.

#4: Included “one in a dozen,” such as egg, juror, month and rose.

#3: Included “streets on screen,” such as Elm, Fear, Jump and Sesame.

#2: Included “power ___,” such as nap, plant, Ranger and trip.

#1: Included “things that can run,” such as candidate, faucet, mascara and nose.

Today’s NYT Mini Crossword Answers for Wednesday, Dec. 24

Here are the answers for The New York Times Mini Crossword for Dec. 24.

Looking for the most recent Mini Crossword answer? Click here for today’s Mini Crossword hints, as well as our daily answers and hints for The New York Times Wordle, Strands, Connections and Connections: Sports Edition puzzles.

Need some help with today’s Mini Crossword? I’m Irish-American, yet 6-Down, which involves Ireland, stumped me at first. Read on for all the answers. And if you could use some hints and guidance for daily solving, check out our Mini Crossword tips.

If you’re looking for today’s Wordle, Connections, Connections: Sports Edition and Strands answers, you can visit CNET’s NYT puzzle hints page.

Read more: Tips and Tricks for Solving The New York Times Mini Crossword

Let’s get to those Mini Crossword clues and answers.

Mini across clues and answers

1A clue: Wordle or Boggle

Answer: GAME

5A clue: Big Newton

Answer: ISAAC

7A clue: Specialized vocabulary

Answer: LINGO

8A clue: “See you in a bit!”

Answer: LATER

9A clue: Tone of many internet comments

Answer: SNARK

Mini down clues and answers

1D clue: Sharks use them to breathe

Answer: GILLS

2D clue: From Singapore or South Korea, say

Answer: ASIAN

3D clue: Large ocean ray

Answer: MANTA

4D clue: ___ beaver

Answer: EAGER

6D clue: Second-largest city in the Republic of Ireland, after Dublin

Answer: CORK

Quadrantids Is a Short but Sweet Meteor Shower Just After New Year’s. How to See It

This meteor shower has one of the most active peaks, but it doesn’t last for very long.

The Quadrantids has the potential to be one of the most active meteor showers of the year, and skygazers won’t have long to wait to see it. The annual shower is predicted to reach maximum intensity on Jan. 3. And with a display that can rival Perseids, Quadrantids could be worth braving the cold.

The show officially begins on Dec. 28 and lasts until Jan. 12, according to the American Meteor Society. Quadrantids is scheduled to peak on Jan. 2-3, when it may produce upwards of 125 meteors per hour. This matches Perseids and other larger meteor showers on a per-hour rate, but Quadrantids also has one of the shortest peaks at just 6 hours, so it rarely produces as many meteors overall as the other big ones.

The meteor shower comes to Earth courtesy of the 2003 EH1 asteroid, which is notable because most meteor showers are fed from comets, not asteroids. Per NASA, 2003 EH1 is a near-Earth asteroid that orbits the sun once every five and a half years. Scientists suspect that 2003 EH1 was a comet in a past life, but too many trips around the sun stripped it of its ice, leaving only its rocky core. The Earth runs through EH1’s orbital debris every January, which results in the Quadrantids meteor shower.

How and where to see Quadrantids

Quadrantids is named for the constellation where its meteors appear to originate, a point known as the radiant. This presents another oddity, as the shower originates from the constellation Quadrans Muralis. This constellation ceased to be recognized as an official constellation in the 1920s and isn’t available on most publicly accessible sky maps.

Modern skygazers will instead need to find the Bootes and Draco constellations, both of which contain stars that were once part of Quadrans Muralis. Draco will be easier to find after sunset on the evening of Jan. 2, and will be just above the horizon in the northern sky. Bootes appears to circle Draco, but will remain under the horizon until just after 1 a.m. local time, when it rises in the northeastern sky. From that point forward, both will sit in the northeastern part of the sky until sunrise. You’ll want to point your chair in that direction and stay there to see meteors.

As the American Meteor Society notes, Quadrantids has a short but active peak, lasting around 6 hours. The peak is expected to start around 4 p.m. ET on Jan. 2 and last well into the evening. NASA predicts the shower’s peak will start one day later, on Jan. 3-4, so if you don’t see any meteors on the evening of Jan. 2, try again on Jan. 3.

To get the best results, the standard space viewing tips apply. You’ll want to get as far away from the city and suburbs as possible to reduce light pollution. Since it’ll be so cold outside, dress warmly and abstain from alcoholic beverages, as they can affect your body temperature. You won’t need binoculars or a telescope, as their reduced field of view may actually hurt your ability to see meteors.

The bad news is that either way, the Quadrantids meteor shower coincides almost perfectly with January’s Wolf Moon, which also happens to be a supermoon. This will introduce quite a lot of light pollution, which will likely drown out all but the brightest meteors. So, while it may have a peak of over 100 meteors per hour, both NASA and the AMS agree that the more realistic expectation is 10 or so bright meteors per hour.
