Technologies

Apple Vision Pro’s Biggest Missing Pieces

Commentary: Apple’s AR/VR “spatial computer” pushes the upper limits of immersive tech. But it has some notable omissions.

The evolution of VR and AR is in major flux, and right now Apple’s bleeding-edge, ultra-expensive Vision Pro headset is sitting at the top of the heap — and it’s not even expected to arrive until 2024.

After a demo at WWDC, I came away instantly impressed by how the Vision Pro hardware synthesized so much of what I’ve seen in VR and AR over the last five years. But this time it was all done with Retina Display-level resolution and smooth, easy hand-tracking finesse. At $3,499 (around £2,800 or AU$5,300 converted), Apple’s hardware is priced far beyond VR headsets like the Meta Quest 2, and it also aims to be a full computer experience in AR (as well as VR, even though Apple doesn’t outright acknowledge it).

Even so, there are notable absences from the Vision Pro, at least based on what Apple presented at WWDC. I had expectations about which killer apps and features Apple might build for its spatial computer headset, and only some of them materialized. Maybe others will emerge as we get closer to the headset’s 2024 release, or arrive via software updates, much as Meta has added features to the Quest over time.

Still, I’m surprised they’re not already part of the Vision Pro experience. To me, they’ll eventually make everything I saw work even better.

Fitness

The Meta Quest’s best feature, other than games, is its ability to be a portable exercise machine. Beat Saber was my pandemic home workout, and Meta’s acquisition of Within (maker of Supernatural, a subscription fitness app that pairs with the Apple Watch) indicates how much fitness is already a part of the VR landscape.

Apple is a prime candidate to fuse VR, AR, fitness and health and take the experience far beyond what Meta has done. Apple already has the Apple Watch, Apple Health and Fitness Plus subscription workouts. And yet the Vision Pro has no announced fitness or health apps, except for a sitting-still Meditation app that’s more of a breathing prompt.

When will the Apple Watch become part of the Vision Pro experience?

Even more puzzling: The Vision Pro seemingly doesn’t work with the Apple Watch at all. This could change. Maybe Apple is waiting to discuss this aspect next year. Or, maybe, it will arrive with a future version of the Vision hardware.

Some makers of VR sports games are already announcing Vision Pro ports, including Golf Plus, an app that works in VR with controllers. The assumption, for now, is that these apps will find a way to work with just eye and hand tracking.

Apple didn’t even demonstrate that much active motion inside the Vision Pro; my demos were mostly seated, except for a final walk-around experience where I looked at a dinosaur up close.

Is the dangling battery pack part of the concern? The headset’s weight? Or is Apple starting with computing interfaces first and adding fitness later?

The iPhone in your pocket should ideally interface with Vision Pro, too.

iPhone, iPad and Watch compatibility

Speaking of fitness and the Apple Watch, I always imagined Apple’s AR headset would emphasize seamless compatibility with all of its products. Apple didn’t exactly do that with the Vision Pro, either.

The Vision Pro will work as a monitor-extending device with Macs, providing high-res virtual displays much as headsets like the Quest 2, Quest Pro and others already do. I didn’t get to try using the Vision Pro with a Mac, and I didn’t get to use a trackpad or keyboard, either. The Vision Pro will also work with Magic Trackpads and Magic Keyboards for physical pointing and typing (again, like other VR/AR headsets), in addition to its onboard eye and hand tracking.

And yet, the Vision Pro won’t interface directly with iPhones, iPads or the Apple Watch. Not yet, at least.

The Vision Pro primarily runs iPad-type apps, which is why the iPad Pro seems like the best computer companion to the Vision Pro: it has a keyboard, a trackpad, built-in motion tracking that’s already AR-friendly, front and rear depth-sensing cameras that could help with 3D scanning of environments or faces, and a touchscreen and Pencil stylus.

Qualcomm’s software tools for AR glasses extend phone apps to headsets. The Apple Vision Pro bypasses the phone and works on its own.

Apple is emphasizing that the Vision Pro is a self-contained computer that doesn’t need other devices. That’s understandable, and most of Apple’s cloud services, like FaceTime, will work on it, so the Vision Pro will essentially absorb most iPhone and iPad features. Yet I don’t understand why iPhones, iPads and Watches wouldn’t be welcome input accessories. Their touchscreens and motion sensors could let them act as remotes or physical-feedback devices, similar to how Qualcomm is already approaching the relationship between phones and AR glasses. I hold up my iPhone all the time to enter passwords on the Apple TV. I seamlessly drop photos, links and text from my iPhone over to my Mac.

Touchscreens could act as virtual keyboards. Drawing on the iPad could mirror a 3D art interface. With Apple’s already excellent passthrough cameras, iPhone, iPad and Watch displays could become interactive second screens, tactile interfaces that sprout extra parts in AR. Also, there’s the value of haptics and physical feedback.

The PSVR 2 controller: One advantage to physical devices is physical feedback.

No haptics

The buzzing, tapping and rumbling we feel on our phones, watches and game controllers are feedback tools I’ve really connected with in VR. The PlayStation VR 2 even has rumbling feedback in its headset. The Vision Pro relies on eye and hand tracking, so it has no controllers and no haptic feedback. I’ve been fascinated by the future of haptics, and I saw a lot of experimental solutions earlier this year. For now, though, Apple is sitting out haptics on the Vision Pro.

When I use iPhones and the Watch, I feel those little virtual clicks as reminders of when I’ve opened something, or when information comes in. I feel them as extensions of my perceptual field. In VR, it’s the same way. Apple’s pinch-based hand tracking technically has some physical sensation when your own fingers touch each other, but nothing will buzz or tap to let you know something is happening beyond your field of view — in another open app, for instance, or behind you in an immersive 3D environment.

Microsoft made a similar decision with the HoloLens by offering only in-air hand tracking, but Microsoft’s former AR head, Alex Kipman, told me years ago that haptics were part of the HoloLens roadmap.

Apple already makes haptic devices: the Apple Watch, for example, and all those iPhones, too. I’m surprised the Vision Pro doesn’t already have a solution for haptics. But maybe it’s on the roadmap, too?

Logitech’s VR Ink, released in 2019, is an in-air 3D stylus. How will Apple handle creative tools in 3D?

Will there ever be other accessories like the Pencil?

One of the wildest parts about a mixed-reality future is how it can blend virtual and real tools together, or even invent tools that don’t exist. I’ve had my VR controllers seem to morph into objects that feel like an extension of my body. Some companies, like Logitech, have already developed in-air 3D styluses for creative work in VR and AR.

Apple’s Vision Pro demos didn’t show off any creative apps beyond the collaborative Freeform, and nothing showed how 3D input could be improved with handheld tools.

Maybe Apple is starting off by emphasizing the power of just eyes and hands here, similar to how Steve Jobs initially refused to give the iPad a stylus. But the iPad has a Pencil now, and it’s an essential art tool for many people. Dedicated physical peripherals are helpful, and Apple has none with its Vision Pro headset (yet). I do like VR controllers, and Meta’s clever transforming Quest Pro controllers can be flipped around to become writing tools with an added stylus tip. As a flood of creative apps arrives on the Vision Pro in 2024, will Apple address possibilities for dedicated accessories? Will the Vision Pro allow for easy pairing of them? Hopefully, yes.

The Apple Vision Pro is a long way from arriving, and there’s still so much we don’t know. As Apple’s first AR/VR headset evolves, however, these key aspects should be kept in mind, because they’ll be essential to making the headset feel useful and flexible for everyone.

The Sun’s Temper Tantrums: What You Should Know About Solar Storms

Solar storms are associated with the lovely aurora borealis, but they can have negative impacts, too.

Last month, Earth was treated to a massive aurora borealis that reached as far south as Texas. The event was attributed to a solar storm that lasted nearly a full day and will likely contend for the strongest of 2026. Such solar storms are usually fun for people on Earth, as we are protected from solar radiation by our planet’s magnetic field and atmosphere, so we can just enjoy the gorgeous greens and pretty purples in the night sky.

But solar storms are a lot more than the aurora borealis we see, and sometimes they can cause real damage. There are several examples of this in recorded history, the earliest being the Carrington Event, a solar storm that took place on Sept. 1, 1859. It remains the strongest solar storm ever recorded: the world’s telegraph machines were overloaded with energy from it, shocking their operators, sending ghost messages and even catching fire.

Things have changed a lot since the mid-1800s, and while today’s technology is a lot more resistant to solar radiation than it once was, a solar storm of that magnitude could still cause a lot of damage.

What is a solar storm?

A solar storm is a catchall term for any disturbance on the sun that involves the violent ejection of solar material into space. This can come in the form of coronal mass ejections (CMEs), where clouds of plasma are ejected from the sun, or solar flares, which are concentrated bursts of electromagnetic radiation (aka light).

A sizable percentage of solar storms don’t hit Earth, and the sun is always belching material into space, so minor solar storms are quite common. The only ones people tend to talk about are the bigger ones that do hit Earth. When that happens, they cause geomagnetic storms, in which solar material interacts with Earth’s magnetic field, and the resulting disturbances can cause issues in everything from the power grid to satellites. It’s not unusual to hear “solar storm” and “geomagnetic storm” used interchangeably, since solar storms cause geomagnetic storms.

Solar storms ebb and flow on an 11-year cycle known as the solar cycle. In 2024, NASA scientists announced that the sun had reached the peak of its current cycle, and solar storms have been more frequent as a result. The sun will metaphorically chill out over time, and fewer solar storms will happen until the cycle repeats.

This cycle has been stable for hundreds of millions of years and was first observed in the 18th century by astronomer Christian Horrebow.

How strong can a solar storm get?

The Carrington Event is a standout example of just how strong a solar storm can be, and such events are exceedingly rare. No rating system existed back then, but the storm would certainly have maxed out every scale scientists use today.

We currently gauge solar storm strength on four different scales.

The first rating a solar storm gets is for the material belched out of the sun. Solar flares are graded using the Solar Flare Classification System, a logarithmic intensity scale that starts with A-class at the lowest end and increases through B, C and M to X-class at the strongest. According to NASA, the scale goes up indefinitely and tends to get finicky at higher levels. The strongest solar flare ever measured, in 2003, overloaded the sensors at X17 and was later estimated to be an X45-class flare.
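For readers who want the numbers behind the letters: the classes are conventionally tied to peak soft X-ray flux measured by GOES satellites in the 1–8 Å band, with each letter covering one factor of 10 (A starting at 10⁻⁸ W/m², up to X starting at 10⁻⁴ W/m²) and the number after the letter acting as a multiplier within that decade. The article doesn’t give these thresholds, so treat this Python sketch as an illustration built on that standard convention, not an official tool; the function name `flare_class` is our own.

```python
# Minimal sketch: convert a peak soft X-ray flux into a flare class label.
# Assumes the standard GOES 1-8 angstrom thresholds (not stated in the article).

# Lower bound of each class in watts per square metre, strongest first.
CLASS_THRESHOLDS = [
    ("X", 1e-4),
    ("M", 1e-5),
    ("C", 1e-6),
    ("B", 1e-7),
    ("A", 1e-8),
]


def flare_class(peak_flux_w_m2: float) -> str:
    """Return a label such as 'M2.5' or 'X45' for a peak flux in W/m^2."""
    for letter, floor in CLASS_THRESHOLDS:
        if peak_flux_w_m2 >= floor:
            # The number is a linear multiplier of the class floor, so X has
            # no ceiling: 4.5e-3 W/m^2 is 45 times the X floor.
            multiplier = round(peak_flux_w_m2 / floor, 1)
            return f"{letter}{multiplier:g}"
    raise ValueError("peak flux is below the A-class floor of 1e-8 W/m^2")


print(flare_class(2.5e-5))  # M2.5
print(flare_class(1.7e-3))  # X17
print(flare_class(4.5e-3))  # X45
```

Since X is the top letter, anything stronger simply gets a bigger multiplier, which is how the 2003 flare can be estimated at X45 without moving to a new class.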

CMEs don’t have a named measuring system, but are monitored by satellites and measured based on the impact they have on the Earth’s geomagnetic field.

Once the material hits Earth, NOAA uses three other scales to determine how strong the storm was and which systems it may impact. They include:

- Geomagnetic storm (G1-G5): This scale measures how much of an impact the solar material is having on Earth’s geomagnetic field. Stronger storms can impact the power grid, electronics and voltage systems.

- Solar radiation storm (S1-S5): This measures the amount of solar radiation present, with stronger storms increasing radiation exposure for astronauts in space and for people in high-flying aircraft. It also describes the storm’s impact on satellite functionality and radio communications.

- Radio blackouts (R1-R5): Less commonly used but still very important. A higher R-rating means a greater impact on GPS satellites and high-frequency radios, with the worst case being communication and navigation blackouts.
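Each of these NOAA scales is keyed to a specific measurement: the G scale to the planetary Kp index, the S scale to the flux of protons above 10 MeV (in particle flux units, or pfu) and the R scale to peak X-ray flux, which is why an R3 blackout lines up with an X1 flare. This Python sketch assumes NOAA’s published level thresholds, which the article doesn’t list, and the readings in the example are made up for illustration.

```python
from bisect import bisect_right

# Assumed NOAA thresholds for levels 1 through 5 on each scale.
G_THRESHOLDS_KP = [5, 6, 7, 8, 9]                    # planetary Kp index
S_THRESHOLDS_PFU = [1e1, 1e2, 1e3, 1e4, 1e5]         # >=10 MeV protons, pfu
R_THRESHOLDS_W_M2 = [1e-5, 5e-5, 1e-4, 1e-3, 2e-3]   # X-ray flux: M1, M5, X1, X10, X20


def scale_level(value: float, thresholds: list[float]) -> int:
    """Return 0 (below the scale) through 5 by counting thresholds met."""
    return bisect_right(thresholds, value)


def noaa_levels(kp: float, proton_pfu: float, xray_w_m2: float) -> dict[str, int]:
    """Map three space-weather readings to G, S and R levels."""
    return {
        "G": scale_level(kp, G_THRESHOLDS_KP),      # Kp 9- (8.67) still counts as G4
        "S": scale_level(proton_pfu, S_THRESHOLDS_PFU),
        "R": scale_level(xray_w_m2, R_THRESHOLDS_W_M2),
    }


# Hypothetical readings during a strong storm.
print(noaa_levels(kp=8.67, proton_pfu=150, xray_w_m2=4.5e-3))
# {'G': 4, 'S': 2, 'R': 5}
```

Using `bisect_right` means a reading exactly on a threshold counts toward that level, matching how the scales are usually read.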

Solar storms also cause auroras by exciting the molecules in Earth’s atmosphere, which then light up as they “calm down,” per NASA. The strength and reach of the aurora generally correlate with the strength of the storm. G1 storms rarely push an aurora farther south than Canada, while a G5 storm may be visible as far south as Texas and Florida. The next time you see a forecast calling for a big aurora, you can assume a big solar storm is on the way.

How dangerous is a solar storm?

The overwhelming majority of solar storms are harmless. We now have protections against their effects that didn’t exist back when telegraphs were catching fire, and most solar storms are small and pose no threat to people on the surface, since Earth’s magnetic field protects us from the worst of it.

That isn’t to say they pose no threats. People at high altitudes, from airline passengers and crews to astronauts in space, may be exposed to ionizing radiation (the bad kind of radiation). NOAA says this can happen with an S2 or higher storm, although location matters a lot here. Flights over the polar caps during solar storms are far more susceptible than your standard trip from Chicago to Houston, and airlines have a whole host of rules to monitor space weather, reroute flights and track long-term radiation exposure for flight crews to minimize potential cancer risks.

Larger solar storms can knock quite a few systems out of whack. NASA says that powerful storms can impact satellites, cause radio blackouts, shut down communications, disrupt GPS and cause damaging power fluctuations in the power grid. That means everything from high-frequency radio to cellphone reception could be affected, depending on the severity.

A good example of this is the Halloween solar storms of 2003. A series of powerful solar flares hit Earth on Oct. 28-31, causing a solar storm so massive that loads of things went wrong. Most notably, airplane pilots had to change course and lower their altitudes due to the radiation wreaking havoc on their instruments, and roughly half of the world’s satellites were entirely lost for a few days.

A paper titled “Flying Through Uncertainty” was published about the Halloween storms and the troubles they caused. Researchers note that 59% of all satellites orbiting Earth at the time suffered some sort of malfunction, from thrusters going offline to some satellites shutting down entirely. Recovering the lost satellites took around-the-clock work from NASA and other space agencies to locate them and bring them back online.

Earth hasn’t experienced a solar storm on the level of the Carrington Event since 1859, so the maximum damage one could cause in modern times is unknown. The European Space Agency has run simulations, and, spoiler alert, the results weren’t promising. A solar storm of that caliber would have a high chance of damaging almost every satellite in orbit, which would cause a lot of problems here on Earth as well. The simulations also pointed to significant risks of electrical blackouts and damage. It would make one heck of an aurora, but you might have to wait to post it on social media until things came back online.

Do we have anything to worry about?

We’ve mentioned two massive solar storms: the Halloween storms and the Carrington Event. Storms that large occur very infrequently; in fact, those two took place nearly 150 years apart. They still aren’t the strongest storms on record, though. The very worst Earth has ever seen are known as Miyake events.

Miyake events are points in history when massive solar storms are thought to have occurred. They are identified by sharp spikes in carbon-14 preserved in tree rings. Miyake events are few and far between, but scientists believe at least 15 have occurred over the past 15,000 years. That includes one in 12350 BCE, which may have been twice as large as any other known Miyake event.

They currently hold the title of the largest solar storms that we know of, and are thought to be caused by superflares and extreme solar events. If one of these happened today, especially one as large as the one in 12350 BCE, it would likely cause widespread, catastrophic damage and potentially threaten human life.

Those only appear to happen about once every several hundred to a couple thousand years, so it’s exceedingly unlikely that one is coming anytime soon. But solar storms on the level of the Halloween storms and the Carrington Event have happened in modern history, and humans have managed to survive them, so for the time being, there isn’t too much to worry about.
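The rarity argument can be put in rough numbers. Taking the article’s figure of at least 15 Miyake events in 15,000 years, and assuming (a simplification the article doesn’t make) that they strike independently at a constant average rate λ, a Poisson model gives the chance of at least one event in a window of t years as 1 − e^(−λt). This Python sketch is only that back-of-the-envelope estimate; the function name is our own.

```python
import math


def chance_of_at_least_one(events: int, span_years: float, window_years: float) -> float:
    """Poisson estimate of P(at least one event) in a window, from a historical count."""
    rate_per_year = events / span_years          # lambda, events per year
    return 1 - math.exp(-rate_per_year * window_years)


# At least 15 Miyake events in the past 15,000 years, per the article.
for window in (10, 100):
    p = chance_of_at_least_one(15, 15_000, window)
    print(f"{window:>3} years: {p:.1%}")
# " 10 years: 1.0%"
# "100 years: 9.5%"
```

Because 15 is a lower bound and the real events may not be evenly random, these figures only translate “once every several hundred to a couple thousand years” into rough odds for the next decade or century.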

TMR vs. Hall Effect Controllers: Battle of the Magnetic Sensing Tech

The magic of magnets tucked into your joysticks can put an end to drift. But which technology is superior?

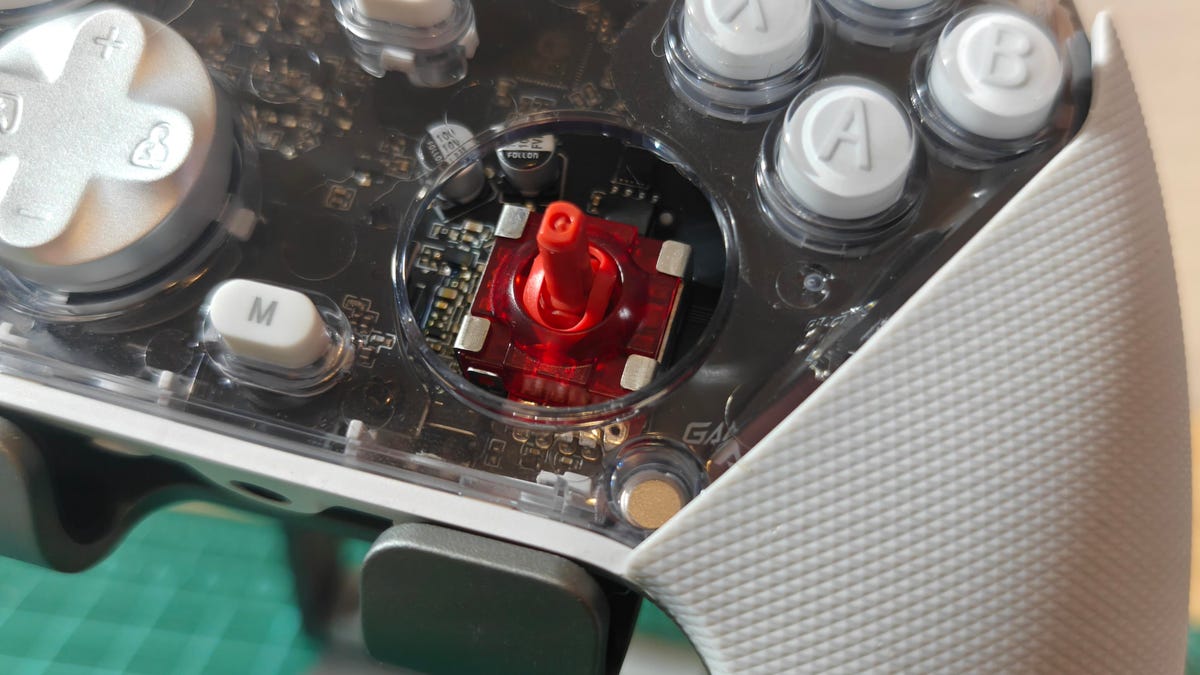

Competitive gamers look for every advantage they can get, and that drive has spawned some of the zaniest gaming peripherals under the sun. But plenty of hardware components do offer meaningful edges when implemented properly. Hall effect and TMR (tunnel magnetoresistance or tunneling magnetoresistance) sensors are two such technologies. Hall effect sensors have found their way into a wide variety of devices, from keyboards to gaming controllers, among them some of our favorites, like the GameSir Super Nova.

More recently, TMR sensors have started to appear in these devices as well. Is it a better technology for gaming? With multiple options vying for your lunch money, it’s worth understanding the differences to decide which is more worthy of living inside your next game controller or keyboard.

How Hall effect joysticks work

We’ve previously broken down the difference between Hall effect tech and traditional potentiometers in controller joysticks, but here’s a quick rundown on how Hall effect sensors work. A Hall effect joystick moves a magnet over a sensor circuit, and the magnetic field affects the circuit’s voltage. The sensor in the circuit measures these voltage shifts and maps them to controller inputs. Element14 has a lovely visual explanation of this effect.
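As a rough illustration of that last mapping step, here is a Python sketch that turns one axis’s sensor voltage into a stick position between -1 and 1. It assumes the output voltage changes roughly linearly with deflection and that the controller stores three calibration points per axis; the `AxisCalibration` class and the 0.5 V, 1.65 V and 2.8 V values are hypothetical, and real firmware typically works in ADC counts and may use more elaborate calibration.

```python
from dataclasses import dataclass


@dataclass
class AxisCalibration:
    """Hypothetical per-axis calibration points, in volts."""
    v_min: float     # sensor output with the stick fully left (or down)
    v_center: float  # sensor output with the stick at rest
    v_max: float     # sensor output with the stick fully right (or up)


def voltage_to_axis(v: float, cal: AxisCalibration) -> float:
    """Map a Hall sensor voltage to a stick position in [-1.0, 1.0]."""
    if v >= cal.v_center:
        # Scale the upper half of travel separately so an off-center rest
        # voltage still maps to exactly 0.
        pos = (v - cal.v_center) / (cal.v_max - cal.v_center)
    else:
        pos = (v - cal.v_center) / (cal.v_center - cal.v_min)
    # Clamp readings that overshoot the calibrated range.
    return max(-1.0, min(1.0, pos))


cal = AxisCalibration(v_min=0.5, v_center=1.65, v_max=2.8)
print(voltage_to_axis(1.65, cal))  # 0.0
print(voltage_to_axis(2.8, cal))   # 1.0
print(voltage_to_axis(0.5, cal))   # -1.0
```

A two-axis stick simply runs one of these per axis, typically from two sensors or one sensor that reports both field directions.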

The advantage this tech has over potentiometer-based joysticks used in controllers for decades is that the magnet and sensor don’t need to make physical contact. There’s no rubbing action to slowly wear away and degrade the sensor. So, in theory, Hall effect joysticks should remain accurate for the long haul.

How TMR joysticks work

While TMR works differently, it’s a similar concept to Hall effect devices. When you move a TMR joystick, it moves a magnet in the vicinity of the sensor. So far, it’s the same, right? Except with TMR, this shifting magnetic field changes the resistance in the sensor instead of the voltage.
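A controller’s electronics ultimately read a voltage, so that resistance change still has to be converted. One common way to do this with magnetoresistive sensors (our assumption, since the article doesn’t describe the circuitry) is a Wheatstone bridge, in which opposing resistor arms move in opposite directions as the magnet shifts. This Python sketch computes the differential output of such a bridge; the function name and the 10 kΩ, 3.3 V example values are illustrative.

```python
def full_bridge_output(v_supply: float, r0: float, delta: float) -> float:
    """Differential output (volts) of a push-pull full Wheatstone bridge.

    As the magnet moves, two opposite arms rise to r0 * (1 + delta) and the
    other two fall to r0 * (1 - delta), where delta is the fractional change.
    """
    r_up = r0 * (1 + delta)
    r_down = r0 * (1 - delta)
    # Left divider: r_down on top, r_up on the bottom.
    v_left = v_supply * r_up / (r_down + r_up)
    # Right divider: mirrored, r_up on top, r_down on the bottom.
    v_right = v_supply * r_down / (r_up + r_down)
    return v_left - v_right


print(full_bridge_output(3.3, 10_000, 0.0))   # 0.0 with the stick centered
print(full_bridge_output(3.3, 10_000, 0.05))  # roughly 0.165
```

With arms that change by ±5%, the output works out to 5% of the supply voltage, and the sign flips when the stick moves the other way, so the same kind of voltage-to-position mapping a Hall effect stick uses can take over from there.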

There’s a useful online demonstration of a TMR sensor in action. Just like Hall effect joysticks, TMR joysticks don’t rely on physical contact to register inputs and therefore won’t suffer the wear and drift that affects potentiometer-based joysticks.

Which is better, Hall effect or TMR?

There’s no hard and fast answer to which technology is better. After all, the actual implementation of the technology and the hardware it’s built into can be just as important, if not more so. Both technologies can provide accurate sensing, and neither requires physical contact with the sensing chip, so both can be used for precise controls that won’t encounter stick drift. That said, there are some potential advantages to TMR.

According to Coto Technology (which, in fairness, makes TMR sensors), TMR sensors can be more sensitive, allowing for either greater precision or the use of smaller magnets. Because the Hall effect is subtler, Hall sensors rely on amplification, which ultimately requires extra power. While power requirements vary from sensor to sensor, GameSir claims its TMR joysticks use about one-tenth the power of mainstream Hall effect joysticks. Cherry is another brand highlighting the lower power consumption of TMR sensors, albeit in its keyboard switches.

The greater precision is an opportunity for TMR joysticks to come out ahead, but that will depend more on the controller itself than the technology. Strange response curves, a big dead zone (which shouldn’t be needed), or low polling rates could prevent a perfectly good TMR sensor from beating a comparable Hall effect sensor in a better optimized controller.
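To see why an oversized dead zone can waste a sensitive sensor, consider how controllers commonly shape stick input. This Python sketch applies a radial dead zone and a simple power-law response curve; the function name, the default 5% dead zone and the exponent parameter are hypothetical choices, not any manufacturer’s actual settings.

```python
import math


def shape_stick(x: float, y: float, deadzone: float = 0.05, exponent: float = 1.0):
    """Apply a radial dead zone and a power response curve to raw stick input.

    x and y are raw positions in [-1, 1]; returns the shaped (x, y).
    """
    magnitude = math.hypot(x, y)
    if magnitude <= deadzone:
        return 0.0, 0.0  # movement inside the dead zone is discarded
    # Rescale so output starts at 0 just outside the dead zone and reaches
    # 1 at full tilt, clamping diagonals that overshoot the unit circle.
    scaled = min(1.0, (magnitude - deadzone) / (1.0 - deadzone))
    curved = scaled ** exponent  # exponent > 1 softens small movements
    factor = curved / magnitude
    return x * factor, y * factor


# A small, deliberate nudge of 8% of full travel:
print(shape_stick(0.08, 0.0, deadzone=0.05))  # a small but nonzero output
print(shape_stick(0.08, 0.0, deadzone=0.15))  # (0.0, 0.0): the nudge is lost
```

However precise the sensor, everything inside the dead zone is thrown away, which is why a controller that doesn’t need a large one has more room to show off TMR’s extra sensitivity.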

The power savings will likely be the advantage most of us really feel. They won’t matter for wired controllers, but they can go a long way for wireless ones. Take the Razer Wolverine V3 Pro, for instance, a Hall effect controller offering 20 hours of battery life from a 4.5-watt-hour battery, with support for a 1,000Hz polling rate on a wireless connection. Razer also offers the Wolverine V3 Pro 8K PC, a near-identical controller with the same battery but TMR sensors. Razer claims the TMR version can go for 36 hours on a charge, though that’s presumably before cranking it up to an 8,000Hz polling rate, something Razer may have left off the Hall effect model because of power usage.
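Razer’s figures allow a quick back-of-the-envelope comparison: dividing battery capacity by claimed runtime gives the average power each controller draws. This is whole-controller draw (radio, chips and sensors together) under whatever conditions Razer tested, which the article doesn’t specify, so this Python sketch shows the overall gap, not how much of it comes from the sensors themselves.

```python
BATTERY_WH = 4.5  # same battery in both Wolverine V3 Pro variants, per the article


def average_draw_watts(battery_wh: float, runtime_hours: float) -> float:
    """Average whole-controller power implied by capacity and claimed runtime."""
    return battery_wh / runtime_hours


hall = average_draw_watts(BATTERY_WH, 20)  # Hall effect model, 20-hour claim
tmr = average_draw_watts(BATTERY_WH, 36)   # TMR model, 36-hour claim

print(f"Hall effect: {hall * 1000:.0f} mW, TMR: {tmr * 1000:.0f} mW")
# Hall effect: 225 mW, TMR: 125 mW
print(f"TMR model draws {1 - tmr / hall:.0%} less on average")
# TMR model draws 44% less on average
```

A 100 mW difference is substantial for a handheld device, even if only part of it can be credited to the sensors.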

The main disadvantage of TMR sensors would be cost, but that appears negligible when factored into the overall price of a controller. Both versions of the aforementioned Razer controller are $199. Both 8BitDo and GameSir have managed to fit them into reasonably priced controllers like the 8BitDo Ultimate 2, GameSir G7 Pro and GameSir Cyclone 2.

So which wins?

It seems TMR joysticks have all the advantages of Hall effect joysticks and then some, bringing better power efficiency that can help in wireless applications. The one big downside might be price, but from what we’ve seen so far, that doesn’t seem to be much of an issue. You can even find both technologies in controllers that cost less than some potentiometer-based models, such as the Xbox Elite Series 2 controller.

Caveats to consider

For all the hype, neither Hall effect nor TMR joysticks are perfect. One of their key selling points is that they won’t experience stick drift, but there are still elements of the joystick that can wear down. The ring around the joystick can lose its smoothness. The stick material can wear down (ever tried to use a controller with the rubber worn off its joystick? It’s not pleasant). The linkages that hold the joystick upright and the springs that keep it stiff can loosen, degrade and fill with dust. All of these can impact the continued use of the joystick, even if the Hall effect or TMR sensor itself is in perfect operating order.

So you might not get stick drift from a bad sensor, but you could get stick drift from a stick that simply doesn’t return to its original resting position. That’s when having a controller that’s serviceable or has swappable parts, like the PDP Victrix Pro BFG, could matter just as much as having one with Hall effect or TMR joysticks.
