Technologies

A Decade Later, Your Phone Still Does Not Replace a Pro Camera

Commentary: Phone cameras are getting better and better, but they still aren’t much closer to replacing dSLRs and professional mirrorless cameras.

On a chilly Saturday afternoon in San Francisco, I was under a patio heater with a group of friends when someone said we should get a group photo. What happened next surprised me. Instead of using his phone to take a commemorative photo, my friend pulled out a point-and-shoot camera. I thought to myself, “Wait. The phone killed the point-and-shoot camera years ago. Why didn’t he just use his iPhone?” Granted, it was the high-end Sony RX100 VII, an excellent compact camera and one of the few point-and-shoots still made today.

Apple, Samsung and Google make some of the best phone cameras you can buy, in phones like the iPhone 14 Pro, Google Pixel 7 Pro and Samsung Galaxy S22 Ultra. But for professional photographers and filmmakers, that’s not always enough. The holy grail is a phone with a truly large image sensor, like the one you’d find in a high-end mirrorless camera, and a lens mount for interchangeable lenses. Sounds simple enough, right? Wrong.

Everyone from Samsung to Panasonic, Sony and Motorola has tried to make this dream a reality in some way. Now Xiaomi, the world’s third-largest phone-maker (behind Samsung and Apple), is the latest to rekindle the quest for the phone camera holy grail. The company has a new prototype phone that lets you mount a Leica M lens on it.

But this is just a concept. If you’re wondering whether phones will ever make dedicated pro cameras obsolete the way they did point-and-shoots, the answer is a resounding no. The past decade has shown us why.

Why phone cameras are limited

First, it’s important to understand how your phone’s camera works. Behind the lens is a tiny image sensor, smaller than a single Lego brick. Every so often, headlines announce that Sony, Sharp or, years ago, Panasonic put a 1-inch sensor in a phone. Sadly, that name doesn’t refer to the sensor’s actual dimensions: a 1-inch image sensor is about 0.6 of an inch diagonally or, for the sake of approximation, two Lego bricks. Like the hoverboard, the 1-inch sensor doesn’t quite live up to its name, but it’s still one of the largest sensors ever put into a phone.

Full-frame sensors in dedicated cameras are closer to 12 Lego bricks (positioned side-by-side in a four-by-three rectangle), and most of these cameras come with a lens mount that lets you change lenses. The “holy grail” is to put one of these larger sensors into a phone.
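To put rough numbers on the Lego comparison, here is a minimal Python sketch that computes each sensor’s diagonal in inches and its area relative to a 1-inch type sensor. The dimensions are commonly cited nominal values supplied for illustration, not figures from this article, and real sensors vary slightly by manufacturer.

```python
import math

# Nominal sensor dimensions in millimetres (width, height).
# Assumption: commonly cited values, not figures from the article.
SENSORS = {
    "1-inch type": (13.2, 8.8),
    "APS-C (Sony)": (23.5, 15.6),
    "Full frame": (36.0, 24.0),
}

MM_PER_INCH = 25.4


def diagonal_mm(width, height):
    # Diagonal of a rectangular sensor via the Pythagorean theorem.
    return math.hypot(width, height)


def area_mm2(width, height):
    return width * height


def compare(reference="1-inch type"):
    """Return (name, diagonal in inches, area relative to reference) tuples."""
    ref_area = area_mm2(*SENSORS[reference])
    rows = []
    for name, (w, h) in SENSORS.items():
        diag_in = diagonal_mm(w, h) / MM_PER_INCH
        ratio = area_mm2(w, h) / ref_area
        rows.append((name, round(diag_in, 2), round(ratio, 1)))
    return rows


if __name__ == "__main__":
    for name, diag_in, ratio in compare():
        print(f"{name:14} diagonal {diag_in:.2f} in, "
              f"{ratio:.1f}x the area of a 1-inch type sensor")
```

Run as a script, it reports a 1-inch type sensor at about 0.62 inch diagonally and a full-frame sensor at roughly 7.4 times its area, in the same ballpark as the two-brick versus 12-brick comparison.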

But bigger sensors are more expensive than the little ones used in your iPhone, and they take up more space. A lens for a phone camera sensor is relatively small, but lenses for a full-frame sensor are larger and need more space between the back of the lens and the sensor. Phones simply don’t have that room unless they become significantly thicker.

Every year we see Apple, Samsung and the like take small steps toward improving phone photography. But phone camera hardware has largely hit a ceiling. Instead of radical camera improvements, we get modest upgrades. This could be a sign that companies have homed in on what consumers want. But it could also be a consequence of the space and size limitations of tiny sensors.

Instead, smartphone-makers use computational photography to overcome a tiny sensor’s limitations: smaller dynamic range and lower light sensitivity. Google, Apple and Samsung all use machine learning algorithms and artificial intelligence to improve the photos you take with your phone.

But hardware is also important. Earlier this month Tim Cook, Apple’s CEO, shared a photo on Twitter of a visit to Sony in Japan. While it’s been widely assumed that Apple uses Sony’s image sensors in the iPhone, this is the first time Cook has formally acknowledged it. And as CNET readers already know, Sony phones like the Xperia 1 IV have some of the best camera hardware found on any phone sold today.

The Xperia 1 IV won a CNET Innovation award for its telephoto camera, which has miniature lens elements that actually move back and forth, like a real telephoto lens. The result is that you can use the lens to zoom without cropping digitally, which degrades the image. Can you imagine an iPhone 15 Pro with this lens?

The Xiaomi 12S Ultra Leica lens prototype is so 2013

That brings us to Xiaomi, which is the latest company attempting to merge pro-level cameras with your phone. In November, Xiaomi released a video of a phone camera concept that shows a Leica lens mounted on a 12S Ultra phone. This prototype is like a concept car: No matter how cool it is, you’ll never get to drive it.

The Chinese company took the 12S Ultra and added a removable ring around its circular camera bump. The ring covers a thread around the outside edge of the camera bump, onto which you can screw an adapter that lets you mount Leica M lenses. The adapter’s thickness matches the distance a Leica M lens needs to sit from the sensor in order to focus.

A few caveats: The Xiaomi 12S Ultra concept uses an exposed 1-inch sensor, which, as I mentioned earlier, isn’t actually 1 inch. Next, this is purely a concept. If something like this actually went on sale, it would cost thousands of dollars. By comparison, a nice dedicated camera like the Fujifilm X100 V, which has a much bigger sensor, costs $1,399.

Xiaomi isn’t the first phone-maker to try this. In 2013, Sony put an image sensor on the back of a lens with a grip that attaches to the back of a phone. The idea is to use your phone’s screen as the viewfinder for the camera system, which you control through an app. Essentially, you bypass your phone’s cameras.

Sony made several different versions of this “lens with a grip” that used sensors just a bit bigger than those found in phone cameras. Sony also made the QX-1 camera, which had an APS-C-sized sensor that, in our Lego approximation, is about six bricks positioned side-by-side in a three-by-two rectangle. That’s not as large as a full-frame sensor, but it’s vastly bigger than your phone’s image sensors.

The Sony QX-1 has a Sony E-mount, meaning you can use various E-mount lenses or use adapters for Canon or Nikon lenses. Because the QX-1 is controlled wirelessly, you could either attach it to your phone or put it in different places to take photos remotely.

The QX-1 came out in 2014 and cost $350. Imagine having something like this today. I would definitely buy a 2022 version if Sony made it, but sadly the QX-1 was discontinued a few years after it went on sale. That’s around the time that Red, the company that makes cinema cameras used to film shows and movies like The Hobbit, The Witcher, Midsommar and The Boys, made a phone called the Red Hydrogen One.

Despite being a phone made by one of the best camera companies in the world, the $1,300 Red Hydrogen One had cameras on par with those from a $700 Android phone. The back of the phone had pogo pins designed to attach different modules (like Moto Mods), including a “cinema camera module” that, according to patent drawings, housed a large image sensor and a lens mount. The idea was that you would use a Hydrogen One and the cinema mod to turn the phone into a mini Red cinema camera.

Well, that never happened.

The Red Hydrogen One was discontinued and now shows up as a phone prop in films like F9, on the dashboard of Dominic Toretto’s car or in the hands of Leonard DiCaprio in Don’t Look Up.

2023 will show that pro cameras won’t be killed off by our phones

There aren’t any rumors that Apple is making an iPhone with a camera lens mount, nor are there murmurs of a Google mirrorless camera. But if Xiaomi made a prototype of a phone with a professional lens mount, you have to imagine that somewhere in the basement of Apple Park sits an old concept camera that runs an iOS-like interface, is powered by the iPhone’s A-series chip and can use some of the same computational photography processing. Or at least that’s what I’d like to believe.

How amazing would photos look from a pro-level dedicated camera that uses the same processing tricks that Apple or Google implement on their phones? And how nice would it be to have a phone-like OS to share those photos and videos to Instagram or TikTok?

Turns out, Samsung tried bringing an Android phone’s interface to a camera in 2012. Noticing a theme here? Most of these holy grail phone camera concepts were tried 10 years ago. A few of these, like the Sony QX-1, were truly ahead of their time.

I don’t think Apple will ever release a standalone iOS-powered camera or make an iPhone with a Leica lens mount. The truth is that over the past decade, cameras have gotten smaller. The bulky dSLRs that signified professional cameras for years are quickly heading into the sunset. Mirrorless cameras have risen in popularity. They tend to be smaller, since they don’t need the space for a dSLR mirror box.

If there is a takeaway from all of this, it’s just a reminder of how good the cameras on our phones have gotten in that time. Even if it feels like they’ve plateaued, they’re dependable for most everyday tasks. But they won’t be replacing professional cameras anytime soon.

If you want to step up to a professional camera, look for one like the Fujifilm X100 V or Sony A7C, which pack a large image sensor and a sharp lens into a body that can fit into a coat pocket. And next time I’m at a dinner party with friends, I won’t act so shocked when someone wants to take a picture with a camera instead of a phone.

Read more: Pixel 7 Pro Actually Challenges My $10,000 DSLR Camera Setup

This 3-in-1 Charger Is a Must-Have for Travelers, and It Just Hit a Record-Low of $95

Snag it for $45 off and charge your iPhone, AirPods and Apple Watch at the same time.

If you’re a frequent traveler, then you know that outlets are a precious commodity in places like airports and coffee shops. So why waste one on a single device when you can charge up to three at once? Right now, you can grab this seriously sleek Ugreen Magflow three-in-one foldable charger for just $95 at Amazon. That’s a $45 discount and the all-time lowest price we’ve seen. Just don’t wait too long, as this deal could expire at any time.

At just 7.4 ounces, this compact charging station is designed to be taken on the go. But despite its small size, it still supports 25-watt MagSafe charging for iPhones, as well as 5-watt wireless charging for AirPods and Apple Watches. The phone charging pad also tilts up to double as a stand, and it’s equipped with 16 magnets to keep your phone aligned and securely in place. Plus, it has built-in protections against overheating, overcharging, short-circuiting and more to prevent damage to your devices.

Why this deal matters

This folding Ugreen charger is great for juicing up your devices on the go, and it’s never been more affordable. Plus, Ugreen makes some of the best MagSafe chargers on the market right now, so don’t miss your chance to grab one at a record-low price.

Technologies

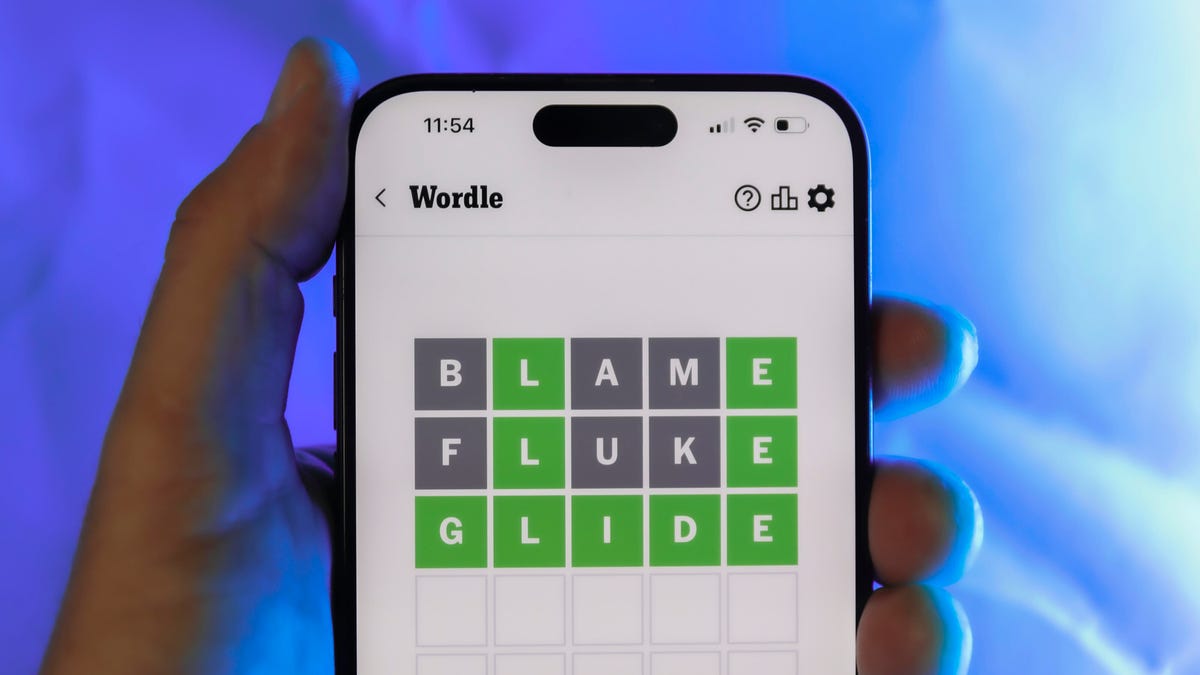

Today’s Wordle Hints, Answer and Help for Jan. 13, #1669

Here are hints and the answer for today’s Wordle for Jan. 13, No. 1,669.

Looking for the most recent Wordle answer? Click here for today’s Wordle hints, as well as our daily answers and hints for The New York Times Mini Crossword, Connections, Connections: Sports Edition and Strands puzzles.

Today’s Wordle puzzle is a little tricky, and it might make you hungry. If you need a new starter word, check out our list of which letters show up the most in English words. If you need hints and the answer, read on.

Read more: New Study Reveals Wordle’s Top 10 Toughest Words of 2025

Today’s Wordle hints

Before we show you today’s Wordle answer, we’ll give you some hints. If you don’t want a spoiler, look away now.

Wordle hint No. 1: Repeats

Today’s Wordle answer has no repeated letters.

Wordle hint No. 2: Vowels

Today’s Wordle answer has two vowels.

Wordle hint No. 3: First letter

Today’s Wordle answer begins with G.

Wordle hint No. 4: Last letter

Today’s Wordle answer ends with O.

Wordle hint No. 5: Meaning

Today’s Wordle answer can refer to a spicy Cajun stew popular in New Orleans.

TODAY’S WORDLE ANSWER

Today’s Wordle answer is GUMBO.

Yesterday’s Wordle answer

Yesterday’s Wordle answer, Jan. 12, No. 1,668, was TRIAL.

Recent Wordle answers

Jan. 8, No. 1,664: BLAST

Jan. 9, No. 1,665: EIGHT

Jan. 10, No. 1,666: MANIC

Jan. 11, No. 1,667: QUARK

Don’t miss any of our unbiased tech content and lab-based reviews. Add CNET as a preferred Google source.

What’s the best Wordle starting word?

Don’t be afraid to use our tip sheet ranking all the letters in the alphabet by frequency of use. In short, you want starter words that lean heavily on E, A and R and avoid Z, J and Q.

Some solid starter words to try:

ADIEU

TRAIN

CLOSE

STARE

NOISE
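The idea behind a letter-frequency tip sheet can be sketched in a few lines of Python: count how many words contain each letter, then score a starter word by summing the counts of its distinct letters. This is a minimal illustration assuming a hypothetical sample list built from today’s, yesterday’s and the recent answers above; six words are far too few to reflect real English letter frequencies, so swap in a full word list for meaningful rankings.

```python
from collections import Counter

# Hypothetical sample: the Wordle answers listed in this article.
# A real ranking would use a much larger word list.
SAMPLE_WORDS = ["GUMBO", "TRIAL", "BLAST", "EIGHT", "MANIC", "QUARK"]
STARTERS = ["ADIEU", "TRAIN", "CLOSE", "STARE", "NOISE"]


def letter_frequencies(words):
    """Count how many words contain each letter (once per word)."""
    counts = Counter()
    for word in words:
        counts.update(set(word))  # a letter counts once per word
    return counts


def score(word, freqs):
    # Sum frequencies of distinct letters; a repeated letter adds no new info.
    return sum(freqs[letter] for letter in set(word))


def rank_starters(starters, words):
    """Order starter words from highest to lowest letter-frequency score."""
    freqs = letter_frequencies(words)
    return sorted(starters, key=lambda w: score(w, freqs), reverse=True)


if __name__ == "__main__":
    print(rank_starters(STARTERS, SAMPLE_WORDS))
```

On this tiny sample, TRAIN comes out on top, but the order will shift once the frequencies come from a larger word list.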

Technologies

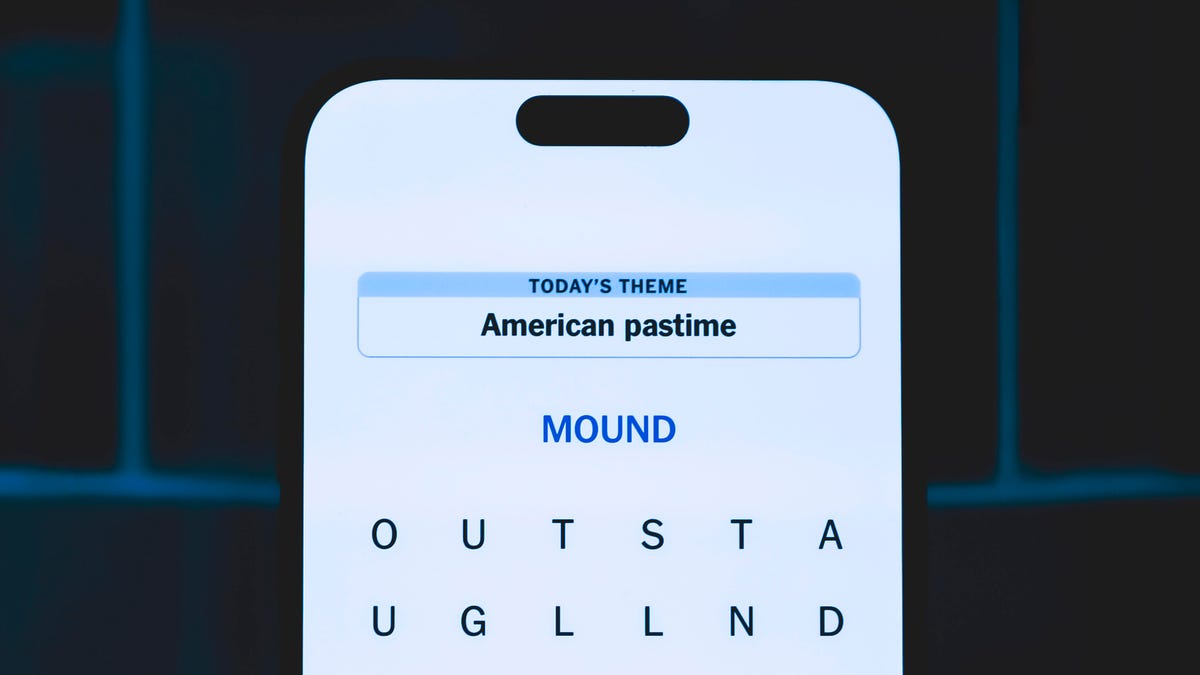

Today’s NYT Strands Hints, Answers and Help for Jan. 13 #681

Here are hints and answers for the NYT Strands puzzle for Jan. 13, No. 681.

Looking for the most recent Strands answer? Click here for our daily Strands hints, as well as our daily answers and hints for The New York Times Mini Crossword, Wordle, Connections and Connections: Sports Edition puzzles.

It took me a while to figure out the theme for today’s NYT Strands puzzle, but once I did, I thought it was a fun one. Some of the answers are difficult to unscramble, so if you need hints and answers, read on.

I go into depth about the rules for Strands in this story.

If you’re looking for today’s Wordle, Connections and Mini Crossword answers, you can visit CNET’s NYT puzzle hints page.

Read more: NYT Connections Turns 1: These Are the 5 Toughest Puzzles So Far

Hint for today’s Strands puzzle

Today’s Strands theme is: You need to chill

If that doesn’t help you, here’s a clue: Brrrr!

Clue words to unlock in-game hints

Your goal is to find hidden words that fit the puzzle’s theme. If you’re stuck, find any words you can. Every time you find three words of four letters or more, Strands will reveal one of the theme words. These are the words I used to get those hints but any words of four or more letters that you find will work:

- GONE, ABLE, TABLE, FOOD, TEEN, LEAF, GOOF, GOOD, SAFE

Answers for today’s Strands puzzle

These are the answers that tie into the theme. The goal of the puzzle is to find them all, including the spangram, a theme word that reaches from one side of the puzzle to the other. When you have all of them (I originally thought there were always eight but learned that the number can vary), every letter on the board will be used. Here are the nonspangram answers:

- PIZZA, SHERBET, POPSICLES, WAFFLES, VEGETABLES

Today’s Strands spangram

Today’s Strands spangram is FROZENFOOD. To find it, start with the F that is five letters down in the far-right column, and wind backward.

Toughest Strands puzzles

Here are some of the Strands topics I’ve found to be the toughest.

#1: Dated slang. Maybe you didn’t even use this lingo when it was cool. Toughest word: PHAT.

#2: Thar she blows! I guess marine biologists might ace this one. Toughest word: BALEEN or RIGHT.

#3: Off the hook. Again, it helps to know a lot about sea creatures. Sorry, Charlie. Toughest word: BIGEYE or SKIPJACK.
