Technologies

Gemini Live’s New Camera Trick Works Like Magic — When It Wants To

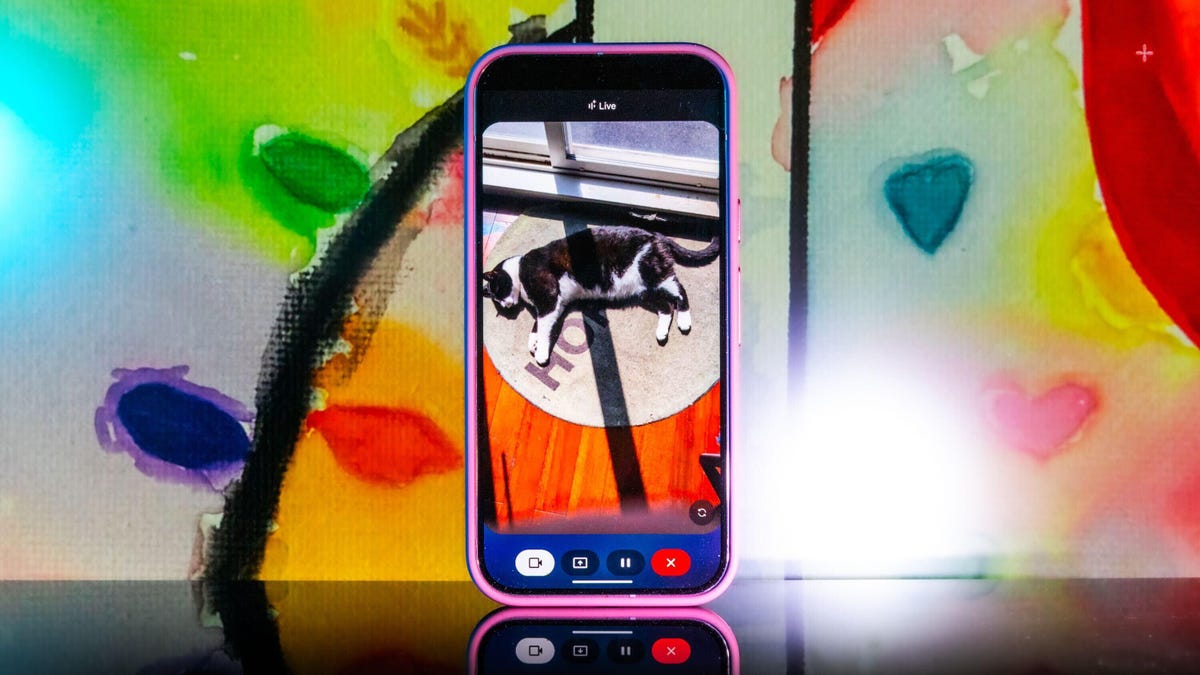

Gemini Live’s new camera mode can identify objects around you and more. I tested it out with my offbeat collectibles.

When Gemini Live’s new camera feature popped up on my phone, I didn’t hesitate to try it out. In one of my longer tests, I turned it on and started walking through my apartment, asking Gemini what it saw. It identified some fruit, ChapStick and a few other everyday items with no problem, but I was impressed when I asked where I had left my scissors. “I just spotted your scissors on the table, right next to the green package of pistachios. Do you see them?”

It was right, and I was wowed.

I never mentioned the scissors while I was giving Gemini a tour of my apartment, but I made sure their placement was in the camera view for a couple of seconds before moving on and asking additional questions about other objects in the room.

I was following the lead of the demo that Google did last summer when it first showed off these Live video AI capabilities. Gemini reminded the person giving the demo where they left their glasses, and it seemed too good to be true, so I had to try it out and came away impressed.

Gemini Live will recognize a whole lot more than household odds and ends. Google says it’ll help you navigate a crowded train station or figure out the filling of a pastry. It can give you deeper information about artwork, like where an object originated and whether it was a limited edition.

It’s more than just a souped-up Google Lens. You talk with it, and it talks to you. I didn’t need to speak to Gemini in any particular way — it was as casual as any conversation. Way better than talking with the old Google Assistant that the company is quickly phasing out.

Google and Samsung are just starting to roll out the feature to all Pixel 9 (including the new Pixel 9a) and Galaxy S25 phones. It’s free for those devices, and other Pixel phones can access it via a Google AI Premium subscription. Google also released a new YouTube video for the April 2025 Pixel Drop showcasing the feature, and there’s now a dedicated page on the Google Store for it.

To get started, you can go live with Gemini, enable the camera and start talking.

Gemini Live follows on from Google’s Project Astra, first revealed last year as possibly the company’s biggest “we’re in the future” feature: an experimental next step for generative AI capabilities, beyond simply typing or even speaking prompts into a chatbot like ChatGPT, Claude or Gemini. It comes as AI companies continue to dramatically increase the skills of AI tools, from video generation to raw processing power. Similar to Gemini Live, there’s Apple’s Visual Intelligence, which the iPhone maker released in a beta form late last year.

My big takeaway is that a feature like Gemini Live has the potential to change how we interact with the world around us, melding our digital and physical worlds together just by holding our cameras in front of almost anything.

I put Gemini Live to a real test

The first time I tried it, Gemini was shockingly accurate when I placed a very specific gaming collectible of a stuffed rabbit in my camera’s view. The second time, I showed it to a friend in an art gallery. It identified the tortoise on a cross (don’t ask me) and immediately identified and translated the kanji right next to the tortoise, giving both of us chills and leaving us more than a little creeped out. In a good way, I think.

I got to thinking about how I could stress-test the feature. I tried to screen-record it in action, but it consistently fell apart at that task. And what if I went off the beaten path with it? I’m a huge fan of the horror genre — movies, TV shows, video games — and have countless collectibles, trinkets and what have you. How well would it do with more obscure stuff — like my horror-themed collectibles?

First, let me say that Gemini can be both absolutely incredible and ridiculously frustrating in the same round of questions. I had roughly 11 objects that I was asking Gemini to identify, and it would sometimes get worse the longer the live session ran, so I had to limit sessions to only one or two objects. My guess is that Gemini attempted to use contextual information from previously identified objects to guess new objects put in front of it, which sort of makes sense, but ultimately, neither I nor it benefited from this.

Sometimes, Gemini was just on point, easily landing the correct answers with no fuss or confusion, but this tended to happen with more recent or popular objects. For example, I was surprised when it immediately guessed one of my test objects was not only from Destiny 2, but was a limited edition from a seasonal event from last year.

At other times, Gemini would be way off the mark, and I would need to give it more hints to get into the ballpark of the right answer. And sometimes, it seemed as though Gemini was taking context from my previous live sessions to come up with answers, identifying multiple objects as coming from Silent Hill when they were not. I have a display case dedicated to the game series, so I could see why it would want to dip into that territory quickly.

Gemini can get full-on bugged out at times. On more than one occasion, Gemini misidentified one of the items as a made-up character from the unreleased Silent Hill: f game, clearly merging pieces of different titles into something that never was. The other consistent bug I experienced was when Gemini would produce an incorrect answer, and I would correct it and hint closer at the answer, or straight up give it the answer, only to have it repeat the incorrect answer as if it were a new guess. When that happened, I would close the session and start a new one, which wasn’t always helpful.

One trick I found was that some conversations did better than others. If I scrolled through my Gemini conversation list, tapped an old chat that had gotten a specific item correct, and then went live again from that chat, it would be able to identify the items without issue. While that’s not necessarily surprising, it was interesting to see that some conversations worked better than others, even if you used the same language.

Google didn’t respond to my requests for more information on how Gemini Live works.

I wanted Gemini to successfully answer my sometimes highly specific questions, so I provided plenty of hints to get there. The nudges were often helpful, but not always. Below are a series of objects I tried to get Gemini to identify and provide information about.

Facebook Brings Back Local Job Listings: How to Apply

One of Facebook’s most practical features from 2022 is being revived by Meta.

On the hunt for work? A Local Jobs search is being rolled out by Meta to make it easier for people in the US to discover and apply for nearby work directly on Facebook. The feature is inside Facebook Marketplace, Groups and Pages, Meta said last week, letting employers post openings and job seekers filter roles by distance, category or employment type.

You can apply or message employers directly through Facebook Messenger, while employers can publish job listings with just a few taps — similar to how you would post items for sale on Marketplace.

Facebook offered a Jobs feature before discontinuing it in 2022, pushing business hiring toward its other platforms. Its return suggests Meta is attempting to expand Facebook’s usefulness beyond social networking and to position it once again as a hub for community-driven opportunities.

“We’ve always been about connecting with people, whether through shared interests or key life events,” the press release states. “Now, if you’re looking for entry-level, trade and service industry employment in your community, Facebook can help you connect with local people and small businesses who are hiring.”

How to get started with Local Jobs on Facebook

According to Meta, Local Jobs will appear as a dedicated section in Facebook Marketplace starting this week. If you’re 18 or older, you can:

- Tap the Marketplace tab on the Facebook app or website.

- Select Jobs to browse available positions nearby.

- Use filters for job type, category and distance.

- Tap Apply or message the employer directly via Messenger.

Businesses and page admins can post jobs by creating a new listing in Marketplace or from their Facebook Page. Listings can include job details, pay range and scheduling information, and they will appear in local searches automatically.

The Local Jobs feature is rolling out across the US now, with Meta saying it plans to expand it in the months ahead.

Tesla Has a New Range of Affordable Electric Cars: How Much They Cost

The new, stripped-back versions of the Model Y and Model 3 have a more affordable starting price.

Today’s NYT Strands Hints, Answers and Help for Oct. 22 #598

Here are hints and answers for the NYT Strands puzzle for Oct. 22, No. 598.

Today’s NYT Strands puzzle is a fun one — I definitely have at least two of these in my house. Some of the answers are a bit tough to unscramble, so if you need hints and answers, read on.

I go into depth about the rules for Strands in this story.

If you’re looking for today’s Wordle, Connections and Mini Crossword answers, you can visit CNET’s NYT puzzle hints page.

Hint for today’s Strands puzzle

Today’s Strands theme is: Catch all.

If that doesn’t help you, here’s a clue: A mess of items.

Clue words to unlock in-game hints

Your goal is to find hidden words that fit the puzzle’s theme. If you’re stuck, find any words you can. Every time you find three words of four letters or more, Strands will reveal one of the theme words. These are the words I used to get those hints, but any words of four or more letters that you find will work:

- BATE, LICE, SLUM, CAPE, HOLE, CARE, BARE, THEN, SLAM, SAMBA, BACK

Answers for today’s Strands puzzle

These are the answers that tie into the theme. The goal of the puzzle is to find them all, including the spangram, a theme word that reaches from one side of the puzzle to the other. When you have all of them (I originally thought there were always eight but learned that the number can vary), every letter on the board will be used. Here are the nonspangram answers:

- TAPE, COIN, PENCIL, BATTERY, SHOELACE, THUMBTACK

Today’s Strands spangram

Today’s Strands spangram is JUNKDRAWER. To find it, look for the J that’s five letters down in the far-left column, and wind down, over and then up.

Quick tips for Strands

#1: To get more clue words, see if you can tweak the words you’ve already found, by adding an “S” or other variants. And if you find a word like WILL, see if other letters are close enough to help you make SILL or BILL.

#2: Once you get one theme word, look at the puzzle to see if you can spot other related words.

#3: If you’ve been given the letters for a theme word, but can’t figure it out, guess three more clue words, and the puzzle will light up each letter in order, revealing the word.
