Technologies

I Saw the AI Future of Video Games: It Starts With a Character Hopping Over a Box

At the 2025 Game Developers Conference, graphics-chip maker Nvidia showed off its latest tools that use generative AI to augment future games.

At its own GTC AI show in San Jose, California, earlier this month, graphics-chip maker Nvidia unveiled a plethora of partnerships and announcements for its generative AI products and platforms. At the same time, in San Francisco, Nvidia held behind-closed-doors showcases alongside the Game Developers Conference to show game-makers and media how its generative AI technology could augment the video games of the future.

Last year, Nvidia’s GDC 2024 showcase had hands-on demonstrations where I was able to speak with AI-powered nonplayer characters, or NPCs, in pseudo-conversations. They replied to things I typed out with reasonably contextual responses (though not quite as natural as scripted ones). AI was also used to radically modernize old games with a contemporary graphics look.

This year, at GDC 2025, Nvidia once again invited industry members and press into a hotel room near the Moscone Center, where the convention was held. In a large room ringed with computer rigs packed with its latest GeForce RTX 5070, 5080 and 5090 GPUs, the company showed off more ways generative AI could remaster old games, offer new options for animators and evolve NPC interactions.

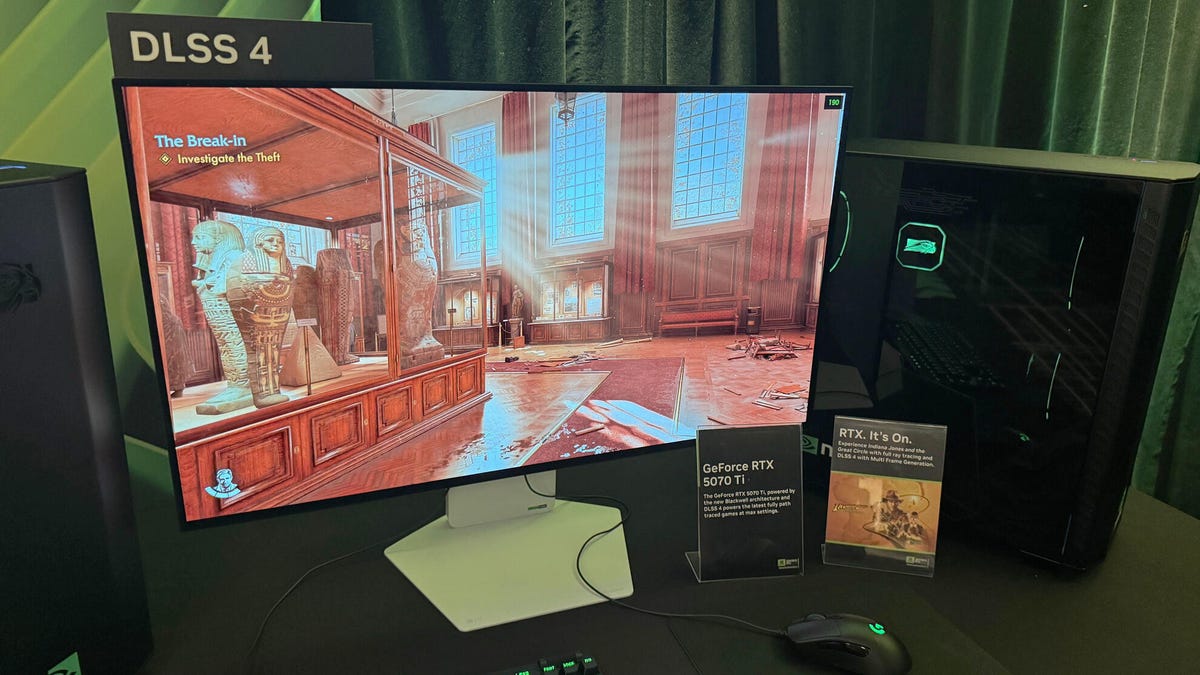

Nvidia also demonstrated how its latest AI graphics rendering tech for its GPU line, DLSS 4, improves image quality, lighting and frame rates in modern games. These features affect gamers every day, though these efforts are more conventional than Nvidia’s other experiments. While some of these advancements rely on studios to implement new tech into their games, others are available right now for gamers to try.

Making animations from text prompts

Nvidia detailed a new tool that generates character model animations based on text prompts — sort of like if you could use ChatGPT in iMovie to make your game’s characters move around in scripted action. The goal? Save developers time. Using the tool could turn programming a several-hour sequence into a several-minute task.

Body Motion, as the tool is called, can be plugged into many digital content creation platforms; Nvidia Senior Product Manager John Malaska, who ran my demo, used Autodesk Maya. To start the demonstration, Malaska set up a sample situation in which he wanted one character to hop over a box, land and move forward. On the timeline for the scene, he selected the moment for each of those three actions and wrote text prompts to have the software generate the animation. Then it was time to tinker.

To refine his animation, he used Body Motion to generate four different variations of the character hopping and chose the one he wanted. (All animations are generated from licensed motion capture data, Malaska said.) Next, he specified exactly where he wanted the character to land, then selected where he wanted them to end up. Body Motion simulated all the frames in between those carefully selected motion pivot points, and boom: animation segment achieved.
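Nvidia didn’t detail how Body Motion generates those in-between frames beyond saying it draws on licensed motion capture data. For readers unfamiliar with the idea of pivot points, the classic way to fill frames between keyed poses is interpolation. Below is a minimal Python sketch of only that classic idea, assuming a character’s root position is keyed at a few hypothetical frames and filled in by straight linear interpolation; it is not Nvidia’s method.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]

@dataclass
class Keyframe:
    frame: int      # position on the animation timeline
    position: Vec3  # character root position (x, y, z) at that frame

def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linear interpolation between two points, with t in [0, 1]."""
    return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))

def inbetween(keys: list[Keyframe]) -> dict[int, Vec3]:
    """Fill every frame between consecutive keyframes by linear interpolation."""
    if not keys:
        raise ValueError("need at least one keyframe")
    keys = sorted(keys, key=lambda k: k.frame)
    frames: dict[int, Vec3] = {}
    for start, end in zip(keys, keys[1:]):
        span = end.frame - start.frame
        for f in range(start.frame, end.frame):
            frames[f] = lerp(start.position, end.position, (f - start.frame) / span)
    frames[keys[-1].frame] = keys[-1].position
    return frames

# Hypothetical pivots: take off, top of the hop over the box, landing.
pivots = [
    Keyframe(0, (0.0, 0.0, 0.0)),
    Keyframe(10, (1.0, 1.0, 0.0)),
    Keyframe(20, (2.0, 0.0, 0.0)),
]
timeline = inbetween(pivots)
print(timeline[5], timeline[15])  # (0.5, 0.5, 0.0) (1.5, 0.5, 0.0)
```

Straight-line in-betweens ignore details like the arc of a jump or the weight shift of a landing, which is the kind of nuance a model trained on motion capture data can supply.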

In the next section of the demo, Malaska had the same character walking through a fountain to get to a set of stairs. He could edit with text prompts and timeline markers to have the character sneak around and circumvent the courtyard fixtures.

“We’re excited about this,” Malaska said. “It’s really going to help people speed up and accelerate workflows.”

He pointed to situations where a developer gets an animation back but wants it to run slightly differently, and has to send it back to the animators for edits. The scenario is far more time-consuming if the animations were based on actual motion capture: if the game requires that fidelity, getting mocap actors back to record again could take days, weeks or months. Tweaking animations with Body Motion, which draws on a library of motion capture data, can circumvent all that.

I’d be remiss not to worry about motion capture artists and whether Body Motion could be used to circumvent their work in part or in whole. Viewed generously, this tool could be put to good use making animatics and virtually storyboarding sequences before bringing in professional artists to motion capture finalized scenes. But like any tool, it all depends on who’s using it.

Body Motion is scheduled to be released later in 2025 under the Nvidia Enterprise License.

Another stab at remastering Half-Life 2 using RTX Remix

At last year’s GDC, I’d seen some remastering of Half-Life 2 with Nvidia’s platform for modders, RTX Remix, which is meant to breathe new life into old games. Nvidia’s latest stab at reviving Valve’s classic game was released to the public as a free demo, which gamers can download on Steam to check out for themselves. What I saw of it in Nvidia’s press room was ultimately a tech demo (and not the full game), but it still shows off what RTX Remix can do to update old games to meet modern graphics expectations.

Last year’s RTX Remix Half-Life 2 demonstration was about seeing how old, flat wall textures could be updated with depth effects to, say, make them look like grouted cobblestone, and that’s present here too. When looking at a wall, “the bricks seem to jut out because they use parallax occlusion mapping,” said Nyle Usmani, senior product manager of RTX Remix, who led the demo. But this year’s demo was more about lighting interaction, even to the point of simulating the shadow passing through the glass covering the dial of a gas meter.
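Parallax occlusion mapping is a standard real-time graphics technique, and Nvidia didn’t say how RTX Remix implements it. The textbook version fakes depth on a flat surface by marching the view ray through a height map in small depth layers and sampling the texture where the ray first dips below the surface. Here is a minimal Python sketch of that textbook version, assuming a depth map supplied as a function that returns 0 at the surface and 1 at the deepest point, and a view direction already expressed in tangent space; the default parameter values are illustrative.

```python
from typing import Callable

# depth(u, v) -> value in [0, 1]: 0 is the visible surface, 1 is the deepest point.
DepthMap = Callable[[float, float], float]

def parallax_occlusion_uv(uv, view_ts, depth: DepthMap,
                          height_scale=0.05, num_layers=32):
    """Shift a texture coordinate to where the view ray first hits the height field.

    uv: (u, v) texture coordinate being shaded.
    view_ts: normalized direction from the surface toward the eye, in tangent space (z > 0).
    """
    vx, vy, vz = view_ts
    layer_step = 1.0 / num_layers
    # UV offset per layer: the full parallax shift spread across all depth layers.
    du = vx / vz * height_scale / num_layers
    dv = vy / vz * height_scale / num_layers

    u, v = uv
    layer_depth = 0.0
    sampled = depth(u, v)
    # Step along the view ray one depth layer at a time until it is below the surface.
    for _ in range(num_layers):
        if layer_depth >= sampled:
            break
        u, v = u - du, v - dv
        sampled = depth(u, v)
        layer_depth += layer_step

    # Blend the last two samples to estimate where the ray crossed the surface.
    prev_u, prev_v = u + du, v + dv
    after = sampled - layer_depth
    before = depth(prev_u, prev_v) - layer_depth + layer_step
    denom = after - before
    w = after / denom if denom != 0 else 0.0
    return (prev_u * w + u * (1 - w), prev_v * w + v * (1 - w))

def flat(u: float, v: float) -> float:
    return 0.0  # no depth anywhere

def recessed(u: float, v: float) -> float:
    return 0.5  # uniformly sunk halfway into the surface

view = (0.3, 0.0, 0.954)
print(parallax_occlusion_uv((0.5, 0.5), view, flat))      # (0.5, 0.5)
print(parallax_occlusion_uv((0.5, 0.5), view, recessed))  # u shifted below 0.5
```

With a flat depth map the coordinate comes back unchanged; with a recessed one it slides along the viewing direction, which is what makes bricks appear to jut out as the camera moves. Real shaders run this per pixel on the GPU.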

Usmani walked me through all the lighting and fire effects, which modernized some of the more iconically haunting parts of Half-Life 2’s fallen Ravenholm area. But the most striking application was in an area where the iconic headcrab enemies attack, when Usmani paused and pointed out how backlight was filtering through the fleshy parts of the grotesque pseudo-zombies, which made them glow a translucent red, much like what happens when you put a finger in front of a flashlight. Coinciding with GDC, Nvidia released this effect, called subsurface scattering, in a software development kit so game developers can start using it.
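Nvidia didn’t detail the math behind its subsurface scattering effect, and full subsurface scattering models light bouncing around inside a material. The red glow itself, though, comes down to color-dependent attenuation: flesh attenuates green and blue light much more than red. A minimal Python sketch of just that attenuation step, using the Beer-Lambert law with made-up per-channel coefficients (not measured values, and not Nvidia’s implementation):

```python
import math

# Made-up extinction coefficients per millimetre for a generic "flesh" material.
# Red is attenuated least, which is what makes backlit flesh glow red.
SIGMA_PER_MM = {"r": 0.2, "g": 1.0, "b": 1.5}

def transmitted(backlight: dict[str, float], thickness_mm: float,
                sigma: dict[str, float] = SIGMA_PER_MM) -> dict[str, float]:
    """Beer-Lambert law per color channel: I = I0 * exp(-sigma * d)."""
    return {ch: backlight[ch] * math.exp(-sigma[ch] * thickness_mm)
            for ch in ("r", "g", "b")}

white = {"r": 1.0, "g": 1.0, "b": 1.0}
for d in (1.0, 3.0):
    print(d, {ch: round(val, 3) for ch, val in transmitted(white, d).items()})
```

With these coefficients, white light passing through 3 mm keeps roughly 55% of its red but only about 5% of its green and 1% of its blue, so what emerges is a dim red glow, like a finger over a flashlight.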

RTX Remix has other tricks that Usmani pointed out, like a new neural shader for the latest version of the platform — the one in the Half-Life 2 demo. Essentially, he explained, a bunch of neural networks train live on the game data as you play, and tailor the indirect lighting to what the player sees, making areas lit more like they’d be in real life. In an example, he swapped between old and new RTX Remix versions, showing, in the new version, light properly filtering through the broken rafters of a garage. Better still, it bumped the frames per second to 100, up from 87.

“Traditionally, we would trace a ray and bounce it many times to illuminate a room,” Usmani said. “Now we trace a ray and bounce it only two to three times and then we terminate it, and the AI infers a multitude of bounces after. Over enough frames, it’s almost like it’s calculating an infinite amount of bounces, so we’re able to get more accuracy because it’s tracing less rays [and getting] more performance.”
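Usmani didn’t go into implementation details, but the idea in that quote can be shown with a toy model. Picture light in a room where every bounce keeps a fixed fraction of its energy (the albedo a). Tracing infinitely many bounces of emission E gives E / (1 - a). The Python sketch below traces only two bounces, asks a cache for the rest, and nudges that cache toward its own estimate every frame. The single cached number is a hypothetical stand-in for the neural networks Usmani described, and the albedo and learning rate are arbitrary.

```python
def truncated_estimate(emission: float, albedo: float, bounces: int,
                       cached_tail: float) -> float:
    """Trace `bounces` bounces explicitly, then hand the remainder to a cached estimate."""
    radiance, throughput = 0.0, 1.0
    for _ in range(bounces):
        radiance += throughput * emission
        throughput *= albedo
    # Instead of bouncing further, ask the cache how much light arrives from here on.
    return radiance + throughput * cached_tail

def run_frames(emission: float = 1.0, albedo: float = 0.6, bounces: int = 2,
               frames: int = 200, learning_rate: float = 0.2) -> float:
    cache = 0.0  # the cache starts out knowing nothing
    for _ in range(frames):
        estimate = truncated_estimate(emission, albedo, bounces, cache)
        # Online update: each frame's estimate becomes training data for the cache.
        cache += learning_rate * (estimate - cache)
    return cache

exact = 1.0 / (1.0 - 0.6)  # infinitely many bounces: E / (1 - a) = 2.5
print(run_frames(), exact)  # both close to 2.5
```

In this toy, two traced bounces plus a converged cache give the same answer as infinitely many bounces, and the cache converges precisely because it keeps learning from each new frame. Tracing fewer bounces per frame is where the speedup comes from.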

Still, I was seeing the demo on an RTX 5070 GPU, which retails for $550, and the demo requires at least an RTX 3060 Ti, so owners of older graphics cards are out of luck. “That’s purely because path tracing is very expensive — I mean, it’s the future, basically the cutting edge, and it’s the most advanced path tracing,” Usmani said.

Nvidia ACE uses AI to help NPCs think

Last year’s NPC AI station demonstrated how nonplayer characters can uniquely respond to the player, but this year’s Nvidia ACE tech showed how players can suggest new thoughts for NPCs that’ll change their behavior and the lives around them.

The GPU maker demonstrated the tech plugged into InZoi, a Sims-like game where players care for NPCs with their own behaviors. With an upcoming update, players can toggle on Smart Zoi, which uses Nvidia ACE to insert thoughts directly into the minds of the Zois (characters) they oversee, and then watch them react accordingly. These thoughts can’t go against the Zois’ own traits, explained Nvidia GeForce Tech Marketing Analyst Wynne Riawan, so they’ll send the Zoi in directions that make sense.

“So, by encouraging them, for example, ‘I want to make people’s day feel better,’ it’ll encourage them to talk to more Zois around them,” Riawan said. “Try is the key word: They do still fail. They’re just like humans.”

Riawan inserted a thought into the Zoi’s head: “What if I’m just an AI in a simulation?” The poor Zoi freaked out but still ran to the public bathroom to brush her teeth, which fit her traits of, apparently, being really into dental hygiene.

Those NPC actions following up on player-inserted thoughts are powered by a small language model with half a billion parameters. (Large language models range from around 1 billion to over 30 billion parameters, and more parameters generally allow for more nuanced responses.) The one used in-game is derived from the 8-billion-parameter Mistral NeMo Minitron model, shrunk down so it can run on older and less powerful GPUs.

“We do purposely squish down the model to a smaller model so that it’s accessible to more people,” Riawan said.

The Nvidia ACE tech runs on-device using the PC’s GPU. Krafton, the publisher behind InZoi, recommends a minimum GPU spec of an Nvidia RTX 3060 with 8GB of video memory (VRAM) to use this feature, Riawan said. Krafton gave Nvidia a “budget” of one gigabyte of VRAM in order to ensure the graphics card has enough resources to render, well, the graphics. Hence the need to minimize the parameters.
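That one-gigabyte budget goes a long way toward explaining the half-billion-parameter size. A rough rule of thumb is that a model’s weights alone take parameters × bits per parameter ÷ 8 bytes. Nvidia didn’t say what numeric precision the in-game model uses, so this back-of-the-envelope Python sketch simply tabulates a few common ones; it counts weights only and ignores the extra memory a model needs while it runs.

```python
def weights_gb(params: float, bits_per_param: int) -> float:
    """Memory for model weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return params * bits_per_param / 8 / 1e9

VRAM_BUDGET_GB = 1.0  # the budget Krafton gave Nvidia, per Riawan

models = {"0.5B small model": 0.5e9, "8B Minitron base": 8e9}
for name, params in models.items():
    for bits in (16, 8, 4):
        need = weights_gb(params, bits)
        verdict = "within budget" if need <= VRAM_BUDGET_GB else "over budget"
        print(f"{name:>17} @ {bits:>2}-bit: {need:5.2f} GB ({verdict})")
```

Even compressed to 4 bits per parameter, the 8-billion-parameter model would need about 4GB for its weights, four times the budget. The half-billion-parameter model fits at 8-bit or 4-bit precision with room to spare; at 16-bit its weights alone would consume the entire gigabyte.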

Nvidia is still internally discussing how or whether to unlock the ability to use larger-parameter language models if players have more powerful GPUs. Players may be able to see the difference, as the NPCs “do react more dynamically as they react better to your surroundings with a bigger model,” Riawan said. “Right now, with this, the emphasis is mostly on their thoughts and feelings.”

An early access version of the Smart Zoi feature will go out to all users for free, starting March 28. Nvidia sees it and the Nvidia ACE technology as a stepping stone that could one day lead to truly dynamic NPCs.

“If you have MMORPGs with Nvidia ACE in it, NPCs will not be stagnant and just keep repeating the same dialogue — they can just be more dynamic and generate their own responses based on your reputation or something. Like, ‘Hey, you’re a bad person, I don’t want to sell my goods to you,’” Riawan said.

Technologies

An AWS Outage Broke the Internet While You Were Sleeping

Reddit, Roblox and Ring are just a tiny fraction of the 1,000-plus sites and services that were affected when Amazon Web Services went down, causing a major internet blackout.

The internet kicked off the week the way that many of us often feel like doing: by refusing to go to work. An outage at Amazon Web Services rendered huge portions of the internet unavailable on Monday morning, with sites and services including Snapchat, Fortnite, Venmo, the PlayStation Network and, predictably, Amazon, unavailable for a short period of time.

The outage began shortly after midnight PT and took Amazon around 3.5 hours to fully resolve. Social networks and streaming services were among the 1,000-plus companies affected, and critical services such as online banking were also taken down. You’ll likely find most sites and services functioning as usual this morning, but some knock-on effects will probably be seen throughout the day.

AWS, a cloud services provider owned by Amazon, props up huge portions of the internet. So when it went down, it took many of the services we know and love with it. As with the Fastly and CrowdStrike outages over the past few years, the AWS outage shows just how much of the internet relies on the same infrastructure, and how quickly our access to the sites and services we rely on can be revoked when something goes wrong. Relying on a small number of big companies to underpin the web is akin to putting all of our eggs in a tiny handful of baskets.

When it works, it’s great, but only one small thing needs to go wrong for the internet to come to its knees in a matter of minutes.

How widespread was the AWS outage?

Just after midnight PT on October 20, AWS first registered an issue on its service status page, saying it was “investigating increased error rates and latencies for multiple AWS services in the US-EAST-1 Region.” Around 2 a.m. PT, it said it had identified a potential root cause of the issue, and within half an hour, it had started applying mitigations that were resulting in significant signs of recovery.

“The underlying DNS issue has been fully mitigated, and most AWS Service operations are succeeding normally now,” AWS said at 3:35 a.m. PT. The company didn’t respond to a request for further comment beyond pointing us back to the AWS health dashboard.

Around the time that AWS says it first began noticing error rates, Downdetector saw reports begin to spike across many online services, including banks, airlines and phone carriers. As AWS resolved the issue, some of these reports dropped off, whereas others have yet to return to normal. (Disclosure: Downdetector is owned by the same parent company as CNET, Ziff Davis.)

Around 4 a.m. PT, Reddit was still down, while services including Ring, Verizon and YouTube were still seeing a significant number of reported issues. Reddit finally came back online around 4:30 a.m. PT, according to its status page, which we then verified.

In total, Downdetector saw over 6.5 million reports, with 1.4 million coming from the US, 800,000 from the UK and the rest largely spread across Australia, Japan, the Netherlands, Germany and France. Over 1,000 companies in total have been affected, Downdetector added.

“This kind of outage, where a foundational internet service brings down a large swathe of online services, only happens a handful of times in a year,” Daniel Ramirez, Downdetector by Ookla’s director of product, told CNET. “They probably are becoming slightly more frequent as companies are encouraged to completely rely on cloud services and their data architectures are designed to make the most out of a particular cloud platform.”

What caused the AWS Outage?

AWS hasn’t shared full details about what caused the internet to fall off a cliff this morning. Now that it has deployed a fix, its next step will likely be to investigate what went wrong.

So far, it has attributed the outage to a “DNS issue.” DNS stands for the Domain Name System, the service that translates human-readable internet addresses (for example, CNET.com) into the machine-readable IP addresses that connect browsers with websites.

When a DNS error occurs, the translation process cannot take place, interrupting the connection. DNS errors are common internet roadblocks, but they usually happen on a small scale, affecting individual sites or services. Because the use of AWS is so widespread, however, a DNS error there can have equally widespread results.
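To see what that translation step looks like from an app’s point of view, here is a minimal Python sketch using the standard library’s `socket.gethostbyname`. The resolver is passed in as a parameter so an outage can be simulated without touching the network; `broken_resolver` is a hypothetical stand-in, and real apps and browsers add caching, retries and IPv6 support on top of this.

```python
import socket
from typing import Callable, Optional

def resolve(hostname: str,
            resolver: Callable[[str], str] = socket.gethostbyname) -> Optional[str]:
    """Translate a hostname into an IPv4 address, or return None if the lookup fails."""
    try:
        return resolver(hostname)
    except (socket.gaierror, socket.herror) as err:
        # Without an IP address there is nothing to connect to, which is
        # roughly when an app or browser reports that a site can't be reached.
        print(f"DNS lookup for {hostname!r} failed: {err}")
        return None

# With working DNS, resolve("www.cnet.com") returns an IPv4 address string.

def broken_resolver(hostname: str) -> str:
    """Hypothetical resolver that simulates a DNS outage."""
    raise socket.gaierror("simulated DNS outage")

print(resolve("www.cnet.com", resolver=broken_resolver))  # prints the failure, then None
```

The site’s servers may be perfectly healthy in this scenario; the request fails before it ever reaches them because the name can’t be turned into an address.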

According to Amazon, the issue is geographically rooted in its US-EAST-1 region, which refers to an area of Northern Virginia where many of its data centers are based. It’s a significant location for Amazon, as well as many other internet companies, and it props up services spanning the US and Europe.

“The lesson here is resilience,” said Luke Kehoe, industry analyst at Ookla. “Many organizations still concentrate critical workloads in a single cloud region. Distributing critical apps and data across multiple regions and availability zones can materially reduce the blast radius of future incidents.”
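A minimal Python sketch of the client-side half of that advice, assuming the app is already deployed in several regions: try the preferred region first and fall back to the next on a connection failure. The region names are real AWS region identifiers, but `fake_backend` is hypothetical, and production systems often handle this with health checks and DNS- or load-balancer-level failover rather than in application code.

```python
from typing import Callable, Sequence

class AllRegionsDown(Exception):
    """Raised when no region could serve the request."""

def call_with_failover(regions: Sequence[str], call: Callable[[str], str]) -> str:
    """Try a request against each region in priority order; return the first success."""
    errors: dict[str, Exception] = {}
    for region in regions:
        try:
            return call(region)
        except ConnectionError as err:
            errors[region] = err  # remember why this region failed, then try the next
    raise AllRegionsDown(f"every region failed: {errors}")

# Hypothetical backend: us-east-1 is having a bad day, the others are fine.
def fake_backend(region: str) -> str:
    if region == "us-east-1":
        raise ConnectionError("DNS resolution failed")
    return f"ok from {region}"

print(call_with_failover(["us-east-1", "us-west-2", "eu-west-1"], fake_backend))
```

Failover like this only helps if the app and the data it needs actually exist in the other regions, which is the kind of distribution Kehoe is recommending.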

Was the AWS Outage caused by a cyberattack?

DNS issues can be caused by malicious actors, but there’s no evidence at this stage to say that this is the case for the AWS outage.

Technical faults can, however, pave the way for hackers to look for and exploit vulnerabilities when companies’ backs are turned and defenses are down, according to Marijus Briedis, CTO at NordVPN. “This is a cybersecurity issue as much as a technical one,” he said in a statement. “True online security isn’t only about keeping hackers out, it’s also about ensuring you can stay connected and protected when systems fail.”

In the hours ahead, people should look out for scammers hoping to exploit awareness of the outage, Briedis added. You should be extra wary of phishing attacks and emails telling you to change your password to protect your account.

Technologies

A New Bill Aims to Ban Both Adult Content Online and VPN Use. Could It Work?

Michigan representatives just proposed a bill to ban many types of internet content, as well as VPNs that could be used to circumvent it. Here’s what we know.

On Sept. 11, Michigan representatives proposed an internet content ban bill unlike any of the others we’ve seen: This particularly far-reaching legislation would ban not only many types of online content, but also the ability to legally use any VPN.

The bill, called the Anticorruption of Public Morals Act and advanced by six Republican representatives, would ban a wide variety of adult content online, ranging from ASMR and adult manga to AI content and any depiction of transgender people. It also seeks to ban all use of VPNs, foreign or US-produced.


VPNs (virtual private networks) are suites of software often used as workarounds to avoid similar bans that have passed in states like Texas, Louisiana and Mississippi, as well as the UK. They can be purchased with subscriptions or downloaded, and are built into some browsers and Wi-Fi routers as well.

But Michigan’s bill would charge internet service providers with detecting and blocking VPN use, as well as banning the sale of VPNs in the state. Associated fines would be up to $500,000.

What the ban could mean for VPNs

Unlike some laws banning access to adult content, this Michigan bill is comprehensive. It applies to all residents of Michigan, adults or children, targets an extensive range of content and includes language that could ban not only VPNs but any method of bypassing internet filters or restrictions.

That could spell trouble for VPN owners and other internet users who leverage these tools to improve their privacy, protect their identities online, prevent ISPs from gathering data about them or increase their device safety when browsing on public Wi-Fi.


Bills like these could have unintended side effects. John Perrino, senior policy and advocacy expert at the nonprofit Internet Society, told CNET that adult content laws like this could interfere with what kind of music people can stream, the sexual health forums and articles they can access and even important news involving sexual topics that they may want to read. “Additionally, state age verification laws are difficult for smaller services to comply with, hurting competition and an open internet,” Perrino added.

The Anticorruption of Public Morals Act has not cleared committee in the Michigan House of Representatives or been voted on by the Michigan Senate, and it’s not clear how much support the bill currently has beyond the six Republican representatives who proposed it. As we’ve seen with state legislation in the past, bills like these can sometimes serve as templates for representatives who want to propose similar laws in their own states.

Could VPNs still get around bans like these?

That’s a complex question that this bill doesn’t really address. When I asked NordVPN how easy it would be to track VPN use, privacy advocate Laura Tyrylyte explained, “From a technical standpoint, ISPs can attempt to distinguish VPN traffic using deep packet inspection, or they can block known VPN IP addresses. However, deploying them effectively requires big investments and ongoing maintenance, making large-scale VPN blocking both costly and complex.”
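The simpler of the two techniques Tyrylyte mentions, blocking known VPN IP addresses, boils down to checking each connection’s destination against a list of address ranges. A minimal Python sketch using the standard `ipaddress` module follows; the ranges are reserved documentation addresses (RFC 5737) used purely as hypothetical stand-ins for real VPN servers.

```python
import ipaddress

# Hypothetical blocklist. These are reserved documentation ranges (RFC 5737),
# standing in for the address ranges of real VPN providers.
KNOWN_VPN_RANGES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]

def is_known_vpn(dest_ip: str) -> bool:
    """Return True if a connection's destination falls inside a blocklisted range."""
    addr = ipaddress.ip_address(dest_ip)
    # An IPv6 address is never "in" an IPv4 network, so this simply returns False for it.
    return any(addr in net for net in KNOWN_VPN_RANGES)

for ip in ("192.0.2.44", "203.0.113.7"):
    print(ip, "blocked" if is_known_vpn(ip) else "allowed")
```

The check itself is cheap. The costly part is keeping the list accurate as providers add and rotate servers, and a list like this does nothing against traffic to servers that aren’t on it yet.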

VPNs also have ways around deep packet inspection and other detection methods. CNET senior editor Moe Long pointed to obfuscation features such as NordWhisper, which counters DPI by attempting to make VPN traffic look like normal web traffic so it’s harder to detect.

There are also no-log features offered by many VPNs to guarantee they don’t keep a record of your activity, and no-log audits from third parties like Deloitte that, well, try to guarantee the guarantee. There are even server tricks VPNs can use like RAM-only servers that automatically erase data each time they’re rebooted or shut down.

If you’re seriously concerned about your data privacy, you can look for features like these in a VPN and see if they are right for you. Changes like these, even on the state level, are one reason we pay close attention to how specific VPNs work during our testing, and make sure to recommend the right VPNs for the job, from speedy browsing to privacy while traveling.

Correction, Oct. 9: An earlier version of this story incorrectly stated how RAM-only servers work. RAM-only servers run on volatile memory and are wiped of data when they are rebooted or shut down.
